Boyan Duan

Data blurring: sample splitting a single sample

Dec 21, 2021

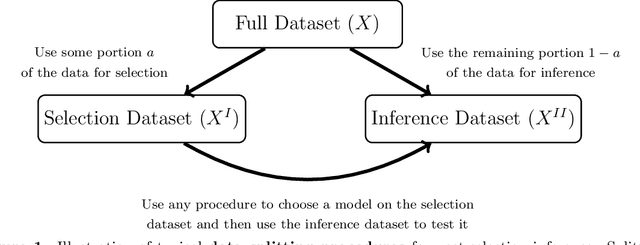

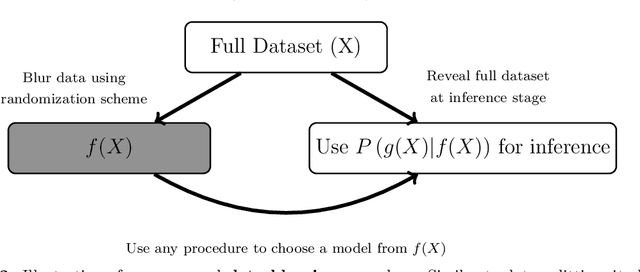

Abstract: Suppose we observe a random vector $X$ from some distribution $P$ in a known family with unknown parameters. We ask the following question: when is it possible to split $X$ into two parts $f(X)$ and $g(X)$ such that neither part is sufficient to reconstruct $X$ by itself, but both together can recover $X$ fully, and the joint distribution of $(f(X),g(X))$ is tractable? As one example, if $X=(X_1,\dots,X_n)$ and $P$ is a product distribution, then for any $m<n$, we can split the sample to define $f(X)=(X_1,\dots,X_m)$ and $g(X)=(X_{m+1},\dots,X_n)$. Rasines and Young (2021) offer an alternative route to accomplishing this task through randomization of $X$ with additive Gaussian noise, which enables post-selection inference in finite samples for Gaussian distributed data and asymptotically for non-Gaussian additive models. In this paper, we offer a more general methodology for achieving such a split in finite samples by borrowing ideas from Bayesian inference to yield a (frequentist) solution that can be viewed as a continuous analog of data splitting. We call our method data blurring, as an alternative to data splitting, data carving, and p-value masking. We exemplify the method on a few prototypical applications, such as post-selection inference for trend filtering and other regression problems.
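A minimal sketch, using only the Python standard library, of the two splitting routes the abstract mentions: classical sample splitting for a product distribution, and a one-dimensional Gaussian randomization. The specific construction $f(X)=X+\tau Z$, $g(X)=X-Z/\tau$ with $Z\sim N(0,\sigma^2)$ and known $\sigma$ is an assumption chosen for illustration, not the paper's general data-blurring method, and the function names (`sample_split`, `gaussian_blur`, `unblur`, `sample_corr`) are hypothetical.

```python
import random
import statistics


def sample_split(xs, m):
    """Classical sample splitting: f(X) = (X_1..X_m), g(X) = (X_{m+1}..X_n).

    The two parts are independent only if the coordinates of X are
    independent (a product distribution).
    """
    if not 0 < m < len(xs):
        raise ValueError("need 0 < m < n")
    return xs[:m], xs[m:]


def gaussian_blur(x, sigma, tau, rng):
    """Split a single draw x ~ N(mu, sigma^2) into two parts (assumed construction).

    Draw Z ~ N(0, sigma^2) and set f = x + tau*Z, g = x - Z/tau.
    Cov(f, g) = sigma^2 - sigma^2 = 0 and (f, g) is jointly Gaussian,
    so f and g are independent; neither alone determines x.
    """
    z = rng.gauss(0.0, sigma)
    return x + tau * z, x - z / tau


def unblur(f, g, tau):
    """Recover x from both parts, since f + tau^2 * g = (1 + tau^2) * x."""
    return (f + tau ** 2 * g) / (1 + tau ** 2)


def sample_corr(a, b):
    """Pearson sample correlation, written out to avoid version-specific helpers."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


if __name__ == "__main__":
    rng = random.Random(0)
    mu, sigma, tau = 2.0, 1.0, 0.5  # hypothetical parameter values
    fs, gs = [], []
    for _ in range(20000):
        x = rng.gauss(mu, sigma)
        f, g = gaussian_blur(x, sigma, tau, rng)
        assert abs(unblur(f, g, tau) - x) < 1e-9  # both parts together recover x
        fs.append(f)
        gs.append(g)
    # Empirical correlation of the two parts should be close to zero.
    print(round(sample_corr(fs, gs), 3))
```

Under these assumptions, $\tau$ controls how information about $\mu$ is shared: $\operatorname{Var}(f)=\sigma^2(1+\tau^2)$ and $\operatorname{Var}(g)=\sigma^2(1+1/\tau^2)$, so a smaller $\tau$ leaves more information in $f$ (e.g. for selection) and less in $g$ (e.g. for inference).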

Pose Agnostic Cross-spectral Hallucination via Disentangling Independent Factors

Sep 10, 2019

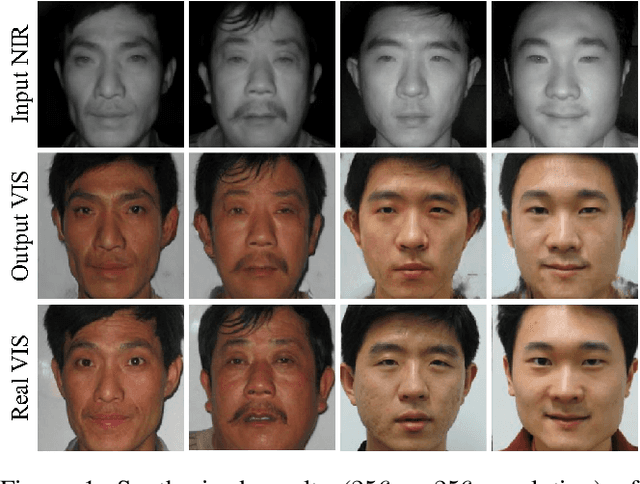

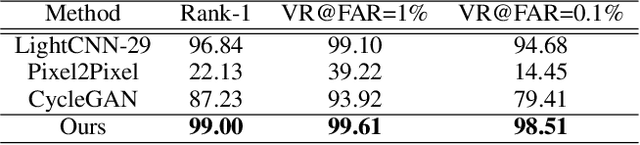

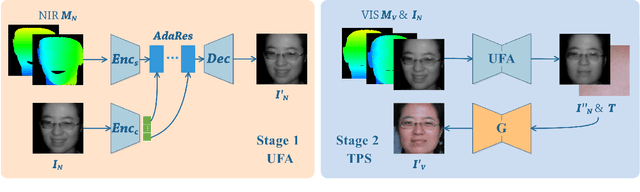

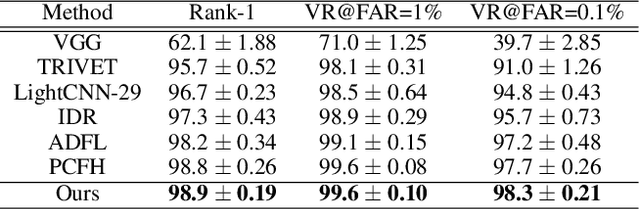

Abstract: The cross-sensor gap is one of the challenges that have attracted much research interest in Heterogeneous Face Recognition (HFR). Although recent methods have attempted to fill the gap with deep generative networks, most of them suffer from the inevitable misalignment between different face modalities. This misalignment stems primarily not from the imaging sensors but from geometric variations of the face (e.g., pose and expression), which are independent of the spectrum. Rather than building a monolithic but complex structure, this paper proposes a Pose Agnostic Cross-spectral Hallucination (PACH) approach that disentangles these independent factors and deals with them in separate stages. In the first stage, an Unsupervised Face Alignment (UFA) network is designed to align the near-infrared (NIR) and visible (VIS) images in a generative way, effectively utilizing 3D information as pose guidance. The task of the second stage thus reduces to spectrum transformation with paired data. We develop a Texture Prior Synthesis (TPS) network to accomplish complexion control and consequently generate more realistic VIS images than existing methods. Experiments on three challenging NIR-VIS datasets verify the effectiveness of our approach in producing visually appealing images and achieving state-of-the-art performance in cross-spectral HFR.
