Bowen Hu

SG-GAN: Fine Stereoscopic-Aware Generation for 3D Brain Point Cloud Up-sampling from a Single Image

May 22, 2023

Abstract: In minimally invasive brain surgeries with indirect and narrow operating environments, 3D brain reconstruction is crucial. However, as accuracy requirements for some new minimally invasive surgeries (such as brain-computer interface surgery) keep rising, the outputs of conventional 3D reconstruction, such as point clouds (PCs), face the challenge that sample points are too sparse and precision is insufficient. In addition, high-density point cloud datasets are scarce, which makes it difficult to train models for direct reconstruction of high-density brain point clouds. In this work, a novel two-stage model named stereoscopic-aware graph generative adversarial network (SG-GAN) is proposed to generate fine high-density PCs conditioned on a single image. The Stage-I GAN sketches the primitive shape and basic structure of the organ based on the given image, yielding Stage-I point clouds. The Stage-II GAN takes the results from Stage-I and generates high-density point clouds with detailed features. The Stage-II GAN is capable of correcting defects and restoring the detailed features of the region of interest (ROI) through the up-sampling process. Furthermore, a parameter-free-attention-based free-transforming module is developed to learn efficient features of the input while maintaining promising performance. Compared with existing methods, the SG-GAN model shows superior performance in terms of visual quality, objective measurements, and classification performance, as demonstrated by comprehensive results measured by several evaluation metrics including PC-to-PC error and Chamfer distance.
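
The Chamfer distance named among the evaluation metrics can be illustrated with a minimal sketch. This is not the authors' evaluation code: the convention used here (symmetric, mean of squared nearest-neighbor distances in each direction) is an assumption, since the abstract does not state which variant was used, and the function names and toy point clouds are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def _sq_dist(p: Point, q: Point) -> float:
    """Squared Euclidean distance between two 3D points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def _one_sided(src: Sequence[Point], dst: Sequence[Point]) -> float:
    """Mean squared distance from each point in src to its nearest point in dst."""
    return sum(min(_sq_dist(p, q) for q in dst) for p in src) / len(src)


def chamfer_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Chamfer distance (assumed convention: sum of both one-sided means)."""
    if not a or not b:
        raise ValueError("both point clouds must be non-empty")
    return _one_sided(a, b) + _one_sided(b, a)


# Hypothetical toy data: a sparse cloud and a denser cloud along the same segment.
sparse = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
dense = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)]

print(chamfer_distance(sparse, sparse))  # identical clouds give 0.0
print(chamfer_distance(sparse, dense))   # small but non-zero
```

The brute-force nearest-neighbor search is quadratic in the number of points, which is fine for illustration; for high-density clouds a spatial index such as a k-d tree would usually be preferred.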

3D Brain Reconstruction by Hierarchical Shape-Perception Network from a Single Incomplete Image

Jul 23, 2021

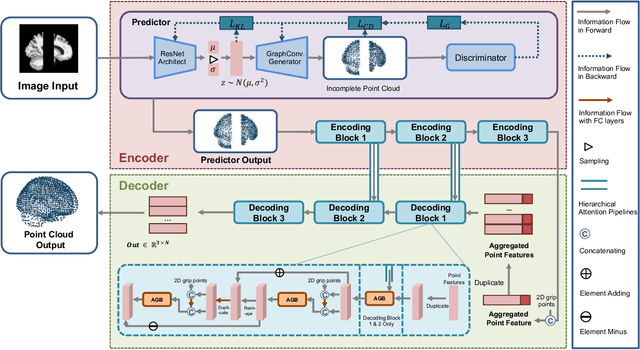

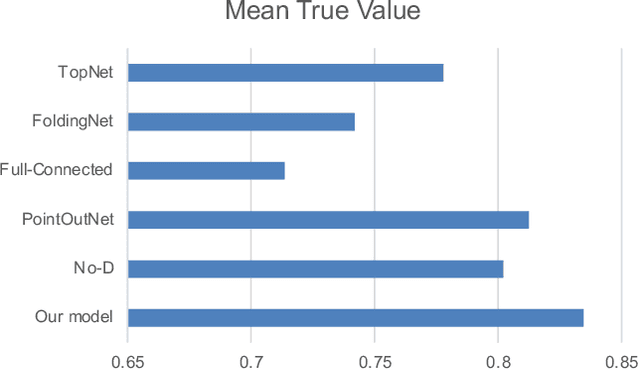

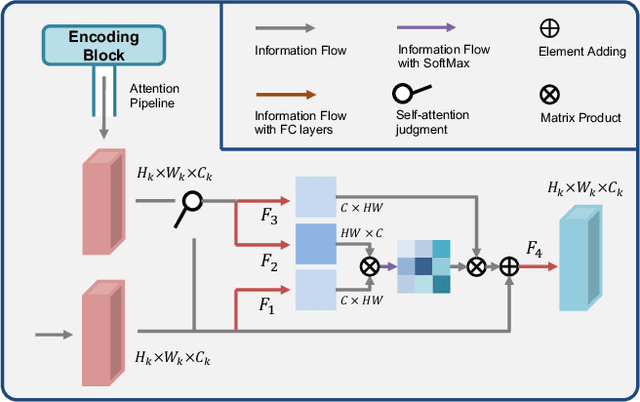

Abstract: 3D shape reconstruction is essential in the navigation of minimally invasive and automated robot-guided surgeries whose operating environments are indirect and narrow, and some works have focused on reconstructing the 3D shape of the surgical organ from the limited 2D information available. However, the lack and incompleteness of such information caused by intraoperative emergencies (such as bleeding) and risk control conditions have not been considered. In this paper, a novel hierarchical shape-perception network (HSPN) is proposed to reconstruct the 3D point clouds (PCs) of specific brains from a single incomplete image with low latency. A tree-structured predictor and several hierarchical attention pipelines are constructed to generate point clouds that accurately describe the incomplete images and then complete these point clouds with high quality. Meanwhile, attention gate blocks (AGBs) are designed to efficiently aggregate the geometric local features of incomplete PCs transmitted by the hierarchical attention pipelines and the internal features of the point clouds being reconstructed. With the proposed HSPN, 3D shape perception and completion can be achieved spontaneously. Comprehensive results measured by Chamfer distance and PC-to-PC error demonstrate that the proposed HSPN outperforms other competitive methods in terms of qualitative displays, quantitative experiments, and classification evaluation.

A Point Cloud Generative Model via Tree-Structured Graph Convolutions for 3D Brain Shape Reconstruction

Jul 21, 2021

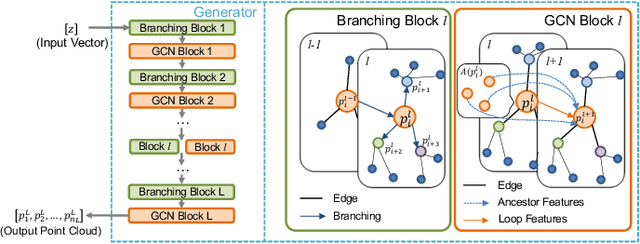

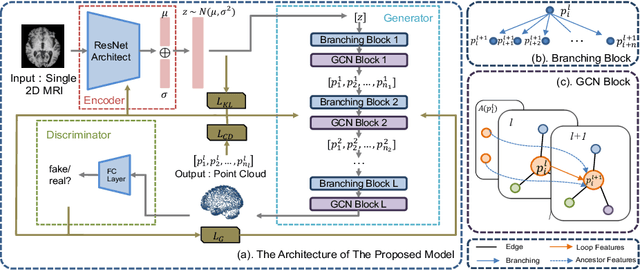

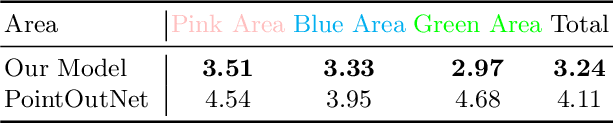

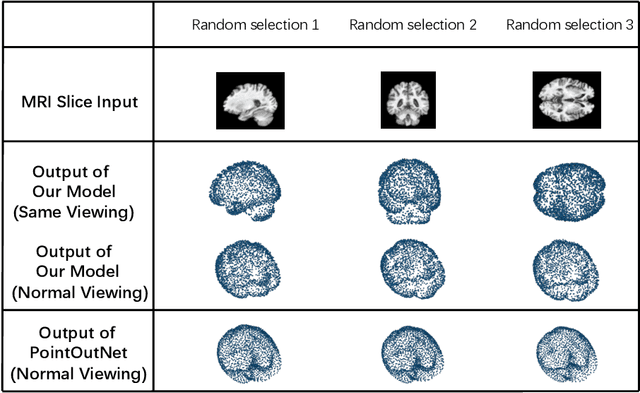

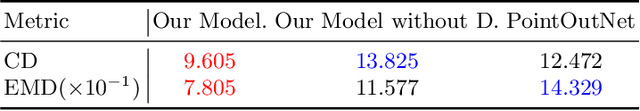

Abstract: Fusing medical images and the corresponding 3D shape representation can provide complementary information and microstructure details to improve operational performance and accuracy in brain surgery. However, in contrast to the abundance of image data, it is almost impossible to obtain intraoperative 3D shape information by physical methods such as sensor scanning, especially in minimally invasive surgery and robot-guided surgery. In this paper, a general generative adversarial network (GAN) architecture based on graph convolutional networks is proposed to reconstruct the 3D point clouds (PCs) of brains from a single 2D image, thus relieving the limitation of acquiring 3D shape data during surgery. Specifically, a tree-structured generative mechanism is constructed to use the latent vector effectively and transfer features between hidden layers accurately. With the proposed generative model, a spontaneous image-to-PC conversion is completed in real time. Competitive qualitative and quantitative experimental results have been achieved with our model. Across multiple evaluation methods, the proposed model outperforms PointOutNet, another common point cloud generative model.
