Bor-Jeng Chen

NVAutoNet: Fast and Accurate 360$^{\circ}$ 3D Visual Perception For Self Driving

Mar 30, 2023

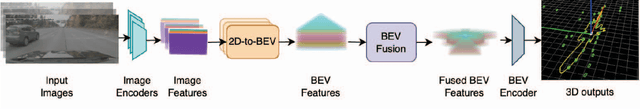

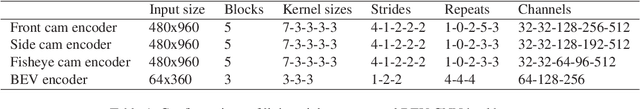

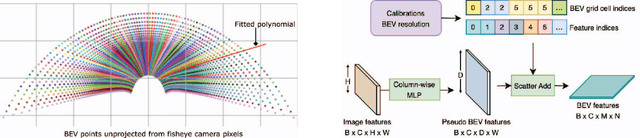

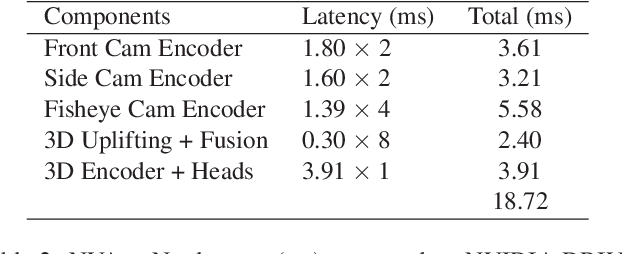

Abstract: Robust real-time perception of the 3D world is essential to autonomous vehicles. We introduce an end-to-end surround camera perception system for self-driving. Our perception system is a novel multi-task, multi-camera network that takes a variable set of time-synced camera images as input and produces a rich collection of 3D signals, such as the sizes, orientations, and locations of obstacles, parking spaces, free spaces, etc. Our perception network is modular and end-to-end: 1) its outputs can be consumed directly by downstream modules without post-processing such as clustering and fusion, which speeds up model deployment and in-car testing; 2) the whole network is trained in a single stage, which speeds up model improvement and iteration. The network is designed for high accuracy while running at 53 fps on the NVIDIA Orin SoC (system-on-a-chip). It is robust to sensor mounting variations (within some tolerances) and can be quickly customized for different vehicle types via efficient model fine-tuning, thanks to its ability to take calibration parameters as additional inputs during training and testing. Most importantly, our network has been successfully deployed and is being tested on real roads.

Exploring Local Context for Multi-target Tracking in Wide Area Aerial Surveillance

Mar 28, 2016

Abstract: Tracking many vehicles in wide coverage aerial imagery is crucial for understanding events in a large field of view. Most approaches aim to associate detections from frame differencing into tracks. However, slow or stopped vehicles result in long-term missing detections, which in turn cause tracking discontinuities. Relying merely on appearance cues to recover missing detections is difficult, as targets are extremely small and in grayscale. In this paper, we address the limitations of detection association methods by coupling them with a local context tracker (LCT), which does not rely on motion detections. On one hand, our LCT learns neighboring spatial relations and tracks each target in consecutive frames using graph optimization. It takes advantage of context constraints to avoid drifting to nearby targets. We efficiently generate hypotheses from sparse and dense flow to keep solutions tractable. On the other hand, we use a detection association strategy to extract short tracks in batch processing. We explicitly handle merged detections by generating additional hypotheses from them. Our evaluation on wide area aerial imagery sequences shows significant improvement over state-of-the-art methods.
