Boon-Chong Seet

Cooperative Multi-Agent Reinforcement Learning Based Distributed Dynamic Spectrum Access in Cognitive Radio Networks

Jun 17, 2021

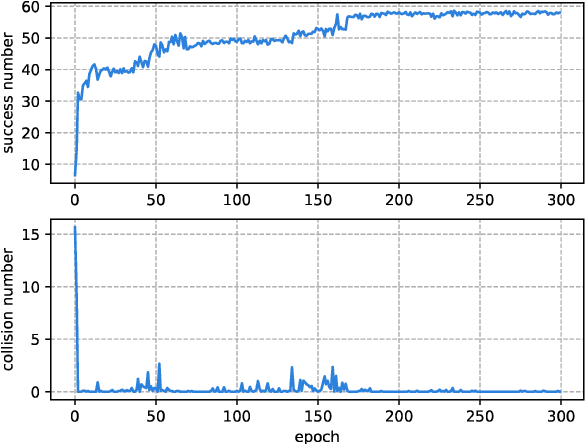

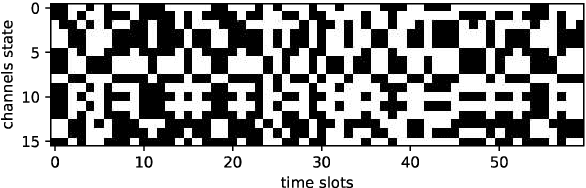

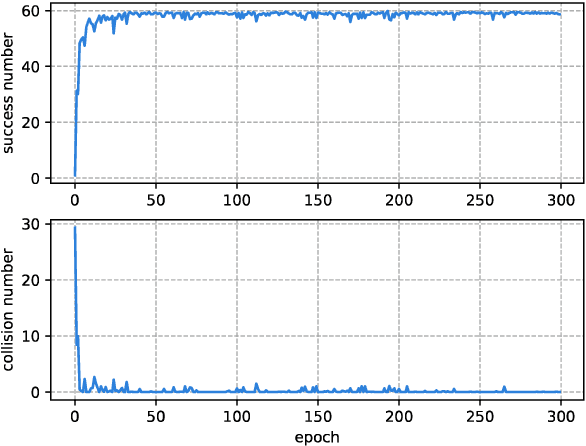

Abstract:With the development of the 5G and Internet of Things, amounts of wireless devices need to share the limited spectrum resources. Dynamic spectrum access (DSA) is a promising paradigm to remedy the problem of inefficient spectrum utilization brought upon by the historical command-and-control approach to spectrum allocation. In this paper, we investigate the distributed DSA problem for multi-user in a typical multi-channel cognitive radio network. The problem is formulated as a decentralized partially observable Markov decision process (Dec-POMDP), and we proposed a centralized off-line training and distributed on-line execution framework based on cooperative multi-agent reinforcement learning (MARL). We employ the deep recurrent Q-network (DRQN) to address the partial observability of the state for each cognitive user. The ultimate goal is to learn a cooperative strategy which maximizes the sum throughput of cognitive radio network in distributed fashion without coordination information exchange between cognitive users. Finally, we validate the proposed algorithm in various settings through extensive experiments. From the simulation results, we can observe that the proposed algorithm can converge fast and achieve almost the optimal performance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge