Bojan Tunguz

Predictive models of RNA degradation through dual crowdsourcing

Oct 14, 2021

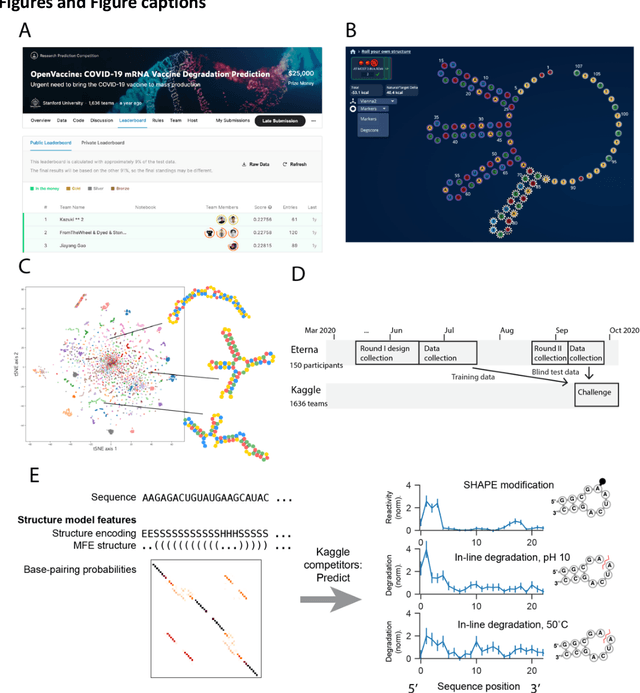

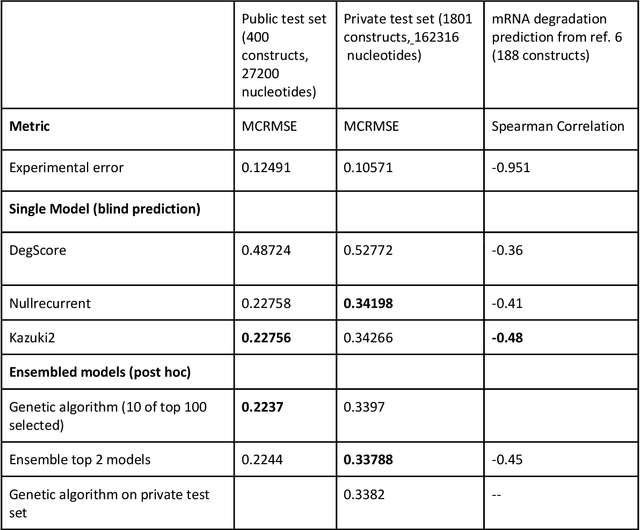

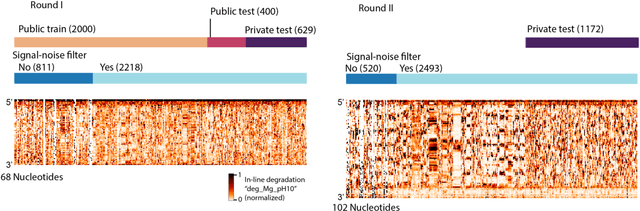

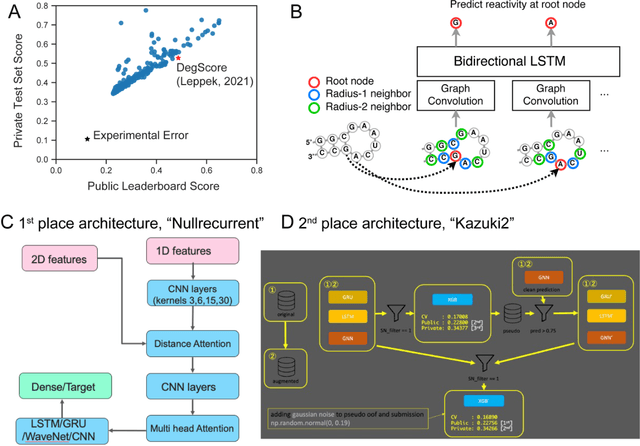

Abstract: Messenger RNA-based medicines hold immense potential, as evidenced by their rapid deployment as COVID-19 vaccines. However, worldwide distribution of mRNA molecules has been limited by their thermostability, which is fundamentally limited by the intrinsic instability of RNA molecules to a chemical degradation reaction called in-line hydrolysis. Predicting the degradation of an RNA molecule is a key task in designing more stable RNA-based therapeutics. Here, we describe a crowdsourced machine learning competition ("Stanford OpenVaccine") on Kaggle, involving single-nucleotide resolution measurements on 6043 diverse RNA constructs of 102-130 nucleotides that were themselves solicited through crowdsourcing on the RNA design platform Eterna. The entire experiment was completed in less than 6 months. Winning models demonstrated test set errors that were better by 50% than the previous state-of-the-art DegScore model. Furthermore, these models generalized to blindly predicting orthogonal degradation data on much longer mRNA molecules (504-1588 nucleotides) with improved accuracy over DegScore and other models. Top teams integrated natural language processing architectures and data augmentation techniques with predictions from previous dynamic programming models for RNA secondary structure. These results indicate that such models are capable of representing in-line hydrolysis with excellent accuracy, supporting their use for designing stabilized messenger RNAs. The integration of two crowdsourcing platforms, one for data set creation and another for machine learning, may be fruitful for other urgent problems that demand scientific discovery on rapid timescales.

GPU Accelerated Exhaustive Search for Optimal Ensemble of Black-Box Optimization Algorithms

Dec 12, 2020

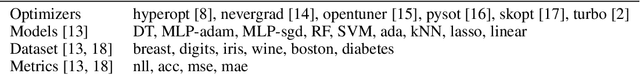

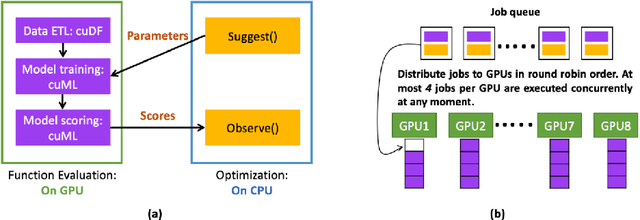

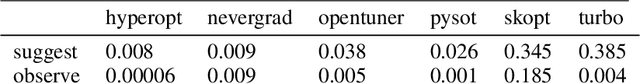

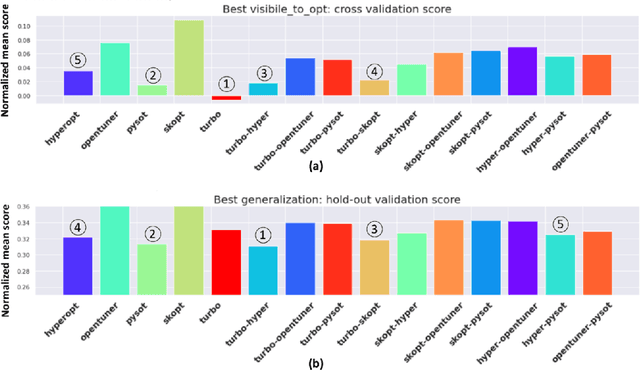

Abstract: Black-box optimization is essential for tuning complex machine learning algorithms, which are easier to experiment with than to understand. In this paper, we show that a simple ensemble of black-box optimization algorithms can outperform any single one of them. However, searching for such an optimal ensemble requires a large number of experiments. We propose a multi-GPU-optimized framework that accelerates a brute-force search for the optimal ensemble of black-box optimization algorithms by running many experiments in parallel. The lightweight optimizations are performed on the CPU, while expensive model training and evaluation are assigned to GPUs. We evaluate 15 optimizers by training 2.7 million models and running 541,440 optimizations. On a DGX-1, the search time is reduced from more than 10 days on two 20-core CPUs to less than 24 hours on 8 GPUs. With the optimal ensemble found by GPU-accelerated exhaustive search, we won 2nd place in the NeurIPS 2020 black-box optimization challenge.
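To make the idea of a brute-force ensemble search concrete, here is a minimal Python sketch. It is not the authors' framework: the two toy optimizers (`RandomSearch`, `LocalPerturb`), the `sphere` objective, the round-robin way members take turns proposing points, and all parameter values are assumptions chosen for illustration, and everything runs sequentially on the CPU rather than in parallel on GPUs.

```python
import itertools
import random


def sphere(x):
    """Toy objective to minimize (stand-in for an expensive model evaluation)."""
    return sum(v * v for v in x)


class RandomSearch:
    """Hypothetical optimizer: proposes uniformly random points."""

    def __init__(self, dim, rng):
        self.dim, self.rng = dim, rng

    def suggest(self):
        return [self.rng.uniform(-5.0, 5.0) for _ in range(self.dim)]

    def observe(self, x, y):
        pass  # random search ignores feedback


class LocalPerturb:
    """Hypothetical optimizer: perturbs the best point observed so far."""

    def __init__(self, dim, rng):
        self.dim, self.rng = dim, rng
        self.best_x, self.best_y = None, float("inf")

    def suggest(self):
        if self.best_x is None:
            return [self.rng.uniform(-5.0, 5.0) for _ in range(self.dim)]
        return [v + self.rng.gauss(0.0, 0.5) for v in self.best_x]

    def observe(self, x, y):
        if y < self.best_y:
            self.best_x, self.best_y = list(x), y


def run_ensemble(optimizer_classes, objective, dim=2, budget=40, seed=0):
    """Run one ensemble: members take turns proposing, all observe every result."""
    rng = random.Random(seed)
    members = [cls(dim, rng) for cls in optimizer_classes]
    best = float("inf")
    for step in range(budget):
        x = members[step % len(members)].suggest()
        y = objective(x)
        best = min(best, y)
        for member in members:
            member.observe(x, y)
    return best


def exhaustive_search(candidates, objective, max_size=2, seeds=range(5)):
    """Score every ensemble of up to max_size optimizers; return the best one."""
    results = {}
    for size in range(1, max_size + 1):
        for combo in itertools.combinations(candidates, size):
            scores = [run_ensemble(combo, objective, seed=s) for s in seeds]
            results[tuple(c.__name__ for c in combo)] = sum(scores) / len(scores)
    best = min(results, key=results.get)
    return best, results


if __name__ == "__main__":
    best, results = exhaustive_search([RandomSearch, LocalPerturb], sphere)
    for names, score in sorted(results.items(), key=lambda kv: kv[1]):
        print(names, round(score, 4))
    print("best ensemble:", best)
```

Each call to `run_ensemble` is independent of the others, which is what makes this kind of search easy to parallelize; the number of ensembles grows combinatorially with the number of candidate optimizers, so the sequential loop above quickly becomes the bottleneck.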
