Bingyao Huang

DPCS: Path Tracing-Based Differentiable Projector-Camera Systems

Mar 15, 2025

Abstract:Projector-camera systems (ProCams) simulation aims to model the physical project-and-capture process and associated scene parameters of a ProCams, and is crucial for spatial augmented reality (SAR) applications such as ProCams relighting and projector compensation. Recent advances use an end-to-end neural network to learn the project-and-capture process. However, these neural network-based methods often implicitly encapsulate scene parameters, such as surface material, gamma, and white balance, in the network parameters, which makes them less interpretable and hard to apply to novel scene simulation. Moreover, neural networks usually learn the indirect illumination implicitly in an image-to-image translation way, which leads to poor performance in simulating complex projection effects such as soft shadows and interreflection. In this paper, we introduce novel path tracing-based differentiable projector-camera systems (DPCS), offering a differentiable ProCams simulation method that explicitly integrates multi-bounce path tracing. Our DPCS models the physical project-and-capture process using differentiable physically-based rendering (PBR), enabling the scene parameters to be explicitly decoupled and learned using far fewer samples. Moreover, our physically-based method not only enables high-quality downstream ProCams tasks, such as ProCams relighting and projector compensation, but also allows novel scene simulation using the learned scene parameters. In experiments, DPCS demonstrates clear advantages over previous approaches in ProCams simulation, offering better interpretability, more efficient handling of complex interreflection and shadow, and requiring fewer training samples.

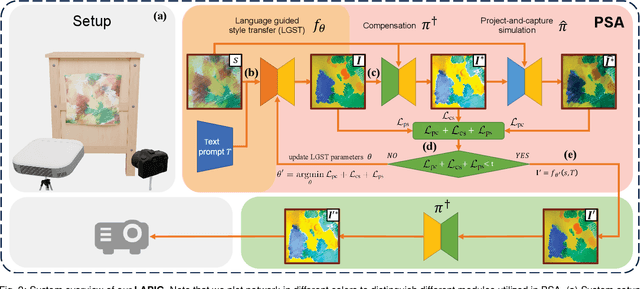

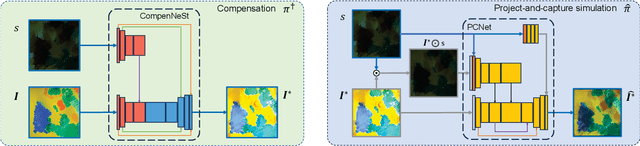

LAPIG: Language Guided Projector Image Generation with Surface Adaptation and Stylization

Mar 15, 2025

Abstract:We propose LAPIG, a language guided projector image generation method with surface adaptation and stylization. LAPIG consists of a projector-camera system and a target textured projection surface. LAPIG takes the user text prompt as input and aims to transform the surface style using the projector. LAPIG's key challenge is that, due to the projector's physical brightness limitation and the surface texture, the viewer's perceived projection may suffer from color saturation and artifacts in both dark and bright regions, such that even with state-of-the-art projector compensation techniques, the viewer may see clear surface texture-related artifacts. Therefore, how to generate a projector image that follows the user's instruction while also displaying minimal surface artifacts is an open problem. To address this issue, we propose projection surface adaptation (PSA) that can generate compensable surface stylization. We first train two networks to simulate the projector compensation and project-and-capture processes. This allows us to find a satisfactory projector image without real project-and-capture and to utilize gradient descent for fast convergence. Then, we design content and saturation losses to guide the projector image generation, such that the generated image shows no clearly perceivable artifacts when projected. Finally, the generated image is projected for visually pleasing surface style morphing effects. The source code and video are available on the project page: https://Yu-chen-Deng.github.io/LAPIG/.

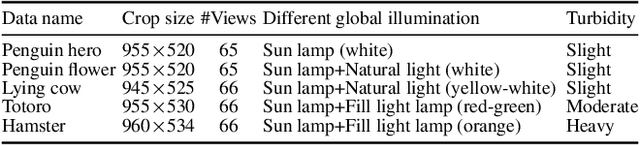

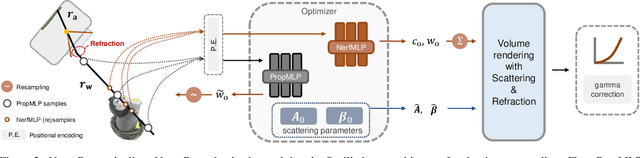

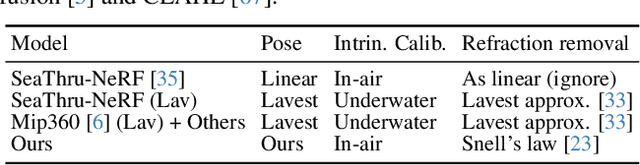

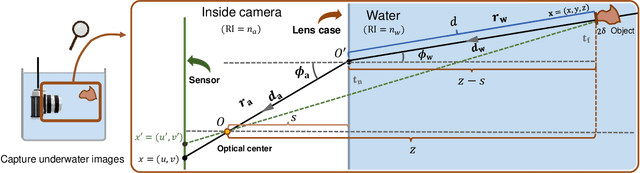

NeuroPump: Simultaneous Geometric and Color Rectification for Underwater Images

Dec 20, 2024

Abstract:Underwater image restoration aims to remove geometric and color distortions due to water refraction, absorption and scattering. Previous studies focus on restoring either color or geometry, but to the best of our knowledge, not both. However, in practice it may be cumbersome to address the two rectifications one by one. In this paper, we propose NeuroPump, a self-supervised method to simultaneously optimize and rectify underwater geometry and color as if water were pumped out. The key idea is to explicitly model refraction, absorption and scattering in a Neural Radiance Field (NeRF) pipeline, such that it not only performs simultaneous geometric and color rectification, but also enables synthesizing novel views and optical effects by controlling the decoupled parameters. In addition, to address the lack of real paired ground truth images, we propose an underwater 360 benchmark dataset that has real paired (i.e., with and without water) images. Our method clearly outperforms other baselines both quantitatively and qualitatively.
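To make the absorption and scattering terms concrete, here is a minimal per-pixel sketch in Python. It uses a commonly cited simplified underwater image formation model (direct signal attenuated exponentially with distance, plus backscatter that saturates toward a veiling light), not NeuroPump's actual NeRF-based formulation, and it ignores refraction entirely. The function names and the parameters `beta_d`, `beta_b` and `b_inf` are assumptions chosen for illustration.

```python
import math


def add_water(clean, distance, beta_d, beta_b, b_inf):
    """Simulate one color channel seen through water (simplified model).

    clean    : water-free intensity in [0, 1]
    distance : camera-to-surface range along the ray
    beta_d   : attenuation coefficient of the direct signal (assumed name)
    beta_b   : backscatter coefficient (assumed name)
    b_inf    : veiling light, the backscatter value at infinite range
    """
    direct = clean * math.exp(-beta_d * distance)
    backscatter = b_inf * (1.0 - math.exp(-beta_b * distance))
    return direct + backscatter


def remove_water(observed, distance, beta_d, beta_b, b_inf):
    """Invert add_water: subtract backscatter, then undo the attenuation."""
    backscatter = b_inf * (1.0 - math.exp(-beta_b * distance))
    return (observed - backscatter) / math.exp(-beta_d * distance)


if __name__ == "__main__":
    seen = add_water(0.8, distance=2.0, beta_d=0.4, beta_b=0.3, b_inf=0.25)
    print(seen, remove_water(seen, 2.0, 0.4, 0.3, 0.25))
```

Because the parameters are explicit rather than hidden in network weights, changing `beta_d` or `b_inf` directly changes the simulated optical effect, which is the kind of control over decoupled parameters the abstract describes.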

GS-ProCams: Gaussian Splatting-based Projector-Camera Systems

Dec 16, 2024

Abstract:We present GS-ProCams, the first Gaussian Splatting-based framework for projector-camera systems (ProCams). GS-ProCams significantly enhances the efficiency of projection mapping (PM), which requires establishing geometric and radiometric mappings between the projector and the camera. Previous CNN-based ProCams are constrained to a specific viewpoint, limiting their applicability to novel perspectives. In contrast, NeRF-based ProCams support view-agnostic projection mapping; however, they require an additional colocated light source and demand significant computational and memory resources. To address this issue, we propose GS-ProCams, which employs 2D Gaussians for scene representation and enables efficient view-agnostic ProCams applications. In particular, we explicitly model the complex geometric and photometric mappings of ProCams using projector responses, the target surface's geometry and materials represented by Gaussians, and a global illumination component. Then, we employ differentiable physically-based rendering to jointly estimate them from captured multi-view projections. Compared to state-of-the-art NeRF-based methods, our GS-ProCams eliminates the need for additional devices while achieving superior ProCams simulation quality. It is also 600 times faster and uses only 1/10 of the GPU memory.

CompenHR: Efficient Full Compensation for High-resolution Projector

Nov 28, 2023

Abstract:Full projector compensation is a practical task of projector-camera systems. It aims to find a projector input image, named compensation image, such that when projected it cancels the geometric and photometric distortions due to the physical environment and hardware. State-of-the-art methods use deep learning to address this problem and show promising performance for low-resolution setups. However, directly applying deep learning to high-resolution setups is impractical due to the long training time and high memory cost. To address this issue, this paper proposes a practical full compensation solution. Firstly, we design an attention-based grid refinement network to improve geometric correction quality. Secondly, we integrate a novel sampling scheme into an end-to-end compensation network to alleviate computation and introduce attention blocks to preserve key features. Finally, we construct a benchmark dataset for high-resolution projector full compensation. In experiments, our method demonstrates clear advantages in both efficiency and quality.

Modeling Deep Learning Based Privacy Attacks on Physical Mail

Dec 22, 2020

Abstract:Mail privacy protection aims to prevent unauthorized access to hidden content within an envelope since normal paper envelopes are not as safe as we think. In this paper, for the first time, we show that with a well designed deep learning model, the hidden content may be largely recovered without opening the envelope. We start by modeling deep learning-based privacy attacks on physical mail content as learning the mapping from the camera-captured envelope front face image to the hidden content, then we explicitly model the mapping as a combination of perspective transformation, image dehazing and denoising using a deep convolutional neural network, named Neural-STE (See-Through-Envelope). We show experimentally that hidden content details, such as texture and image structure, can be clearly recovered. Finally, our formulation and model allow us to design envelopes that can counter deep learning-based privacy attacks on physical mail.

SPAA: Stealthy Projector-based Adversarial Attacks on Deep Image Classifiers

Dec 10, 2020

Abstract:Light-based adversarial attacks aim to fool deep learning-based image classifiers by altering the physical light condition using a controllable light source, e.g., a projector. Compared with physical attacks that place carefully designed stickers or printed adversarial objects, projector-based ones obviate modifying the physical entities. Moreover, projector-based attacks can be performed transiently and dynamically by altering the projection pattern. However, existing approaches focus on projecting adversarial patterns that result in clearly perceptible camera-captured perturbations, while the more interesting yet challenging goal, stealthy projector-based attack, remains an open problem. In this paper, for the first time, we formulate this problem as an end-to-end differentiable process and propose Stealthy Projector-based Adversarial Attack (SPAA). In SPAA, we approximate the real project-and-capture operation using a deep neural network named PCNet, then we include PCNet in the optimization of projector-based attacks such that the generated adversarial projection is physically plausible. Finally, to generate robust and stealthy adversarial projections, we propose an optimization algorithm that uses minimum perturbation and adversarial confidence thresholds to alternate between the adversarial loss and stealthiness loss optimization. Our experimental evaluations show that the proposed SPAA clearly outperforms other methods by achieving higher attack success rates and meanwhile being stealthier.
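The alternation between the adversarial loss and the stealthiness loss can be illustrated with a toy sketch. This is not the SPAA implementation: the deep classifier and PCNet are replaced by a hypothetical linear scorer with a sigmoid confidence, the perturbation is added directly to the input rather than projected, and the names `p_thr` (adversarial confidence threshold) and `d_thr` (minimum perturbation threshold) are assumptions made for this example.

```python
import math


def confidence(w, b, x):
    """Target-class confidence of a toy linear scorer (stand-in for a classifier)."""
    logit = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-logit))


def norm(v):
    return math.sqrt(sum(c * c for c in v))


def alternating_attack(w, b, x, p_thr=0.9, d_thr=0.5, lr=0.1, steps=100):
    """Alternate between raising confidence and shrinking the perturbation."""
    delta = [0.0] * len(x)
    best = None  # smallest perturbation seen so far that is still adversarial
    w_norm = norm(w)
    for _ in range(steps):
        conf = confidence(w, b, [xi + di for xi, di in zip(x, delta)])
        d = norm(delta)
        if conf >= p_thr and (best is None or d < norm(best)):
            best = list(delta)
        if conf < p_thr or d < d_thr:
            # Adversarial step: the gradient of the logit w.r.t. delta is w.
            delta = [di + lr * wi / w_norm for di, wi in zip(delta, w)]
        else:
            # Stealthiness step: move delta toward zero along its own direction.
            scale = max(d, 1e-12)
            delta = [di - lr * di / scale for di in delta]
    return best


if __name__ == "__main__":
    w, b, x = [1.0, -2.0, 0.5], 0.0, [0.2, 0.4, 0.1]
    best = alternating_attack(w, b, x)
    print(best, norm(best))
```

The loop settles into hovering around the confidence threshold, so the returned perturbation is close to the smallest one (at this step size) that still fools the toy scorer.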

End-to-end Full Projector Compensation

Aug 04, 2020

Abstract:Full projector compensation aims to modify a projector input image to compensate for both geometric and photometric disturbance of the projection surface. Traditional methods usually solve the two parts separately and may suffer from suboptimal solutions. In this paper, we propose the first end-to-end differentiable solution, named CompenNeSt++, to solve the two problems jointly. First, we propose a novel geometric correction subnet, named WarpingNet, which is designed with a cascaded coarse-to-fine structure to learn the sampling grid directly from sampling images. Second, we propose a novel photometric compensation subnet, named CompenNeSt, which is designed with a siamese architecture to capture the photometric interactions between the projection surface and the projected images, and to use such information to compensate the geometrically corrected images. By concatenating WarpingNet with CompenNeSt, CompenNeSt++ accomplishes full projector compensation and is end-to-end trainable. Third, to improve practicability, we propose a novel synthetic data-based pre-training strategy to significantly reduce the number of training images and training time. Moreover, we construct the first setup-independent full compensation benchmark to facilitate future studies. In thorough experiments, our method shows clear advantages over prior art with promising compensation quality and meanwhile being practically convenient.
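Geometric correction with a sampling grid amounts to looking up, for each output pixel, a (possibly fractional) location in the input image. The sketch below shows only that lookup step with bilinear interpolation on a grayscale image stored as nested lists. It is an illustration under stated assumptions, not WarpingNet: the grid here is given by hand in pixel coordinates, whereas the abstract describes a network that learns the grid, and the function names are hypothetical.

```python
import math


def bilinear_sample(image, x, y):
    """Sample a grayscale image (list of rows) at a fractional pixel location."""
    h, w = len(image), len(image[0])
    # Clamp coordinates so lookups outside the image reuse the border pixels.
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def warp_with_grid(image, grid):
    """Warp an image: grid[i][j] = (x, y) location to sample for output pixel (i, j)."""
    return [[bilinear_sample(image, x, y) for (x, y) in row] for row in grid]


if __name__ == "__main__":
    image = [[0.0, 1.0], [2.0, 3.0]]
    identity = [[(0, 0), (1, 0)], [(0, 1), (1, 1)]]
    print(warp_with_grid(image, identity))
    print(warp_with_grid(image, [[(0.5, 0.5)]]))
```

Bilinear interpolation is differentiable with respect to the grid coordinates almost everywhere, which is what lets a grid-predicting subnet be trained end to end together with a photometric subnet.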

DeLTra: Deep Light Transport for Projector-Camera Systems

Mar 06, 2020

Abstract:In projector-camera systems, light transport models the propagation from projector emitted radiance to camera-captured irradiance. In this paper, we propose the first end-to-end trainable solution named Deep Light Transport (DeLTra) that estimates radiometrically uncalibrated projector-camera light transport. DeLTra is designed to have two modules: DepthToAttribute and ShadingNet. DepthToAttribute explicitly learns rays, depth and normal, and then estimates rough Phong illuminations. Afterwards, the CNN-based ShadingNet renders a photorealistic camera-captured image using estimated shading attributes and rough Phong illuminations. A particular challenge addressed by DeLTra is occlusion, for which we exploit the epipolar constraint and propose a novel differentiable direct light mask. Thus, it can be learned end-to-end along with the other DeLTra modules. Once trained, DeLTra can be applied simultaneously to three projector-camera tasks: image-based relighting, projector compensation and depth/normal reconstruction. In our experiments, DeLTra shows clear advantages over prior art, with promising quality while being practically convenient.
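For readers unfamiliar with the Phong illumination mentioned in the abstract, the following is a minimal sketch of the classic Phong reflection model for a single surface point and a single light. It is the textbook formula, not DeLTra's module: the coefficients `ka`, `kd`, `ks` and `shininess` are illustrative defaults, and in a projector-camera setting the light direction would point toward the projector.

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def phong_intensity(normal, to_light, to_viewer,
                    ka=0.1, kd=0.7, ks=0.2, shininess=16):
    """Classic Phong shading: ambient + diffuse + specular for one light.

    normal, to_light, to_viewer are 3-vectors at the surface point; the
    latter two point away from the surface toward the light and the camera.
    """
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    n_dot_l = dot(n, l)
    diffuse = max(n_dot_l, 0.0)
    specular = 0.0
    if n_dot_l > 0.0:
        # Mirror reflection of the light direction about the normal.
        r = tuple(2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l))
        specular = max(dot(r, v), 0.0) ** shininess
    return ka + kd * diffuse + ks * specular


if __name__ == "__main__":
    # Light and camera both straight above the surface: all three terms peak.
    print(phong_intensity((0, 0, 1), (0, 0, 1), (0, 0, 1)))
    # Light behind the surface: only the ambient term remains.
    print(phong_intensity((0, 0, 1), (0, 0, -1), (0, 0, 1)))
```

A shading estimate like this is only rough because it ignores occlusion and interreflection, which is consistent with the abstract's use of a separate learned shading stage and a direct light mask.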

CompenNet++: End-to-end Full Projector Compensation

Aug 17, 2019

Abstract:Full projector compensation aims to modify a projector input image such that it can compensate for both geometric and photometric disturbance of the projection surface. Traditional methods usually solve the two parts separately, although they are known to correlate with each other. In this paper, we propose the first end-to-end solution, named CompenNet++, to solve the two problems jointly. Our work non-trivially extends CompenNet, which was recently proposed for photometric compensation with promising performance. First, we propose a novel geometric correction subnet, which is designed with a cascaded coarse-to-fine structure to learn the sampling grid directly from photometric sampling images. Second, by concatenating the geometric correction subnet with CompenNet, CompenNet++ accomplishes full projector compensation and is end-to-end trainable. Third, after training, we significantly simplify both geometric and photometric compensation parts, and hence largely improve the running time efficiency. Moreover, we construct the first setup-independent full compensation benchmark to facilitate the study on this topic. In our thorough experiments, our method shows clear advantages over prior art, with promising compensation quality while being practically convenient.
