Bingyan Nie

MME-Unify: A Comprehensive Benchmark for Unified Multimodal Understanding and Generation Models

Apr 07, 2025

Abstract: Existing MLLM benchmarks face significant challenges in evaluating Unified MLLMs (U-MLLMs) due to: 1) a lack of standardized benchmarks for traditional tasks, leading to inconsistent comparisons; 2) an absence of benchmarks for mixed-modality generation, which leaves multimodal reasoning capabilities unassessed. We present a comprehensive evaluation framework designed to systematically assess U-MLLMs. Our benchmark includes: 1. Standardized Traditional Task Evaluation. We sample from 12 datasets, covering 10 tasks with 30 subtasks, ensuring consistent and fair comparisons across studies. 2. Unified Task Assessment. We introduce five novel tasks testing multimodal reasoning, including image editing, commonsense QA with image generation, and geometric reasoning. 3. Comprehensive Model Benchmarking. We evaluate 12 leading U-MLLMs, such as Janus-Pro, EMU3, VILA-U, and Gemini2-flash, alongside specialized understanding (e.g., Claude-3.5-Sonnet) and generation models (e.g., DALL-E-3). Our findings reveal substantial performance gaps in existing U-MLLMs, highlighting the need for more robust models capable of handling mixed-modality tasks effectively. The code and evaluation data can be found at https://mme-unify.github.io/.

Multi-View Factorizing and Disentangling: A Novel Framework for Incomplete Multi-View Multi-Label Classification

Jan 11, 2025

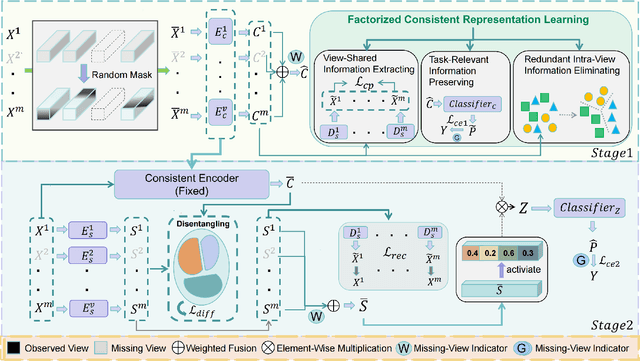

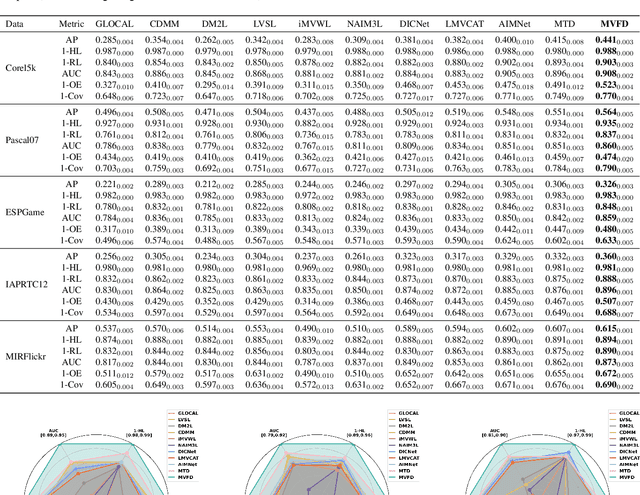

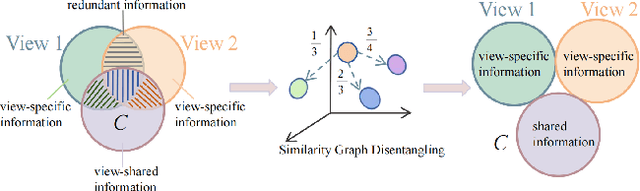

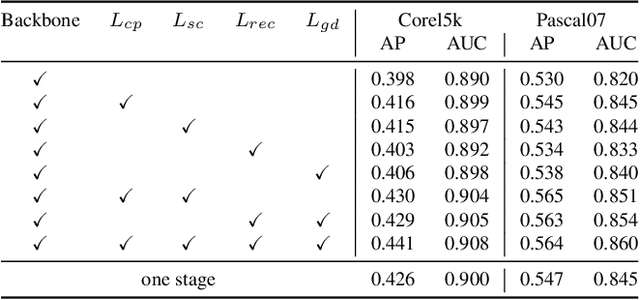

Abstract: Multi-view multi-label classification (MvMLC) has recently garnered significant research attention due to its wide range of real-world applications. However, incompleteness in views and labels is a common challenge, often resulting from data collection oversights and uncertainties in manual annotation. Furthermore, learning robust multi-view representations that are both view-consistent and view-specific from diverse views remains a challenging problem in MvMLC. To address these issues, we propose a novel framework for incomplete multi-view multi-label classification (iMvMLC). Our method factorizes multi-view representations into two independent sets of factors, view-consistent and view-specific, and we correspondingly design a graph disentangling loss to fully reduce redundancy between these representations. Additionally, our framework decomposes consistent representation learning into three key sub-objectives: (i) how to extract view-shared information across different views, (ii) how to eliminate intra-view redundancy in consistent representations, and (iii) how to preserve task-relevant information. To this end, we design a robust task-relevant consistency learning module that collaboratively learns high-quality consistent representations, leveraging a masked cross-view prediction (MCP) strategy and information theory. Notably, all modules in our framework are developed to function effectively under conditions of incomplete views and labels, making our method adaptable to various multi-view and multi-label datasets. Extensive experiments on five datasets demonstrate that our method outperforms other leading approaches.
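
The abstract states that every module is designed to work with incomplete labels but does not give the loss. As a rough illustration only, and not the authors' formulation, one common way to train with partially annotated labels is to average a binary cross-entropy over the annotated entries alone. The function name `masked_bce` and the `label_mask` convention (1 = annotated, 0 = unknown) are assumptions made for this sketch.

```python
import math


def masked_bce(probs, labels, label_mask, eps=1e-12):
    """Mean binary cross-entropy over annotated labels only (illustrative).

    probs, labels, and label_mask are lists of per-sample lists with the
    same shape. label_mask[i][c] is 1 when label c of sample i was
    annotated and 0 when it is unknown (an assumed convention).
    """
    total, observed = 0.0, 0
    for p_row, y_row, m_row in zip(probs, labels, label_mask):
        for p, y, m in zip(p_row, y_row, m_row):
            if not m:
                continue  # unknown label: contributes nothing to the loss
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
            observed += 1
    # With no annotated labels at all, return 0.0 rather than divide by zero.
    return total / observed if observed else 0.0
```

Because masked entries are skipped entirely, whatever the model predicts for an unannotated label has no effect on the loss value.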

Incomplete Multi-view Multi-label Classification via a Dual-level Contrastive Learning Framework

Nov 27, 2024

Abstract: Recently, multi-view and multi-label classification have become significant domains for comprehensive data analysis and exploration. However, incompleteness in both views and labels remains common in real-world multi-view multi-label classification. In this paper, we focus on the double-missing multi-view multi-label classification task and propose a dual-level contrastive learning framework to address it. Unlike existing works, which couple consistent information and view-specific information in the same feature space, we decouple the two heterogeneous properties into different spaces and employ contrastive learning theory to fully disentangle them. Specifically, our method first introduces a two-channel decoupling module that contains a shared representation and a view-proprietary representation to effectively extract consistency and complementarity information across all views. Second, to efficiently filter out high-quality consistent information from multi-view representations, two consistency objectives based on contrastive learning are applied to the high-level features and the semantic labels, respectively. Extensive experiments on several widely used benchmark datasets demonstrate that the proposed method has more stable and superior classification performance.
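
The abstract describes contrastive consistency objectives under missing views without giving their form. The sketch below is a generic InfoNCE-style loss between two views that only uses samples observed in both; it is an assumption-laden illustration, not the paper's dual-level objective. The names `masked_info_nce`, `present_a`, `present_b`, and the `temperature` default are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return dot / (nu * nv)


def masked_info_nce(view_a, view_b, present_a, present_b, temperature=0.5):
    """InfoNCE-style loss over samples observed in both views (illustrative).

    view_a[i] and view_b[i] are embeddings of sample i in each view.
    present_a[i] / present_b[i] are 1 when that view of sample i exists.
    The positive pair is (view_a[i], view_b[i]); the negatives are the
    view_b embeddings of the other samples observed in both views.
    """
    idx = [i for i in range(len(view_a)) if present_a[i] and present_b[i]]
    if len(idx) < 2:
        return 0.0  # no negatives available, so no contrastive signal
    loss = 0.0
    for i in idx:
        logits = [cosine(view_a[i], view_b[j]) / temperature for j in idx]
        m = max(logits)  # subtract the max for a stable log-sum-exp
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        positive = cosine(view_a[i], view_b[i]) / temperature
        loss += log_denom - positive
    return loss / len(idx)
```

Samples with a missing view are dropped from both the positives and the negatives, so placeholder embeddings for absent views never influence the loss. Aligned views should yield a lower value than views whose sample order has been shuffled.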
