Bingxin Gu

DeepMSS: Deep Multi-Modality Segmentation-to-Survival Learning for Survival Outcome Prediction from PET/CT Images

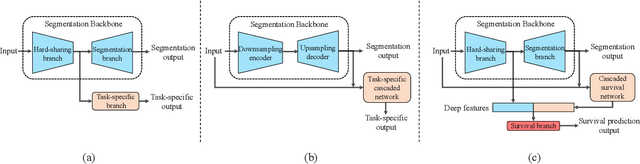

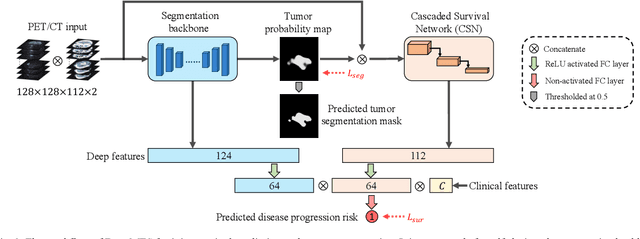

May 17, 2023

Abstract:Survival prediction is a major concern for cancer management. Deep survival models based on deep learning have been widely adopted to perform end-to-end survival prediction from medical images. Recent deep survival models achieved promising performance by jointly performing tumor segmentation with survival prediction, where the models were guided to extract tumor-related information through Multi-Task Learning (MTL). However, existing deep survival models have difficulties in exploring out-of-tumor prognostic information (e.g., local lymph node metastasis and adjacent tissue invasions). In addition, existing deep survival models are underdeveloped in utilizing multi-modality images. Empirically-designed strategies were commonly adopted to fuse multi-modality information via fixed pre-designed networks. In this study, we propose a Deep Multi-modality Segmentation-to-Survival model (DeepMSS) for survival prediction from PET/CT images. Instead of adopting MTL, we propose a novel Segmentation-to-Survival Learning (SSL) strategy, where our DeepMSS is trained for tumor segmentation and survival prediction sequentially. This strategy enables the DeepMSS to initially focus on tumor regions and gradually expand its focus to include other prognosis-related regions. We also propose a data-driven strategy to fuse multi-modality image information, which realizes automatic optimization of fusion strategies based on training data during training and also improves the adaptability of DeepMSS to different training targets. Our DeepMSS is also capable of incorporating conventional radiomics features as an enhancement, where handcrafted features can be extracted from the DeepMSS-segmented tumor regions and cooperatively integrated into the DeepMSS's training and inference. Extensive experiments with two large clinical datasets show that our DeepMSS outperforms state-of-the-art survival prediction methods.

DeepMTS: Deep Multi-task Learning for Survival Prediction in Patients with Advanced Nasopharyngeal Carcinoma using Pretreatment PET/CT

Sep 16, 2021

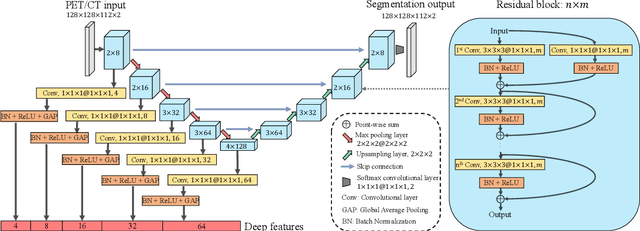

Abstract:Nasopharyngeal Carcinoma (NPC) is a worldwide malignant epithelial cancer. Survival prediction is a major concern for NPC patients, as it provides early prognostic information that is needed to guide treatments. Recently, deep learning, which leverages Deep Neural Networks (DNNs) to learn deep representations of image patterns, has been introduced to survival prediction in various cancers including NPC. It has been reported that image-derived end-to-end deep survival models have the potential to outperform clinical prognostic indicators and traditional radiomics-based survival models in prognostic performance. However, deep survival models, especially 3D models, require large amounts of training image data to avoid overfitting. Unfortunately, medical image data is usually scarce, especially for Positron Emission Tomography/Computed Tomography (PET/CT) due to the high cost of PET/CT scanning. Compared to Magnetic Resonance Imaging (MRI) or Computed Tomography (CT), which provide only anatomical information of tumors, PET/CT provides both anatomical (from CT) and metabolic (from PET) information and is therefore promising for more accurate survival prediction. However, we have not identified any 3D end-to-end deep survival model that applies to small PET/CT data of NPC patients. In this study, we introduced the concept of multi-task learning into deep survival models to address the overfitting problem resulting from small data. Tumor segmentation was incorporated as an auxiliary task to enhance the model's efficiency of learning from scarce PET/CT data. Based on this idea, we proposed a 3D end-to-end Deep Multi-Task Survival model (DeepMTS) for joint survival prediction and tumor segmentation. Our DeepMTS can jointly learn survival prediction and tumor segmentation using PET/CT data of only 170 patients with advanced NPC.
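The abstract above does not state how the two tasks are combined during training. As a minimal sketch of the general multi-task idea, and not the DeepMTS implementation, one common choice is to add a weighted segmentation loss to a survival loss. The specific losses used here (a Cox negative partial log-likelihood and a soft Dice loss), the function names, and the `seg_weight` parameter are all assumptions made for illustration.

```python
import math


def dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss over flattened voxel probabilities and binary labels."""
    intersection = sum(p * t for p, t in zip(pred, target))
    denom = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denom + eps)


def cox_loss(risks, times, events):
    """Negative Cox partial log-likelihood, averaged over observed events.

    The risk set of patient i is every patient whose follow-up time is
    greater than or equal to time i (Breslow-style handling of ties).
    """
    total, n_events = 0.0, 0
    for r_i, t_i, e_i in zip(risks, times, events):
        if not e_i:
            continue  # censored patients only appear in risk sets
        risk_set = sum(math.exp(r_j) for r_j, t_j in zip(risks, times) if t_j >= t_i)
        total += math.log(risk_set) - r_i
        n_events += 1
    return total / max(n_events, 1)


def multi_task_loss(risks, times, events, seg_pred, seg_target, seg_weight=1.0):
    """Hypothetical joint objective: survival loss plus weighted auxiliary
    segmentation loss. The weighting scheme is an assumption."""
    return cox_loss(risks, times, events) + seg_weight * dice_loss(seg_pred, seg_target)
```

In this sketch the segmentation term acts as a regularizer: gradients from the Dice loss push shared features toward tumor regions even when few survival events are available.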

Prediction of 5-year Progression-Free Survival in Advanced Nasopharyngeal Carcinoma with Pretreatment PET/CT using Multi-Modality Deep Learning-based Radiomics

Mar 09, 2021

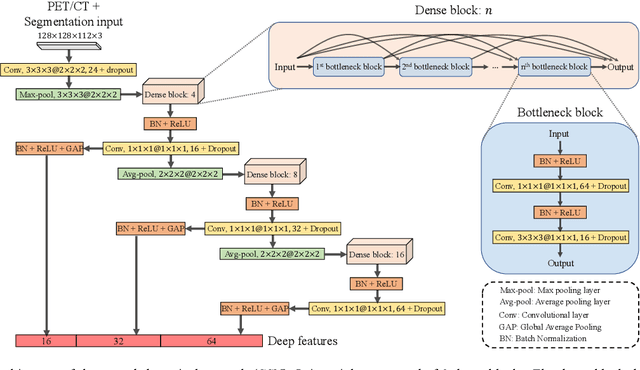

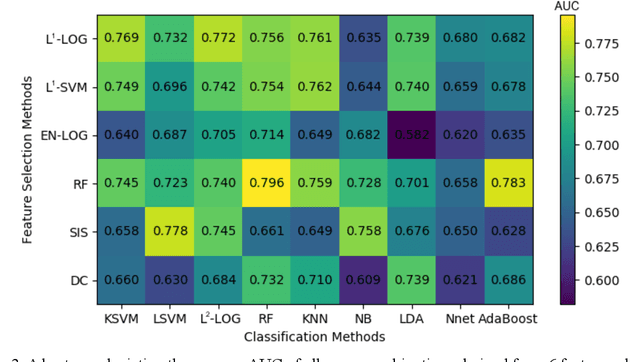

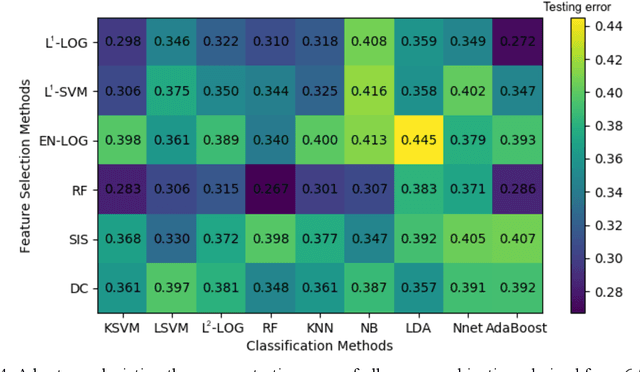

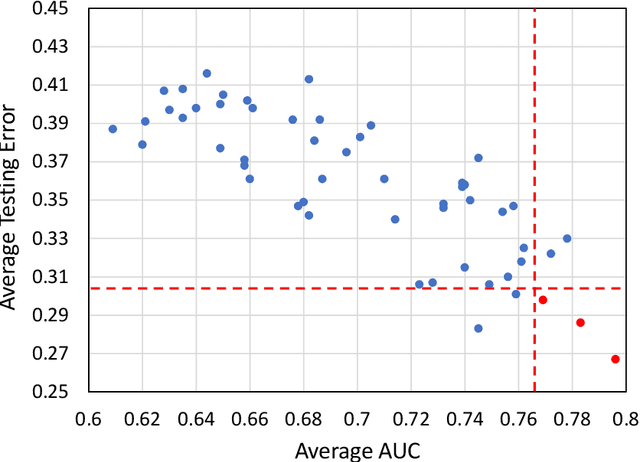

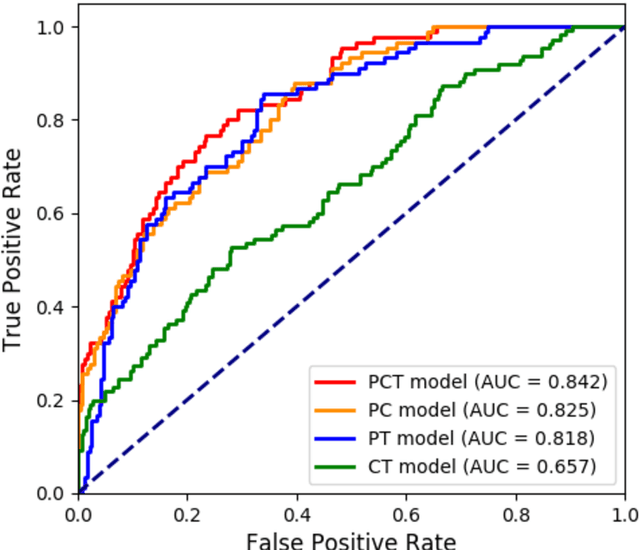

Abstract:Deep Learning-based Radiomics (DLR) has achieved great success in medical image analysis. In this study, we aim to explore the capability of DLR for survival prediction in NPC. We developed an end-to-end multi-modality DLR model using pretreatment PET/CT images to predict 5-year Progression-Free Survival (PFS) in advanced NPC. A total of 170 patients with pathologically confirmed advanced NPC (TNM stage III or IVa) were enrolled in this study. A 3D Convolutional Neural Network (CNN), with two branches to process PET and CT separately, was optimized to extract deep features from pretreatment multi-modality PET/CT images and use the derived features to predict the probability of 5-year PFS. Optionally, TNM stage, as a high-level clinical feature, can be integrated into our DLR model to further improve prognostic performance. For a comparison between Conventional Radiomics (CR) and DLR, 1456 handcrafted features were extracted, and three top CR methods were selected as benchmarks from 54 combinations of 6 feature selection methods and 9 classification methods. Compared to the three CR methods, our multi-modality DLR models using both PET and CT, with or without TNM stage (named PCT or PC model), resulted in the highest prognostic performance. Furthermore, the multi-modality PCT model outperformed single-modality DLR models using only PET and TNM stage (PT model) or only CT and TNM stage (CT model). Our study identified a potential radiomics-based prognostic model for survival prediction in advanced NPC, and suggests that DLR could serve as a tool for aiding in cancer management.
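The benchmark grid described above (6 feature selection methods crossed with 9 classification methods, giving 54 candidate pipelines, of which the top three were kept) can be sketched as follows. The abstract does not name the methods, so the `selector_*` and `classifier_*` labels are placeholders, and `top_k` is a hypothetical helper that expects validation scores supplied by the caller.

```python
from itertools import product

# Placeholder labels: the abstract does not name the actual methods.
selectors = [f"selector_{i}" for i in range(1, 7)]       # 6 feature selection methods
classifiers = [f"classifier_{j}" for j in range(1, 10)]  # 9 classification methods

# Every (selector, classifier) pair is one candidate pipeline: 6 * 9 = 54.
pipelines = list(product(selectors, classifiers))


def top_k(scores, k=3):
    """Return the k pipelines with the highest score.

    `scores` maps a (selector, classifier) pair to a validation metric
    computed elsewhere; no scores are assumed here.
    """
    return sorted(scores, key=scores.get, reverse=True)[:k]
```

Each pipeline would be fitted and scored on held-out data before calling `top_k`; how the scores were obtained in the study is not specified in the abstract.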
