Bingjie Wang

FutureX-Pro: Extending Future Prediction to High-Value Vertical Domains

Jan 18, 2026

Abstract:Building upon FutureX, which established a live benchmark for general-purpose future prediction, this report introduces FutureX-Pro, including FutureX-Finance, FutureX-Retail, FutureX-PublicHealth, FutureX-NaturalDisaster, and FutureX-Search. These together form a specialized framework extending agentic future prediction to high-value vertical domains. While generalist agents demonstrate proficiency in open-domain search, their reliability in capital-intensive and safety-critical sectors remains under-explored. FutureX-Pro targets four economically and socially pivotal verticals: Finance, Retail, Public Health, and Natural Disaster. We benchmark agentic Large Language Models (LLMs) on entry-level yet foundational prediction tasks -- ranging from forecasting market indicators and supply chain demands to tracking epidemic trends and natural disasters. By adapting the contamination-free, live-evaluation pipeline of FutureX, we assess whether current State-of-the-Art (SOTA) agentic LLMs possess the domain grounding necessary for industrial deployment. Our findings reveal the performance gap between generalist reasoning and the precision required for high-value vertical applications.

Video Individual Counting With Implicit One-to-Many Matching

Jun 16, 2025

Abstract:Video Individual Counting (VIC) is a recently introduced task that aims to estimate pedestrian flux from a video. It extends conventional Video Crowd Counting (VCC) beyond the per-frame pedestrian count. In contrast to VCC, which only learns to count repeated pedestrian patterns across frames, the key problem of VIC is how to identify co-existent pedestrians between frames, which turns out to be a correspondence problem. Existing VIC approaches, however, mainly follow a one-to-one (O2O) matching strategy where the same pedestrian must be exactly matched between frames, leading to sensitivity to appearance variations or missing detections. In this work, we show that the O2O matching could be relaxed to a one-to-many (O2M) matching problem, which better fits the problem nature of VIC and can leverage the social grouping behavior of walking pedestrians. We therefore introduce OMAN, a simple but effective VIC model with implicit One-to-Many mAtchiNg, featuring an implicit context generator and a one-to-many pairwise matcher. Experiments on the SenseCrowd and CroHD benchmarks show that OMAN achieves state-of-the-art performance. Code is available at https://github.com/tiny-smart/OMAN.

Freqformer: Frequency-Domain Transformer for 3-D Visualization and Quantification of Human Retinal Circulation

Nov 17, 2024

Abstract:We introduce Freqformer, a novel Transformer-based architecture designed for 3-D, high-definition visualization of human retinal circulation from a single scan in commercial optical coherence tomography angiography (OCTA). Freqformer addresses the challenge of limited signal-to-noise ratio in OCTA volume by utilizing a complex-valued frequency-domain module (CFDM) and a simplified multi-head attention (Sim-MHA) mechanism. Using merged volumes as ground truth, Freqformer enables accurate reconstruction of retinal vasculature across the depth planes, allowing for 3-D quantification of capillary segments (count, density, and length). Our method outperforms state-of-the-art convolutional neural networks (CNNs) and several Transformer-based models, with superior performance in peak signal-to-noise ratio (PSNR), structural similarity index measure (SSIM), and learned perceptual image patch similarity (LPIPS). Furthermore, Freqformer demonstrates excellent generalizability across lower scanning density, effectively enhancing OCTA scans with larger fields of view (from 3$\times$3 $mm^{2}$ to 6$\times$6 $mm^{2}$ and 12$\times$12 $mm^{2}$). These results suggest that Freqformer can significantly improve the understanding and characterization of retinal circulation, offering potential clinical applications in diagnosing and managing retinal vascular diseases.

BreakNet: Discontinuity-Resilient Multi-Scale Transformer Segmentation of Retinal Layers

Aug 26, 2024

Abstract:Visible light optical coherence tomography (vis-OCT) is gaining traction for retinal imaging due to its high resolution and functional capabilities. However, the significant absorption of hemoglobin in the visible light range leads to pronounced shadow artifacts from retinal blood vessels, posing challenges for accurate layer segmentation. In this study, we present BreakNet, a multi-scale Transformer-based segmentation model designed to address boundary discontinuities caused by these shadow artifacts. BreakNet utilizes hierarchical Transformer and convolutional blocks to extract multi-scale global and local feature maps, capturing essential contextual, textural, and edge characteristics. The model incorporates decoder blocks that expand pathways to enhance the extraction of fine details and semantic information, ensuring precise segmentation. Evaluated on rodent retinal images acquired with prototype vis-OCT, BreakNet demonstrated superior performance over state-of-the-art segmentation models, such as TCCT-BP and U-Net, even when faced with limited-quality ground truth data. Our findings indicate that BreakNet has the potential to significantly improve retinal quantification and analysis.

Fully Automated OCT-based Tissue Screening System

May 15, 2024

Abstract:This study introduces a groundbreaking optical coherence tomography (OCT) imaging system dedicated for high-throughput screening applications using ex vivo tissue culture. Leveraging OCT's non-invasive, high-resolution capabilities, the system is equipped with a custom-designed motorized platform and tissue detection ability for automated, successive imaging across samples. Transformer-based deep learning segmentation algorithms further ensure robust, consistent, and efficient readouts meeting the standards for screening assays. Validated using retinal explant cultures from a mouse model of retinal degeneration, the system provides robust, rapid, reliable, unbiased, and comprehensive readouts of tissue response to treatments. This fully automated OCT-based system marks a significant advancement in tissue screening, promising to transform drug discovery, as well as other relevant research fields.

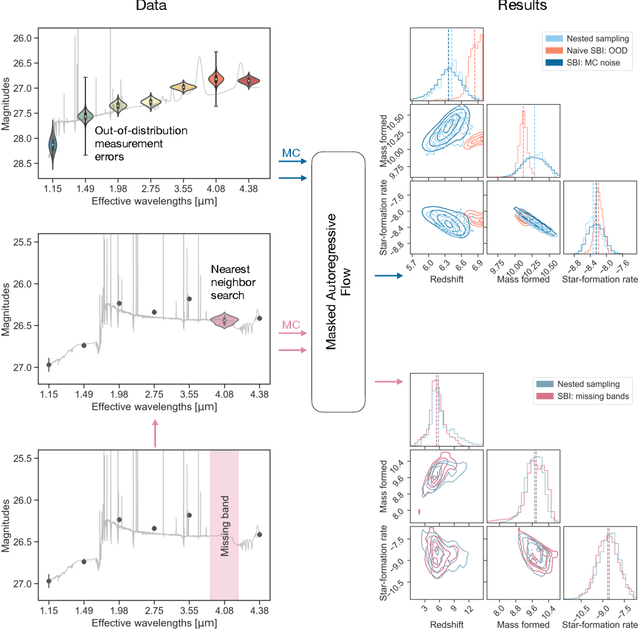

Monte Carlo Techniques for Addressing Large Errors and Missing Data in Simulation-based Inference

Nov 07, 2022

Abstract:Upcoming astronomical surveys will observe billions of galaxies across cosmic time, providing a unique opportunity to map the many pathways of galaxy assembly to an incredibly high resolution. However, the huge amount of data also poses an immediate computational challenge: current tools for inferring parameters from the light of galaxies take $\gtrsim 10$ hours per fit. This is prohibitively expensive. Simulation-based Inference (SBI) is a promising solution. However, it requires simulated data with identical characteristics to the observed data, whereas real astronomical surveys are often highly heterogeneous, with missing observations and variable uncertainties determined by sky and telescope conditions. Here we present a Monte Carlo technique for treating out-of-distribution measurement errors and missing data using standard SBI tools. We show that out-of-distribution measurement errors can be approximated by using standard SBI evaluations, and that missing data can be marginalized over using SBI evaluations over nearby data realizations in the training set. While these techniques slow the inference process from $\sim 1$ sec to $\sim 1.5$ min per object, this is still significantly faster than standard approaches while also dramatically expanding the applicability of SBI. This expanded regime has broad implications for future applications to astronomical surveys.
