Bilal Taha

Link, Synthesize, Retrieve: Universal Document Linking for Zero-Shot Information Retrieval

Oct 24, 2024

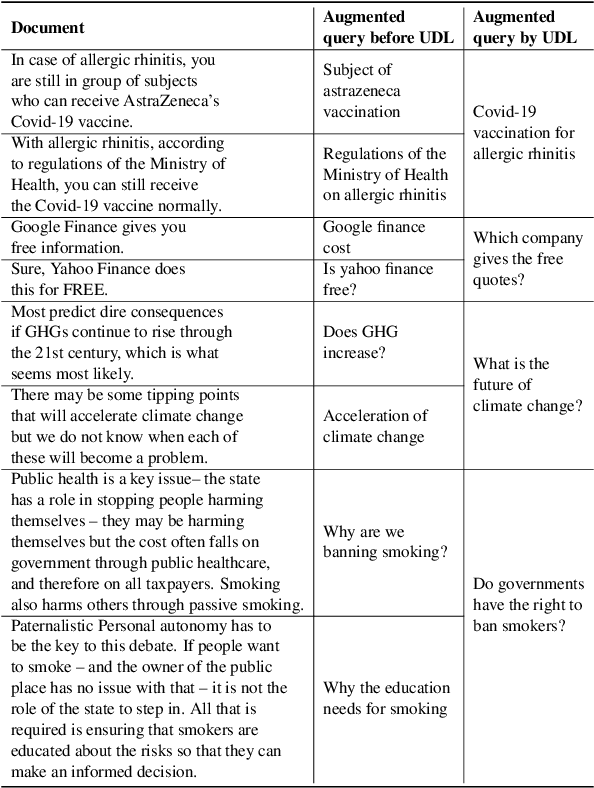

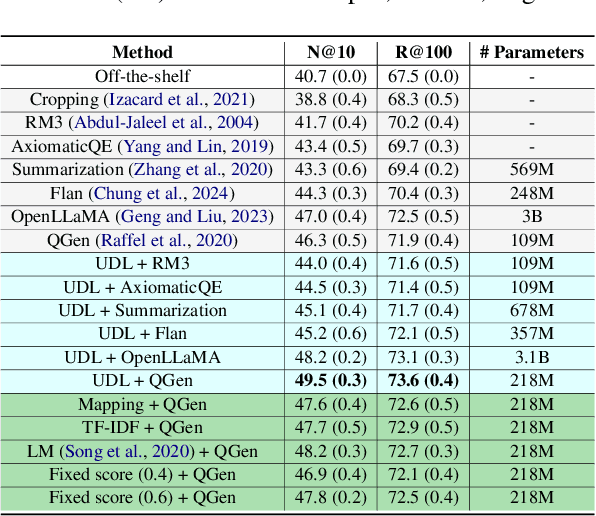

Abstract: Despite recent advances in information retrieval (IR), zero-shot IR remains a significant challenge, especially when dealing with new domains, languages, and newly released use cases that lack historical query traffic from existing users. For such cases, it is common to use query augmentation followed by fine-tuning pre-trained models on the document data paired with synthetic queries. In this work, we propose a novel Universal Document Linking (UDL) algorithm, which links similar documents to enhance synthetic query generation across multiple datasets with different characteristics. UDL uses entropy to choose the similarity model and named entity recognition (NER) to decide, from the similarity scores, which documents to link. Our empirical studies demonstrate the effectiveness and universality of UDL across diverse datasets and IR models, surpassing state-of-the-art methods in zero-shot cases. The code for reproducibility is available at https://github.com/eoduself/UDL

CDFL: Efficient Federated Human Activity Recognition using Contrastive Learning and Deep Clustering

Jul 17, 2024

Abstract: In the realm of ubiquitous computing, Human Activity Recognition (HAR) is vital for the automation and intelligent identification of human actions through data from diverse sensors. However, traditional machine learning approaches, which aggregate data on a central server for centralized processing, are memory-intensive and raise privacy concerns. Federated Learning (FL) has emerged as a solution: it trains a global model collaboratively across multiple devices, which exchange their local model parameters instead of their local data. However, in realistic settings, sensor data on devices is not independent and identically distributed (Non-IID). This means that the activity data recorded by most devices is sparse, and the sensor data distribution for each client may be inconsistent. As a result, typical FL frameworks in heterogeneous environments suffer from slow convergence and poor performance due to deviation of the global model's objective from the global objective. Most FL methods applied to HAR are either designed for overly ideal scenarios without considering the Non-IID problem or present privacy and scalability concerns. This work addresses these challenges by proposing CDFL, an efficient federated learning framework for image-based HAR. CDFL efficiently selects a representative set of privacy-preserved images using contrastive learning and deep clustering, reduces communication overhead by selecting effective clients for global model updates, and improves global model quality by training on privacy-preserved data. Our comprehensive experiments on three public datasets, namely Stanford40, PPMI, and VOC2012, demonstrate the superiority of CDFL in terms of performance, convergence rate, and bandwidth usage compared to state-of-the-art approaches.

Learned 3D Shape Representations Using Fused Geometrically Augmented Images: Application to Facial Expression and Action Unit Detection

Apr 08, 2019

Abstract: This paper proposes an approach to learn generic multi-modal mesh surface representations using a novel scheme for fusing texture and geometric data. Our approach defines an inverse mapping between different geometric descriptors computed on the mesh surface or its down-sampled version, and the corresponding 2D texture image of the mesh, allowing the construction of fused geometrically augmented images (FGAI). This new fused modality enables us to learn feature representations from 3D data in a highly efficient manner by simply employing standard convolutional neural networks in a transfer-learning mode. In contrast to existing methods, the proposed approach is both computationally and memory efficient, preserves intrinsic geometric information, and learns highly discriminative feature representations by effectively fusing shape and texture information at the data level. The efficacy of our approach is demonstrated for the tasks of facial action unit detection and expression classification. Extensive experiments conducted on the Bosphorus and BU-4DFE datasets show that our method produces a significant boost in performance compared to state-of-the-art solutions.
