Bhavika Patel

Unsupervised Hybrid framework for ANomaly Detection (HAND) -- applied to Screening Mammogram

Sep 17, 2024

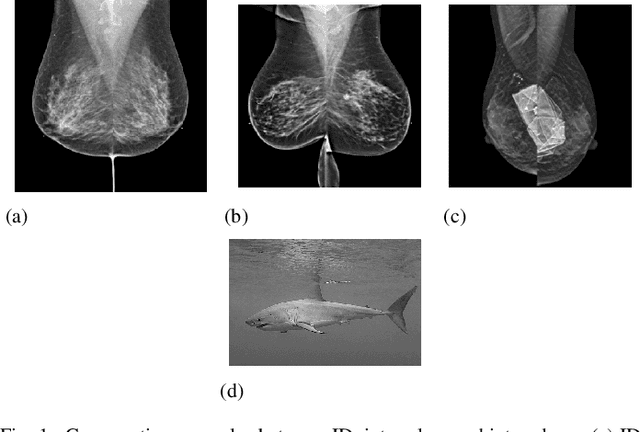

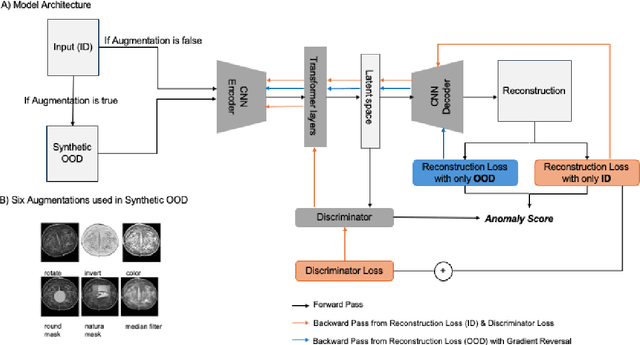

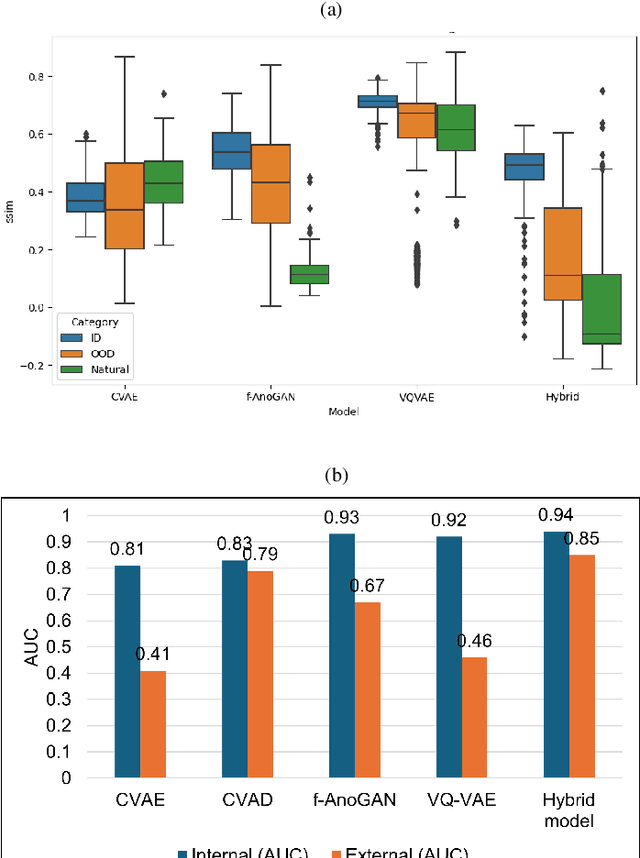

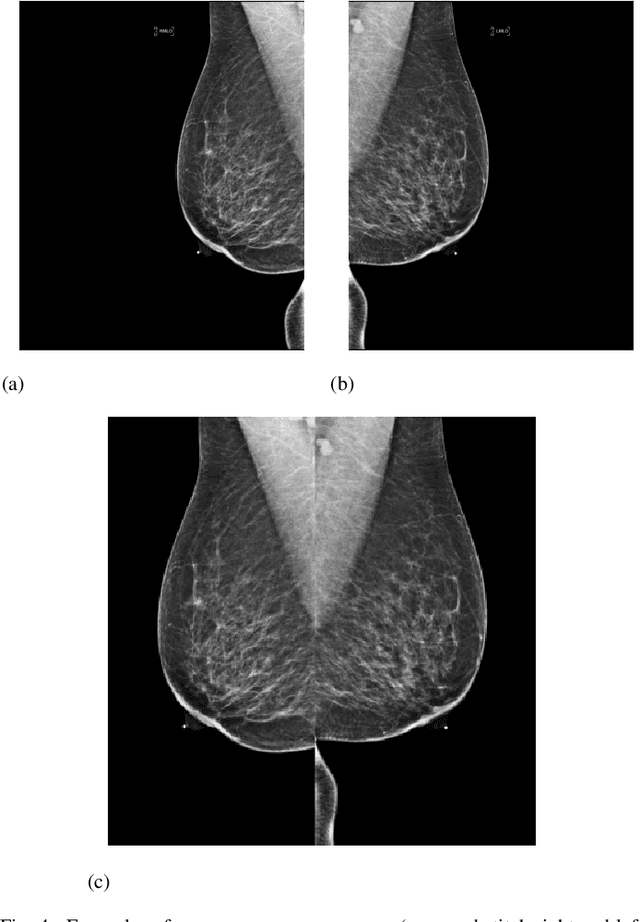

Abstract: Out-of-distribution (OOD) detection is crucial for enhancing the generalization of AI models used in mammogram screening. Given the challenge of limited prior knowledge about OOD samples in external datasets, unsupervised generative learning is a preferable solution: it trains the model to discern the normal characteristics of in-distribution (ID) data. The hypothesis is that during inference, the model reconstructs ID samples accurately, while OOD samples exhibit poorer reconstruction due to their divergence from normality. Inspired by state-of-the-art (SOTA) hybrid architectures combining CNNs and transformers, we developed a novel backbone, HAND, for detecting OOD samples in large-scale digital screening mammogram studies. To boost learning efficiency, we incorporated synthetic OOD samples and a parallel discriminator in the latent space to distinguish between ID and OOD samples. Gradient reversal applied to the OOD reconstruction loss penalizes the model for learning OOD reconstructions. An anomaly score is computed by weighting the reconstruction and discriminator losses. On the internal RSNA mammogram held-out test set and an external, hand-curated Mayo Clinic dataset, the proposed HAND model outperformed encoder-based and GAN-based baselines and, interestingly, also outperformed the hybrid CNN+transformer baselines. Therefore, the proposed HAND pipeline offers an automated, efficient computational solution for domain-specific quality checks in external screening mammograms, yielding actionable insights without direct exposure to the private medical imaging data.
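The abstract says the anomaly score weights the reconstruction and discriminator losses but does not give the formula. The sketch below is one plausible reading, not the authors' implementation: it assumes a mean-squared reconstruction error and a convex combination controlled by a hypothetical weight `alpha`. The function names and the default `alpha = 0.5` are illustrative assumptions.

```python
from typing import Sequence


def reconstruction_error(original: Sequence[float],
                         reconstructed: Sequence[float]) -> float:
    """Mean squared error between flattened pixel values (assumed metric)."""
    if len(original) != len(reconstructed):
        raise ValueError("inputs must have the same length")
    if len(original) == 0:
        raise ValueError("inputs must be non-empty")
    squared = ((o - r) ** 2 for o, r in zip(original, reconstructed))
    return sum(squared) / len(original)


def anomaly_score(recon_loss: float, disc_loss: float,
                  alpha: float = 0.5) -> float:
    """Weighted sum of the two losses.

    alpha is a hypothetical weight in [0, 1]; the abstract does not
    state how the two terms are actually balanced.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * recon_loss + (1.0 - alpha) * disc_loss


if __name__ == "__main__":
    # Toy two-pixel "image": a poor reconstruction raises the score.
    err = reconstruction_error([0.0, 1.0], [0.0, 0.0])
    print(anomaly_score(err, disc_loss=1.0))
```

Under this reading, a sample with a higher score is more likely to be OOD; in practice the threshold and the weight would need to be tuned on held-out data.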

SD-CNN: a Shallow-Deep CNN for Improved Breast Cancer Diagnosis

Oct 26, 2018

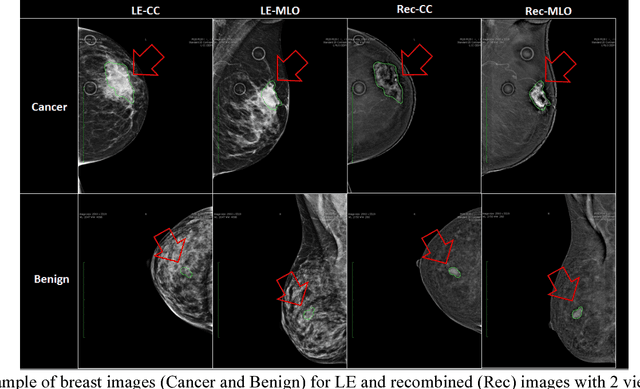

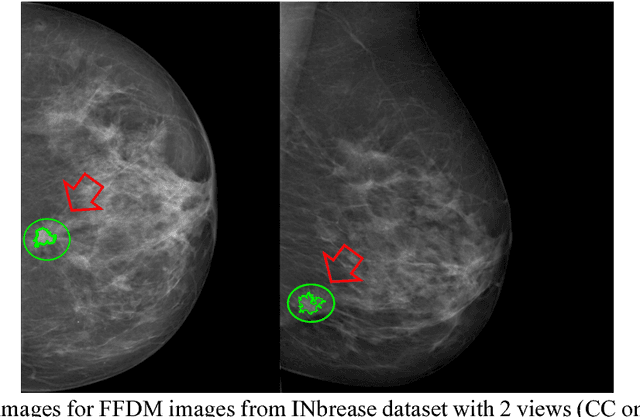

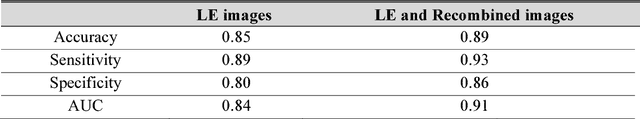

Abstract: Breast cancer is the second leading cause of cancer death among women worldwide. Nevertheless, it is also one of the most treatable malignancies if detected early. Screening for breast cancer with digital mammography (DM) has been widely used. However, it demonstrates limited sensitivity for women with dense breasts. An emerging technology in the field is contrast-enhanced digital mammography (CEDM), which includes a low-energy (LE) image similar to DM and a recombined image that leverages tumor neoangiogenesis, similar to breast magnetic resonance imaging (MRI). CEDM has shown better diagnostic accuracy than DM. While promising, CEDM is not yet widely available across medical centers. In this research, we propose a Shallow-Deep Convolutional Neural Network (SD-CNN), in which a shallow CNN is developed to derive "virtual" recombined images from LE images, and a deep CNN is employed to extract novel features from LE, recombined, or "virtual" recombined images for ensemble models that classify cases as benign vs. cancer. To evaluate the validity of our approach, we first develop a deep CNN using 49 CEDM cases collected from Mayo Clinic to show the contribution of recombined images to improved breast cancer diagnosis (0.86 accuracy using LE imaging vs. 0.90 accuracy using both LE and recombined imaging). We then develop a shallow CNN using the same 49 CEDM cases to learn the nonlinear mapping from LE to recombined images. Next, we use 69 DM cases collected from the hospital located at Zhejiang University, China, to generate "virtual" recombined images. Using DM alone provides 0.91 accuracy, whereas SD-CNN improves the diagnostic accuracy to 0.95.
