Beth Goldberg

AI and the Future of Digital Public Squares

Dec 13, 2024

Abstract: Two substantial technological advances have reshaped the public square in recent decades: first with the advent of the internet and second with the recent introduction of large language models (LLMs). LLMs offer opportunities for a paradigm shift towards more decentralized, participatory online spaces that can be used to facilitate deliberative dialogues at scale, but also create risks of exacerbating societal schisms. Here, we explore four applications of LLMs to improve digital public squares: collective dialogue systems, bridging systems, community moderation, and proof-of-humanity systems. Building on the input from over 70 civil society experts and technologists, we argue that LLMs both afford promising opportunities to shift the paradigm for conversations at scale and pose distinct risks for digital public squares. We lay out an agenda for future research and investments in AI that will strengthen digital public squares and safeguard against potential misuses of AI.

Generative AI Misuse: A Taxonomy of Tactics and Insights from Real-World Data

Jun 19, 2024

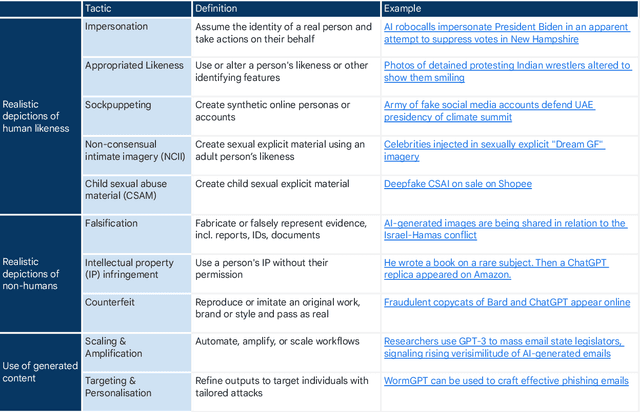

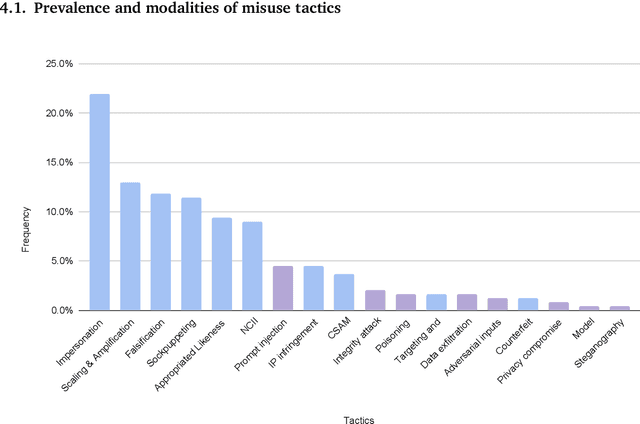

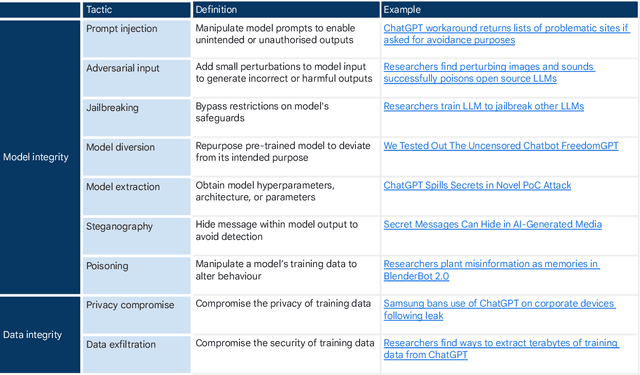

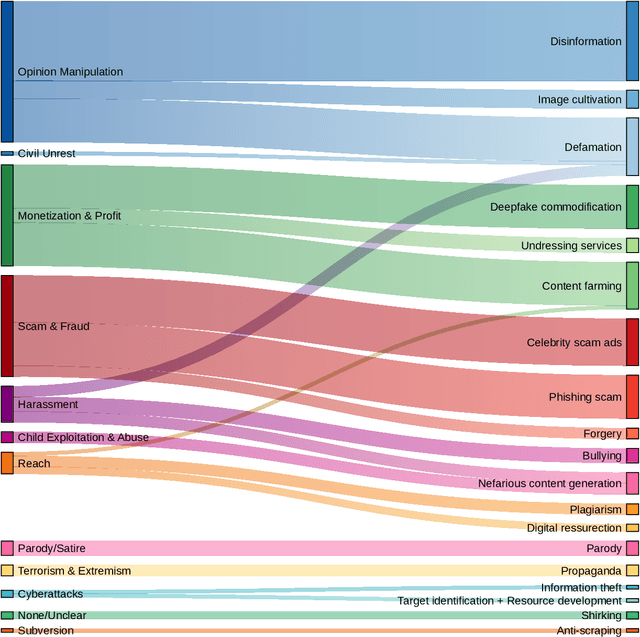

Abstract: Generative, multimodal artificial intelligence (GenAI) offers transformative potential across industries, but its misuse poses significant risks. Prior research has shed light on the potential of advanced AI systems to be exploited for malicious purposes. However, we still lack a concrete understanding of how GenAI models are specifically exploited or abused in practice, including the tactics employed to inflict harm. In this paper, we present a taxonomy of GenAI misuse tactics, informed by existing academic literature and a qualitative analysis of approximately 200 observed incidents of misuse reported between January 2023 and March 2024. Through this analysis, we illuminate key and novel patterns in misuse during this time period, including potential motivations, strategies, and how attackers leverage and abuse system capabilities across modalities (e.g. image, text, audio, video) in the wild.

The Influencer Next Door: How Misinformation Creators Use GenAI

May 22, 2024

Abstract: Advances in generative AI (GenAI) have raised concerns about detecting and discerning AI-generated content from human-generated content. Most existing literature assumes a paradigm where 'expert' organized disinformation creators and flawed AI models deceive 'ordinary' users. Based on longitudinal ethnographic research with misinformation creators and consumers between 2022 and 2023, we instead find that GenAI supports bricolage work, where non-experts increasingly use GenAI to remix, repackage, and (re)produce content to meet their personal needs and desires. This research yielded four key findings: First, participants primarily used GenAI for creation, rather than truth-seeking. Second, a spreading 'influencer millionaire' narrative drove participants to become content creators, using GenAI as a productivity tool to generate a volume of (often misinformative) content. Third, GenAI lowered the barrier to entry for content creation across modalities, enticing consumers to become creators and significantly increasing existing creators' output. Finally, participants used GenAI to learn and deploy marketing tactics to expand engagement and monetize their content. We argue for shifting analysis from the public as consumers of AI content to bricoleurs who use GenAI creatively, often without a detailed understanding of its underlying technology. We analyze how these understudied emergent uses of GenAI produce new or accelerated misinformation harms, and their implications for AI products, platforms and policies.

New contexts, old heuristics: How young people in India and the US trust online content in the age of generative AI

May 03, 2024

Abstract: We conducted an in-person ethnography in India and the US to investigate how young people (18-24) trusted online content, with a focus on generative AI (GenAI). We had four key findings about how young people use GenAI and determine what to trust online. First, when online, we found participants fluidly shifted between mindsets and emotional states, which we term "information modes." Second, these information modes shaped how and why participants trust GenAI and how they applied literacy skills. In the modes where they spent most of their time, they eschewed literacy skills. Third, with the advent of GenAI, participants imported existing trust heuristics from familiar online contexts into their interactions with GenAI. Fourth, although study participants had reservations about GenAI, they saw it as a requisite tool to adopt to keep up with the times. Participants valued efficiency above all else, and used GenAI to further their goals quickly at the expense of accuracy. Our findings suggest that young people spend the majority of their time online not concerned with truth because they are seeking only to pass the time. As a result, literacy interventions should be designed to intervene at the right time, to match users' distinct information modes, and to work with their existing fact-checking practices.
