Bert de Vries

Spike-Timing-Dependent Plasticity for Bernoulli Message Passing

Dec 19, 2025

Abstract: Bayesian inference provides a principled framework for understanding brain function, while neural activity in the brain is inherently spike-based. This paper bridges these two perspectives by designing spiking neural networks that simulate Bayesian inference through message passing for Bernoulli messages. To train the networks, we employ spike-timing-dependent plasticity, a biologically plausible mechanism for synaptic plasticity which is based on the Hebbian rule. Our results demonstrate that the network's performance closely matches the true numerical solution. We further demonstrate the versatility of our approach by implementing a factor graph example from coding theory, illustrating signal transmission over an unreliable channel.
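As a point of reference for the "true numerical solution" mentioned above, the following is a minimal sketch of exact sum-product updates for Bernoulli messages, applied to a toy coding example. It is not the paper's spiking network. The function names, the repetition code, and the binary symmetric channel are assumptions chosen for illustration.

```python
def equality_node(p1: float, p2: float) -> float:
    """Sum-product output of an equality node for two Bernoulli messages.

    Each argument is the probability that the shared bit equals 1.
    """
    num = p1 * p2
    den = num + (1.0 - p1) * (1.0 - p2)
    if den == 0.0:
        raise ValueError("messages are contradictory (zero normalizer)")
    return num / den


def bsc_message(y: int, flip_prob: float) -> float:
    """Normalized message P(x = 1) from observing y through a binary
    symmetric channel that flips the bit with probability flip_prob."""
    return 1.0 - flip_prob if y == 1 else flip_prob


def decode_repetition(received, flip_prob: float, prior: float = 0.5) -> float:
    """Posterior P(x = 1) when the same bit is sent several times
    (hypothetical repetition code, used only as an example)."""
    belief = prior
    for y in received:
        belief = equality_node(belief, bsc_message(y, flip_prob))
    return belief
```

For instance, `decode_repetition([1, 1, 0], flip_prob=0.1)` combines three noisy copies of one bit; one of the two observed ones cancels against the observed zero, so the posterior equals that of a single observed one.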

A Spiking Neural Network Implementation of Gaussian Belief Propagation

Dec 11, 2025

Abstract: Bayesian inference offers a principled account of information processing in natural agents. However, it remains an open question how neural mechanisms perform their abstract operations. We investigate a hypothesis where a distributed form of Bayesian inference, namely message passing on factor graphs, is performed by a simulated network of leaky integrate-and-fire neurons. Specifically, we perform Gaussian belief propagation by encoding messages that come into factor nodes as spike-based signals, propagating these signals through a spiking neural network (SNN) and decoding the spike-based signal back to an outgoing message. Three core linear operations, equality (branching), addition, and multiplication, are realized in networks of leaky integrate-and-fire models. Validation against the standard sum-product algorithm shows accurate message updates, while applications to Kalman filtering and Bayesian linear regression demonstrate the framework's potential for both static and dynamic inference tasks. Our results provide a step toward biologically grounded, neuromorphic implementations of probabilistic reasoning.
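The three linear operations named above have simple closed-form sum-product updates for scalar Gaussian messages, which is the kind of baseline the spiking implementation would be validated against. The sketch below shows those updates only; it contains no spiking neurons. The `Gaussian` class, the function names, the restriction to scalars, and the reading of "multiplication" as scaling by a known constant are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gaussian:
    """Scalar Gaussian message in mean/variance form (illustrative type)."""
    mean: float
    var: float


def equality(a: Gaussian, b: Gaussian) -> Gaussian:
    """Equality (branching) node: multiply two Gaussian messages.
    Precisions add; means combine weighted by precision."""
    precision = 1.0 / a.var + 1.0 / b.var
    mean = (a.mean / a.var + b.mean / b.var) / precision
    return Gaussian(mean, 1.0 / precision)


def addition(a: Gaussian, b: Gaussian) -> Gaussian:
    """Addition node z = x + y for independent x and y."""
    return Gaussian(a.mean + b.mean, a.var + b.var)


def multiplication(a: Gaussian, gain: float) -> Gaussian:
    """Multiplication node z = gain * x, with gain a known constant."""
    return Gaussian(gain * a.mean, gain * gain * a.var)


def kalman_step(prior: Gaussian, obs: float, a: float, q: float, r: float) -> Gaussian:
    """One scalar Kalman filter step built from the three nodes:
    predict x' = a * x + noise(q), then fuse with observation noise r."""
    predicted = addition(multiplication(prior, a), Gaussian(0.0, q))
    return equality(predicted, Gaussian(obs, r))
```

Composing the nodes as in `kalman_step` illustrates why these three operations suffice for linear Gaussian state estimation.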

Expected Free Energy-based Planning as Variational Inference

Apr 21, 2025

Abstract: We address the problem of planning under uncertainty, where an agent must choose actions that not only achieve desired outcomes but also reduce uncertainty. Traditional methods often treat exploration and exploitation as separate objectives, lacking a unified inferential foundation. Active inference, grounded in the Free Energy Principle, offers such a foundation by minimizing Expected Free Energy (EFE), a cost function that combines utility with epistemic drives like ambiguity resolution and novelty seeking. However, the computational burden of EFE minimization has remained a major obstacle to its scalability. In this paper, we show that EFE-based planning arises naturally from minimizing a variational free energy functional on a generative model augmented with preference and epistemic priors. This result reinforces theoretical consistency with the Free Energy Principle, by casting planning itself as variational inference. Our formulation yields optimal policies that jointly support goal achievement and information gain, while incorporating a complexity term that accounts for bounded computational resources. This unifying framework connects and extends existing methods, enabling scalable, resource-aware implementations of active inference agents.
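To make the EFE cost function concrete, here is a minimal sketch of the commonly used risk-plus-ambiguity decomposition for a single step in a discrete model. It does not reproduce the paper's variational derivation, and it omits the novelty and complexity terms. The function name, the argument layout (`likelihood[s][o]` as p(o | s)), and the requirement that preferences be strictly positive are assumptions for this example.

```python
import math


def expected_free_energy(state_belief, likelihood, preferred_obs):
    """Risk + ambiguity for one time step of a discrete model.

    state_belief[s]  : predicted state distribution q(s) under a policy
    likelihood[s][o] : observation model p(o | s)
    preferred_obs[o] : preference prior over observations (strictly positive)
    """
    n_states = len(state_belief)
    n_obs = len(preferred_obs)
    # Predicted observation distribution q(o) = sum_s q(s) p(o | s)
    predicted = [
        sum(state_belief[s] * likelihood[s][o] for s in range(n_states))
        for o in range(n_obs)
    ]
    # Risk: KL divergence between predicted and preferred observations
    risk = sum(
        q * math.log(q / p) for q, p in zip(predicted, preferred_obs) if q > 0.0
    )
    # Ambiguity: expected entropy of the observation model
    ambiguity = -sum(
        state_belief[s] * sum(l * math.log(l) for l in likelihood[s] if l > 0.0)
        for s in range(n_states)
    )
    return risk + ambiguity
```

A policy that leads to states with a noisy observation model incurs a higher ambiguity term, which is one way the epistemic drive enters the cost.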

Improved Depth Estimation of Bayesian Neural Networks

Oct 15, 2024

Abstract: This paper proposes improvements over earlier work by Nazareth and Blei (2022) for estimating the depth of Bayesian neural networks. Here, we propose a discrete truncated normal distribution over the network depth to independently learn its mean and variance. Posterior distributions are inferred by minimizing the variational free energy, which balances the model complexity and accuracy. Our method improves test accuracy on the spiral data set and reduces the variance in posterior depth estimates.
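One plausible way to build a discrete truncated normal over depth is sketched below: evaluate an unnormalized normal density at the integer depths 1 to `max_depth` and renormalize. This construction is an assumption and the paper's exact definition may differ. The function name and parameters are hypothetical.

```python
import math


def discrete_truncated_normal(mean: float, std: float, max_depth: int):
    """Probability mass over depths 1..max_depth.

    Assumed construction: normal log-density evaluated at each integer
    depth, shifted by its maximum for numerical stability, then normalized.
    """
    if std <= 0.0 or max_depth < 1:
        raise ValueError("std must be positive and max_depth at least 1")
    log_weights = [-0.5 * ((d - mean) / std) ** 2 for d in range(1, max_depth + 1)]
    shift = max(log_weights)
    weights = [math.exp(lw - shift) for lw in log_weights]
    total = sum(weights)
    return [w / total for w in weights]
```

Because `mean` and `std` are separate parameters, the location and spread of the depth distribution can be adjusted independently, which matches the motivation stated in the abstract.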

Toward Design of Synthetic Active Inference Agents by Mere Mortals

Jul 26, 2023

Abstract: The theoretical properties of active inference agents are impressive, but how do we realize effective agents in working hardware and software on edge devices? This is an interesting problem because the computational load for policy exploration explodes exponentially, while the computational resources of edge devices are very limited. In this paper, we discuss the necessary features for a software toolbox that supports a competent non-expert engineer in developing working active inference agents. We introduce a toolbox-in-progress that aims to accelerate the democratization of active inference agents, much as TensorFlow propelled applications of deep learning technology.

Realising Synthetic Active Inference Agents, Part I: Epistemic Objectives and Graphical Specification Language

Jun 13, 2023

Abstract: The Free Energy Principle (FEP) is a theoretical framework for describing how (intelligent) systems self-organise into coherent, stable structures by minimising a free energy functional. Active Inference (AIF) is a corollary of the FEP that specifically details how systems that are able to plan for the future (agents) function by minimising particular free energy functionals that incorporate information-seeking components. This paper is the first in a series of two where we derive a synthetic version of AIF on free-form factor graphs. The present paper focuses on deriving a local version of the free energy functionals used for AIF. This enables us to construct a version of AIF which applies to arbitrary graphical models and interfaces with prior work on message passing algorithms. The resulting messages are derived in our companion paper. We also identify a gap in the graphical notation used for factor graphs. While factor graphs are great at expressing a generative model, they have so far been unable to specify the full optimisation problem including constraints. To solve this problem we develop Constrained Forney-style Factor Graph (CFFG) notation which permits a fully graphical description of variational inference objectives. We then proceed to show how CFFGs can be used to reconstruct prior algorithms for AIF as well as derive new ones. The latter is demonstrated by deriving an algorithm that permits direct policy inference for AIF agents, circumventing a long-standing scaling issue that has so far hindered the application of AIF in industrial settings. We demonstrate our algorithm on the classic T-maze task and show that it reproduces the information-seeking behaviour that is a hallmark feature of AIF.

Automating Model Comparison in Factor Graphs

Jun 09, 2023

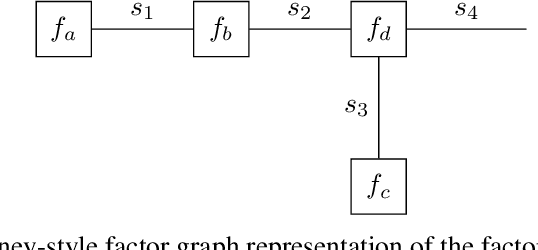

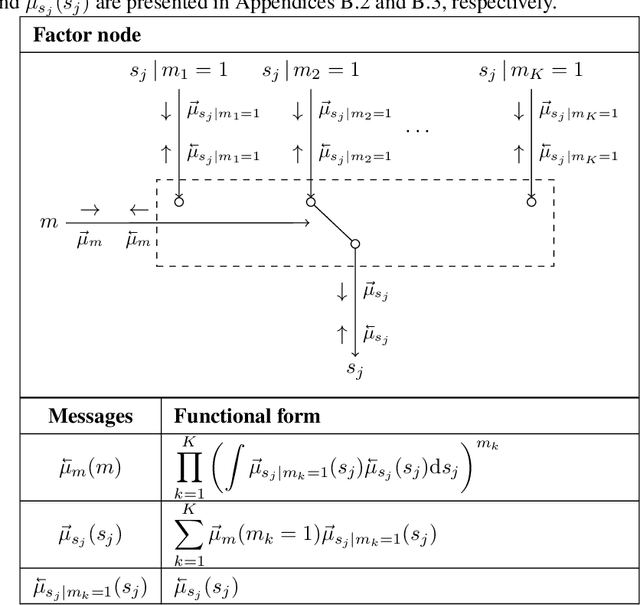

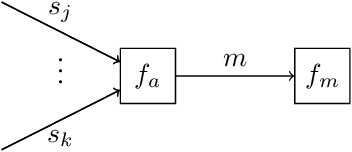

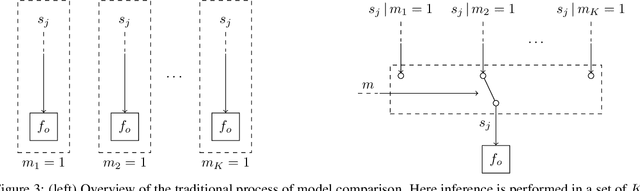

Abstract: Bayesian state and parameter estimation have been automated effectively in the literature. However, this has not yet been the case for model comparison, which therefore still requires error-prone and time-consuming manual derivations. As a result, model comparison is often overlooked, despite its importance. This paper efficiently automates Bayesian model averaging, selection, and combination by message passing on a Forney-style factor graph with a custom mixture node. Parameter and state inference, and model comparison can then be executed simultaneously using message passing with scale factors. This approach shortens the model design cycle and allows for straightforward extension to hierarchical and temporal model priors to accommodate the modeling of complicated time-varying processes.
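The quantities that model averaging and selection rely on can be sketched directly from per-model log evidences. The code below shows only that final computation, not the paper's mixture node or its scale-factor message passing. The function names and the assumption that log evidences are already available are illustrative.

```python
import math


def model_posterior(log_evidences, priors=None):
    """Posterior model probabilities from log evidences and model priors.

    Uses a max-shift before exponentiating for numerical stability.
    """
    n = len(log_evidences)
    if priors is None:
        priors = [1.0 / n] * n  # assumed uniform prior over models
    log_joint = [le + math.log(p) for le, p in zip(log_evidences, priors)]
    shift = max(log_joint)
    weights = [math.exp(lj - shift) for lj in log_joint]
    total = sum(weights)
    return [w / total for w in weights]


def select_model(posterior):
    """Model selection: index of the most probable model."""
    return max(range(len(posterior)), key=lambda i: posterior[i])


def averaged_prediction(predictions, posterior):
    """Model averaging: posterior-weighted mean of per-model predictions."""
    return sum(pred * w for pred, w in zip(predictions, posterior))
```

For example, two models whose evidences differ by a factor of three receive posterior probabilities of 0.75 and 0.25 under a uniform prior.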

Realising Synthetic Active Inference Agents, Part II: Variational Message Updates

Jun 05, 2023

Abstract: The Free Energy Principle (FEP) describes (biological) agents as minimising a variational Free Energy (FE) with respect to a generative model of their environment. Active Inference (AIF) is a corollary of the FEP that describes how agents explore and exploit their environment by minimising an expected FE objective. In two related papers, we describe a scalable, epistemic approach to synthetic AIF agents, by message passing on free-form Forney-style Factor Graphs (FFGs). A companion paper (part I) introduces a Constrained FFG (CFFG) notation that visually represents (generalised) FE objectives for AIF. The current paper (part II) derives message passing algorithms that minimise (generalised) FE objectives on a CFFG by variational calculus. A comparison between simulated Bethe and generalised FE agents illustrates how synthetic AIF induces epistemic behaviour on a T-maze navigation task. With a full message passing account of synthetic AIF agents, it becomes possible to derive and reuse message updates across models and move closer to industrial applications of synthetic AIF.

Principled Pruning of Bayesian Neural Networks through Variational Free Energy Minimization

Oct 17, 2022

Abstract: Bayesian model reduction provides an efficient approach for comparing the performance of all nested sub-models of a model, without re-evaluating any of these sub-models. Until now, Bayesian model reduction has been applied mainly in the computational neuroscience community. In this paper, we formulate and apply Bayesian model reduction to perform principled pruning of Bayesian neural networks, based on variational free energy minimization. This novel parameter pruning scheme solves the shortcomings of many current state-of-the-art pruning methods that are used by the signal processing community. The proposed approach has a clear stopping criterion and minimizes the same objective that is used during training. Next to these theoretical benefits, our experiments indicate better model performance in comparison to state-of-the-art pruning schemes.

AIDA: An Active Inference-based Design Agent for Audio Processing Algorithms

Jan 10, 2022

Abstract: In this paper we present AIDA, which is an active inference-based agent that iteratively designs a personalized audio processing algorithm through situated interactions with a human client. The target application of AIDA is to propose on the spot the most interesting alternative values for the tuning parameters of a hearing aid (HA) algorithm, whenever an HA client is not satisfied with their HA performance. AIDA interprets searching for the "most interesting alternative" as an issue of optimal (acoustic) context-aware Bayesian trial design. In computational terms, AIDA is realized as an active inference-based agent with an Expected Free Energy criterion for trial design. This type of architecture is inspired by neuro-economic models on efficient (Bayesian) trial design in brains and implies that AIDA comprises generative probabilistic models for acoustic signals and user responses. We propose a novel generative model for acoustic signals as a sum of time-varying auto-regressive filters and a user response model based on a Gaussian Process Classifier. The full AIDA agent has been implemented in a factor graph for the generative model and all tasks (parameter learning, acoustic context classification, trial design, etc.) are realized by variational message passing on the factor graph. All verification and validation experiments and demonstrations are freely accessible at our GitHub repository.
