Beom Jun Woo

HILCodec: High Fidelity and Lightweight Neural Audio Codec

May 08, 2024

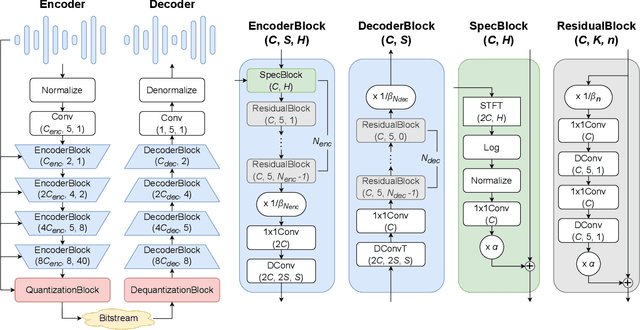

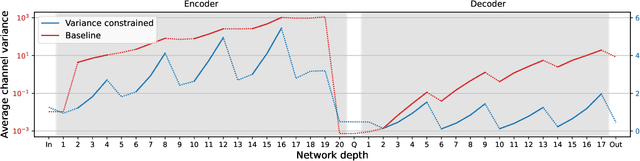

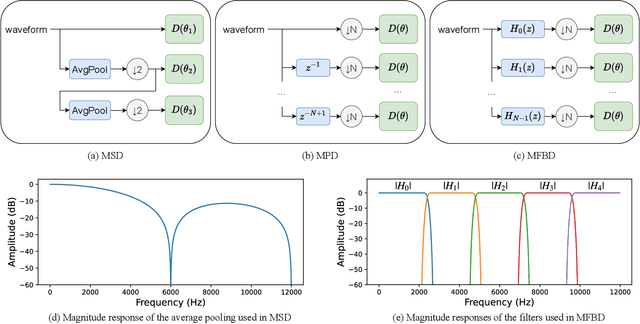

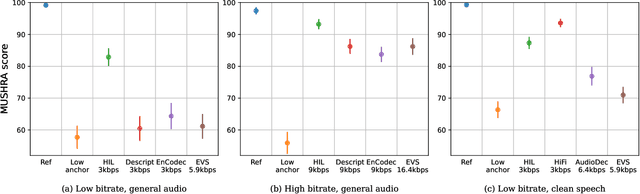

Abstract: Recent advances in end-to-end neural audio codecs enable compressing audio at very low bitrates while reconstructing the output audio with high fidelity. Nonetheless, such improvements often come at the cost of increased model complexity. In this paper, we identify and address the problems of existing neural audio codecs. We show that the performance of Wave-U-Net does not increase consistently as the network depth increases. We analyze the root cause of this phenomenon and suggest a variance-constrained design. We also reveal various distortions in previous waveform-domain discriminators and propose a novel distortion-free discriminator. The resulting model, HILCodec, is a real-time streaming audio codec that demonstrates state-of-the-art quality across various bitrates and audio types.

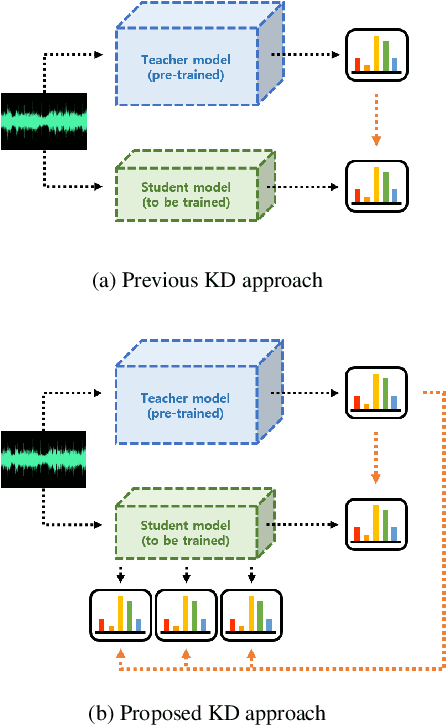

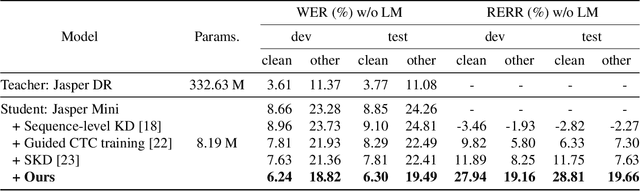

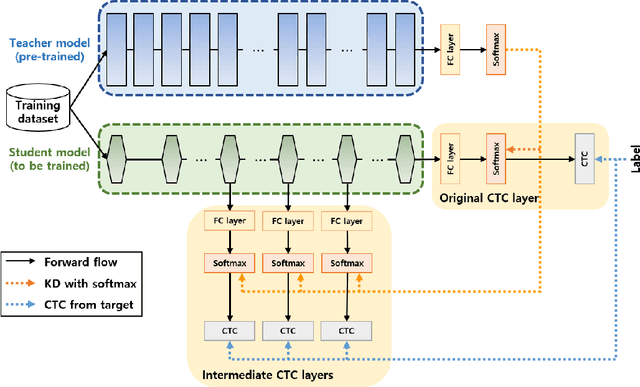

Inter-KD: Intermediate Knowledge Distillation for CTC-Based Automatic Speech Recognition

Nov 28, 2022

Abstract: Recent advances in deep learning have brought considerable improvement to end-to-end speech recognition, simplifying the traditional pipeline while producing promising results. Among end-to-end models, the connectionist temporal classification (CTC)-based model has attracted research interest due to its non-autoregressive nature. However, such CTC models incur a heavy computational cost to achieve outstanding performance. To mitigate the computational burden, we propose a simple yet effective knowledge distillation (KD) method for the CTC framework, namely Inter-KD, that additionally transfers the teacher's knowledge to the intermediate CTC layers of the student network. Experimental results on LibriSpeech verify that Inter-KD outperforms conventional KD methods. Without using any language model (LM) or data augmentation, Inter-KD reduces the word error rate (WER) from 8.85% to 6.30% on test-clean.
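
The idea of distilling into intermediate layers as well as the final layer can be illustrated with a minimal sketch. The abstract does not state the exact loss, so everything here is an assumption: a frame-level KL divergence as the distillation term, a simple average over intermediate layers, and the hypothetical names `inter_kd_loss` and `inter_weight`. The CTC loss that the student would also be trained with is omitted.

```python
import math

def kl_divergence(teacher, student, eps=1e-12):
    """KL(teacher || student) for one frame's token distributions."""
    return sum(t * math.log((t + eps) / (s + eps))
               for t, s in zip(teacher, student))

def frame_kd_loss(teacher_frames, student_frames):
    """Average the per-frame KL divergence over all frames."""
    total = sum(kl_divergence(t, s)
                for t, s in zip(teacher_frames, student_frames))
    return total / len(teacher_frames)

def inter_kd_loss(teacher_frames, student_final, student_intermediates,
                  inter_weight=0.5):
    """Blend the final-layer KD term with the mean intermediate-layer KD term.

    student_intermediates holds one list of frame distributions per
    intermediate CTC layer. inter_weight is an assumed hyperparameter.
    """
    final_term = frame_kd_loss(teacher_frames, student_final)
    if not student_intermediates:
        return final_term
    inter_term = sum(frame_kd_loss(teacher_frames, frames)
                     for frames in student_intermediates)
    inter_term /= len(student_intermediates)
    return (1.0 - inter_weight) * final_term + inter_weight * inter_term
```

In this sketch the loss is zero when every student output matches the teacher and grows as any intermediate layer drifts from it, which is the pressure that intermediate-layer distillation is meant to add.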

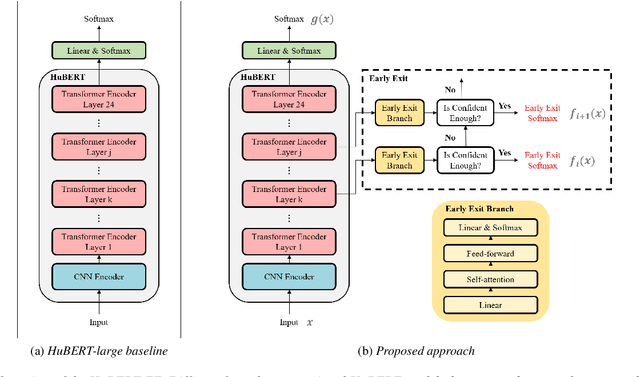

HuBERT-EE: Early Exiting HuBERT for Efficient Speech Recognition

Apr 13, 2022

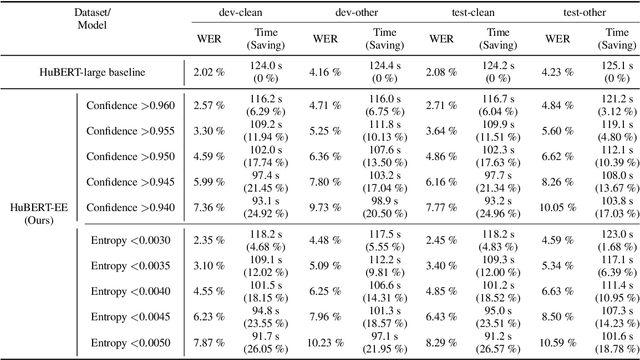

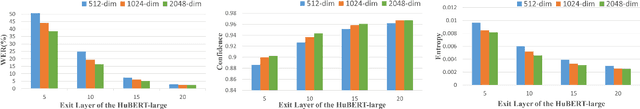

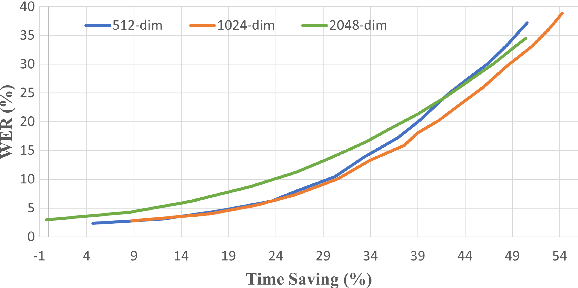

Abstract: Pre-training with self-supervised models, such as Hidden-unit BERT (HuBERT) and wav2vec 2.0, has brought significant improvements in automatic speech recognition (ASR). However, these models usually incur a high computational cost to achieve outstanding performance, which slows down inference. To improve model efficiency, we propose an early exit scheme for ASR, namely HuBERT-EE, that allows the model to stop inference dynamically. In HuBERT-EE, multiple early exit branches are added at the intermediate layers, and each branch is used to decide whether the model can exit early with that branch's prediction. Experimental results on the LibriSpeech dataset show that HuBERT-EE can accelerate the inference of a large-scale HuBERT model while balancing the trade-off between word error rate (WER) and latency.
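
The early exit control flow described above can be sketched roughly as follows. The abstract does not say how a branch decides to exit, so the criterion used here (mean per-frame entropy below a threshold) is an assumption, as are the names `early_exit_inference`, `layers`, `branches`, and `threshold`. Layers and branches are plain callables standing in for real network modules.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of one probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def mean_frame_entropy(frame_probs):
    """Average entropy over all frames of a branch's output."""
    return sum(entropy(p) for p in frame_probs) / len(frame_probs)

def early_exit_inference(layers, branches, features, threshold):
    """Run layers in order and stop at the first confident branch.

    layers:   list of callables mapping hidden state -> hidden state.
    branches: list parallel to layers; each entry is None or a callable
              mapping hidden state -> list of per-frame distributions.
              The last entry must be a branch so a prediction always exists.
    Returns (frame_probs, index of the layer where inference stopped).
    """
    if len(layers) != len(branches) or branches[-1] is None:
        raise ValueError("need one branch slot per layer and a final branch")
    hidden = features
    frame_probs = None
    for index, (layer, branch) in enumerate(zip(layers, branches)):
        hidden = layer(hidden)
        if branch is None:
            continue
        frame_probs = branch(hidden)
        # Assumed exit rule: low average entropy means a confident output.
        if mean_frame_entropy(frame_probs) < threshold:
            return frame_probs, index
    return frame_probs, len(layers) - 1
```

A higher `threshold` makes the sketch exit earlier (lower latency, possibly higher WER), while a threshold of zero always runs the full stack, which mirrors the accuracy and latency trade-off the abstract mentions.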
