HuBERT-EE: Early Exiting HuBERT for Efficient Speech Recognition

Apr 13, 2022

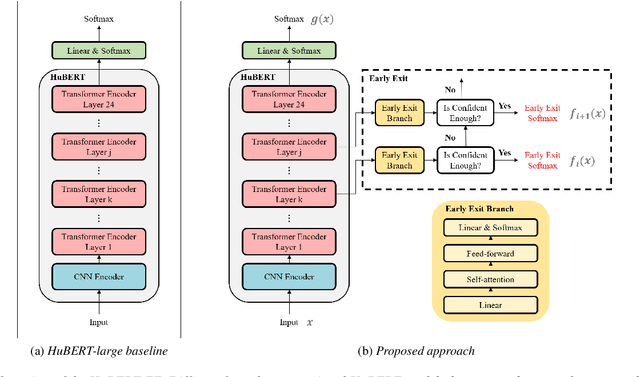

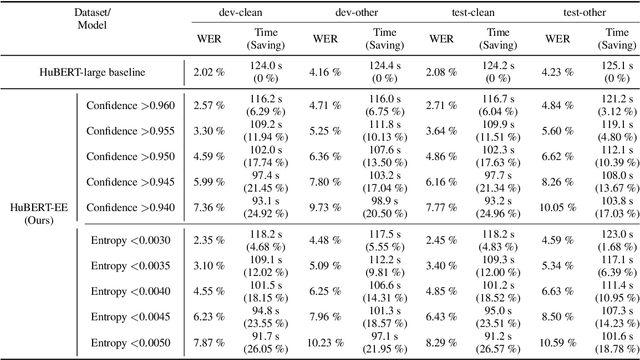

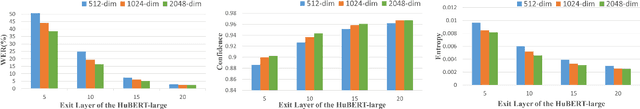

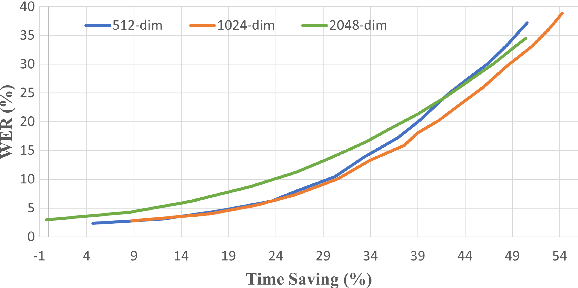

Self-supervised pre-training, as in Hidden-unit BERT (HuBERT) and wav2vec 2.0, has brought significant improvements in automatic speech recognition (ASR). However, these models usually incur a high computational cost to achieve outstanding performance, which slows down inference. To improve model efficiency, we propose HuBERT-EE, an early exit scheme for ASR that allows the model to stop inference dynamically. In HuBERT-EE, multiple early exit branches are added at intermediate layers, and each branch decides whether inference can exit early with that branch's prediction. Experimental results on the LibriSpeech dataset show that HuBERT-EE can accelerate the inference of a large-scale HuBERT model while balancing the trade-off between word error rate (WER) and latency.
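The abstract does not say how an exit branch decides to stop, so the following is only a minimal sketch of the general early-exit idea under stated assumptions. Each branch is assumed to be a CTC-style head that produces per-frame logits. The exit criterion is assumed to be the mean top-1 posterior across frames compared against a threshold. This confidence rule is a stand-in chosen for illustration, and HuBERT-EE's actual criterion may differ. The names `early_exit_forward`, `branches`, and `threshold`, as well as the toy layers, are hypothetical.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# A hidden state is a list of per-frame vectors (frames x dims).
Hidden = List[List[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one frame's logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ctc_greedy_decode(probs: List[List[float]], blank: int = 0) -> List[int]:
    """Greedy CTC decoding: per-frame argmax, merge repeats, drop blanks."""
    tokens: List[int] = []
    prev = None
    for frame in probs:
        tok = max(range(len(frame)), key=frame.__getitem__)
        if tok != prev and tok != blank:
            tokens.append(tok)
        prev = tok
    return tokens


def early_exit_forward(
    x: Hidden,
    layers: List[Callable[[Hidden], Hidden]],
    branches: Dict[int, Callable[[Hidden], Hidden]],
    threshold: float,
) -> Tuple[List[int], int]:
    """Run the encoder layer by layer and stop at the first confident branch.

    `branches` maps a layer index to a hypothetical exit head returning
    per-frame logits. It must include the last layer (the final output head).
    Returns the decoded tokens and the number of layers actually executed.
    """
    last = len(layers) - 1
    if last not in branches:
        raise ValueError("branches must include the final layer's output head")
    h = x
    for i, layer in enumerate(layers):
        h = layer(h)
        head = branches.get(i)
        if head is None:
            continue  # no exit branch attached to this layer
        probs = [softmax(frame) for frame in head(h)]
        # Assumed criterion: mean over frames of the top-1 posterior.
        confidence = sum(max(p) for p in probs) / len(probs)
        if confidence >= threshold or i == last:
            return ctc_greedy_decode(probs), i + 1
    raise AssertionError("unreachable: the final layer always returns")


# Toy usage: 4 hypothetical "layers" that double the features,
# with identity exit heads attached after layers 1 and 3 (0-based).
def double(h: Hidden) -> Hidden:
    return [[2.0 * v for v in frame] for frame in h]


def identity_head(h: Hidden) -> Hidden:
    return h


x = [[0.5, 0.2, 0.1], [0.1, 0.6, 0.2], [0.1, 0.6, 0.2], [0.7, 0.1, 0.1]]
layers = [double] * 4
branches = {1: identity_head, 3: identity_head}

print(early_exit_forward(x, layers, branches, threshold=0.0))   # ([1], 2)
print(early_exit_forward(x, layers, branches, threshold=1.01))  # ([1], 4)
```

With a threshold of 0.0 the first branch always exits after two layers. With an unreachable threshold the model runs all four layers and decodes from the final head. In this sketch, raising `threshold` pushes exits deeper, spending more computation in exchange for (typically) more reliable predictions. Lowering it does the opposite. This mirrors the WER/latency trade-off the abstract describes.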
