Barry Smith

AI Assistants to Enhance and Exploit the PETSc Knowledge Base

Jun 25, 2025

Abstract:Generative AI, especially through large language models (LLMs), is transforming how technical knowledge can be accessed, reused, and extended. PETSc, a widely used numerical library for high-performance scientific computing, has accumulated a rich but fragmented knowledge base over its three decades of development, spanning source code, documentation, mailing lists, GitLab issues, Discord conversations, technical papers, and more. Much of this knowledge remains informal and inaccessible to users and new developers. To activate and utilize this knowledge base more effectively, the PETSc team has begun building an LLM-powered system that combines PETSc content with custom LLM tools -- including retrieval-augmented generation (RAG), reranking algorithms, and chatbots -- to assist users, support developers, and propose updates to formal documentation. This paper presents initial experiences designing and evaluating these tools, focusing on system architecture, using RAG and reranking for PETSc-specific information, evaluation methodologies for various LLMs and embedding models, and user interface design. Leveraging the Argonne Leadership Computing Facility resources, we analyze how LLM responses can enhance the development and use of numerical software, with an initial focus on scalable Krylov solvers. Our goal is to establish an extensible framework for knowledge-centered AI in scientific software, enabling scalable support, enriched documentation, and enhanced workflows for research and development. We conclude by outlining directions for expanding this system into a robust, evolving platform that advances software ecosystems to accelerate scientific discovery.

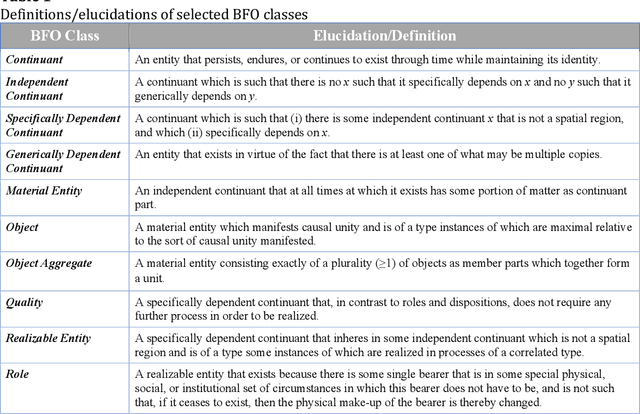

Capabilities

Apr 30, 2024

Abstract:In our daily lives, as in science and in all other domains, we encounter huge numbers of dispositions (tendencies, potentials, powers) which are realized in processes such as sneezing, sweating, shedding dandruff, and on and on. Among this plethora of what we can think of as mere dispositions is a subset of dispositions in whose realizations we have an interest: a car responding well when driven on ice, a rabbit's lungs responding well when it is chased by a wolf, and so on. We call the latter capabilities, and we attempt to provide a robust ontological account of what capabilities are that is of sufficient generality to serve a variety of purposes, for example by providing a useful extension to ontology-based research in areas where capabilities data are currently being collected in siloed fashion.

Middle Architecture Criteria

Apr 27, 2024

Abstract:Mid-level ontologies are used to integrate terminologies and data across disparate domains. There are, however, no clear, defensible criteria for determining whether a given ontology should count as mid-level, because we lack a rigorous characterization of what the middle level of generality is supposed to contain. Attempts to provide such a characterization have failed, we believe, because they have focused on the goal of specifying what is characteristic of those single ontologies that have been advanced as mid-level ontologies. Unfortunately, single ontologies of this sort are generally a mixture of top- and mid-level, and sometimes even of domain-level terms. To gain clarity, we aim to specify the necessary and sufficient conditions for a collection of one or more ontologies to inhabit what we call a mid-level architecture.

Why machines do not understand: A response to Søgaard

Jul 07, 2023

Abstract:Some defenders of so-called 'artificial intelligence' believe that machines can understand language. In particular, Søgaard has argued in this journal for a thesis of this sort, on the basis of the idea (1) that where there is semantics there is also understanding and (2) that machines are not only capable of what he calls 'inferential semantics', but even that they can (with the help of inputs from sensors) 'learn' referential semantics (Søgaard 2022). We show that he goes wrong because he pays insufficient attention to the difference between language as used by humans and the sequences of inert symbols which arise when language is stored on hard drives or in books in libraries.

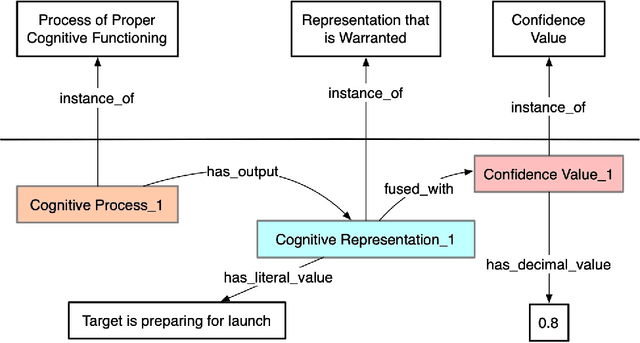

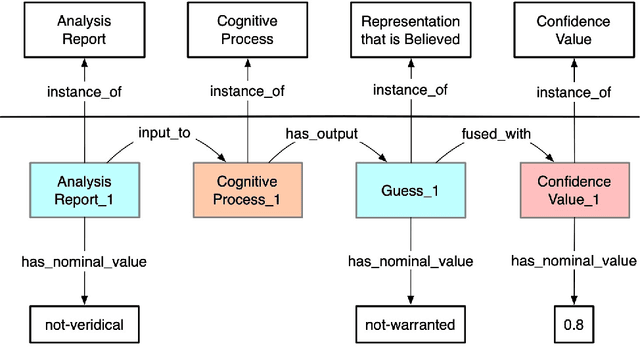

Ontology and Cognitive Outcomes

May 16, 2020

Abstract:The intelligence community relies on human-machine-based analytic strategies that 1) access and integrate vast amounts of information from disparate sources, and 2) continuously process this information, so that 3) a maximally comprehensive understanding of world actors and their behaviors can be developed and updated. Herein we describe an approach to utilizing outcomes-based learning (OBL) to support these efforts that is based on an ontology of the cognitive processes performed by intelligence analysts.

Making AI meaningful again

Jan 09, 2019

Abstract:Artificial intelligence (AI) research enjoyed an initial period of enthusiasm in the 1970s and 80s. But this enthusiasm was tempered by a long interlude of frustration when genuinely useful AI applications failed to be forthcoming. Today, we are experiencing once again a period of enthusiasm, fired above all by the successes of the technology of deep neural networks or deep machine learning. In this paper we draw attention to what we take to be serious problems underlying current views of artificial intelligence encouraged by these successes, especially in the domain of language processing. We then show an alternative approach to language-centric AI, in which we identify a role for philosophy.
