Baris Kayalibay

Filter-Aware Model-Predictive Control

Apr 20, 2023

Abstract:Partially-observable problems pose a trade-off between reducing costs and gathering information. They can be solved optimally by planning in belief space, but that is often prohibitively expensive. Model-predictive control (MPC) takes the alternative approach of using a state estimator to form a belief over the state, and then planning in state space. This ignores potential future observations during planning and, as a result, cannot actively increase or preserve the certainty of its own state estimate. We find a middle ground between planning in belief space and completely ignoring its dynamics by only reasoning about its future accuracy. Our approach, filter-aware MPC, penalises the loss of information by what we call "trackability", the expected error of the state estimator. We show that model-based simulation allows condensing trackability into a neural network, which allows fast planning. In experiments involving visual navigation, realistic every-day environments and a two-link robot arm, we show that filter-aware MPC vastly improves on regular MPC.
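
The idea of penalising plans by trackability can be illustrated with a minimal sketch. This is not the paper's implementation. The single-integrator dynamics, the random-shooting planner, the hand-written `trackability` function (a stand-in for the learned network) and the names `rollout`, `task_cost`, `filter_aware_cost` and `plan` are all assumptions made for illustration. The planner starts from a point estimate `x0`, as a state estimator would provide.

```python
import math
import random


def rollout(x0, controls):
    """Simulate toy dynamics x_{t+1} = x_t + u_t (an assumed model)."""
    states, (x, y) = [], x0
    for ux, uy in controls:
        x, y = x + ux, y + uy
        states.append((x, y))
    return states


def task_cost(states, goal):
    """Sum of Euclidean distances to the goal along the trajectory."""
    return sum(math.hypot(s[0] - goal[0], s[1] - goal[1]) for s in states)


def trackability(state):
    """Hypothetical stand-in for a learned trackability network.

    Returns a high expected estimator error inside a 'dark' band
    (1 < x < 3, |y| < 0.5) and zero elsewhere.
    """
    x, y = state
    return 1.0 if (1.0 < x < 3.0 and abs(y) < 0.5) else 0.0


def filter_aware_cost(x0, controls, goal, weight):
    """Task cost plus a weighted trackability penalty."""
    states = rollout(x0, controls)
    penalty = sum(trackability(s) for s in states)
    return task_cost(states, goal) + weight * penalty


def plan(x0, goal, weight, horizon=8, n_samples=500, seed=0):
    """Random-shooting planner: sample control sequences, keep the cheapest."""
    rng = random.Random(seed)
    best, best_cost = None, float("inf")
    for _ in range(n_samples):
        controls = [(rng.uniform(-1, 1), rng.uniform(-1, 1))
                    for _ in range(horizon)]
        cost = filter_aware_cost(x0, controls, goal, weight)
        if cost < best_cost:
            best, best_cost = controls, cost
    return best
```

Setting `weight=0` in this sketch recovers a planner that ignores the estimator entirely. A positive weight makes trajectories through the band more expensive, so a detour can become the cheaper plan.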

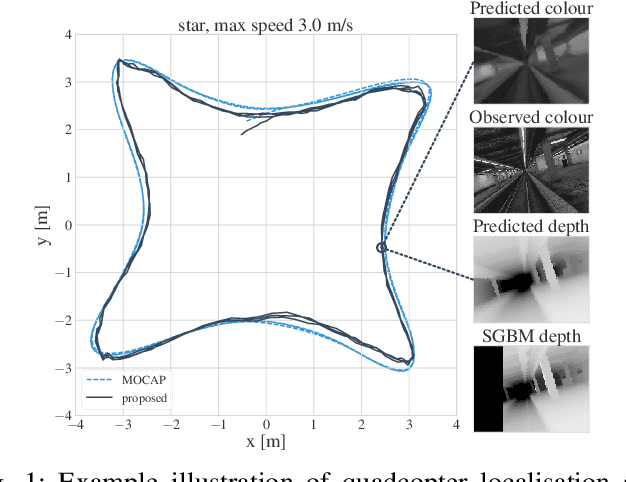

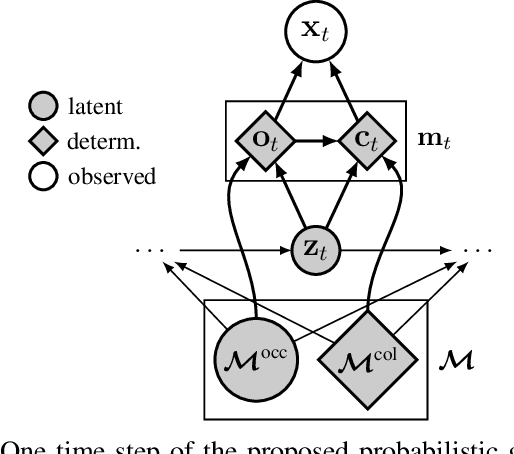

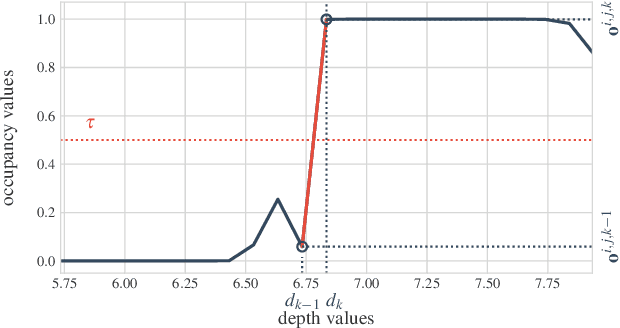

PRISM: Probabilistic Real-Time Inference in Spatial World Models

Dec 06, 2022

Abstract:We introduce PRISM, a method for real-time filtering in a probabilistic generative model of agent motion and visual perception. Previous approaches either lack uncertainty estimates for the map and agent state, do not run in real-time, do not have a dense scene representation or do not model agent dynamics. Our solution reconciles all of these aspects. We start from a predefined state-space model which combines differentiable rendering and 6-DoF dynamics. Probabilistic inference in this model amounts to simultaneous localisation and mapping (SLAM) and is intractable. We use a series of approximations to Bayesian inference to arrive at probabilistic map and state estimates. We take advantage of well-established methods and closed-form updates, preserving accuracy and enabling real-time capability. The proposed solution runs at 10 Hz in real time and is similarly accurate to state-of-the-art SLAM in small to medium-sized indoor environments, with high-speed UAV and handheld camera agents (Blackbird, EuRoC and TUM-RGBD).

Tracking and Planning with Spatial World Models

Jan 25, 2022

Abstract:We introduce a method for real-time navigation and tracking with differentiably rendered world models. Learning models for control has led to impressive results in robotics and computer games, but this success has yet to be extended to vision-based navigation. To address this, we transfer advances in the emergent field of differentiable rendering to model-based control. We do this by planning in a learned 3D spatial world model, combined with a pose estimation algorithm previously used in the context of TSDF fusion, but now tailored to our setting and improved to incorporate agent dynamics. We evaluate over six simulated environments based on complex human-designed floor plans and provide quantitative results. We achieve up to 92% navigation success rate at a frequency of 15 Hz using only image and depth observations under stochastic, continuous dynamics.

Mind the Gap when Conditioning Amortised Inference in Sequential Latent-Variable Models

Jan 18, 2021

Abstract:Amortised inference enables scalable learning of sequential latent-variable models (LVMs) with the evidence lower bound (ELBO). In this setting, variational posteriors are often only partially conditioned. While the true posteriors depend, e.g., on the entire sequence of observations, approximate posteriors are only informed by past observations. This mimics the Bayesian filter -- a mixture of smoothing posteriors. Yet, we show that the ELBO objective forces partially-conditioned amortised posteriors to approximate products of smoothing posteriors instead. Consequently, the learned generative model is compromised. We demonstrate these theoretical findings in three scenarios: traffic flow, handwritten digits, and aerial vehicle dynamics. Using fully-conditioned approximate posteriors, performance improves in terms of generative modelling and multi-step prediction.
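
The contrast between a mixture and a product of smoothing posteriors can be written out for a single latent $z_t$. This is a simplified view under stated assumptions, not the paper's full sequential result. The notation ($x_{1:T}$ for the observation sequence, $q$ for the approximate posterior) is chosen here for illustration. The Bayesian filter follows from marginalising over future observations:

$$
p(z_t \mid x_{1:t}) = \int p(z_t \mid x_{1:T})\, p(x_{t+1:T} \mid x_{1:t})\, \mathrm{d}x_{t+1:T}
$$

By contrast, a posterior $q(z_t \mid x_{1:t})$ that minimises the expected reverse KL divergence $\mathbb{E}_{p(x_{t+1:T} \mid x_{1:t})}\big[\mathrm{KL}\big(q(z_t \mid x_{1:t}) \,\|\, p(z_t \mid x_{1:T})\big)\big]$ has the form of a normalised geometric average, that is, a product rather than a mixture:

$$
q^{*}(z_t \mid x_{1:t}) \propto \exp\Big( \mathbb{E}_{p(x_{t+1:T} \mid x_{1:t})}\big[ \log p(z_t \mid x_{1:T}) \big] \Big)
$$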

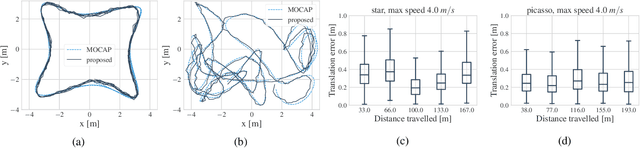

Variational State-Space Models for Localisation and Dense 3D Mapping in 6 DoF

Jun 17, 2020

Abstract:We solve the problem of 6-DoF localisation and 3D dense reconstruction in spatial environments as approximate Bayesian inference in a deep generative approach which combines learned with engineered models. This principled treatment of uncertainty and probabilistic inference overcomes a shortcoming of current state-of-the-art solutions, namely their reliance on heavily engineered, heterogeneous pipelines. Variational inference enables us to use neural networks for system identification, while a differentiable raycaster is used for the emission model. This ensures that our model is amenable to end-to-end gradient-based optimisation. We evaluate our approach on realistic unmanned aerial vehicle flight data, nearing the performance of a state-of-the-art visual inertial odometry system. The applicability of the learned model to downstream tasks such as generative prediction and planning is investigated.

Approximate Bayesian inference in spatial environments

May 18, 2018

Abstract:We propose to learn a stochastic recurrent model to solve the problem of simultaneous localisation and mapping (SLAM). Our model is a deep variational Bayes filter augmented with a latent global variable (similar to an external memory component) representing the spatially structured environment. Reasoning about the pose of an agent and the map of the environment is then naturally expressed as posterior inference in the resulting generative model. We evaluate the method on a set of randomly generated mazes which are traversed by an agent equipped with laser range finders. Path integration based on an accurate motion model is consistently outperformed and, most importantly, drift is practically eliminated. Our approach inherits favourable properties from neural networks, such as differentiability, flexibility and the ability to train components either in isolation or end-to-end.

CNN-based Segmentation of Medical Imaging Data

Jul 25, 2017

Abstract:Convolutional neural networks have been applied to a wide variety of computer vision tasks. Recent advances in semantic segmentation have enabled their application to medical image segmentation. While most CNNs use two-dimensional kernels, recent CNN-based publications on medical image segmentation featured three-dimensional kernels, allowing full access to the three-dimensional structure of medical images. Though closely related to semantic segmentation, medical image segmentation includes specific challenges that need to be addressed, such as the scarcity of labelled data, the high class imbalance found in the ground truth and the high memory demand of three-dimensional images. In this work, a CNN-based method with three-dimensional filters is demonstrated and applied to hand and brain MRI. Two modifications to an existing CNN architecture are discussed, along with methods for addressing the aforementioned challenges. While most of the existing literature on medical image segmentation focuses on soft tissue and the major organs, this work is validated on data from both the central nervous system and the bones of the hand.
