Avi Cohen

Motion Planning for Minimally Actuated Serial Robots

Aug 12, 2024

Abstract: Modern manipulators are acclaimed for their precision but often struggle to operate in confined spaces. This limitation has driven the development of hyper-redundant and continuum robots. While these present unique advantages, they face challenges such as weight, mechanical complexity, modeling, and cost. The Minimally Actuated Serial Robot (MASR) has been proposed as a lightweight, low-cost, and simpler alternative in which passive joints are actuated by a Mobile Actuator (MA) moving along the arm. Yet Inverse Kinematics (IK) and a general motion planning algorithm for the MASR have not been addressed. In this letter, we propose the MASR-RRT* motion planning algorithm, developed specifically for the unique kinematics of the MASR. The main component of the algorithm is a data-based model for solving the IK problem while considering minimal traverse of the MA. The model is trained solely using the forward kinematics of the MASR and does not require real data. With the model as a local-connection mechanism, MASR-RRT* minimizes a cost function expressing the action time. In a comprehensive analysis, we show that MASR-RRT* outperforms a straightforward implementation of the standard RRT*. Experiments on a real robot in different environments with obstacles validate the proposed algorithm.

PennSyn2Real: Training Object Recognition Models without Human Labeling

Oct 16, 2020

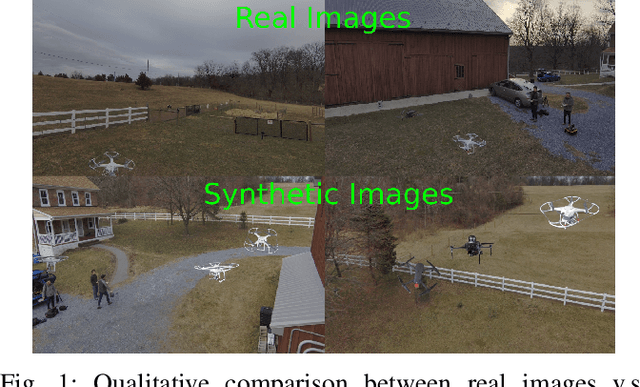

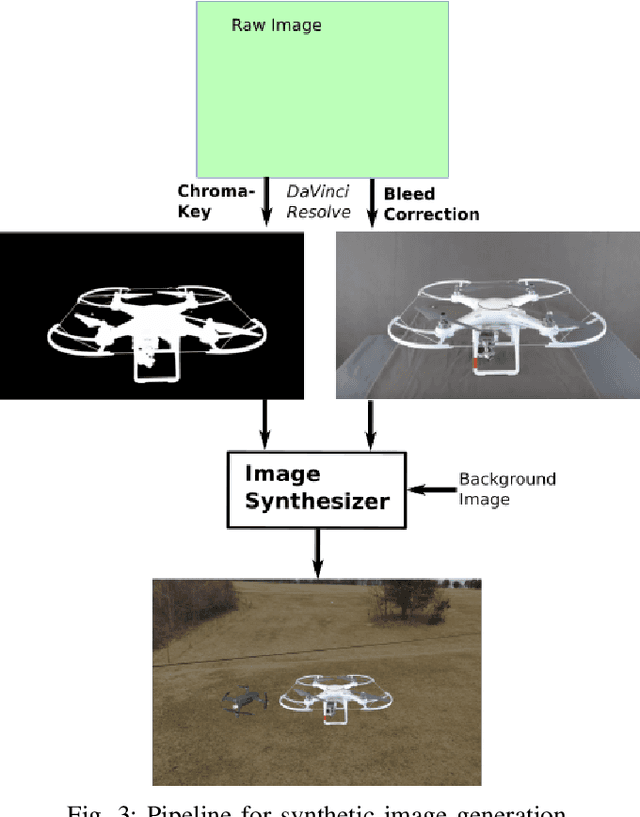

Abstract: Scalable training data generation is a critical problem in deep learning. We propose PennSyn2Real, a photo-realistic synthetic dataset consisting of more than 100,000 4K images of more than 20 types of micro aerial vehicles (MAVs). The dataset can be used to generate arbitrary numbers of training images for high-level computer vision tasks such as MAV detection and classification. Our data generation framework bootstraps chroma-keying, a mature cinematography technique, with a motion tracking system, providing artifact-free, curated, annotated images in which object orientations and lighting are controlled. This framework is easy to set up and can be applied to a broad range of objects, reducing the gap between synthetic and real-world data. We show that synthetic data generated using this framework can be used directly to train CNN models for common object recognition tasks such as detection and segmentation. We demonstrate competitive performance in comparison with training using only real images. Furthermore, bootstrapping the generated synthetic data in few-shot learning can significantly improve overall performance, reducing the number of training samples required to achieve the desired accuracy.
