Ashish Mahabal

Center for Data Driven Discovery, California Institute of Technology, Pasadena, CA, USA

Selfish Evolution: Making Discoveries in Extreme Label Noise with the Help of Overfitting Dynamics

Nov 26, 2024

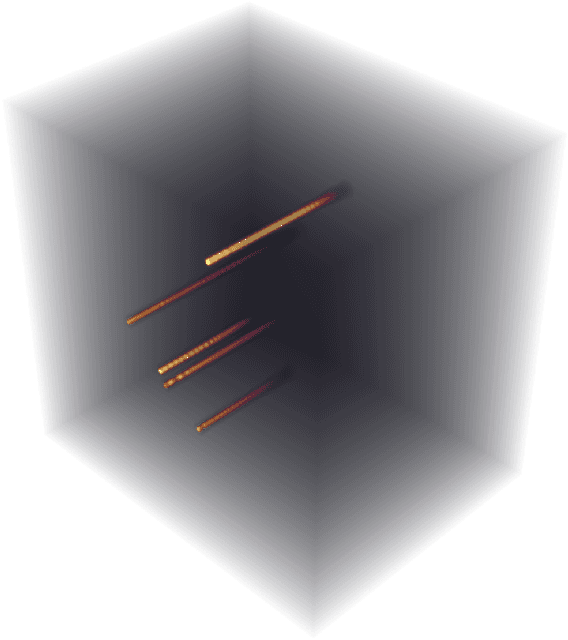

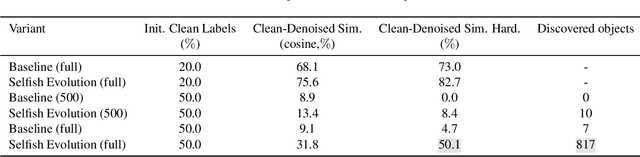

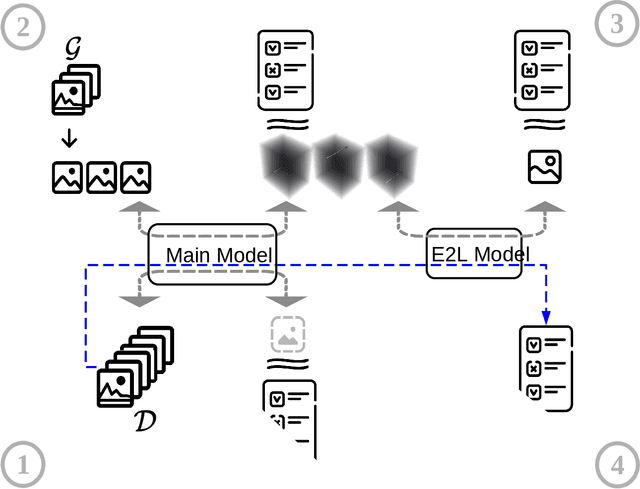

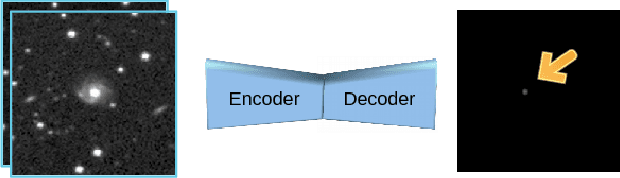

Abstract: Motivated by the scarcity of proper labels in an astrophysical application, we have developed a novel technique, called Selfish Evolution, which allows for the detection and correction of corrupted labels in a weakly supervised fashion. Unlike methods based on early stopping, we let the model train on the noisy dataset. Only then do we intervene and allow the model to overfit to individual samples. The "evolution" of the model during this process reveals patterns with enough information about the noisiness of the label, as well as its correct version. We train a secondary network on these spatiotemporal "evolution cubes" to correct potentially corrupted labels. We incorporate the technique in a closed-loop fashion, allowing for automatic convergence towards a mostly clean dataset, without presumptions about the state of the network in which we intervene. We evaluate on the main task of the Supernova-hunting dataset but also demonstrate efficiency on the more standard MNIST dataset.

Light Curve Classification with DistClassiPy: a new distance-based classifier

Mar 18, 2024

Abstract: The rise of synoptic sky surveys has ushered in an era of big data in time-domain astronomy, making data science and machine learning essential tools for studying celestial objects. Tree-based (e.g. Random Forests) and deep learning models represent the current standard in the field. We explore the use of different distance metrics to aid in the classification of objects. For this, we developed a new distance metric based classifier called DistClassiPy. The direct use of distance metrics is an approach that has not been explored in time-domain astronomy, but distance-based methods can aid in increasing the interpretability of the classification result and decrease the computational costs. In particular, we classify light curves of variable stars by comparing the distances between objects of different classes. Using 18 distance metrics applied to a catalog of 6,000 variable stars in 10 classes, we demonstrate classification and dimensionality reduction. We show that this classifier meets state-of-the-art performance but has lower computational requirements and improved interpretability. We have made DistClassiPy open-source and accessible at https://pypi.org/project/distclassipy/ with the goal of broadening its applications to other classification scenarios within and beyond astronomy.
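As a rough illustration of what classifying by distance can look like, the sketch below assigns a sample to the class whose mean feature vector (centroid) is nearest under a chosen metric. It is a generic nearest-centroid toy and is not the DistClassiPy API or the authors' method; the function names (`fit_centroids`, `predict`), the two metrics shown, the class labels, and the feature values are all assumptions made for this example.

```python
import math
from collections import defaultdict


def euclidean(a, b):
    """Straight-line (L2) distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cityblock(a, b):
    """Manhattan (L1) distance between two equal-length feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def fit_centroids(features, labels):
    """Return a dict mapping each class label to the mean of its feature vectors."""
    groups = defaultdict(list)
    for row, label in zip(features, labels):
        groups[label].append(row)
    return {
        label: [sum(column) / len(rows) for column in zip(*rows)]
        for label, rows in groups.items()
    }


def predict(centroids, sample, metric=euclidean):
    """Return the label whose centroid is closest to `sample` under `metric`."""
    return min(centroids, key=lambda label: metric(centroids[label], sample))


# Hypothetical two-feature training set with two made-up classes.
features = [[0.1, 1.0], [0.2, 1.2], [2.0, 0.1], [2.2, 0.3]]
labels = ["class_a", "class_a", "class_b", "class_b"]
centroids = fit_centroids(features, labels)

print(predict(centroids, [0.3, 0.9]))                    # default Euclidean metric
print(predict(centroids, [1.9, 0.0], metric=cityblock))  # swap in another metric
```

Because the metric is passed in as a plain function, trying a different one only means changing the `metric` argument, and the per-class distances themselves can be inspected to see why a sample was assigned to a class.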

Beyond Low Earth Orbit: Biomonitoring, Artificial Intelligence, and Precision Space Health

Dec 22, 2021

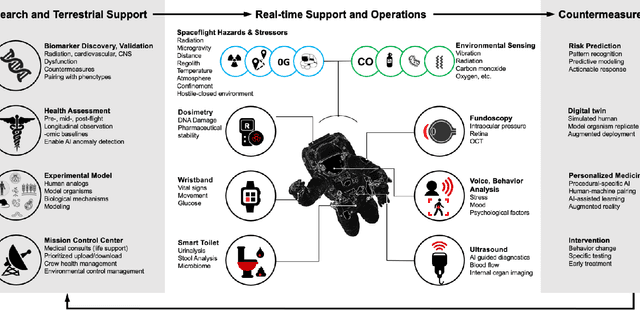

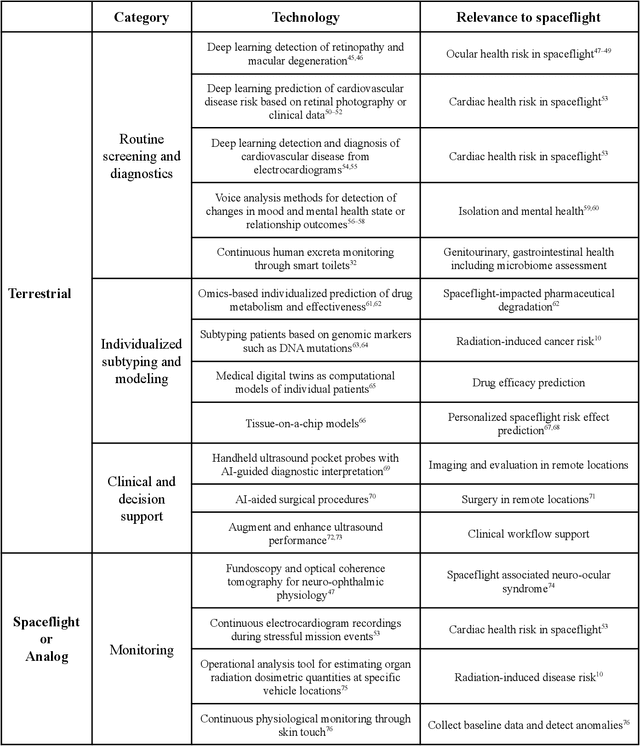

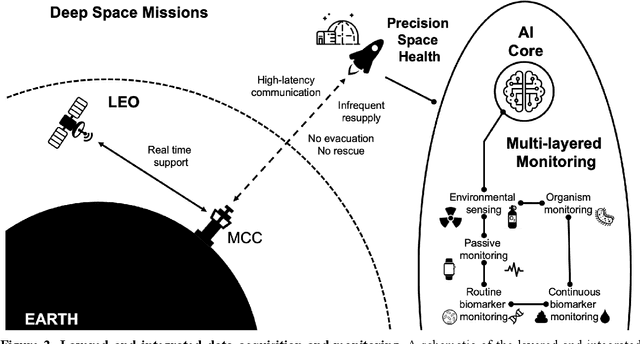

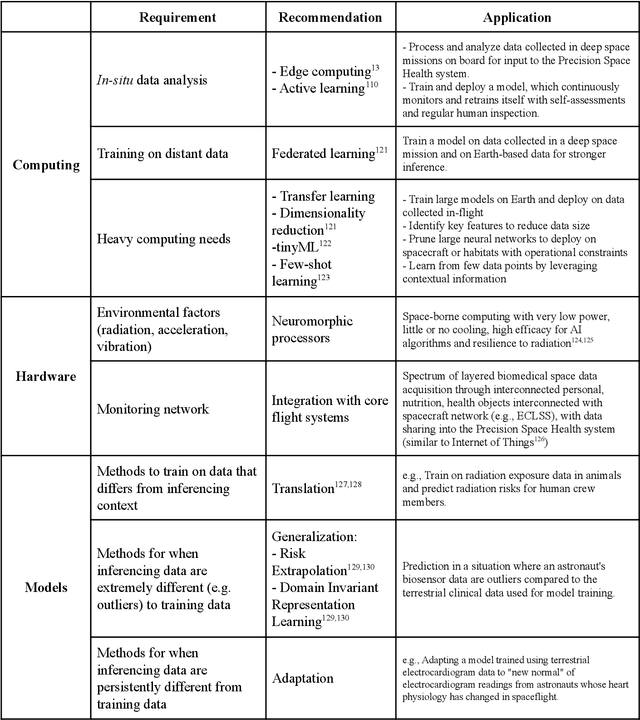

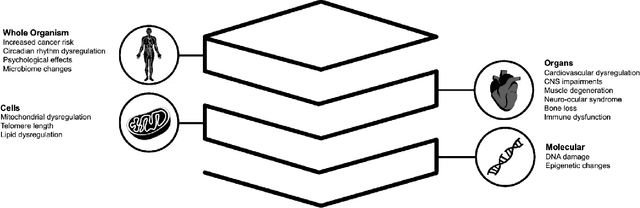

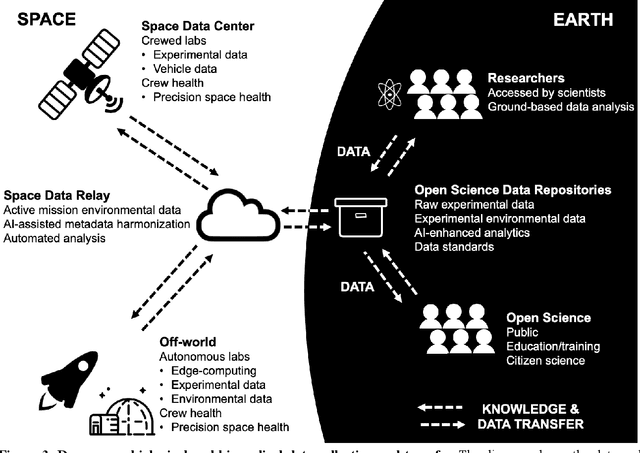

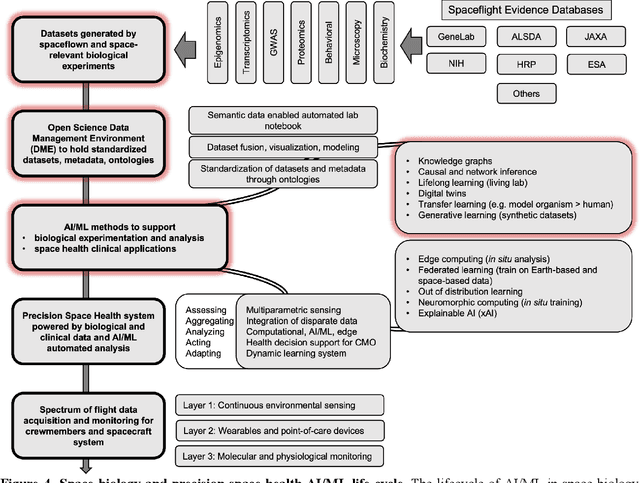

Abstract: Human space exploration beyond low Earth orbit will involve missions of significant distance and duration. To effectively mitigate myriad space health hazards, paradigm shifts in data and space health systems are necessary to enable Earth-independence, rather than Earth-reliance. Promising developments in the fields of artificial intelligence and machine learning for biology and health can address these needs. We propose an appropriately autonomous and intelligent Precision Space Health system that will monitor, aggregate, and assess biomedical statuses; analyze and predict personalized adverse health outcomes; adapt and respond to newly accumulated data; and provide preventive, actionable, and timely insights to individual deep space crew members and iterative decision support to their crew medical officer. Here we present a summary of recommendations from a workshop organized by the National Aeronautics and Space Administration, on future applications of artificial intelligence in space biology and health. In the next decade, biomonitoring technology, biomarker science, spacecraft hardware, intelligent software, and streamlined data management must mature and be woven together into a Precision Space Health system to enable humanity to thrive in deep space.

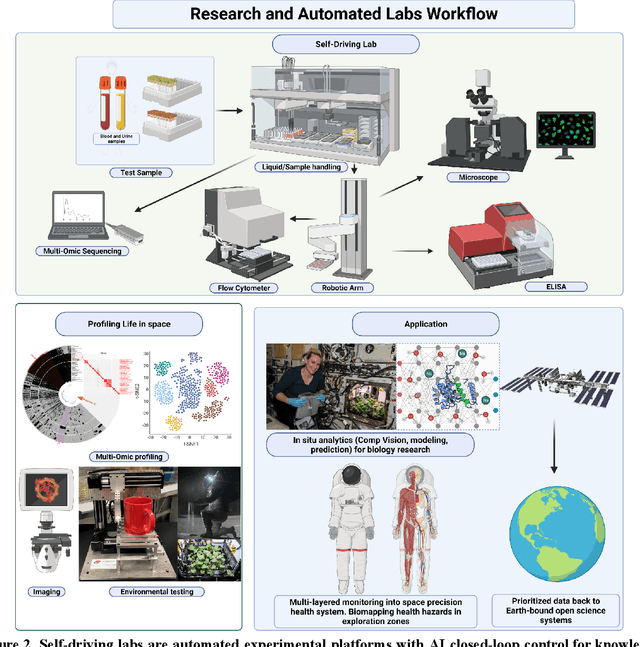

Beyond Low Earth Orbit: Biological Research, Artificial Intelligence, and Self-Driving Labs

Dec 22, 2021

Abstract: Space biology research aims to understand fundamental effects of spaceflight on organisms, develop foundational knowledge to support deep space exploration, and ultimately bioengineer spacecraft and habitats to stabilize the ecosystem of plants, crops, microbes, animals, and humans for sustained multi-planetary life. To advance these aims, the field leverages experiments, platforms, data, and model organisms from both spaceborne and ground-analog studies. As research is extended beyond low Earth orbit, experiments and platforms must be maximally autonomous, light, agile, and intelligent to expedite knowledge discovery. Here we present a summary of recommendations from a workshop organized by the National Aeronautics and Space Administration on artificial intelligence, machine learning, and modeling applications which offer key solutions toward these space biology challenges. In the next decade, the synthesis of artificial intelligence into the field of space biology will deepen the biological understanding of spaceflight effects, facilitate predictive modeling and analytics, support maximally autonomous and reproducible experiments, and efficiently manage spaceborne data and metadata, all with the goal to enable life to thrive in deep space.

Tails: Chasing Comets with the Zwicky Transient Facility and Deep Learning

Feb 26, 2021

Abstract: We present Tails, an open-source deep-learning framework for the identification and localization of comets in the image data of the Zwicky Transient Facility (ZTF), a robotic optical time-domain survey currently in operation at the Palomar Observatory in California, USA. Tails employs a custom EfficientDet-based architecture and is capable of finding comets in single images in near real time, rather than requiring multiple epochs as with traditional methods. The system achieves state-of-the-art performance with 99% recall, 0.01% false positive rate, and 1-2 pixel root mean square error in the predicted position. We report the initial results of the Tails efficiency evaluation in a production setting on the data of the ZTF Twilight survey, including the first AI-assisted discovery of a comet (C/2020 T2) and the recovery of a comet (P/2016 J3 = P/2021 A3).
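For readers less familiar with the two detection metrics quoted above, the following sketch shows how recall and false positive rate are conventionally computed from confusion-matrix counts. The counts are invented so that the arithmetic lands on round values; they are not taken from the Tails evaluation, and the function names are hypothetical.

```python
def recall(true_positives, false_negatives):
    """Fraction of real objects that the detector found: TP / (TP + FN)."""
    return true_positives / (true_positives + false_negatives)


def false_positive_rate(false_positives, true_negatives):
    """Fraction of non-objects wrongly flagged: FP / (FP + TN)."""
    return false_positives / (false_positives + true_negatives)


# Made-up counts: 100 images containing a comet, 10,000 images without one.
comet_recall = recall(true_positives=99, false_negatives=1)
comet_fpr = false_positive_rate(false_positives=1, true_negatives=9999)

print(f"recall = {comet_recall:.2%}")
print(f"false positive rate = {comet_fpr:.2%}")
```

The two rates use different denominators (real objects for recall, non-objects for the false positive rate), which is why a detector can report both a high recall and a very low false positive rate on a heavily imbalanced data set.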

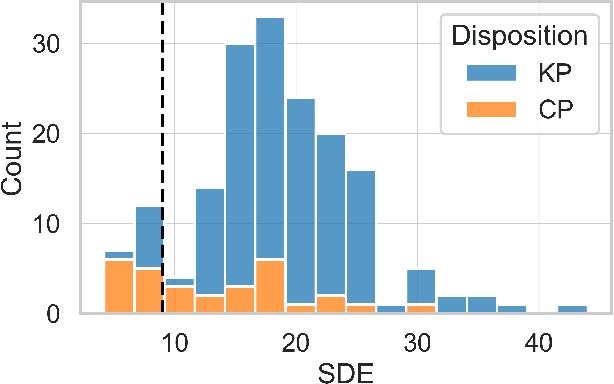

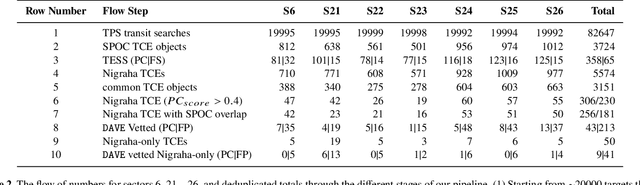

Nigraha: Machine-learning based pipeline to identify and evaluate planet candidates from TESS

Feb 22, 2021

Abstract: The Transiting Exoplanet Survey Satellite (TESS) has now been operational for a little over two years, covering the Northern and the Southern hemispheres once. The TESS team processes the downlinked data using the Science Processing Operations Center pipeline and Quick Look pipeline to generate alerts for follow-up. Combined with other efforts from the community, over two thousand planet candidates have been found, of which tens have been confirmed as planets. We present our pipeline, Nigraha, that is complementary to these approaches. Nigraha uses a combination of transit finding, supervised machine learning, and detailed vetting to identify with high confidence a few planet candidates that were missed by prior searches. In particular, we identify high signal-to-noise ratio (SNR) shallow transits that may represent more Earth-like planets. In the spirit of open data exploration we provide details of our pipeline, release our supervised machine learning model and code as open source, and make public the 38 candidates we have found in seven sectors. The model can easily be run on other sectors as is. As part of future work we outline ways to increase the yield by strengthening some of the steps where we have been conservative and discarded objects for lack of a datum or two.

* 15 pages, 18 figures, and 6 tables. Accepted for publication as a full paper in Monthly Notices of the Royal Astronomical Society

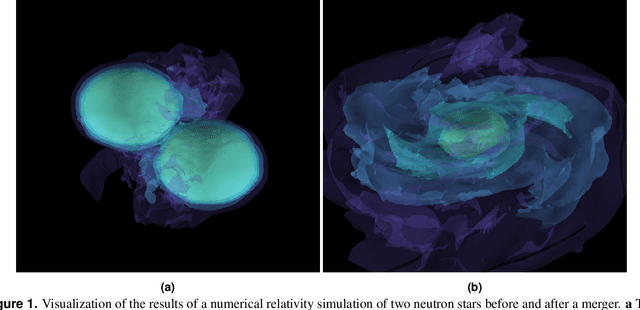

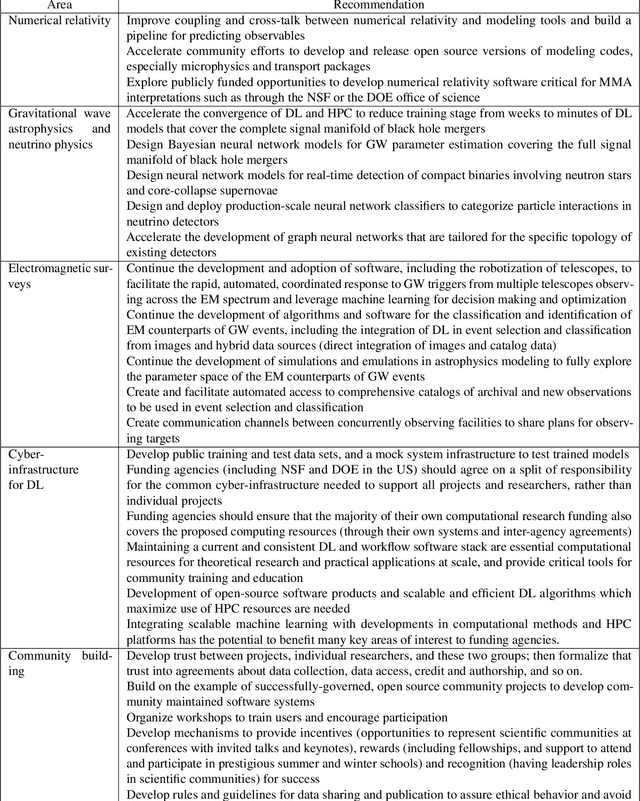

Enabling real-time multi-messenger astrophysics discoveries with deep learning

Nov 26, 2019

Abstract: Multi-messenger astrophysics is a fast-growing, interdisciplinary field that combines data, which vary in volume and speed of data processing, from many different instruments that probe the Universe using different cosmic messengers: electromagnetic waves, cosmic rays, gravitational waves and neutrinos. In this Expert Recommendation, we review the key challenges of real-time observations of gravitational wave sources and their electromagnetic and astroparticle counterparts, and make a number of recommendations to maximize their potential for scientific discovery. These recommendations refer to the design of scalable and computationally efficient machine learning algorithms; the cyber-infrastructure to numerically simulate astrophysical sources, and to process and interpret multi-messenger astrophysics data; the management of gravitational wave detections to trigger real-time alerts for electromagnetic and astroparticle follow-ups; a vision to harness future developments of machine learning and cyber-infrastructure resources to cope with the big-data requirements; and the need to build a community of experts to realize the goals of multi-messenger astrophysics.

* Invited Expert Recommendation for Nature Reviews Physics. The artwork produced by E. A. Huerta and Shawn Rosofsky for this article was used by Carl Conway to design the cover of the October 2019 issue of Nature Reviews Physics

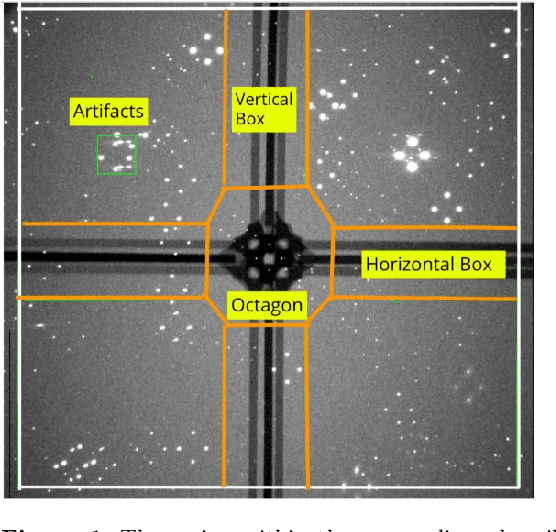

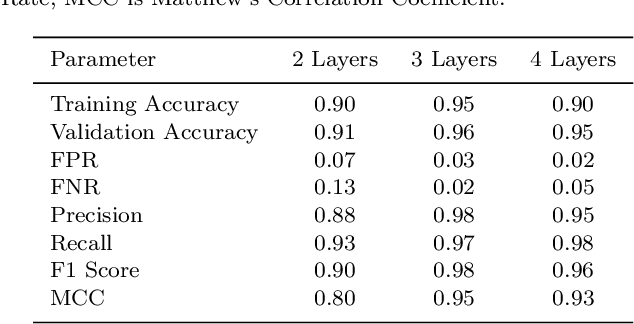

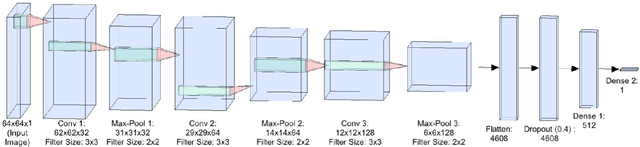

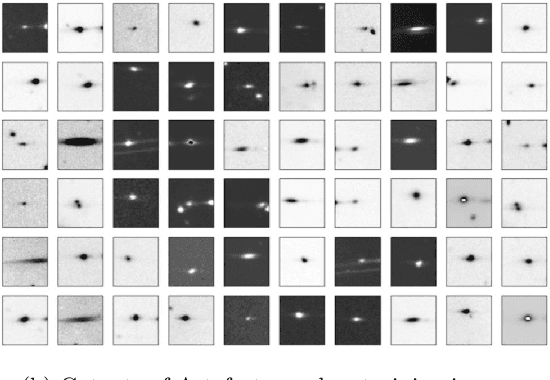

Eliminating artefacts in Polarimetric Images using Deep Learning

Nov 19, 2019

Abstract: Polarization measurements done using Imaging Polarimeters such as the Robotic Polarimeter are very sensitive to the presence of artefacts in images. Artefacts can range from internal reflections in a telescope to satellite trails that could contaminate an area of interest in the image. With the advent of wide-field polarimetry surveys, it is imperative to develop methods that automatically flag artefacts in images. In this paper, we implement a Convolutional Neural Network to identify the most dominant artefacts in the images. We find that our model can successfully classify sources with 98% true positive and 97% true negative rates. Such models, combined with transfer learning, will give us a running start in artefact elimination for near-future surveys like WALOP.

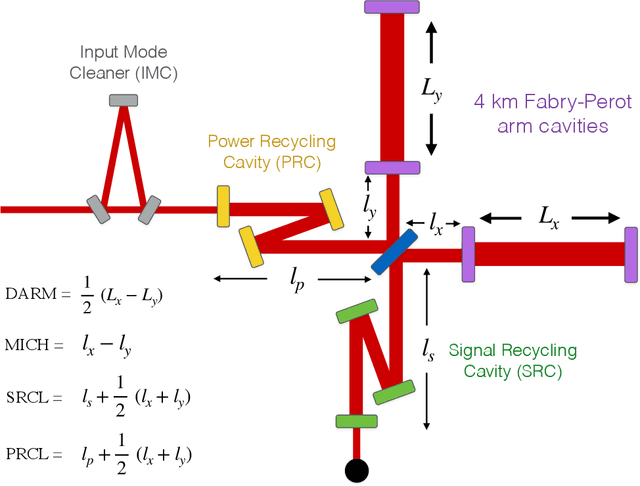

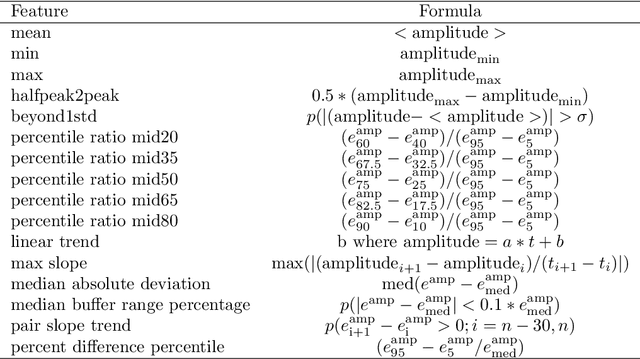

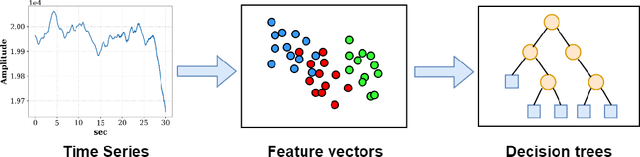

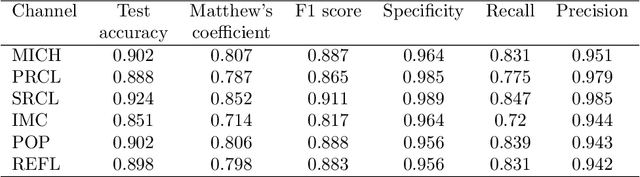

New methods to assess and improve LIGO detector duty cycle

Oct 26, 2019

Abstract: A network of three or more gravitational wave detectors simultaneously taking data is required to generate a well-localized sky map for gravitational wave sources, such as GW170817. Local seismic disturbances often cause the LIGO and Virgo detectors to lose light resonance in one or more of their component optic cavities, and the affected detector is unable to take data until resonance is recovered. In this paper, we use machine learning techniques to gain insight into the predictive behavior of the LIGO detector optic cavities during the second LIGO-Virgo observing run. We identify a minimal set of optic cavity control signals and data features which capture interferometer behavior leading to a loss of light resonance, or lockloss. We use these channels to accurately distinguish between lockloss events and quiet interferometer operating times via both supervised and unsupervised machine learning methods. This analysis yields new insights into how components of the LIGO detectors contribute to lockloss events, which could inform detector commissioning efforts to mitigate the associated loss of uptime. Particularly, we find that the state of the component optical cavities is a better predictor of loss of lock than ground motion trends. We report prediction accuracies of 98% for times just prior to lockloss, and 90% for times up to 30 seconds prior to lockloss, which shows promise for this method to be applied in near-real time to trigger preventative detector state changes. This method can be extended to target other auxiliary subsystems or times of interest, such as transient noise or loss in detector sensitivity. Application of these techniques during the third LIGO-Virgo observing run and beyond would maximize the potential of the global detector network for multi-messenger astronomy with gravitational waves.

Deep Learning for Multi-Messenger Astrophysics: A Gateway for Discovery in the Big Data Era

Feb 01, 2019

Abstract: This report provides an overview of recent work that harnesses the Big Data Revolution and Large Scale Computing to address grand computational challenges in Multi-Messenger Astrophysics, with a particular emphasis on real-time discovery campaigns. Acknowledging the transdisciplinary nature of Multi-Messenger Astrophysics, this document has been prepared by members of the physics, astronomy, computer science, data science, software and cyberinfrastructure communities who attended the NSF-, DOE- and NVIDIA-funded "Deep Learning for Multi-Messenger Astrophysics: Real-time Discovery at Scale" workshop, hosted at the National Center for Supercomputing Applications, October 17-19, 2018. Highlights of this report include unanimous agreement that it is critical to accelerate the development and deployment of novel, signal-processing algorithms that use the synergy between artificial intelligence (AI) and high performance computing to maximize the potential for scientific discovery with Multi-Messenger Astrophysics. We discuss key aspects to realize this endeavor, namely (i) the design and exploitation of scalable and computationally efficient AI algorithms for Multi-Messenger Astrophysics; (ii) cyberinfrastructure requirements to numerically simulate astrophysical sources, and to process and interpret Multi-Messenger Astrophysics data; (iii) management of gravitational wave detections and triggers to enable electromagnetic and astro-particle follow-ups; (iv) a vision to harness future developments of machine and deep learning and cyberinfrastructure resources to cope with the scale of discovery in the Big Data Era; and (v) the need to build a community that brings domain experts together with data scientists on equal footing to maximize and accelerate discovery in the nascent field of Multi-Messenger Astrophysics.
