Arundhati Banerjee

Decentralized Multi-Agent Active Search and Tracking when Targets Outnumber Agents

Jan 09, 2024

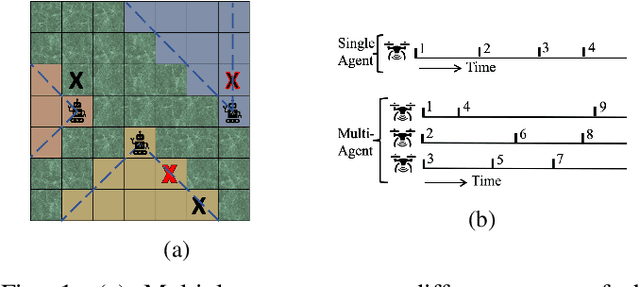

Abstract:Multi-agent multi-target tracking has a wide range of applications, including wildlife patrolling, security surveillance or environment monitoring. Such algorithms often make restrictive assumptions: the number of targets and/or their initial locations may be assumed known, or agents may be pre-assigned to monitor disjoint partitions of the environment, reducing the burden of exploration. This also limits applicability when there are fewer agents than targets, since agents are unable to continuously follow the targets in their fields of view. Multi-agent tracking algorithms additionally assume inter-agent synchronization of observations, or the presence of a central controller to coordinate joint actions. Instead, we focus on the setting of decentralized multi-agent, multi-target, simultaneous active search-and-tracking with asynchronous inter-agent communication. Our proposed algorithm DecSTER uses a sequential monte carlo implementation of the probability hypothesis density filter for posterior inference combined with Thompson sampling for decentralized multi-agent decision making. We compare different action selection policies, focusing on scenarios where targets outnumber agents. In simulation, we demonstrate that DecSTER is robust to unreliable inter-agent communication and outperforms information-greedy baselines in terms of the Optimal Sub-Pattern Assignment (OSPA) metric for different numbers of targets and varying teamsizes.

MERMAIDE: Learning to Align Learners using Model-Based Meta-Learning

Apr 10, 2023

Abstract:We study how a principal can efficiently and effectively intervene on the rewards of a previously unseen learning agent in order to induce desirable outcomes. This is relevant to many real-world settings like auctions or taxation, where the principal may not know the learning behavior nor the rewards of real people. Moreover, the principal should be few-shot adaptable and minimize the number of interventions, because interventions are often costly. We introduce MERMAIDE, a model-based meta-learning framework to train a principal that can quickly adapt to out-of-distribution agents with different learning strategies and reward functions. We validate this approach step-by-step. First, in a Stackelberg setting with a best-response agent, we show that meta-learning enables quick convergence to the theoretically known Stackelberg equilibrium at test time, although noisy observations severely increase the sample complexity. We then show that our model-based meta-learning approach is cost-effective in intervening on bandit agents with unseen explore-exploit strategies. Finally, we outperform baselines that use either meta-learning or agent behavior modeling, in both $0$-shot and $K=1$-shot settings with partial agent information.

Cost Aware Asynchronous Multi-Agent Active Search

Oct 05, 2022

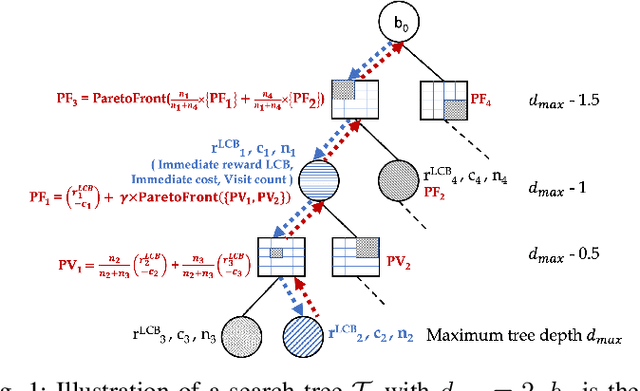

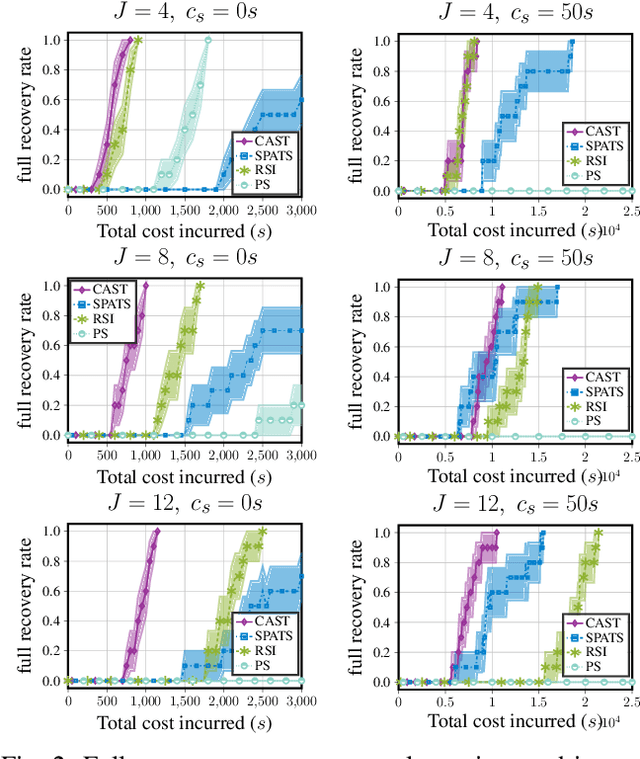

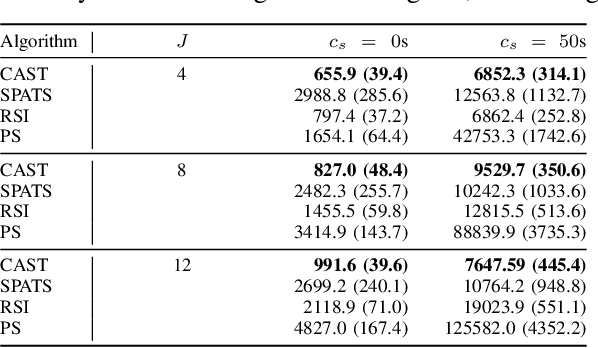

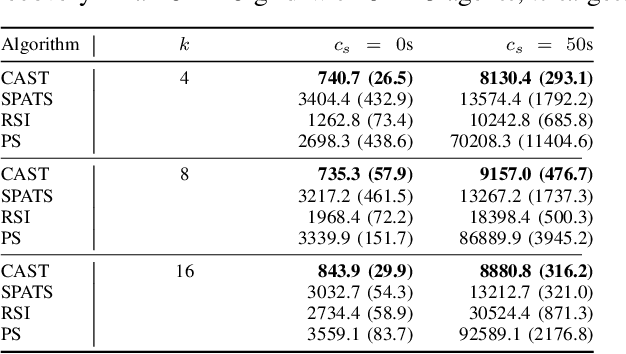

Abstract:Multi-agent active search requires autonomous agents to choose sensing actions that efficiently locate targets. In a realistic setting, agents also must consider the costs that their decisions incur. Previously proposed active search algorithms simplify the problem by ignoring uncertainty in the agent's environment, using myopic decision making, and/or overlooking costs. In this paper, we introduce an online active search algorithm to detect targets in an unknown environment by making adaptive cost-aware decisions regarding the agent's actions. Our algorithm combines principles from Thompson Sampling (for search space exploration and decentralized multi-agent decision making), Monte Carlo Tree Search (for long horizon planning) and pareto-optimal confidence bounds (for multi-objective optimization in an unknown environment) to propose an online lookahead planner that removes all the simplifications. We analyze the algorithm's performance in simulation to show its efficacy in cost aware active search.

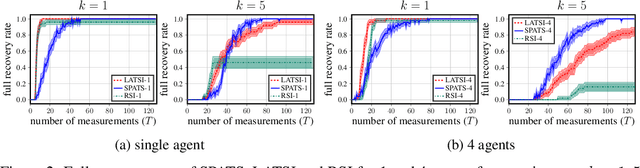

Multi-Agent Active Search using Detection and Location Uncertainty

Mar 09, 2022

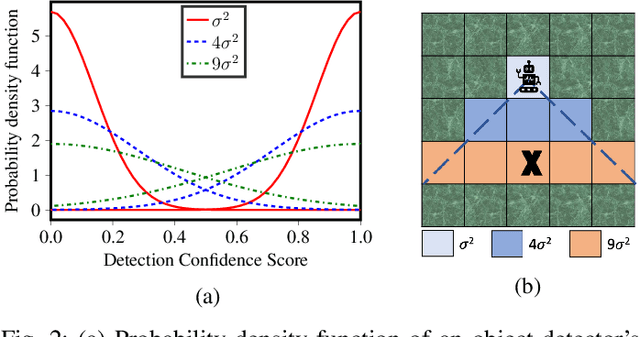

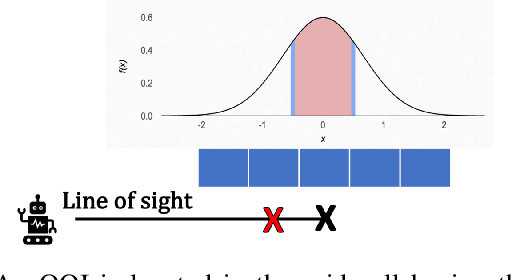

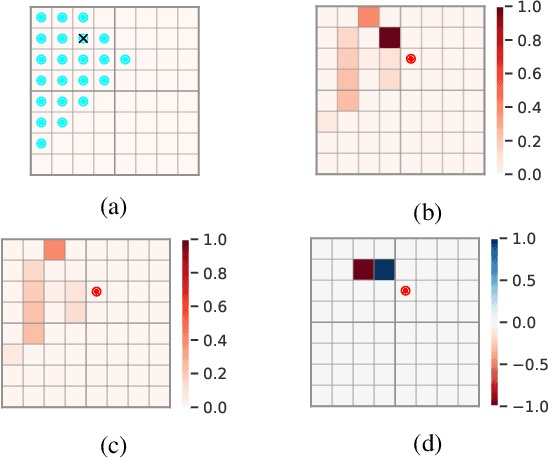

Abstract:Active search refers to the task of autonomous robots (agents) detecting objects of interest (targets) in a search space using decision making algorithms that adapt to the history of their observations. It has important applications in search and rescue missions, wildlife patrolling and environment monitoring. Active search algorithms must contend with two types of uncertainty: detection uncertainty and location uncertainty. Prior work has typically focused on one of these while ignoring or engineering away the other. The more common approach in robotics is to focus on location uncertainty and remove detection uncertainty by thresholding the detection probability to zero or one. On the other hand, it is common in the sparse signal processing literature to assume the target location is accurate and focus on the uncertainty of its detection. In this work, we propose an inference method to jointly handle both target detection and location uncertainty. We then build a decision making algorithm on this inference method that uses Thompson sampling to enable efficient active search in both the single agent and multi-agent settings. We perform experiments in simulation over varying number of agents and targets to show that our inference and decision making algorithms outperform competing baselines that only account for either target detection or location uncertainty.

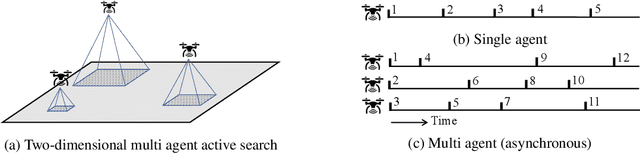

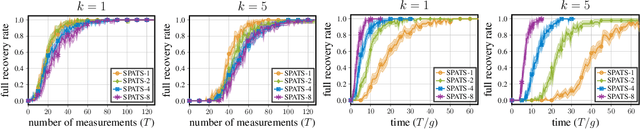

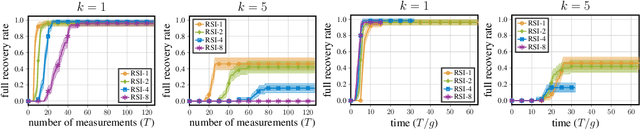

Asynchronous Multi Agent Active Search

Jun 25, 2020

Abstract:Active search refers to the problem of efficiently locating targets in an unknown environment by actively making data-collection decisions, and has many applications including detecting gas leaks, radiation sources or human survivors of disasters using aerial and/or ground robots (agents). Existing active search methods are in general only amenable to a single agent, or if they extend to multi agent they require a central control system to coordinate the actions of all agents. However, such control systems are often impractical in robotics applications. In this paper, we propose two distinct active search algorithms called SPATS (Sparse Parallel Asynchronous Thompson Sampling) and LATSI (LAplace Thompson Sampling with Information gain) that allow for multiple agents to independently make data-collection decisions without a central coordinator. Throughout we consider that targets are sparsely located around the environment in keeping with compressive sensing assumptions and its applicability in real world scenarios. Additionally, while most common search algorithms assume that agents can sense the entire environment (e.g. compressive sensing) or sense point-wise (e.g. Bayesian Optimization) at all times, we make a realistic assumption that each agent can only sense a contiguous region of space at a time. We provide simulation results as well as theoretical analysis to demonstrate the efficacy of our proposed algorithms.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge