Armin Saghafian

T2I-FineEval: Fine-Grained Compositional Metric for Text-to-Image Evaluation

Mar 14, 2025

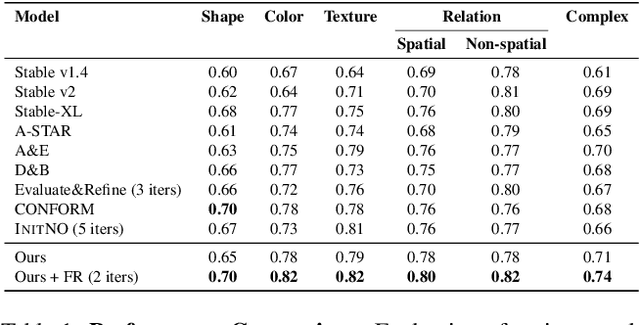

Abstract: Although recent text-to-image generative models have achieved impressive performance, they still often struggle to capture the compositional complexities of prompts, including attribute binding and spatial relationships between different entities. This misalignment is not revealed by common evaluation metrics such as CLIPScore. Recent works have proposed evaluation metrics that utilize Visual Question Answering (VQA) by decomposing prompts into questions about the generated image for more robust compositional evaluation. Although these methods align better with human evaluations, they still fail to fully cover the compositionality within the image. To address this, we propose a novel metric that breaks down images into components, and texts into fine-grained questions about the generated image for evaluation. Our method outperforms previous state-of-the-art metrics, demonstrating its effectiveness in evaluating text-to-image generative models. Code is available at https://github.com/hadi-hosseini/T2I-FineEval.
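The abstract does not say how per-question answers are combined into a single score, so the following is only a minimal sketch of one plausible aggregation, not the authors' implementation. It assumes a VQA model has already produced a "yes" probability for every (question, image component) pair; the function name `fine_grained_score`, the input layout, and the max-then-mean rule are all hypothetical.

```python
from statistics import mean


def fine_grained_score(answers):
    """Aggregate VQA 'yes' probabilities into one alignment score.

    `answers` maps each fine-grained question to a list of probabilities,
    one per image component (hypothetical layout, not from the paper).
    Assumed rule: a question counts as satisfied to the degree that its
    best-matching component satisfies it; the score is the mean over questions.
    """
    if not answers:
        raise ValueError("at least one question is required")
    per_question = []
    for question, probs in answers.items():
        if not probs:
            raise ValueError(f"no component scores for question: {question!r}")
        # Best-matching component for this question.
        per_question.append(max(probs))
    return mean(per_question)


if __name__ == "__main__":
    example = {
        "Is there a red car?": [0.9, 0.2],
        "Is the car to the left of the tree?": [0.1, 0.4],
    }
    print(fine_grained_score(example))
```

Taking the maximum over components reflects the assumption that each question concerns one region of the image; a different pooling rule would be equally easy to swap in.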

Fine-Grained Alignment and Noise Refinement for Compositional Text-to-Image Generation

Mar 09, 2025

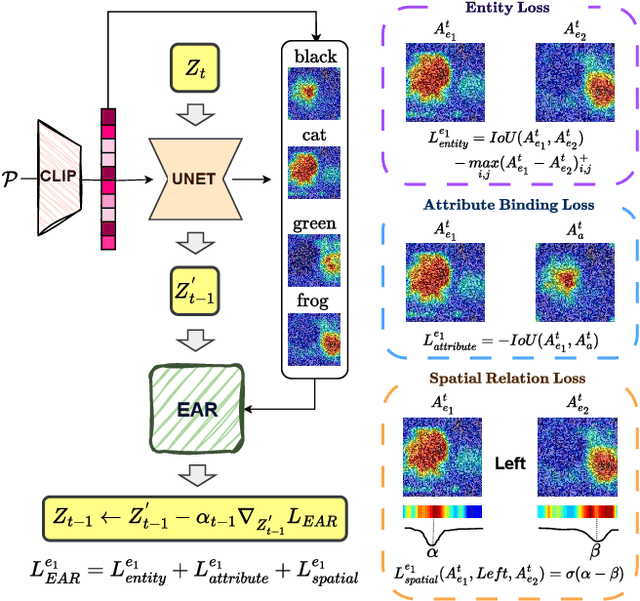

Abstract: Text-to-image generative models have made significant advancements in recent years; however, accurately capturing intricate details in textual prompts, and avoiding failures such as missing entities, attribute binding errors, and incorrect relationships, remains a formidable challenge. In response, we present an innovative, training-free method that directly addresses these challenges by incorporating tailored objectives to account for textual constraints. Unlike layout-based approaches that enforce rigid structures and limit diversity, our proposed approach offers a more flexible arrangement of the scene by imposing just the extracted constraints from the text, without any unnecessary additions. These constraints are formulated as losses (entity missing, entity mixing, attribute binding, and spatial relationships) and integrated into a unified loss that is applied in the first generation stage. Furthermore, we introduce a feedback-driven system for fine-grained initial noise refinement. This system integrates a verifier that evaluates the generated image, identifies inconsistencies, and provides corrective feedback. Leveraging this feedback, our refinement method first targets the unmet constraints by refining the faulty attention maps caused by initial noise, through the optimization of selective losses associated with these constraints. Subsequently, our unified loss function is reapplied in the second generation phase. Experimental results demonstrate that our method, relying solely on our proposed objective functions, significantly enhances compositionality, achieving a 24% improvement in human evaluation and a 25% gain in spatial relationships. Furthermore, our fine-grained noise refinement proves effective, boosting performance by up to 5%. Code is available at https://github.com/hadi-hosseini/noise-refinement.
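As a reading aid, the unified loss described in this abstract can be written schematically. The abstract names the four terms but not how they are combined, so the weighted sum, the weights $\lambda_i$, the active set $\mathcal{U}$, and all symbol names below are assumptions rather than the paper's notation.

```latex
% Schematic only: the weighted-sum form and the weights are assumed.
\mathcal{L}_{\text{unified}}
  = \lambda_{1}\,\mathcal{L}_{\text{missing}}
  + \lambda_{2}\,\mathcal{L}_{\text{mixing}}
  + \lambda_{3}\,\mathcal{L}_{\text{binding}}
  + \lambda_{4}\,\mathcal{L}_{\text{spatial}}

% Refinement step: only the losses for constraints the verifier
% reports as unmet (the set U) are optimized.
\mathcal{L}_{\text{refine}}
  = \sum_{k \in \mathcal{U}} \lambda_{k}\,\mathcal{L}_{k},
  \qquad
  \mathcal{U} \subseteq \{\text{missing},\ \text{mixing},\ \text{binding},\ \text{spatial}\}
```

Under this reading, the second generation phase applies $\mathcal{L}_{\text{unified}}$ again, starting from the noise refined with $\mathcal{L}_{\text{refine}}$.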

CAREL: Instruction-guided reinforcement learning with cross-modal auxiliary objectives

Nov 29, 2024

Abstract: Grounding the instruction in the environment is a key step in solving language-guided goal-reaching reinforcement learning problems. In automated reinforcement learning, a key concern is to enhance the model's ability to generalize across various tasks and environments. In goal-reaching scenarios, the agent must comprehend the different parts of the instructions within the environmental context in order to complete the overall task successfully. In this work, we propose CAREL (Cross-modal Auxiliary REinforcement Learning) as a new framework to solve this problem using auxiliary loss functions inspired by video-text retrieval literature and a novel method called instruction tracking, which automatically keeps track of progress in an environment. The results of our experiments suggest superior sample efficiency and systematic generalization for this framework in multi-modal reinforcement learning problems. Our code base is available here.

ComAlign: Compositional Alignment in Vision-Language Models

Sep 12, 2024

Abstract: Vision-language models (VLMs) like CLIP have showcased a remarkable ability to extract transferable features for downstream tasks. Nonetheless, the training process of these models is usually based on a coarse-grained contrastive loss between the global embeddings of images and texts, which may lose the compositional structure of these modalities. Many recent studies have shown that VLMs lack compositional understanding, such as attribute binding and identifying object relationships. Although some recent methods have tried to achieve finer-level alignments, they either are not based on extracting meaningful components of proper granularity or don't properly utilize the modalities' correspondence (especially in image-text pairs with more ingredients). Addressing these limitations, we introduce Compositional Alignment (ComAlign), a fine-grained approach to discover more exact correspondence of text and image components using only the weak supervision in the form of image-text pairs. Our methodology emphasizes that the compositional structure (including entities and relations) extracted from the text modality must also be retained in the image modality. To enforce correspondence of fine-grained concepts in image and text modalities, we train a lightweight network sitting on top of existing visual and language encoders using a small dataset. The network is trained to align nodes and edges of the structure across the modalities. Experimental results on various VLMs and datasets demonstrate significant improvements in retrieval and compositional benchmarks, affirming the effectiveness of our plugin model.
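To make the contrast with a single global-embedding score concrete, here is a minimal sketch of a node-level similarity between a text and an image. It is not ComAlign's training objective or architecture: the function names, the plain-list embeddings, and the "best match per text node, then average" rule are all assumptions chosen for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def fine_grained_similarity(text_nodes, image_nodes):
    """Average, over text nodes, of the best cosine match among image nodes.

    `text_nodes` and `image_nodes` are lists of embedding vectors for the
    entities extracted from each modality (hypothetical inputs). A text entity
    with no good counterpart in the image lowers the score, which a single
    global embedding comparison may not capture.
    """
    if not text_nodes or not image_nodes:
        raise ValueError("both modalities need at least one node")
    best_matches = [
        max(cosine(t, i) for i in image_nodes) for t in text_nodes
    ]
    return sum(best_matches) / len(best_matches)


if __name__ == "__main__":
    text = [[1.0, 0.0], [0.0, 1.0]]   # e.g. embeddings for two text entities
    image = [[1.0, 0.0], [0.0, 2.0]]  # e.g. embeddings for two image regions
    print(fine_grained_similarity(text, image))
```

The same matching idea could be extended to edges (relations) by embedding pairs of nodes, which is left out here to keep the sketch short.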
