Armin Dekorsy

Gauss-Olbers Center, c/o University of Bremen, Dept. of Communications Engineering

Semantic-Aware Task Clustering for Federated Cooperative Multi-Task Semantic Communication

Jan 24, 2026

Abstract:Task-oriented semantic communication (SemCom) prioritizes task execution over accurate symbol reconstruction and is well-suited to emerging intelligent applications. Cooperative multi-task SemCom (CMT-SemCom) further improves task execution performance. However, [1] demonstrates that cooperative multi-tasking can be either constructive or destructive. Moreover, the existing CMT-SemCom framework is not directly applicable to distributed multi-user scenarios, such as non-terrestrial satellite networks, where each satellite employs an individual semantic encoder. In this paper, we extend our earlier CMT-SemCom framework to distributed settings by proposing a federated learning (FL) based CMT-SemCom that enables cooperative multi-tasking across distributed users. Moreover, to address performance degradation caused by negative information transfer among heterogeneous tasks, we propose a semantic-aware task clustering method integrated in the FL process to ensure constructive cooperation based on an information-theoretic approach. Unlike common clustering methods that rely on high-dimensional data or feature space similarity, our proposed approach operates in the low-dimensional semantic domain to identify meaningful task relationships. Simulation results based on a LEO satellite network setup demonstrate the effectiveness of our approach and performance gain over unclustered FL and individual single-task SemCom.

Dynamic Downlink-Uplink Spectrum Sharing between Terrestrial and Non-Terrestrial Networks

Nov 11, 2025

Abstract:6G networks are expected to integrate low Earth orbit satellites to ensure global connectivity by extending coverage to underserved and remote regions. However, the deployment of dense mega-constellations introduces severe interference among satellites operating over shared frequency bands. This is, in part, due to the limited flexibility of conventional frequency division duplex (FDD) systems, where fixed bands for downlink (DL) and uplink (UL) transmissions are employed. In this work, we propose dynamic re-assignment of FDD bands for improved interference management in dense deployments and evaluate the performance gain of this approach. To this end, we formulate a joint optimization problem that incorporates dynamic band assignment, user scheduling, and power allocation in both directions. This non-convex mixed integer problem is solved using a combination of equivalence transforms, alternating optimization, and state-of-the-art industrial-grade mixed integer solvers. Numerical results demonstrate that the proposed approach of dynamic FDD band assignment significantly enhances system performance over conventional FDD, achieving up to 94% improvement in throughput in dense deployments.

6G Resilience -- White Paper

Sep 10, 2025

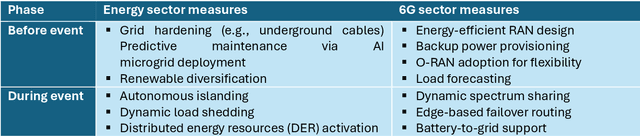

Abstract:6G must be designed to withstand, adapt to, and evolve amid prolonged, complex disruptions. Mobile networks' shift from efficiency-first to sustainability-aware has motivated this white paper to assert that resilience is a primary design goal, alongside sustainability and efficiency, encompassing technology, architecture, and economics. We promote resilience by analysing dependencies between mobile networks and other critical systems, such as energy, transport, and emergency services, and illustrate how cascading failures spread through infrastructures. We formalise resilience using the 3R framework: reliability, robustness, resilience. Subsequently, we translate this into measurable capabilities: graceful degradation, situational awareness, rapid reconfiguration, and learning-driven improvement and recovery. Architecturally, we promote edge-native and locality-aware designs, open interfaces, and programmability to enable islanded operations, fallback modes, and multi-layer diversity (radio, compute, energy, timing). Key enablers include AI-native control loops with verifiable behaviour, zero-trust security rooted in hardware and supply-chain integrity, and networking techniques that prioritise critical traffic, time-sensitive flows, and inter-domain coordination. Resilience also has a techno-economic aspect: open platforms and high-quality complementors generate ecosystem externalities that enhance resilience while opening new markets. We identify nine business-model groups and several patterns aligned with the 3R objectives, and we outline governance and standardisation. This white paper serves as an initial step and catalyst for 6G resilience. It aims to inspire researchers, professionals, government officials, and the public, providing them with the essential components to understand and shape the development of 6G resilience.

Compressed Learning for Nanosurface Deficiency Recognition Using Angle-resolved Scatterometry Data

Aug 25, 2025

Abstract:Nanoscale manufacturing requires high-precision surface inspection to guarantee the quality of the produced nanostructures. For production environments, angle-resolved scatterometry offers a non-invasive and in-line compatible alternative to traditional surface inspection methods, such as scanning electron microscopy. However, angle-resolved scatterometry currently suffers from long data acquisition time. Our study addresses the issue of slow data acquisition by proposing a compressed learning framework for the accurate recognition of nanosurface deficiencies using angle-resolved scatterometry data. The framework uses the particle swarm optimization algorithm with a sampling scheme customized for scattering patterns. This combination allows the identification of optimal sampling points in scatterometry data that maximize the detection accuracy of five different levels of deficiency in ZnO nanosurfaces. The proposed method significantly reduces the amount of sampled data while maintaining a high accuracy in deficiency detection, even in noisy environments. Notably, by sampling only 1% of the data, the method achieves an accuracy of over 86%, which further improves to 94% when the sampling rate is increased to 6%. These results demonstrate a favorable balance between data reduction and classification performance. The obtained results also show that the compressed learning framework effectively identifies critical sampling areas.

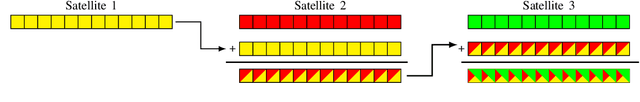

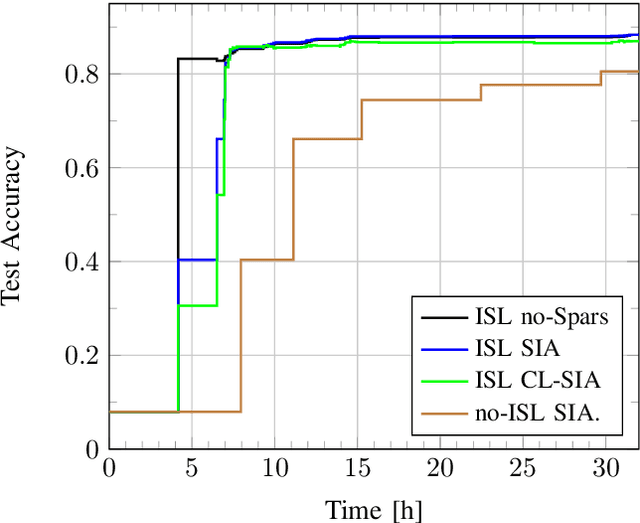

Sparse Incremental Aggregation in Satellite Federated Learning

Jan 20, 2025

Abstract:This paper studies Federated Learning (FL) in low Earth orbit (LEO) satellite constellations, where satellites are connected via intra-orbit inter-satellite links (ISLs) to their neighboring satellites. During the FL training process, satellites in each orbit forward gradients from nearby satellites, which are eventually transferred to the parameter server (PS). To enhance the efficiency of the FL training process, satellites apply in-network aggregation, referred to as incremental aggregation. In this work, the gradient sparsification methods from [1] are applied to satellite scenarios to improve bandwidth efficiency during incremental aggregation. The numerical results highlight an increase of over 4x in bandwidth efficiency as the number of satellites in the orbital plane increases.

Integrating Semantic Communication and Human Decision-Making into an End-to-End Sensing-Decision Framework

Dec 06, 2024

Abstract:As early as 1949, Weaver defined communication in a very broad sense to include all procedures by which one mind or technical system can influence another, thus establishing the idea of semantic communication. With the recent success of machine learning in expert assistance systems where sensed information is wirelessly provided to a human to assist task execution, the need to design effective and efficient communications has become increasingly apparent. In particular, semantic communication aims to convey the meaning behind the sensed information relevant for Human Decision-Making (HDM). Regarding the interplay between semantic communication and HDM, many questions remain, such as how to model the entire end-to-end sensing-decision-making process, how to design semantic communication for the HDM, and which information should be provided to the HDM. To address these questions, we propose to integrate semantic communication and HDM into one probabilistic end-to-end sensing-decision framework that bridges communications and psychology. In our interdisciplinary framework, we model the human through an HDM process, allowing us to explore how feature extraction from semantic communication can best support human decision-making. In this sense, our study provides new insights for the design/interaction of semantic communication with models of HDM. Our initial analysis shows how semantic communication can balance the level of detail with human cognitive capabilities while demanding less bandwidth, power, and latency.

Cooperative and Collaborative Multi-Task Semantic Communication for Distributed Sources

Nov 04, 2024

Abstract:In this paper, we explore a multi-task semantic communication (SemCom) system for distributed sources, extending the existing focus on collaborative single-task execution. We build on the cooperative multi-task processing introduced in [1], which divides the encoder into a common unit (CU) and multiple specific units (SUs). While earlier studies in multi-task SemCom focused on full observation settings, our research explores a more realistic case where only distributed partial observations are available, such as in a production line monitored by multiple sensing nodes. To address this, we propose a SemCom system that supports multi-task processing through cooperation on the transmitter side via a split structure and collaboration on the receiver side. We use an information-theoretic perspective with variational approximations for our end-to-end data-driven approach. Simulation results demonstrate that the proposed cooperative and collaborative multi-task (CCMT) SemCom system significantly improves task execution accuracy, particularly on complex datasets, provided that the noise introduced by the communication channel does not severely limit task performance. Our findings contribute to a more general SemCom framework capable of handling distributed sources and multiple tasks simultaneously, advancing the applicability of SemCom systems in real-world scenarios.

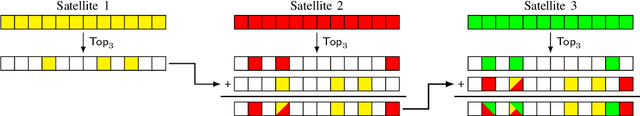

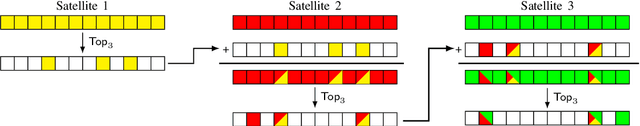

Sparse Incremental Aggregation in Multi-Hop Federated Learning

Jul 25, 2024

Abstract:This paper investigates federated learning (FL) in a multi-hop communication setup, such as in constellations with inter-satellite links. In this setup, part of the FL clients are responsible for forwarding other clients' results to the parameter server. Instead of using conventional routing, the communication efficiency can be improved significantly by using in-network model aggregation at each intermediate hop, known as incremental aggregation (IA). Prior works [1] have indicated diminishing gains for IA under gradient sparsification. Here we study this issue and propose several novel correlated sparsification methods for IA. Numerical results show that, for some of these algorithms, the full potential of IA is still available under sparsification without impairing convergence. We demonstrate a 15x improvement in communication efficiency over conventional routing and an 11x improvement over state-of-the-art (SoA) sparse IA.
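To make the idea of incremental aggregation under sparsification concrete, the following is a minimal sketch, assuming a single chain of clients and plain Top-k sparsification at every hop. It is not the correlated sparsification proposed in the paper; the function names `top_k` and `incremental_aggregate` and the toy gradients are hypothetical and chosen only for illustration.

```python
def top_k(vec, k):
    """Keep the k largest-magnitude entries of vec and zero out the rest."""
    order = sorted(range(len(vec)), key=lambda i: abs(vec[i]), reverse=True)
    keep = set(order[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(vec)]


def incremental_aggregate(local_grads, k):
    """Aggregate gradients hop by hop along a chain of clients.

    local_grads is ordered from the client farthest from the parameter
    server to the client adjacent to it. Each hop adds its own gradient
    to the incoming partial sum, sparsifies the result with Top-k, and
    forwards it, so every link carries at most k non-zero entries.
    """
    partial = [0.0] * len(local_grads[0])
    for grad in local_grads:
        summed = [p + g for p, g in zip(partial, grad)]
        partial = top_k(summed, k)
    return partial


# Toy example (assumed values): three clients, four parameters, k = 2.
grads = [
    [1.0, 0.0, 0.0, 0.25],
    [0.0, 2.0, 0.0, 0.25],
    [0.0, 0.0, 3.0, 0.25],
]
result = incremental_aggregate(grads, k=2)
print(result)  # [0.0, 2.0, 3.0, 0.0]
```

The dense sum of the toy gradients would be `[1.0, 2.0, 3.0, 0.75]`; the sketch drops the smaller entries at intermediate hops, which illustrates why naive per-hop sparsification can lose information that accumulates along the path.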

Throughput Requirements for RAN Functional Splits in 3D-Networks

May 24, 2024

Abstract:The rapid growth of non-terrestrial communication necessitates its integration with existing terrestrial networks, as highlighted in 3GPP Releases 16 and 17. This paper analyses the concept of functional splits in 3D-Networks. To manage this complex structure effectively, the adoption of a Radio Access Network (RAN) architecture with Functional Split (FS) offers advantages in flexibility, scalability, and cost-efficiency. RAN achieves this by disaggregating functionalities into three separate units. Analogous to the terrestrial network approach, 3GPP is extending this concept to non-terrestrial platforms as well. This work presents a general analysis of the required Fronthaul (FH) data rate on the feeder link between a non-terrestrial platform and the ground station. Each split option is a trade-off between FH data rate and the respective complexity. Since flying nodes face more limitations regarding on-board power consumption and complexity than terrestrial ones, we investigate the split options between the lower and higher physical layer.

Instantaneous Bandwidth Estimation from Level-Crossing Samples via LSTM-based Encoder-Decoder Architecture

May 14, 2024

Abstract:This paper presents an approach for instantaneous bandwidth estimation from level-crossing samples using a long short-term memory (LSTM) encoder-decoder architecture. Level-crossing sampling is a nonuniform sampling technique that is particularly useful for energy-efficient acquisition of signals with sparse spectra. Especially in combination with fully analog wireless sensor nodes, level-crossing sampling offers a viable alternative to traditional sampling methods. However, due to the nonuniform distribution of samples, reconstructing the original signal is a challenging task. One promising reconstruction approach is time-warping, where the local signal spectrum is taken into account. However, this requires an accurate estimate of the instantaneous bandwidth of the signal. In this paper, we show that applying neural networks (NNs) to the problem of estimating instantaneous bandwidth from level-crossing samples can improve the overall reconstruction accuracy. We conduct a comprehensive numerical analysis of the proposed approach and compare it to an intensity-based bandwidth estimation method from the literature.
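For readers unfamiliar with level-crossing sampling, the following is a minimal sketch, assuming a piecewise-linear signal given by `times` and `values` and a fixed set of amplitude levels. It only illustrates how the nonuniform samples arise; the function name `level_crossing_sample` is hypothetical, and the LSTM-based bandwidth estimator from the paper is not reproduced here.

```python
def level_crossing_sample(times, values, levels):
    """Return (time, level) pairs where a piecewise-linear signal crosses a level.

    A sample is recorded whenever the signal reaches or passes one of the
    given levels between two consecutive input points. Crossing instants
    are found by linear interpolation within the segment.
    """
    samples = []
    for i in range(len(times) - 1):
        t0, t1 = times[i], times[i + 1]
        v0, v1 = values[i], values[i + 1]
        if v0 == v1:
            continue  # flat segment: no new level is reached
        segment = []
        for level in levels:
            rising = v0 < level <= v1
            falling = v1 <= level < v0
            if rising or falling:
                t_cross = t0 + (level - v0) / (v1 - v0) * (t1 - t0)
                segment.append((t_cross, level))
        segment.sort()  # order crossings within the segment by time
        samples.extend(segment)
    return samples


# Toy example (assumed signal): a triangle that rises from 0 to 1 and falls back.
crossings = level_crossing_sample([0.0, 1.0, 2.0], [0.0, 1.0, 0.0],
                                  levels=[0.25, 0.5, 0.75])
print(crossings)
# [(0.25, 0.25), (0.5, 0.5), (0.75, 0.75), (1.25, 0.75), (1.5, 0.5), (1.75, 0.25)]
```

Because samples are produced only when the amplitude changes by a level step, slowly varying stretches of a signal yield few samples and fast stretches yield many, which is why the sample density carries information about the local bandwidth.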
