Nasrin Razmi

Sparse Incremental Aggregation in Satellite Federated Learning

Jan 20, 2025

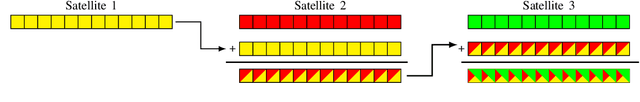

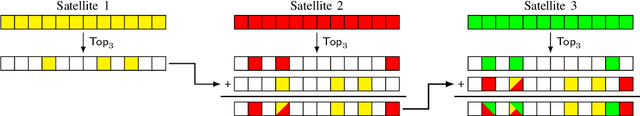

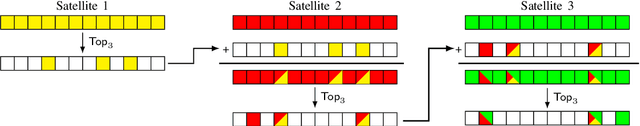

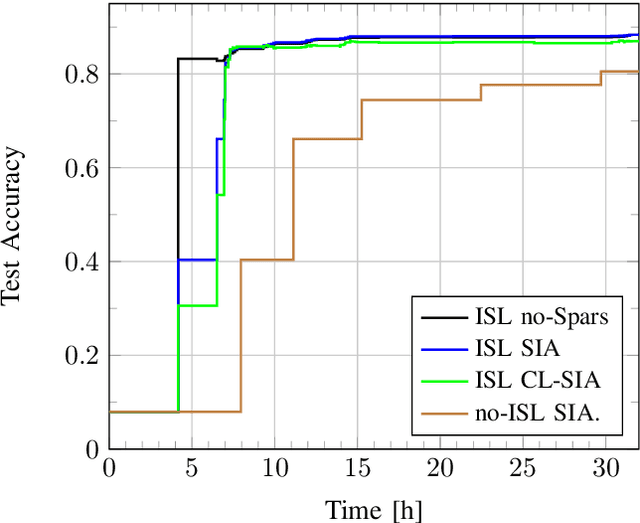

Abstract: This paper studies federated learning (FL) in low Earth orbit (LEO) satellite constellations, where satellites are connected via intra-orbit inter-satellite links (ISLs) to their neighboring satellites. During the FL training process, satellites in each orbit forward gradients from nearby satellites, which are eventually transferred to the parameter server (PS). To enhance the efficiency of the FL training process, satellites apply in-network aggregation, referred to as incremental aggregation. In this work, the gradient sparsification methods from [1] are applied to satellite scenarios to improve bandwidth efficiency during incremental aggregation. The numerical results highlight an increase of over 4x in bandwidth efficiency as the number of satellites in the orbital plane increases.
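To illustrate why sparsification and incremental aggregation interact, here is a minimal sketch in Python. It assumes top-k magnitude sparsification, which is a common choice but is not stated in the abstract; the helper names `top_k`, `add_sparse`, and `entries_per_hop` are hypothetical and do not come from the paper. The sketch only shows that when each satellite keeps its own k largest entries, the partial sum forwarded along the orbit can carry more and more entries at every hop.

```python
from typing import Dict, List


def top_k(gradient: List[float], k: int) -> Dict[int, float]:
    """Keep the k largest-magnitude entries as an {index: value} map.

    Assumption: top-k is used here purely for illustration.
    """
    order = sorted(range(len(gradient)), key=lambda i: abs(gradient[i]), reverse=True)
    return {i: gradient[i] for i in order[:k]}


def add_sparse(a: Dict[int, float], b: Dict[int, float]) -> Dict[int, float]:
    """Add two sparse vectors; the result is stored on the union of their indices."""
    out = dict(a)
    for i, v in b.items():
        out[i] = out.get(i, 0.0) + v
    return out


def entries_per_hop(gradients: List[List[float]], k: int) -> List[int]:
    """Number of stored entries in the partial sum sent at each hop.

    gradients[-1] belongs to the satellite farthest from the PS, so the
    chain is traversed from the last element to the first.
    """
    partial: Dict[int, float] = {}
    sizes = []
    for g in reversed(gradients):
        partial = add_sparse(partial, top_k(g, k))
        sizes.append(len(partial))
    return sizes


# Three satellites whose largest entries sit at different indices.
example = [
    [0.1, 0.0, 0.0, 5.0],
    [0.0, 4.0, 0.1, 0.0],
    [3.0, 0.0, 0.0, 0.2],
]
sizes = entries_per_hop(example, k=1)
```

In this toy example every satellite selects a different index, so the forwarded partial sum grows by one entry per hop even though each satellite contributes only k = 1 value.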

Sparse Incremental Aggregation in Multi-Hop Federated Learning

Jul 25, 2024

Abstract: This paper investigates federated learning (FL) in a multi-hop communication setup, such as in constellations with inter-satellite links. In this setup, some of the FL clients are responsible for forwarding other clients' results to the parameter server. Instead of using conventional routing, the communication efficiency can be improved significantly by using in-network model aggregation at each intermediate hop, known as incremental aggregation (IA). Prior works [1] have indicated diminishing gains for IA under gradient sparsification. Here we study this issue and propose several novel correlated sparsification methods for IA. Numerical results show that, for some of these algorithms, the full potential of IA is still available under sparsification without impairing convergence. We demonstrate a 15x improvement in communication efficiency over conventional routing and an 11x improvement over state-of-the-art (SoA) sparse IA.
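The difference between conventional routing and incremental aggregation can be sketched for a simple chain of clients. The code below is an illustration under stated assumptions, not the paper's method: it uses dense (unsparsified) gradients, a single line topology ending at the parameter server, and hypothetical function names `route_all` and `incremental_aggregation`. It counts how many scalar values cross the links in each case.

```python
from typing import List, Tuple

Vector = List[float]


def route_all(gradients: List[Vector]) -> Tuple[Vector, int]:
    """Conventional routing: each gradient is forwarded unchanged to the PS.

    gradients[0] belongs to the client adjacent to the PS, gradients[-1]
    to the farthest one. Returns the sum computed at the PS and the total
    number of scalars sent over all links.
    """
    dim = len(gradients[0])
    total = [0.0] * dim
    sent = 0
    for position, g in enumerate(gradients):
        hops = position + 1          # links this gradient has to cross
        sent += hops * dim
        total = [t + x for t, x in zip(total, g)]
    return total, sent


def incremental_aggregation(gradients: List[Vector]) -> Tuple[Vector, int]:
    """In-network aggregation: each hop adds its own gradient to the
    incoming partial sum and forwards a single vector."""
    dim = len(gradients[0])
    partial = [0.0] * dim
    sent = 0
    for g in reversed(gradients):    # start at the farthest client
        partial = [p + x for p, x in zip(partial, g)]
        sent += dim                  # one vector per link
    return partial, sent


grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
routed_sum, routed_cost = route_all(grads)
ia_sum, ia_cost = incremental_aggregation(grads)
```

Both functions deliver the same sum to the parameter server. For a chain of K clients with d-dimensional dense gradients, routing sends d·K(K+1)/2 scalars in this sketch while incremental aggregation sends d·K; how sparsification changes this picture is the subject of the paper and is not modeled here.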

Scheduling for On-Board Federated Learning with Satellite Clusters

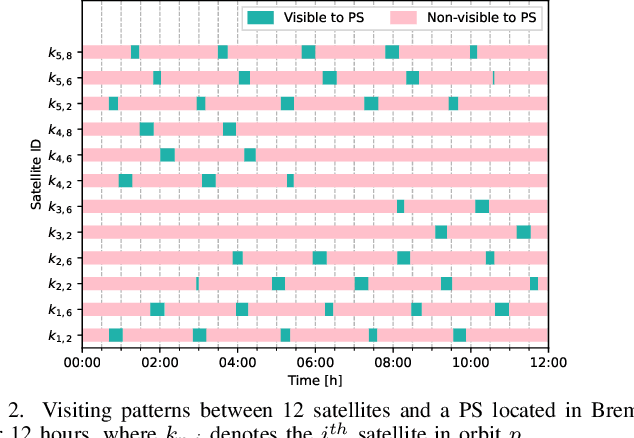

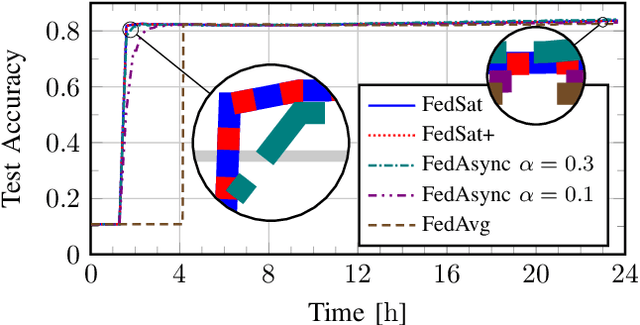

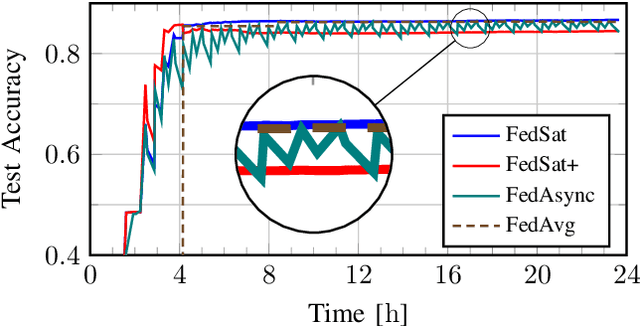

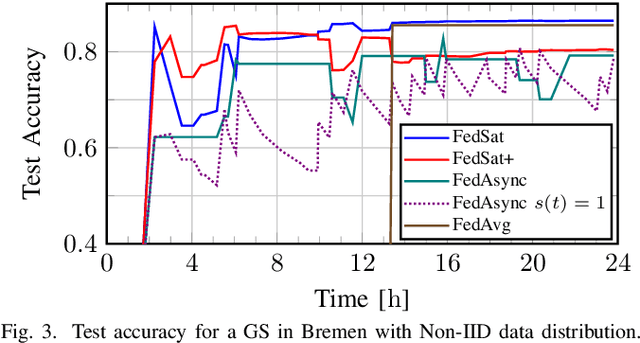

Feb 14, 2024

Abstract: Mega-constellations of small satellites have evolved into a source of massive amounts of valuable data. To manage this data efficiently, on-board federated learning (FL) enables satellites to train a machine learning (ML) model collaboratively without having to share the raw data. This paper introduces a scheme for scheduling on-board FL for constellations connected with intra-orbit inter-satellite links. The proposed scheme utilizes the predictable visibility pattern between satellites and the ground station (GS), both at the individual satellite level and cumulatively within the entire orbit, to mitigate intermittent connectivity and make the best use of the available time. To this end, two distinct schedulers are employed: one for coordinating the FL procedures among orbits, and the other for controlling those within each orbit. These two schedulers cooperatively determine the appropriate time to perform global updates at the GS and then allocate a suitable duration to satellites within each orbit for local training, proportional to the usable time until the next global update. This scheme leads to improved test accuracy within a shorter time.

Scheduling for Ground-Assisted Federated Learning in LEO Satellite Constellations

Jun 04, 2022

Abstract: Distributed training of machine learning models directly on satellites in low Earth orbit (LEO) is considered. Based on a federated learning (FL) algorithm specifically targeted at the unique challenges of the satellite scenario, we design a scheduler that exploits the predictability of visiting times between ground stations (GS) and satellites to reduce model staleness. Numerical experiments show that this can improve the convergence speed by a factor of three.

Federated Learning in Satellite Constellations

Jun 01, 2022

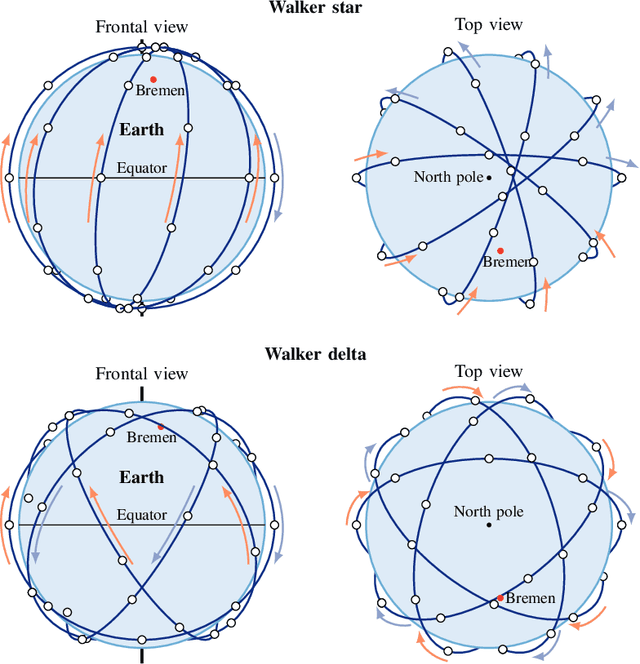

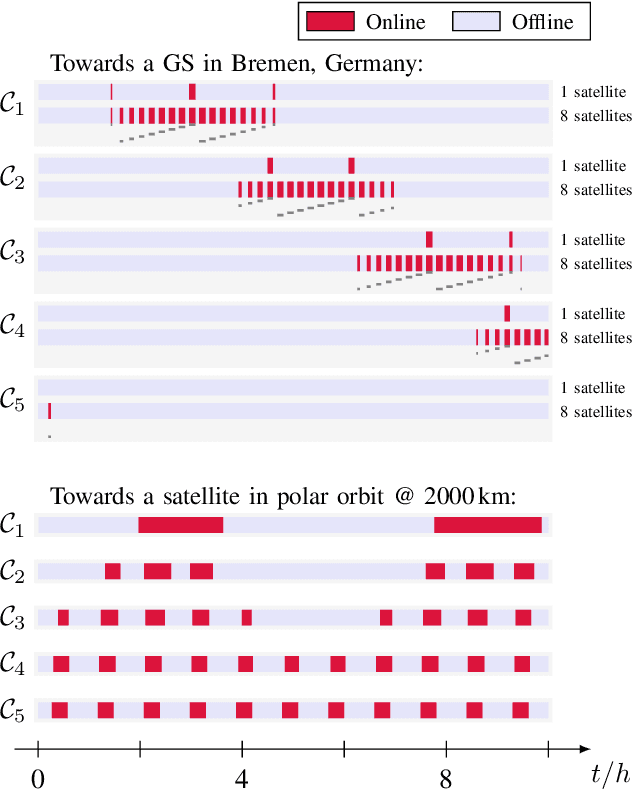

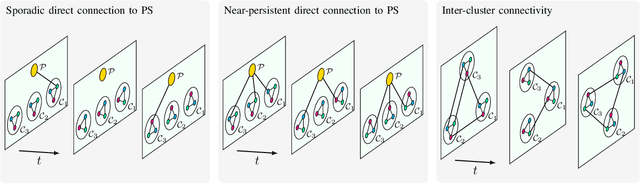

Abstract: Distributed machine learning (DML) results from the synergy between machine learning and connectivity. Federated learning (FL) is a prominent instance of DML in which intermittently connected mobile clients contribute to the training of a common learning model. This paper presents the new context brought to FL by satellite constellations where the connectivity patterns are significantly different from the ones assumed in terrestrial FL. We provide a taxonomy of different types of satellite connectivity relevant for FL and show how the distributed training process can overcome the slow convergence due to long offline times of clients by taking advantage of the predictable intermittency of the satellite communication links.

On-Board Federated Learning for Dense LEO Constellations

Nov 24, 2021

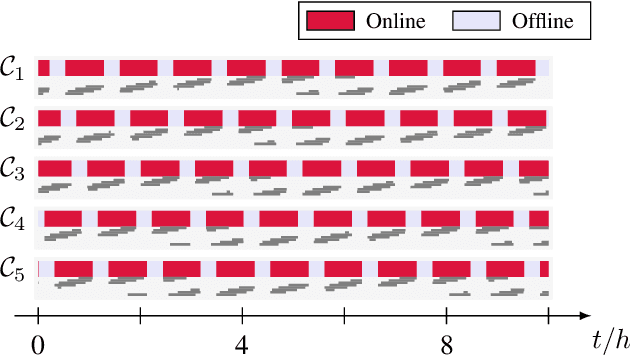

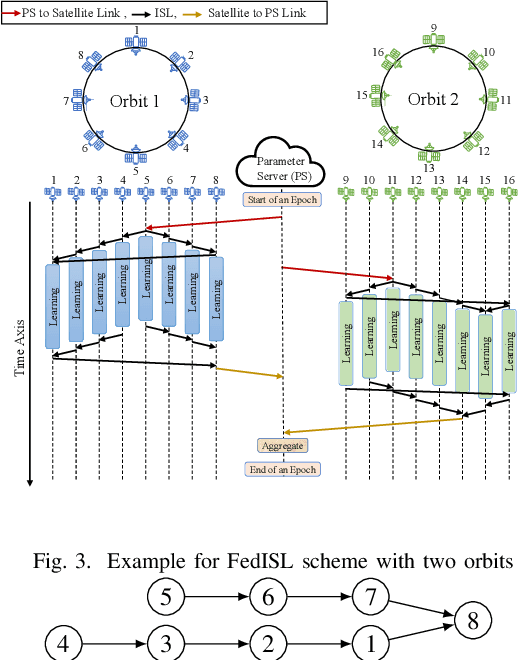

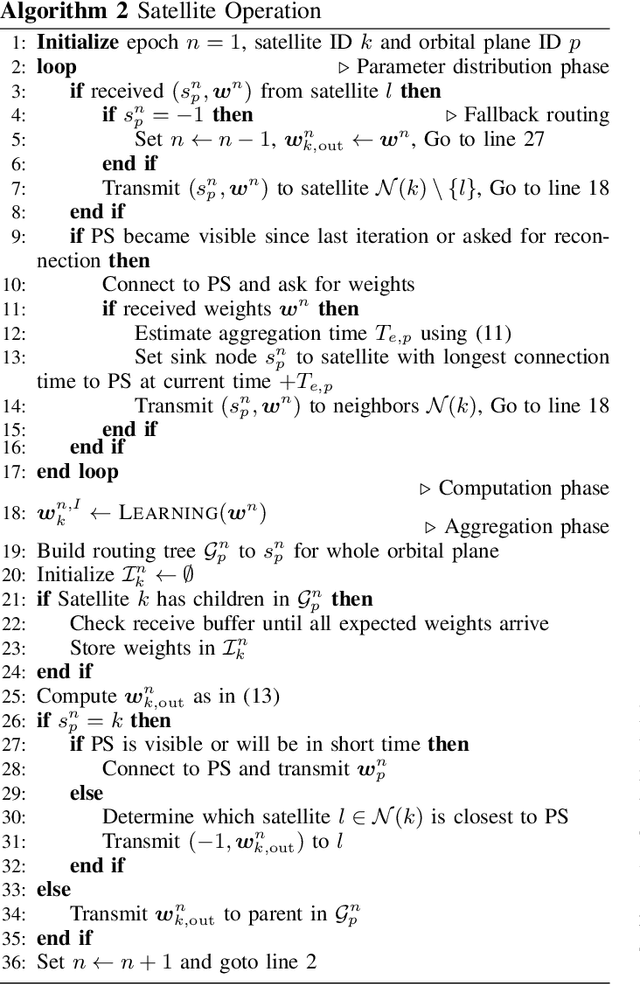

Abstract: Mega-constellations of small-size low Earth orbit (LEO) satellites are currently planned and deployed by various private and public entities. While global connectivity is the main rationale, these constellations also offer the potential to gather immense amounts of data, e.g., for Earth observation. Power and bandwidth constraints together with motives like privacy, limiting delay, or resiliency make it desirable to process this data directly within the constellation. We consider the implementation of on-board federated learning (FL) orchestrated by an out-of-constellation parameter server (PS) and propose a novel communication scheme tailored to support FL. It leverages intra-orbit inter-satellite links, the predictability of satellite movements, and partial aggregation to massively reduce the training time and communication costs. In particular, for a constellation with 40 satellites equally distributed among five low Earth orbits and the PS in medium Earth orbit, we observe a 29x speed-up in the training process time and an 8x traffic reduction at the PS over the baseline.

Ground-Assisted Federated Learning in LEO Satellite Constellations

Sep 03, 2021

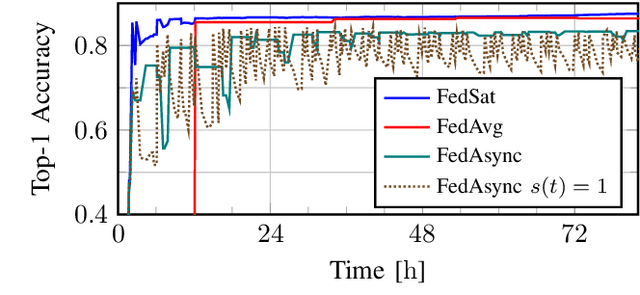

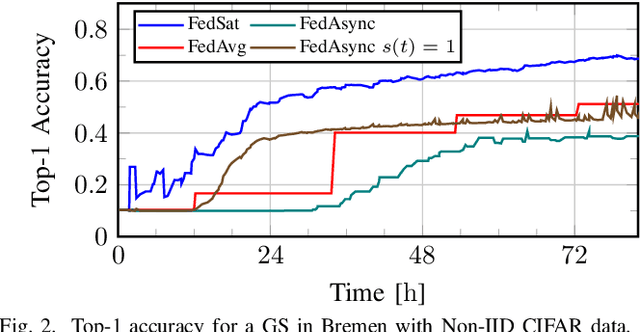

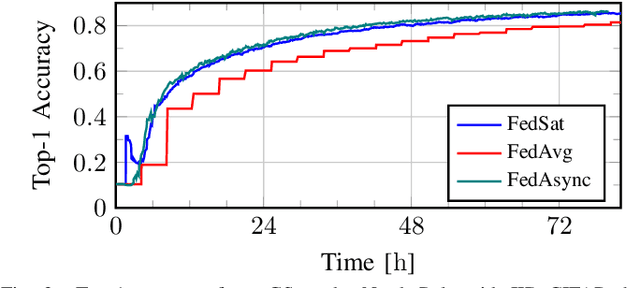

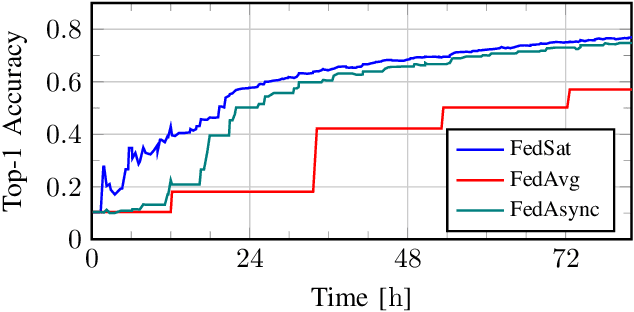

Abstract: In low Earth orbit (LEO) mega-constellations, there are relevant use cases, such as inference based on satellite imaging, in which a large number of satellites collaboratively train a machine learning model without sharing their local data sets. To address this problem, we propose a new set of algorithms based on federated learning (FL). Our approach differs substantially from the standard FL algorithms, as it takes into account the predictable connectivity patterns that are inherent to LEO constellations. Extensive numerical evaluations highlight the fast convergence speed and excellent asymptotic test accuracy of the proposed method. In particular, the achieved test accuracy is within 96% to 99.6% of the centralized solution, and the proposed algorithm has fewer hyperparameters to tune than state-of-the-art asynchronous FL methods.
