Federated Learning in Satellite Constellations

Jun 01, 2022

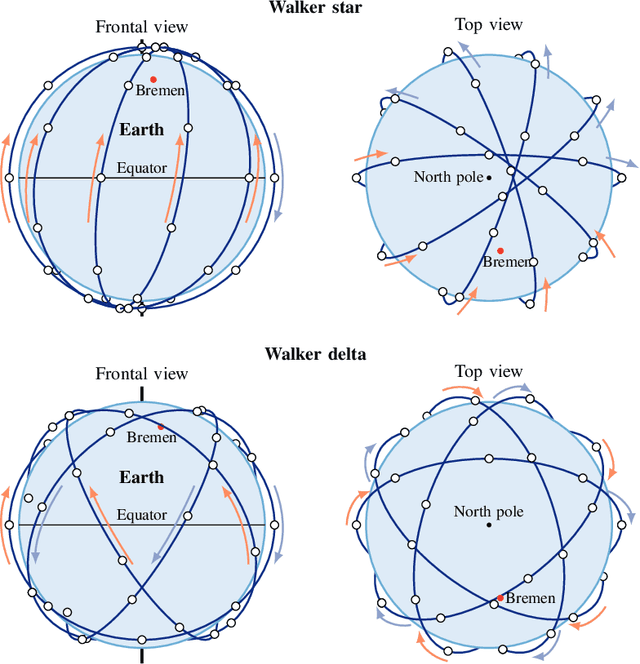

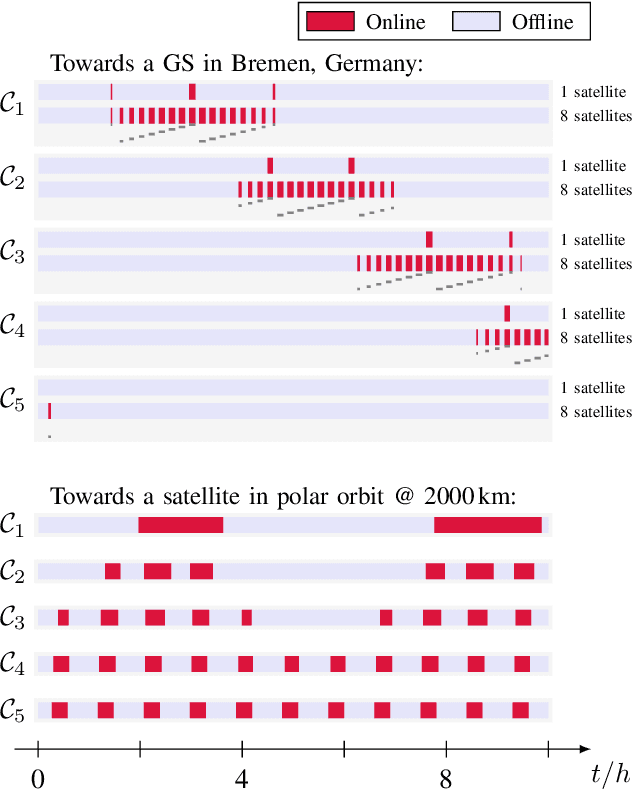

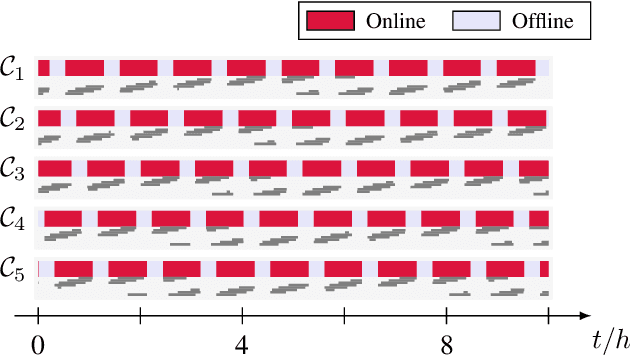

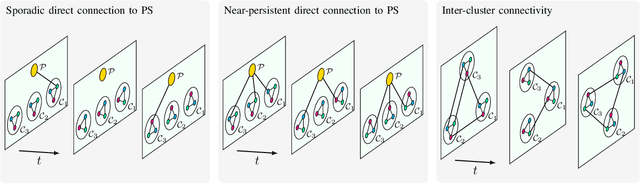

Distributed machine learning (DML) results from the synergy between machine learning and connectivity. Federated learning (FL) is a prominent instance of DML in which intermittently connected mobile clients contribute to the training of a common learning model. This paper presents the new context that satellite constellations bring to FL, where connectivity patterns differ significantly from those assumed in terrestrial FL. We provide a taxonomy of the types of satellite connectivity relevant to FL and show how the distributed training process can overcome the slow convergence caused by long client offline times by exploiting the predictable intermittency of satellite communication links.
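To make the idea of predictable intermittency concrete, here is a minimal sketch of federated averaging in which the server only aggregates updates from satellites that a known contact schedule marks as reachable. It is not the method of the paper: the periodic visibility model, the scalar mean-estimation task, and all names (`visible`, `local_update`, `run_schedule_aware_fl`, `period`, `window`, `offsets`) are assumptions chosen for illustration.

```python
from typing import List


def visible(t: int, period: int, window: int, offset: int) -> bool:
    """Hypothetical contact model: a satellite reaches the ground station
    during `window` consecutive slots of every `period` slots, shifted by
    `offset`. Real contact plans come from orbital mechanics."""
    return (t - offset) % period < window


def local_update(w: float, data: List[float], lr: float = 0.1, steps: int = 5) -> float:
    """A few gradient-descent steps on the mean squared error (w - x)^2,
    standing in for on-board training of a real model."""
    for _ in range(steps):
        grad = sum(2.0 * (w - x) for x in data) / len(data)
        w -= lr * grad
    return w


def run_schedule_aware_fl(
    datasets: List[List[float]],
    offsets: List[int],
    period: int = 10,
    window: int = 2,
    horizon: int = 40,
) -> float:
    """Run FedAvg-style rounds, one per time slot, over the satellites
    that the (assumed known) schedule marks as visible in that slot."""
    w_global = 0.0
    for t in range(horizon):
        ready = [k for k in range(len(datasets))
                 if visible(t, period, window, offsets[k])]
        if not ready:
            continue  # no contact in this slot, so the global model is unchanged
        updates = [local_update(w_global, datasets[k]) for k in ready]
        sizes = [len(datasets[k]) for k in ready]
        # Weighted average by local dataset size, as in standard FedAvg.
        w_global = sum(n * u for n, u in zip(sizes, updates)) / sum(sizes)
    return w_global


if __name__ == "__main__":
    data = [[1.0, 1.0], [3.0, 3.0], [5.0, 5.0]]
    print(run_schedule_aware_fl(data, offsets=[0, 3, 6]))
```

Because the three satellites in the usage example are never visible at the same time, the global model drifts toward whichever satellite was contacted most recently. That bias is one symptom of the intermittent connectivity the abstract describes, and a schedule-aware scheme would aim to correct for it.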
