Archith J. Bency

Beyond Spatial Auto-Regressive Models: Predicting Housing Prices with Satellite Imagery

Oct 16, 2016

Abstract: When modeling geo-spatial data, it is critical to capture spatial correlations for achieving high accuracy. Spatial Auto-Regression (SAR) is a common tool used to model such data, where the spatial contiguity matrix (W) encodes the spatial correlations. However, the efficacy of SAR is limited by two factors. First, it depends on the choice of contiguity matrix, which is typically not learnt from data but is instead assumed to be known a priori. Second, it assumes that the observations can be explained by linear models. In this paper, we propose a Convolutional Neural Network (CNN) framework to model geo-spatial data (specifically housing prices) and to learn the spatial correlations automatically. We show that neighborhood information embedded in satellite imagery can be leveraged to achieve the desired spatial smoothing. An additional upside of our framework is the relaxation of the linearity assumption on the data. Specific challenges we tackle while implementing our framework include: (i) how much of the neighborhood is relevant while estimating housing prices, (ii) what is the right approach to capture multiple resolutions of satellite imagery, and (iii) what other data sources can help improve the estimation of spatial correlations. We demonstrate a marked improvement of 57% on top of the SAR baseline through the use of features from deep neural networks for the cities of London, Birmingham and Liverpool.
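For readers unfamiliar with the SAR baseline mentioned in the abstract, the following is a minimal sketch of the standard spatial-lag form, y = ρWy + Xβ + ε, solved as y = (I − ρW)⁻¹(Xβ + ε). It is not the paper's implementation: the chain-shaped neighborhood, the function names, and the values of `rho`, `beta` and the noise scale are all assumptions chosen for illustration.

```python
import numpy as np

def row_normalized_chain_contiguity(n):
    """Hypothetical contiguity matrix W: location i neighbors i-1 and i+1.

    Rows are normalized to sum to 1, a common convention for SAR models.
    Requires n >= 2 so that every location has at least one neighbor.
    """
    W = np.zeros((n, n))
    for i in range(n - 1):
        W[i, i + 1] = 1.0
        W[i + 1, i] = 1.0
    return W / W.sum(axis=1, keepdims=True)

def sar_reduced_form(W, X, beta, rho, eps):
    """Solve y = rho * W @ y + X @ beta + eps for y."""
    n = W.shape[0]
    return np.linalg.solve(np.eye(n) - rho * W, X @ beta + eps)

# Illustrative values only (assumptions, not taken from the paper).
rng = np.random.default_rng(0)
n = 5
W = row_normalized_chain_contiguity(n)
X = rng.normal(size=(n, 2))          # two made-up covariates per location
beta = np.array([1.0, -0.5])
eps = rng.normal(scale=0.1, size=n)
y = sar_reduced_form(W, X, beta, rho=0.4, eps=eps)
```

Note that W is fixed by hand here, which is exactly the limitation the abstract points out: the neighborhood structure is assumed rather than learnt from data.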

Fast Object Localization Using a CNN Feature Map Based Multi-Scale Search

Apr 12, 2016

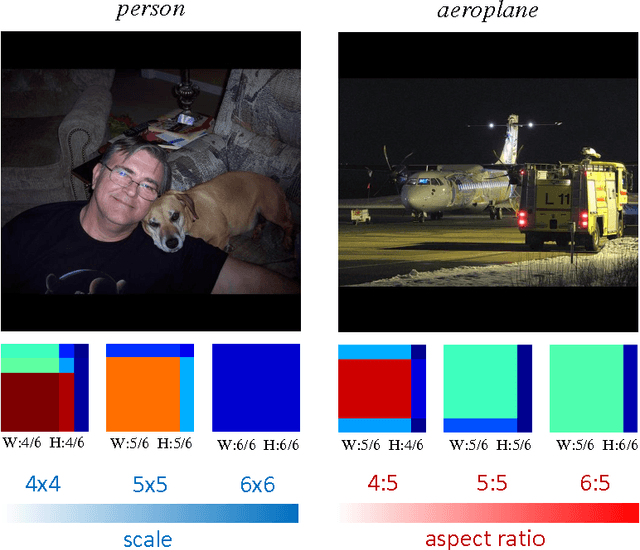

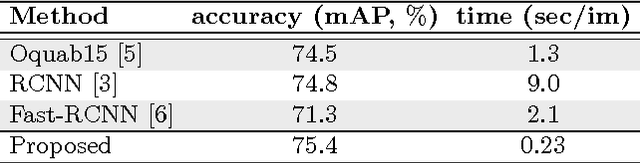

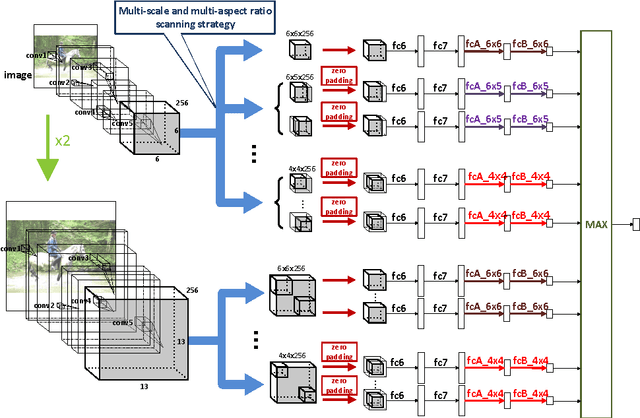

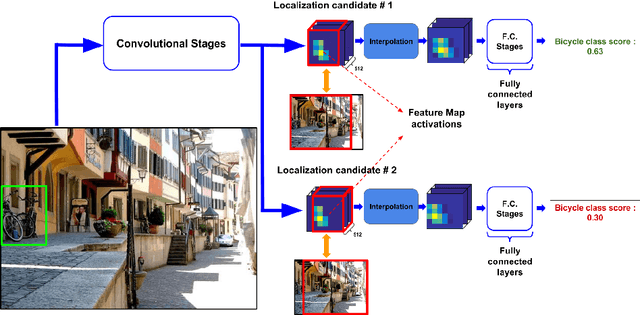

Abstract: Object localization is an important task in computer vision but requires a large amount of computational power, mainly due to an exhaustive multi-scale search on the input image. In this paper, we describe a near real-time multi-scale search on a deep CNN feature map that does not use region proposals. The proposed approach effectively exploits local semantic information preserved in the feature map of the outermost convolutional layer. A multi-scale search is performed on the feature map by processing all the sub-regions of different sizes using separate expert units of fully connected layers. Each expert unit receives as input local semantic features only from the corresponding sub-regions of a specific geometric shape. Therefore, it contains more nearly optimal parameters tailored to the corresponding shape. This multi-scale and multi-aspect ratio scanning strategy can effectively localize a potential object of an arbitrary size. The proposed approach is fast and able to localize objects of interest with a frame rate of 4 fps while providing improved detection performance over the state-of-the-art on the PASCAL VOC 12 and MSCOCO data sets.
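The scanning strategy described above can be illustrated with a small sketch that enumerates every sub-region of a feature map for a set of window shapes and groups the sub-regions by shape, so that each group could be handed to its own expert unit. The feature-map size, the list of shapes, and the function name are hypothetical; the abstract does not specify them, and the expert units themselves (fully connected layers) are not modeled here.

```python
from collections import defaultdict

def enumerate_subwindows(fm_height, fm_width, shapes):
    """Group all sub-windows of a feature map by window shape.

    Returns a dict mapping each (h, w) shape to a list of (top, left)
    positions at which a window of that shape fits inside the map.
    """
    windows_by_shape = defaultdict(list)
    for h, w in shapes:
        # Slide the window over every position where it fits entirely.
        for top in range(fm_height - h + 1):
            for left in range(fm_width - w + 1):
                windows_by_shape[(h, w)].append((top, left))
    return dict(windows_by_shape)

# Hypothetical 3x3 feature map with square and non-square window shapes.
shapes = [(1, 1), (2, 2), (3, 3), (2, 3)]
windows = enumerate_subwindows(3, 3, shapes)
total = sum(len(positions) for positions in windows.values())
```

Because the search runs on the feature map rather than the input image, the convolutional layers only need to be evaluated once per image; only the per-window processing is repeated.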

Weakly Supervised Localization using Deep Feature Maps

Mar 29, 2016

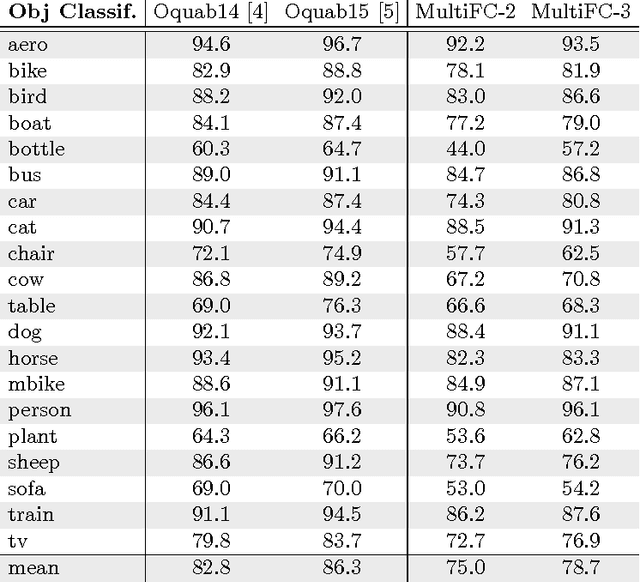

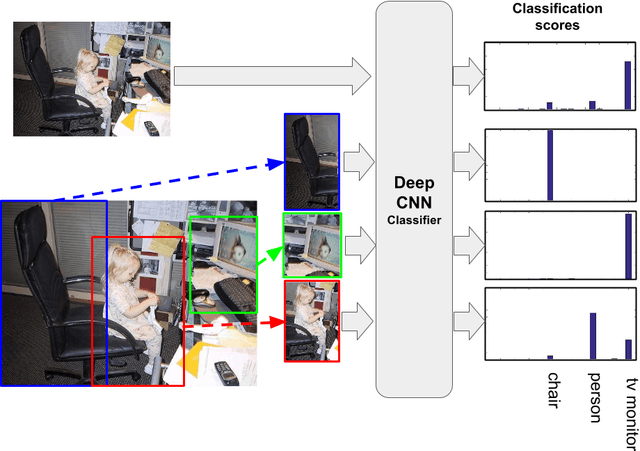

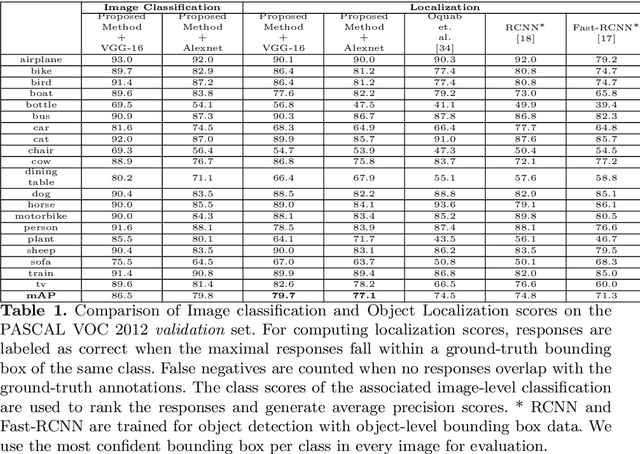

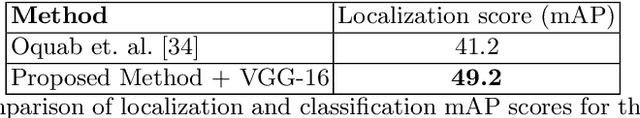

Abstract: Object localization is an important computer vision problem with a variety of applications. The lack of large-scale object-level annotations and the relative abundance of image-level labels make a compelling case for weak supervision in the object localization task. Deep Convolutional Neural Networks are a class of state-of-the-art methods for the related problem of object recognition. In this paper, we describe a novel object localization algorithm which uses classification networks trained on only image labels. This weakly supervised method leverages local spatial and semantic patterns captured in the convolutional layers of classification networks. We propose an efficient beam-search-based approach to detect and localize multiple objects in images. The proposed method significantly outperforms the state-of-the-art on standard object localization datasets, with an 8-point increase in mAP scores.

Search Tracker: Human-derived object tracking in-the-wild through large-scale search and retrieval

Feb 05, 2016

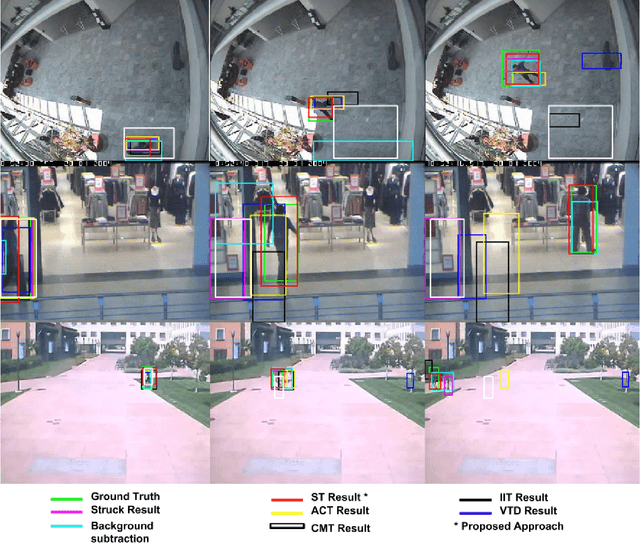

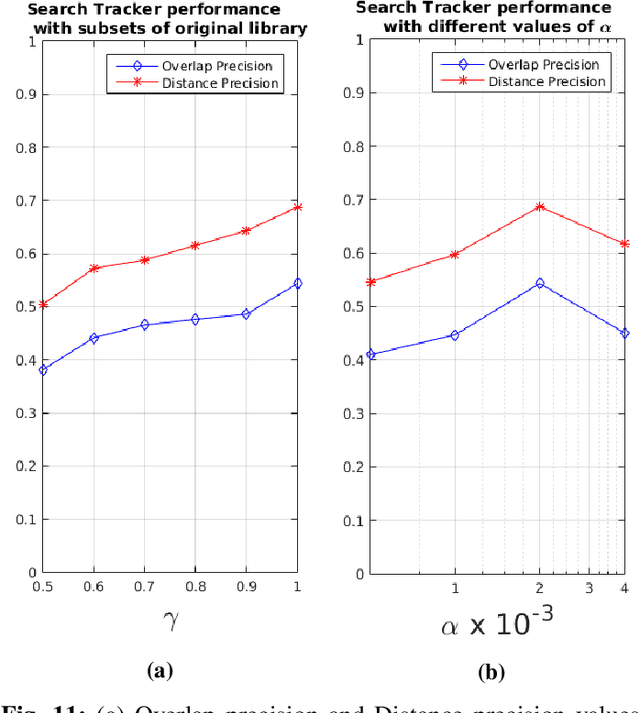

Abstract: Humans use context and scene knowledge to easily localize moving objects in conditions of complex illumination changes, scene clutter and occlusions. In this paper, we present a method to leverage human knowledge in the form of annotated video libraries in a novel search-and-retrieval-based setting to track objects in unseen video sequences. For every video sequence, a document that represents motion information is generated. Documents of the unseen video are queried against the library at multiple scales to find videos with similar motion characteristics. This provides us with coarse localization of objects in the unseen video. We further adapt these retrieved object locations to the new video using an efficient warping scheme. The proposed method is validated on in-the-wild video surveillance datasets, where we outperform state-of-the-art appearance-based trackers. We also introduce a new challenging dataset with complex object appearance changes.
