April Yi Wang

AI and My Values: User Perceptions of LLMs' Ability to Extract, Embody, and Explain Human Values from Casual Conversations

Jan 30, 2026

Abstract:Does AI understand human values? While this remains an open philosophical question, we take a pragmatic stance by introducing VAPT, the Value-Alignment Perception Toolkit, for studying how LLMs reflect people's values and how people judge those reflections. 20 participants texted a human-like chatbot over a month, then completed a 2-hour interview with our toolkit evaluating AI's ability to extract (pull details regarding), embody (make decisions guided by), and explain (provide proof of) human values. 13 participants left our study convinced that AI can understand human values. Participants found the experience insightful for self-reflection and found themselves getting persuaded by the AI's reasoning. Thus, we warn about "weaponized empathy": a potentially dangerous design pattern that may arise in value-aligned, yet welfare-misaligned AI. VAPT offers concrete artifacts and design implications to evaluate and responsibly build value-aligned conversational agents with transparency, consent, and safeguards as AI grows more capable and human-like into the future.

Does My Chatbot Have an Agenda? Understanding Human and AI Agency in Human-Human-like Chatbot Interaction

Jan 30, 2026

Abstract:AI chatbots are shifting from tools to companions. This raises critical questions about agency: who drives conversations and sets boundaries in human-AI chatrooms? We report a month-long longitudinal study with 22 adults who chatted with Day, an LLM companion we built, followed by a semi-structured interview with post-hoc elicitation of notable moments, cross-participant chat reviews, and a 'strategy reveal' disclosing Day's vertical (depth-seeking) vs. horizontal (breadth-seeking) modes. We discover that agency in human-AI chatrooms is an emergent, shared experience: as participants claimed agency by setting boundaries and providing feedback, and the AI was perceived to steer intentions and drive execution, control shifted and was co-constructed turn-by-turn. We introduce a 3-by-5 framework mapping who (human, AI, hybrid) x agency action (Intention, Execution, Adaptation, Delimitation, Negotiation), modulated by individual and environmental factors. Ultimately, we argue for translucent design (i.e. transparency-on-demand), spaces for agency negotiation, and guidelines toward agency-aware conversational AI.

Enhancing Debugging Skills with AI-Powered Assistance: A Real-Time Tool for Debugging Support

Jan 05, 2026

Abstract:Debugging is a crucial skill in programming education and software development, yet it is often overlooked in CS curricula. To address this, we introduce an AI-powered debugging assistant integrated into an IDE. It offers real-time support by analyzing code, suggesting breakpoints, and providing contextual hints. Using RAG with LLMs, program slicing, and custom heuristics, it enhances efficiency by minimizing LLM calls and improving accuracy. A three-level evaluation - technical analysis, UX study, and classroom tests - highlights its potential for teaching debugging.

Do It For Me vs. Do It With Me: Investigating User Perceptions of Different Paradigms of Automation in Copilots for Feature-Rich Software

Apr 22, 2025

Abstract:Large Language Model (LLM)-based in-application assistants, or copilots, can automate software tasks, but users often prefer learning by doing, raising questions about the optimal level of automation for an effective user experience. We investigated two automation paradigms by designing and implementing a fully automated copilot (AutoCopilot) and a semi-automated copilot (GuidedCopilot) that automates trivial steps while offering step-by-step visual guidance. In a user study (N=20) across data analysis and visual design tasks, GuidedCopilot outperformed AutoCopilot in user control, software utility, and learnability, especially for exploratory and creative tasks, while AutoCopilot saved time for simpler visual tasks. A follow-up design exploration (N=10) enhanced GuidedCopilot with task- and state-aware features, including in-context preview clips and adaptive instructions. Our findings highlight the critical role of user control and tailored guidance in designing the next generation of copilots that enhance productivity, support diverse skill levels, and foster deeper software engagement.

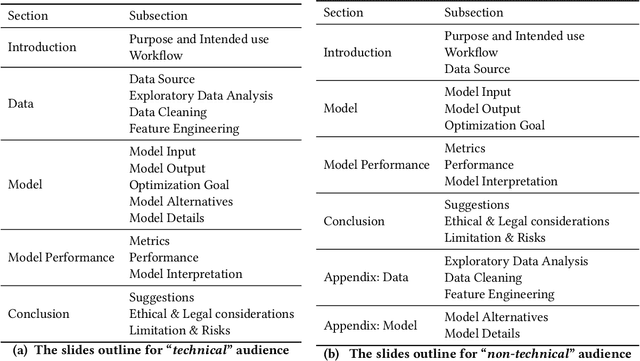

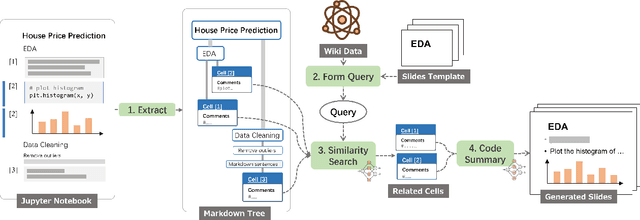

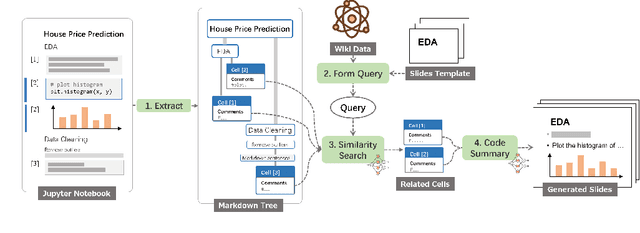

Telling Stories from Computational Notebooks: AI-Assisted Presentation Slides Creation for Presenting Data Science Work

Mar 21, 2022

Abstract:Creating presentation slides is a critical but time-consuming task for data scientists. While researchers have proposed many AI techniques to lift data scientists' burden on data preparation and model selection, few have targeted the presentation creation task. Based on the needs identified from a formative study, this paper presents NB2Slides, an AI system that helps users compose presentations of their data science work. NB2Slides uses deep learning methods as well as example-based prompts to generate slides from computational notebooks, and takes users' input (e.g., audience background) to structure the slides. NB2Slides also provides an interactive visualization that links the slides with the notebook to help users further edit the slides. A follow-up user evaluation with 12 data scientists shows that participants believed NB2Slides can improve efficiency and reduce the complexity of creating slides. Yet, participants questioned the future of full automation and suggested a human-AI collaboration paradigm.

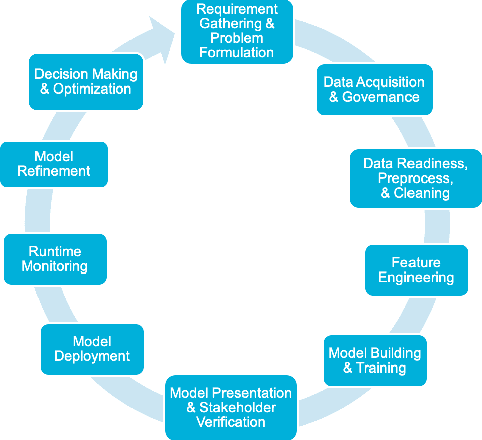

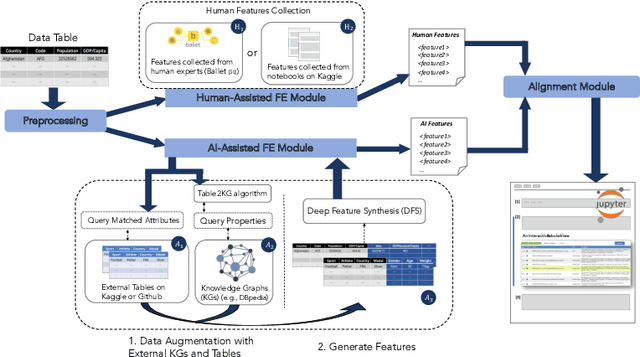

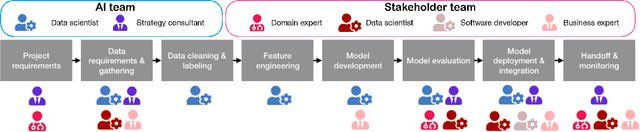

Human-Centered AI for Data Science: A Systematic Approach

Oct 03, 2021

Abstract:Human-Centered AI (HCAI) refers to the research effort that aims to design and implement AI techniques to support various human tasks, while taking human needs into consideration and preserving human control. In this short position paper, we illustrate how we approach HCAI using a series of research projects around Data Science (DS) work as a case study. The AI techniques built for supporting DS work are collectively referred to as AutoML systems, and their goals are to automate some parts of the DS workflow. We illustrate a three-step systematic research approach (i.e., explore, build, and integrate) and four practical ways of implementation for HCAI systems. We argue that our work is a cornerstone towards the ultimate future of Human-AI Collaboration for DS and beyond, where AI and humans can take complementary and indispensable roles to achieve a better outcome and experience.

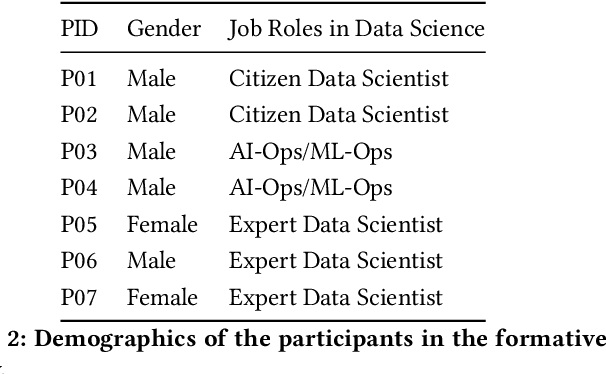

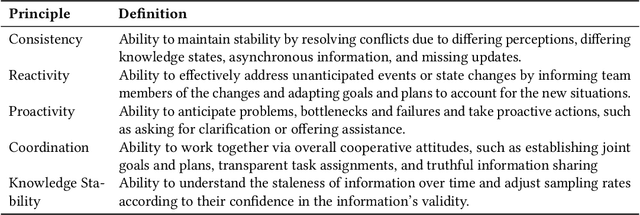

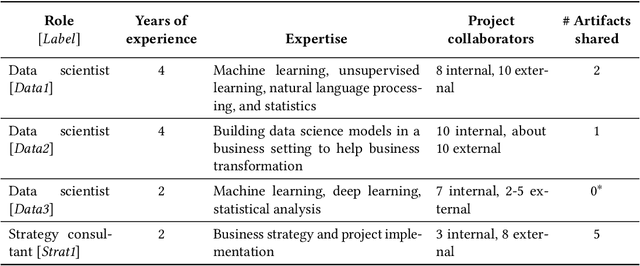

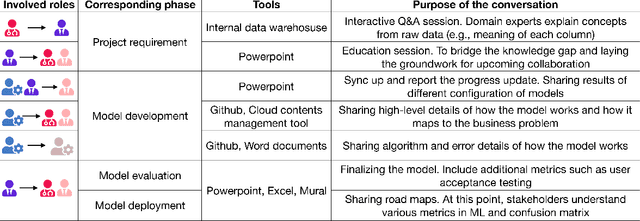

How AI Developers Overcome Communication Challenges in a Multidisciplinary Team: A Case Study

Jan 13, 2021

Abstract:The development of AI applications is a multidisciplinary effort, involving multiple roles collaborating with the AI developers, an umbrella term we use to include data scientists and other AI-adjacent roles on the same team. During these collaborations, there is a knowledge mismatch between AI developers, who are skilled in data science, and external stakeholders who are typically not. This difference leads to communication gaps, and the onus falls on AI developers to explain data science concepts to their collaborators. In this paper, we report on a study including analyses of both interviews with AI developers and artifacts they produced for communication. Using the analytic lens of shared mental models, we report on the types of communication gaps that AI developers face, how AI developers communicate across disciplinary and organizational boundaries, and how they simultaneously manage issues regarding trust and expectations.
