Antonis Kakas

Explainable AI applications in the Medical Domain: a systematic review

Aug 10, 2023

Abstract:Artificial Intelligence in Medicine has made significant progress with emerging applications in medical imaging, patient care, and other areas. While these applications have proven successful in retrospective studies, very few of them were applied in practice. The field of Medical AI faces various challenges, in terms of building user trust, complying with regulations, and using data ethically. Explainable AI (XAI) aims to enable humans to understand AI and trust its results. This paper presents a literature review on the recent developments of XAI solutions for medical decision support, based on a representative sample of 198 articles published in recent years. The systematic synthesis of the relevant articles resulted in several findings: (1) model-agnostic XAI techniques were mostly employed in these solutions, (2) deep learning models are utilized more than other types of machine learning models, (3) explainability was applied to promote trust, but very few works reported the physicians' participation in the loop, (4) visual and interactive user interfaces are more useful in understanding the explanation and the recommendation of the system. More research is needed in collaboration between medical and AI experts, which could guide the development of suitable frameworks for the design, implementation, and evaluation of XAI solutions in medicine.

Computational Argumentation and Cognition

Nov 12, 2021

Abstract:This paper examines the interdisciplinary research question of how to integrate Computational Argumentation, as studied in AI, with Cognition, as can be found in Cognitive Science, Linguistics, and Philosophy. It stems from the work of the 1st Workshop on Computational Argumentation and Cognition (COGNITAR), which was organized as part of the 24th European Conference on Artificial Intelligence (ECAI), and took place virtually on September 8th, 2020. The paper begins with a brief presentation of the scientific motivation for the integration of Computational Argumentation and Cognition, arguing that within the context of Human-Centric AI the use of theory and methods from Computational Argumentation for the study of Cognition can be a promising avenue to pursue. A short summary of each of the workshop presentations is given showing the wide spectrum of problems where the synthesis of the theory and methods of Computational Argumentation with other approaches that study Cognition can be applied. The paper presents the main problems and challenges in the area that would need to be addressed, both at the scientific level but also at the epistemological level, particularly in relation to the synthesis of ideas and approaches from the various disciplines involved.

Abduction and Argumentation for Explainable Machine Learning: A Position Survey

Oct 24, 2020

Abstract:This paper presents Abduction and Argumentation as two principled forms for reasoning, and fleshes out the fundamental role that they can play within Machine Learning. It reviews the state-of-the-art work over the past few decades on the link of these two reasoning forms with machine learning work, and from this it elaborates on how the explanation-generating role of Abduction and Argumentation makes them naturally-fitting mechanisms for the development of Explainable Machine Learning and AI systems. Abduction contributes towards this goal by facilitating learning through the transformation, preparation, and homogenization of data. Argumentation, as a conservative extension of classical deductive reasoning, offers a flexible prediction and coverage mechanism for learning -- an associated target language for learned knowledge -- that explicitly acknowledges the need to deal, in the context of learning, with uncertain, incomplete and inconsistent data that are incompatible with any classically-represented logical theory.

Cognitive Argumentation and the Suppression Task

Feb 24, 2020

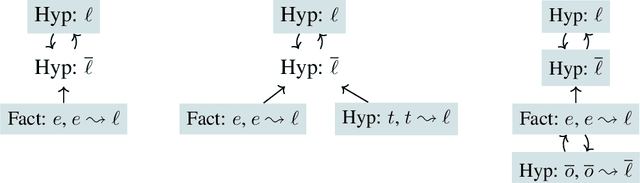

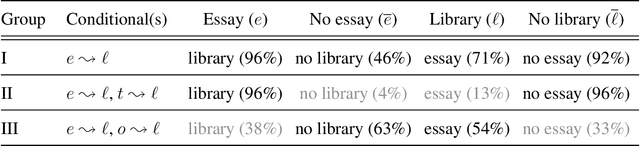

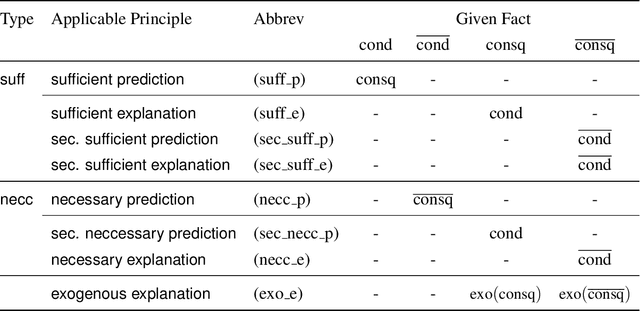

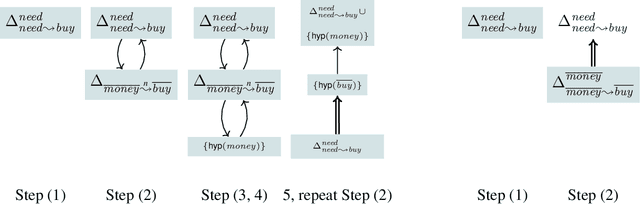

Abstract:This paper addresses the challenge of modeling human reasoning, within a new framework called Cognitive Argumentation. This framework rests on the assumption that human logical reasoning is inherently a process of dialectic argumentation and aims to develop a cognitive model for human reasoning that is computational and implementable. To give logical reasoning a human cognitive form the framework relies on cognitive principles, based on empirical and theoretical work in Cognitive Science, to suitably adapt a general and abstract framework of computational argumentation from AI. The approach of Cognitive Argumentation is evaluated with respect to Byrne's suppression task, where the aim is not only to capture the suppression effect between different groups of people but also to account for the variation of reasoning within each group. Two main cognitive principles are particularly important to capture human conditional reasoning that explain the participants' responses: (i) the interpretation of a condition within a conditional as sufficient and/or necessary and (ii) the mode of reasoning either as predictive or explanatory. We argue that Cognitive Argumentation provides a coherent and cognitively adequate model for human conditional reasoning that allows a natural distinction between definite and plausible conclusions, exhibiting the important characteristics of context-sensitive and defeasible reasoning.

Non-Monotonic Reasoning and Story Comprehension

Jul 14, 2014

Abstract:This paper develops a Reasoning about Actions and Change framework integrated with Default Reasoning, suitable as a Knowledge Representation and Reasoning framework for Story Comprehension. The proposed framework, which is guided strongly by existing know-how from the Psychology of Reading and Comprehension, is based on the theory of argumentation from AI. It uses argumentation to capture appropriate solutions to the frame, ramification and qualification problems and generalizations of these problems required for text comprehension. In this first part of the study the work concentrates on the central problem of integration (or elaboration) of the explicit information from the narrative in the text with the implicit (in the reader's mind) common sense world knowledge pertaining to the topic(s) of the story given in the text. We also report on our empirical efforts to gather background common sense world knowledge used by humans when reading a story and to evaluate, through a prototype system, the ability of our approach to capture both the majority and the variability of understanding of a story by the human readers in the experiments.

Computational Logic Foundations of KGP Agents

Jan 15, 2014

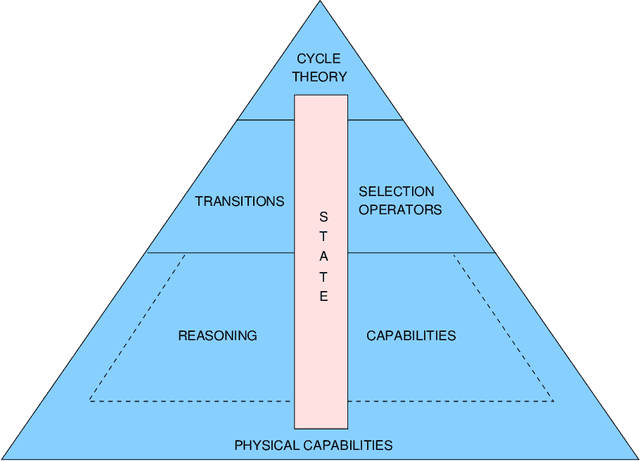

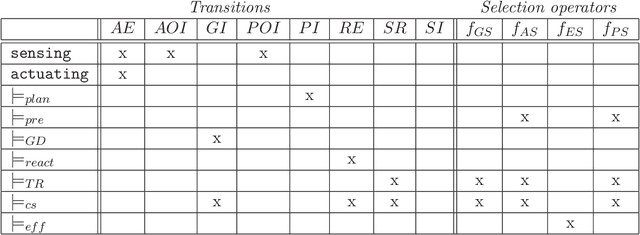

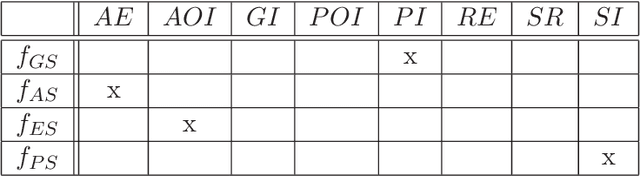

Abstract:This paper presents the computational logic foundations of a model of agency called the KGP (Knowledge, Goals and Plan) model. This model allows the specification of heterogeneous agents that can interact with each other, and can exhibit both proactive and reactive behaviour allowing them to function in dynamic environments by adjusting their goals and plans when changes happen in such environments. KGP provides a highly modular agent architecture that integrates a collection of reasoning and physical capabilities, synthesised within transitions that update the agent's state in response to reasoning, sensing and acting. Transitions are orchestrated by cycle theories that specify the order in which transitions are executed while taking into account the dynamic context and agent preferences, as well as selection operators for providing inputs to transitions.

Modeling Complex Domains of Actions and Change

Jul 13, 2002

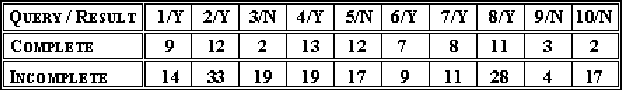

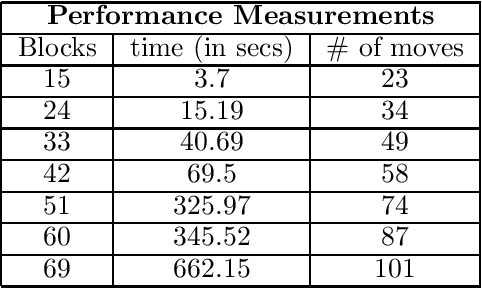

Abstract:This paper studies the problem of modeling complex domains of actions and change within high-level action description languages. We investigate two main issues of concern: (a) can we represent complex domains that capture together different problems such as ramifications, non-determinism and concurrency of actions, at a high-level, close to the given natural ontology of the problem domain and (b) what features of such a representation can affect, and how, its computational behaviour. The paper describes the main problems faced in this representation task and presents the results of an empirical study, carried out through a series of controlled experiments, to analyze the computational performance of reasoning in these representations. The experiments compare different representations obtained, for example, by changing the basic ontology of the domain or by varying the degree of use of indirect effect laws through domain constraints. This study has helped to expose the main sources of computational difficulty in the reasoning and suggest some methodological guidelines for representing complex domains. Although our work has been carried out within one particular high-level description language, we believe that the results, especially those that relate to the problems of representation, are independent of the specific modeling language.

Information Integration and Computational Logic

Jun 11, 2001

Abstract:Information Integration is a young and exciting field with enormous research and commercial significance in the new world of the Information Society. It stands at the crossroads of Databases and Artificial Intelligence, requiring novel techniques that bring together different methods from these fields. Information from disparate heterogeneous sources, often with no a-priori common schema, needs to be synthesized in a flexible, transparent and intelligent way in order to respond to the demands of a query, thus enabling a more informed decision by the user or application program. The field, although relatively young, has already found many practical applications, particularly for integrating information over the World Wide Web. This paper gives a brief introduction to the field, highlighting some of the main current and future research issues and application areas. It attempts to evaluate the current and potential role of Computational Logic in this and suggests some of the problems where logic-based techniques could be used.

ACLP: Integrating Abduction and Constraint Solving

Mar 10, 2000

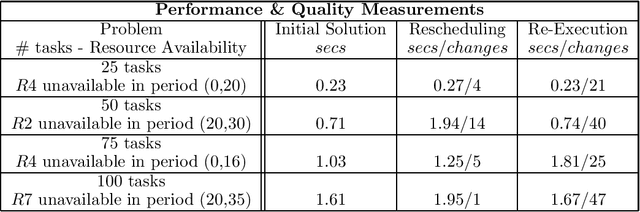

Abstract:ACLP is a system which combines abductive reasoning and constraint solving by integrating the frameworks of Abductive Logic Programming (ALP) and Constraint Logic Programming (CLP). It forms a general high-level knowledge representation environment for abductive problems in Artificial Intelligence and other areas. In ACLP, the task of abduction is supported and enhanced by its non-trivial integration with constraint solving facilitating its application to complex problems. The ACLP system is currently implemented on top of the CLP language of ECLiPSe as a meta-interpreter exploiting its underlying constraint solver for finite domains. It has been applied to the problems of planning and scheduling in order to test its computational effectiveness compared with the direct use of the (lower level) constraint solving framework of CLP on which it is built. These experiments provide evidence that the abductive framework of ACLP does not compromise significantly the computational efficiency of the solutions. Other experiments show the natural ability of ACLP to accommodate easily and in a robust way new or changing requirements of the original problem.

E-RES: A System for Reasoning about Actions, Events and Observations

Mar 09, 2000

Abstract:E-RES is a system that implements the Language E, a logic for reasoning about narratives of action occurrences and observations. E's semantics is model-theoretic, but this implementation is based on a sound and complete reformulation of E in terms of argumentation, and uses general computational techniques of argumentation frameworks. The system derives sceptical non-monotonic consequences of a given reformulated theory which exactly correspond to consequences entailed by E's model-theory. The computation relies on a complementary ability of the system to derive credulous non-monotonic consequences together with a set of supporting assumptions which is sufficient for the (credulous) conclusion to hold. E-RES allows theories to contain general action laws, statements about action occurrences, observations and statements of ramifications (or universal laws). It is able to derive consequences both forward and backward in time. This paper gives a short overview of the theoretical basis of E-RES and illustrates its use on a variety of examples. Currently, E-RES is being extended so that the system can be used for planning.
