Antonio Vitale

On the Impact of Code Comments for Automated Bug-Fixing: An Empirical Study

Jan 30, 2026

Abstract: Large Language Models (LLMs) are increasingly relevant in Software Engineering research and practice, with Automated Bug Fixing (ABF) being one of their key applications. ABF involves transforming a buggy method into its fixed equivalent. A common preprocessing step in ABF is to remove comments from code prior to training. However, we hypothesize that comments may play a critical role in fixing certain types of bugs by providing valuable design and implementation insights. In this study, we investigate how the presence or absence of comments, both during training and at inference time, impacts the bug-fixing capabilities of LLMs. We conduct an empirical evaluation comparing two model families, each evaluated under all combinations of training and inference conditions (with and without comments), thereby revisiting the common practice of removing comments during training. To address the limited availability of comments in state-of-the-art datasets, we use an LLM to automatically generate comments for methods lacking them. Our findings show that comments improve ABF accuracy by up to threefold when present in both phases, while training with comments does not degrade performance when instances lack them. Additionally, an interpretability analysis shows that comments detailing method implementation are particularly effective in helping LLMs fix bugs accurately.

Toward Explaining Large Language Models in Software Engineering Tasks

Dec 23, 2025

Abstract: Recent progress in Large Language Models (LLMs) has substantially advanced the automation of software engineering (SE) tasks, enabling complex activities such as code generation and code summarization. However, the black-box nature of LLMs remains a major barrier to their adoption in high-stakes and safety-critical domains, where explainability and transparency are vital for trust, accountability, and effective human supervision. Despite increasing interest in explainable AI for software engineering, existing methods lack domain-specific explanations aligned with how practitioners reason about SE artifacts. To address this gap, we introduce FeatureSHAP, the first fully automated, model-agnostic explainability framework tailored to software engineering tasks. Based on Shapley values, FeatureSHAP attributes model outputs to high-level input features through systematic input perturbation and task-specific similarity comparisons, while remaining compatible with both open-source and proprietary LLMs. We evaluate FeatureSHAP on two bi-modal SE tasks: code generation and code summarization. The results show that FeatureSHAP assigns less importance to irrelevant input features and produces explanations with higher fidelity than baseline methods. A practitioner survey involving 37 participants shows that FeatureSHAP helps practitioners better interpret model outputs and make more informed decisions. Collectively, FeatureSHAP represents a meaningful step toward practical explainable AI in software engineering. FeatureSHAP is available at https://github.com/deviserlab/FeatureSHAP.
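The general idea of Shapley-based attribution by input perturbation, as mentioned in the abstract above, can be illustrated with a minimal sketch. This is not the FeatureSHAP implementation or its API: `toy_model`, `jaccard`, the feature names, and the exact enumeration over subsets are all assumptions chosen for illustration, with a deterministic stand-in replacing a real LLM call.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, List


def shapley_attributions(
    features: List[str],
    value: Callable[[FrozenSet[str]], float],
) -> Dict[str, float]:
    """Exact Shapley values: each feature's weighted average marginal
    contribution to `value` over all subsets of the other features."""
    n = len(features)
    phi: Dict[str, float] = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):
            # Standard Shapley weight for a coalition of size k.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


def toy_model(kept: FrozenSet[str]) -> str:
    """Hypothetical stand-in for an LLM: the output depends only on
    which high-level input features were kept in the prompt."""
    parts = []
    if "signature" in kept:
        parts.append("def add(a, b):")
    if "docstring" in kept:
        parts.append("return a + b")
    # "license_header" is deliberately irrelevant to the output.
    return " ".join(parts)


def jaccard(a: str, b: str) -> float:
    """Token-level Jaccard similarity (an assumed similarity measure)."""
    ta, tb = set(a.split()), set(b.split())
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


FEATURES = ["signature", "docstring", "license_header"]
REFERENCE = toy_model(frozenset(FEATURES))  # output with the full input


def value(kept: FrozenSet[str]) -> float:
    # Perturb the input by dropping features, then compare with the reference.
    return jaccard(toy_model(kept), REFERENCE)


if __name__ == "__main__":
    for name, score in shapley_attributions(FEATURES, value).items():
        print(f"{name}: {score:.3f}")
```

In this toy setting the irrelevant feature receives zero attribution, and the attributions sum to the similarity gained by going from an empty input to the full input. Exact enumeration is exponential in the number of features, so a practical tool would need sampling or another approximation.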

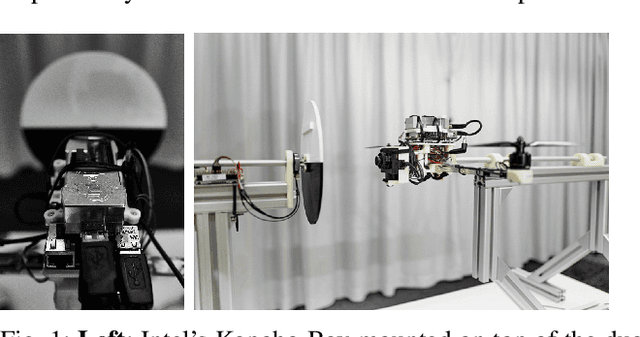

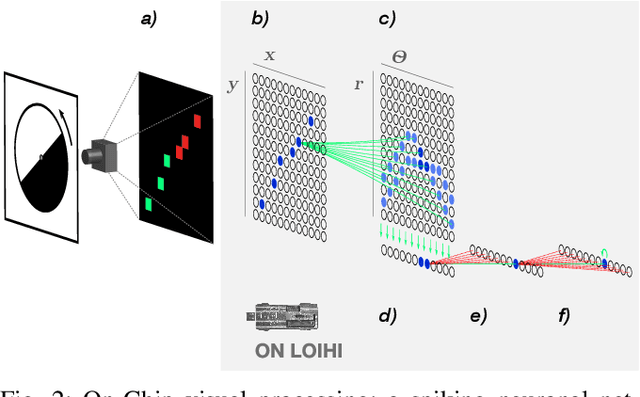

Event-driven Vision and Control for UAVs on a Neuromorphic Chip

Aug 19, 2021

Abstract: Event-based vision sensors achieve up to three orders of magnitude better speed vs. power consumption trade-off in high-speed control of UAVs compared to conventional image sensors. Event-based cameras produce a sparse stream of events that can be processed more efficiently and with lower latency than images, enabling ultra-fast vision-driven control. Here, we explore how an event-based vision algorithm can be implemented as a spiking neuronal network (SNN) on a neuromorphic chip and used in a drone controller. We show how seamless integration of event-based perception on chip leads to even faster control rates and lower latency. In addition, we demonstrate how online adaptation of the SNN controller can be realised using on-chip learning. Our spiking neuronal network on chip is the first example of a neuromorphic vision-based controller solving a high-speed UAV control task. The excellent scalability of processing in neuromorphic hardware opens the possibility of solving more challenging visual tasks in the future and integrating visual perception in fast control loops.
