Anne-Laure Ligozat

ENSIIE, LISN, STL

How Green Can AI Be? A Study of Trends in Machine Learning Environmental Impacts

Dec 23, 2024

Abstract: The compute requirements associated with training Artificial Intelligence (AI) models have increased exponentially over time. Optimisation strategies aim to reduce the energy consumption and environmental impacts associated with AI, possibly shifting impacts from the use phase to the manufacturing phase in the life-cycle of hardware. This paper investigates the evolution of the production impacts of individual graphics cards and of the environmental impacts associated with training Machine Learning (ML) models over time. We collect information on graphics cards used to train ML models and released between 2013 and 2023. We assess the environmental impacts associated with the production of each card to visualize the trends over the same period. Then, using information on notable AI systems from the Epoch AI dataset, we assess the environmental impacts associated with training each system. The environmental impacts of graphics card production have increased continuously. The energy consumption and environmental impacts associated with training models have increased exponentially, even when considering reduction strategies such as location shifting to places with less carbon-intensive electricity mixes. These results suggest that current impact reduction strategies cannot curb the growth in the environmental impacts of AI. This is consistent with a rebound effect, where efficiency gains fuel the creation of even larger models, thereby cancelling the potential impact reduction. Furthermore, these results highlight the importance of considering the impacts of hardware over the entire life-cycle, rather than the usage phase alone, in order to avoid impact shifting. Reducing the environmental impact of AI requires reducing AI activities in addition to increasing efficiency.

BLOOM: A 176B-Parameter Open-Access Multilingual Language Model

Nov 09, 2022

Abstract: Large language models (LLMs) have been shown to be able to perform new tasks based on a few demonstrations or natural language instructions. While these capabilities have led to widespread adoption, most LLMs are developed by resource-rich organizations and are frequently kept from the public. As a step towards democratizing this powerful technology, we present BLOOM, a 176B-parameter open-access language model designed and built thanks to a collaboration of hundreds of researchers. BLOOM is a decoder-only Transformer language model that was trained on the ROOTS corpus, a dataset comprising hundreds of sources in 46 natural and 13 programming languages (59 in total). We find that BLOOM achieves competitive performance on a wide variety of benchmarks, with stronger results after undergoing multitask prompted finetuning. To facilitate future research and applications using LLMs, we publicly release our models and code under the Responsible AI License.

Estimating the Carbon Footprint of BLOOM, a 176B Parameter Language Model

Nov 03, 2022

Abstract: Progress in machine learning (ML) comes with a cost to the environment, given that training ML models requires significant computational resources, energy and materials. In the present article, we aim to quantify the carbon footprint of BLOOM, a 176-billion parameter language model, across its life cycle. We estimate that BLOOM's final training emitted approximately 24.7 tonnes of CO2eq if we consider only the dynamic power consumption, and 50.5 tonnes if we account for all processes ranging from equipment manufacturing to energy-based operational consumption. We also study the energy requirements and carbon emissions of its deployment for inference via an API endpoint receiving user queries in real-time. We conclude with a discussion regarding the difficulty of precisely estimating the carbon footprint of ML models and future research directions that can contribute towards improving carbon emissions reporting.

Unraveling the hidden environmental impacts of AI solutions for environment

Oct 22, 2021

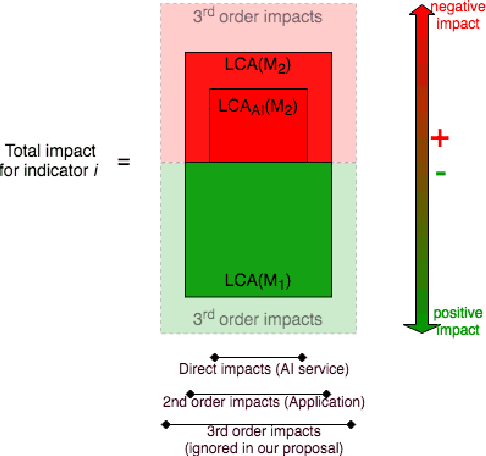

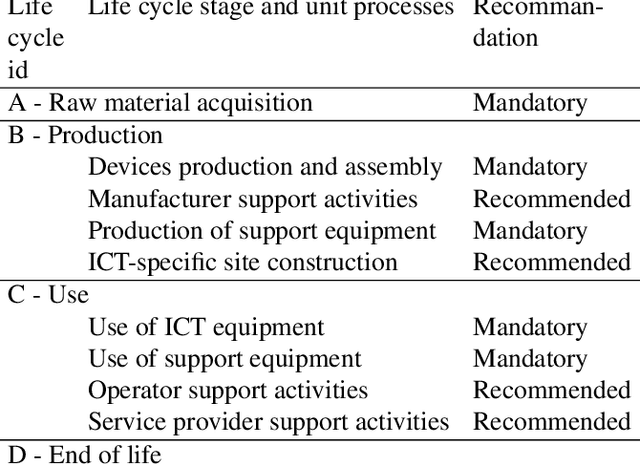

Abstract: In the past ten years, artificial intelligence has made such dramatic progress that it is now seen as a tool of choice for solving environmental issues, first and foremost greenhouse gas (GHG) emissions. At the same time, the deep learning community began to realize that training models with more and more parameters requires a lot of energy and, as a consequence, causes GHG emissions. To our knowledge, the complete environmental impacts of AI methods for the environment ("AI for green"), and not only GHG emissions, have never been questioned directly. In this article, we propose to study the possible negative impacts of "AI for green" by 1) first reviewing the different types of AI impacts, 2) presenting the different methodologies used to assess those impacts, in particular life cycle assessment, and 3) discussing how to assess the environmental usefulness of a general AI service.