Andrew Wilson

Uncertainty Calibration in Bayesian Neural Networks via Distance-Aware Priors

Jul 17, 2022

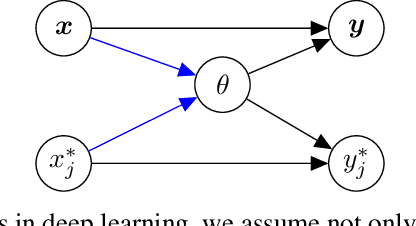

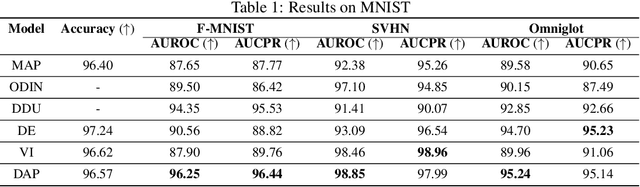

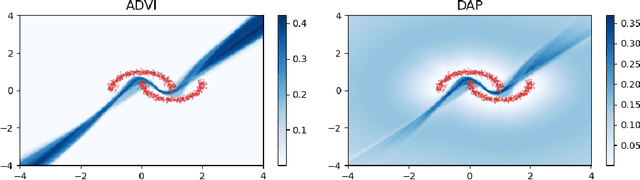

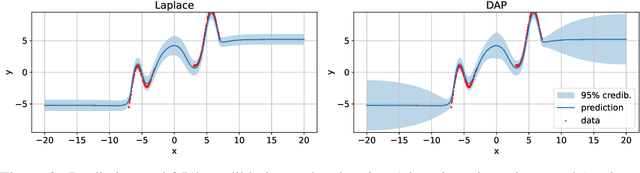

Abstract: As we move away from the data, the predictive uncertainty should increase, since a great variety of explanations are consistent with the little available information. We introduce Distance-Aware Prior (DAP) calibration, a method to correct overconfidence of Bayesian deep learning models outside of the training domain. We define DAPs as prior distributions over the model parameters that depend on the inputs through a measure of their distance from the training set. DAP calibration is agnostic to the posterior inference method, and it can be performed as a post-processing step. We demonstrate its effectiveness against several baselines in a variety of classification and regression problems, including benchmarks designed to test the quality of predictive distributions away from the data.

Scalable Gaussian Processes for Characterizing Multidimensional Change Surfaces

Nov 13, 2015

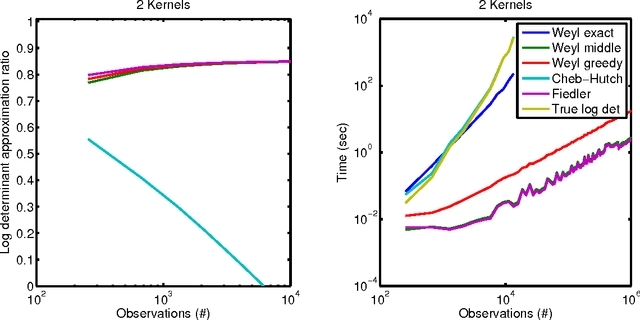

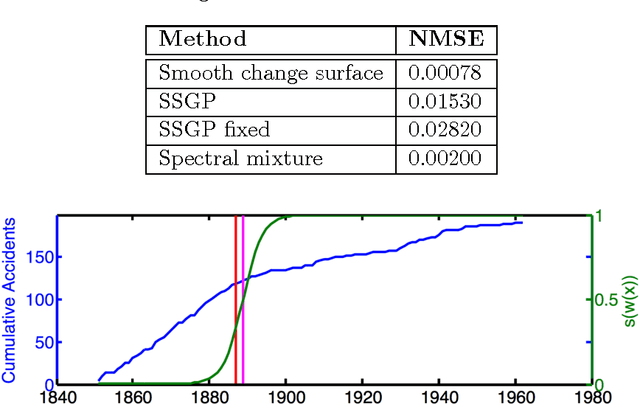

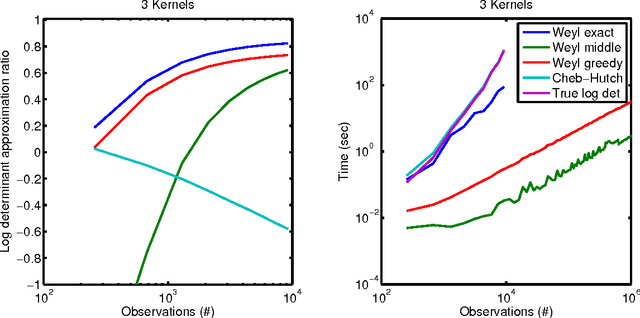

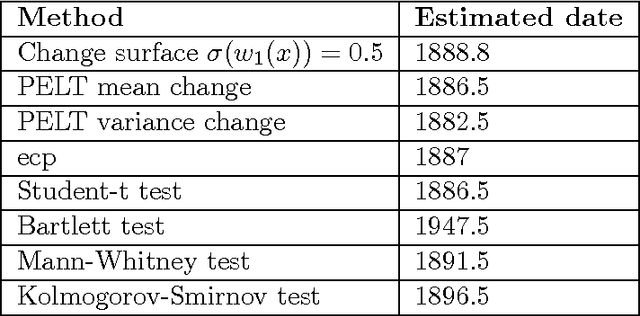

Abstract: We present a scalable Gaussian process model for identifying and characterizing smooth multidimensional changepoints, and automatically learning changes in expressive covariance structure. We use Random Kitchen Sink features to flexibly define a change surface in combination with expressive spectral mixture kernels to capture the complex statistical structure. Finally, through the use of novel methods for additive non-separable kernels, we can scale the model to large datasets. We demonstrate the model on numerical and real-world data, including a large spatio-temporal disease dataset where we identify previously unknown heterogeneous changes in space and time.
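As a rough illustration of the Random Kitchen Sink idea mentioned in the abstract, the sketch below builds random cosine features that approximate an RBF kernel and passes a linear function of them through a sigmoid to get a smooth weight in (0, 1). The function names, the sigmoid link, the RBF spectral density, and all parameter values are assumptions made for this example; the abstract does not specify how the paper parameterizes the change surface.

```python
import numpy as np

def rks_features(X, n_features=200, lengthscale=1.0, seed=0):
    """Random Kitchen Sink (random Fourier) features for an RBF kernel.

    Hypothetical helper: Z @ Z.T approximates exp(-||x - y||^2 / (2 * lengthscale^2)).
    """
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    # Frequencies drawn from the spectral density of the RBF kernel.
    W = rng.normal(scale=1.0 / lengthscale, size=(d, n_features))
    # Random phases, uniform on [0, 2*pi).
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
    return np.sqrt(2.0 / n_features) * np.cos(X @ W + b)

def change_weights(X, theta, **feature_kwargs):
    """Smooth weight in (0, 1) per input (assumed sigmoid link, not from the source)."""
    Z = rks_features(X, **feature_kwargs)
    return 1.0 / (1.0 + np.exp(-(Z @ theta)))

# Usage: weights over a few 2-D inputs with arbitrary illustrative coefficients.
X = np.random.default_rng(1).normal(size=(5, 2))
theta = np.random.default_rng(2).normal(size=200)
w = change_weights(X, theta, n_features=200)
```

Under these assumptions, `w` could blend two covariance regimes, with the learned coefficients `theta` determining where the transition happens in input space.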
