Andrew P Bradley

Fully automatic computer-aided mass detection and segmentation via pseudo-color mammograms and Mask R-CNN

Jun 28, 2019

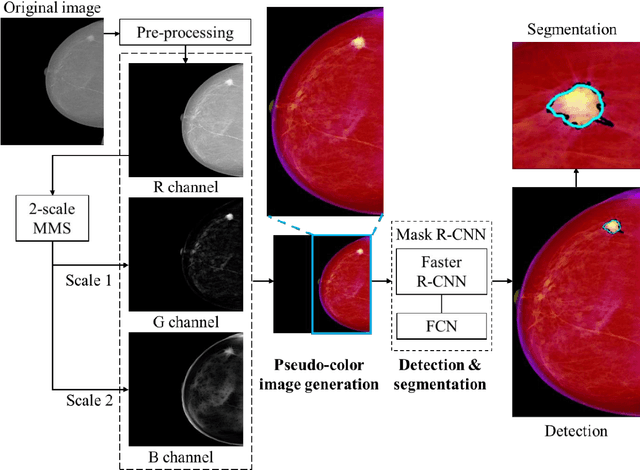

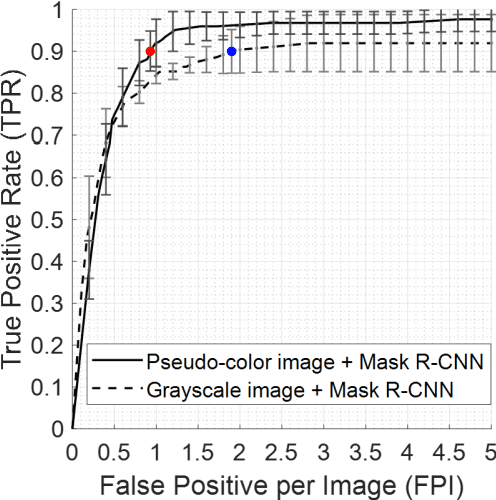

Abstract:

Purpose: To propose pseudo-color mammograms that enhance mammographic masses as part of a fast computer-aided detection (CAD) system that simultaneously detects and segments masses without any user intervention.

Methods: The proposed pseudo-color mammograms, whose three channels contain the original grayscale mammogram and two morphologically enhanced images, provide pseudo-color contrast to the lesions. The morphological enhancement "sifts" out mass-like mammographic patterns to improve detection and segmentation. We construct a fast, fully automated CAD system for simultaneous mass detection and segmentation, using the pseudo-color mammograms as inputs for transfer learning with Mask R-CNN, a state-of-the-art deep learning framework. The source code for this work has been made available online.

Results: Evaluated on the publicly available INbreast mammographic dataset, the method outperforms state-of-the-art methods, achieving an average true positive rate of 0.90 at 0.9 false positives per image and an average Dice similarity index of 0.88 for mass segmentation, while taking 20.4 seconds on average to process each image.

Conclusions: The proposed method provides accurate, fully automatic breast mass detection and segmentation in less than half a minute per image without any user intervention, while outperforming state-of-the-art methods.
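The abstract does not say which morphological operators produce the two enhanced channels. As a rough illustration only, the following sketch substitutes white top-hat transforms (image minus its grey-scale opening, which keeps bright details smaller than the window) at two hypothetical window sizes; the original method may use a different enhancement. It stacks the normalised grayscale image and the two enhanced images into an H×W×3 array of the kind a Mask R-CNN implementation could take as input, and also computes the Dice similarity index used to score segmentations, 2|A∩B| / (|A| + |B|). All function names and the `sizes` values are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _window_reduce(img: np.ndarray, k: int, reduce) -> np.ndarray:
    """Apply a flat k x k min or max filter (k odd), replicating edge pixels."""
    if k % 2 == 0:
        raise ValueError("window size k must be odd")
    padded = np.pad(img, k // 2, mode="edge")
    return reduce(sliding_window_view(padded, (k, k)), axis=(-2, -1))


def white_tophat(img: np.ndarray, k: int) -> np.ndarray:
    """Image minus its grey-scale opening: keeps bright details narrower than k."""
    eroded = _window_reduce(img, k, np.min)     # grey-scale erosion
    opened = _window_reduce(eroded, k, np.max)  # dilation of the erosion = opening
    return img - opened


def _normalise(x: np.ndarray) -> np.ndarray:
    """Rescale to [0, 1]; a constant image maps to all zeros."""
    lo, hi = float(x.min()), float(x.max())
    if hi == lo:
        return np.zeros(x.shape, dtype=float)
    return (x - lo) / (hi - lo)


def pseudo_color_mammogram(gray: np.ndarray, sizes=(15, 45)) -> np.ndarray:
    """Stack [original, enhanced at sizes[0], enhanced at sizes[1]] as H x W x 3.

    The window sizes are hypothetical placeholders, not values from the paper.
    """
    gray = gray.astype(float)
    channels = [_normalise(gray)] + [_normalise(white_tophat(gray, k)) for k in sizes]
    return np.stack(channels, axis=-1)


def dice(pred: np.ndarray, target: np.ndarray) -> float:
    """Dice similarity index 2|A and B| / (|A| + |B|) for binary masks."""
    pred, target = pred.astype(bool), target.astype(bool)
    denom = int(pred.sum()) + int(target.sum())
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * int(np.logical_and(pred, target).sum()) / denom


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mammogram = rng.integers(0, 4096, size=(128, 128))  # synthetic stand-in, not real data
    rgb = pseudo_color_mammogram(mammogram, sizes=(5, 11))
    print(rgb.shape)  # (128, 128, 3)
```

This direct window search costs O(k²) per pixel, so it is slow on full-field mammograms; a library routine such as `scipy.ndimage.white_tophat` would be the practical choice there.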

Producing radiologist-quality reports for interpretable artificial intelligence

Jun 01, 2018

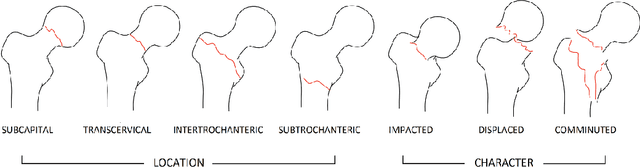

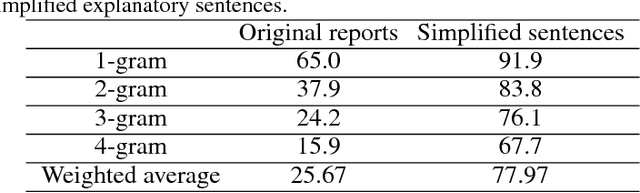

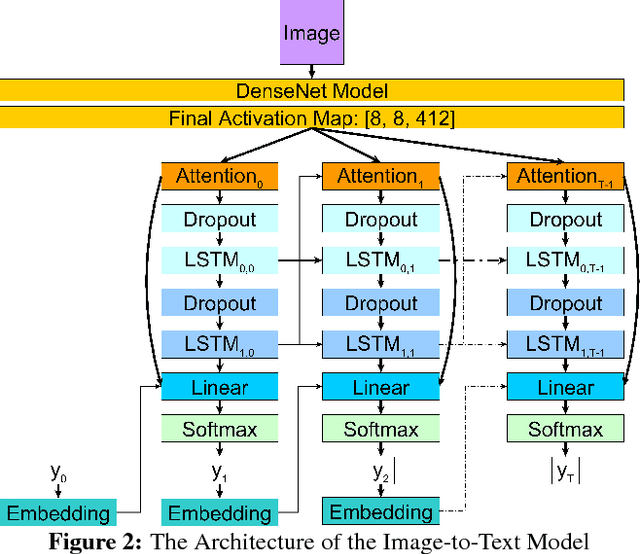

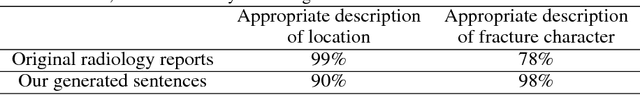

Abstract:

Current approaches to explaining the decisions of deep learning systems for medical tasks have focused on visualising the elements that contributed to each decision. We argue that such approaches are not enough to "open the black box" of medical decision-making systems because they are missing a key component that has been used as a standard communication tool between doctors for centuries: language. We propose a model-agnostic interpretability method that involves training a simple recurrent neural network model to produce descriptive sentences that clarify the decisions of deep learning classifiers. We test our method on the task of detecting hip fractures from frontal pelvic X-rays. This process requires minimal additional labelling, despite producing text that contains elements the original deep learning classification model was not specifically trained to detect. The experimental results show that: 1) the sentences produced by our method consistently contain the desired information, 2) doctors prefer the generated sentences to current tools that create saliency maps, and 3) the combination of visualisations and generated text is better than either alone.
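The abstract describes the approach only at a high level. The following is a minimal sketch, assuming the recurrent network is conditioned solely on a feature vector taken from the unmodified classifier and decodes words greedily; this is one way such a text generator can stay model-agnostic. The vocabulary, layer sizes, Elman-style cell, and the `ToyReportRNN` class are all hypothetical, and the weights are random and untrained, so the emitted words are meaningless: the code only shows the data flow.

```python
import numpy as np

# Hypothetical toy vocabulary; the paper's vocabulary is not given in the abstract.
VOCAB = ["<start>", "<end>", "there", "is", "a", "no", "displaced",
         "fracture", "of", "the", "left", "right", "femoral", "neck"]
START, END = VOCAB.index("<start>"), VOCAB.index("<end>")


class ToyReportRNN:
    """Elman-style RNN that decodes a sentence from a classifier's feature vector.

    Weights are random and untrained; this only illustrates the data flow.
    """

    def __init__(self, feat_dim: int, hidden_dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        v = len(VOCAB)
        self.W_init = rng.normal(0.0, 0.5, (hidden_dim, feat_dim))  # features -> initial state
        self.embed = rng.normal(0.0, 0.5, (v, hidden_dim))          # token embeddings
        self.W_hh = rng.normal(0.0, 0.5, (hidden_dim, hidden_dim))  # recurrent weights
        self.W_xh = rng.normal(0.0, 0.5, (hidden_dim, hidden_dim))  # input weights
        self.W_out = rng.normal(0.0, 0.5, (v, hidden_dim))          # hidden -> vocab logits

    def generate(self, features: np.ndarray, max_len: int = 12) -> list:
        # The decoder sees only the classifier's output features; the classifier
        # itself is never modified, so any classifier could be plugged in.
        h = np.tanh(self.W_init @ features)
        token, words = START, []
        for _ in range(max_len):
            h = np.tanh(self.W_hh @ h + self.W_xh @ self.embed[token])
            logits = self.W_out @ h
            logits[START] = -np.inf         # never emit the start token
            token = int(np.argmax(logits))  # greedy decoding
            if token == END:
                break
            words.append(VOCAB[token])
        return words


if __name__ == "__main__":
    model = ToyReportRNN(feat_dim=8, hidden_dim=16)
    print(" ".join(model.generate(np.ones(8))))
```

In practice the decoder would be trained on sentence annotations for each image, which, per the abstract, requires only minimal additional labelling.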