Andrew O'Brien

Sparse identification of nonlinear dynamics in the presence of library and system uncertainty

Jan 23, 2024

Abstract: The SINDy algorithm has been successfully used to identify the governing equations of dynamical systems from time series data. However, SINDy assumes the user has prior knowledge of the variables in the system and of a function library that can act as a basis for the system. In this paper, we demonstrate on real-world data how the Augmented SINDy algorithm outperforms SINDy in the presence of system variable uncertainty. We then show SINDy can be further augmented to perform robustly when both kinds of uncertainty are present.

Investigating Sindy As a Tool For Causal Discovery In Time Series Signals

Dec 29, 2022

Abstract: The SINDy algorithm has been successfully used to identify the governing equations of dynamical systems from time series data. In this paper, we argue that this makes SINDy a potentially useful tool for causal discovery and that existing tools for causal discovery can be used to dramatically improve the performance of SINDy as a tool for robust sparse modeling and system identification. We then demonstrate empirically that augmenting the SINDy algorithm with tools from causal discovery can provide engineers with a tool for learning causally robust governing equations.

Dictionary Learning with Accumulator Neurons

May 30, 2022

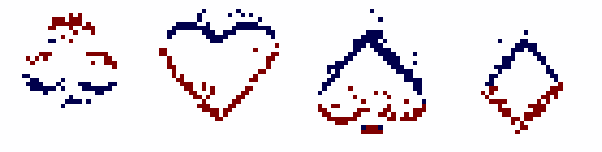

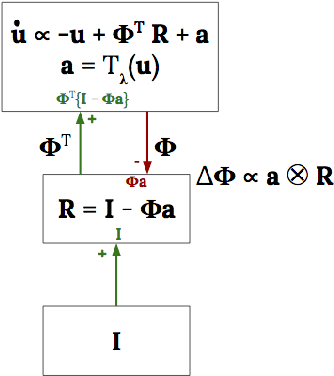

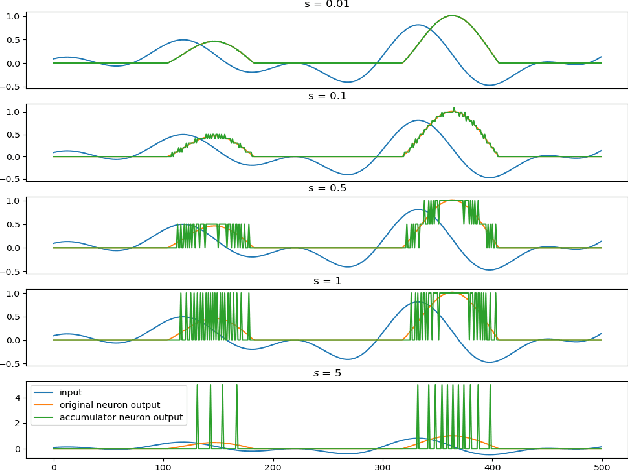

Abstract: The Locally Competitive Algorithm (LCA) uses local competition between non-spiking leaky integrator neurons to infer sparse representations, allowing for potentially real-time execution on massively parallel neuromorphic architectures such as Intel's Loihi processor. Here, we focus on the problem of inferring sparse representations from streaming video using dictionaries of spatiotemporal features optimized in an unsupervised manner for sparse reconstruction. Non-spiking LCA has previously been used to achieve unsupervised learning of spatiotemporal dictionaries composed of convolutional kernels from raw, unlabeled video. We demonstrate how unsupervised dictionary learning with spiking LCA (S-LCA) can be efficiently implemented using accumulator neurons, which combine a conventional leaky integrate-and-fire (LIF) spike generator with an additional state variable that is used to minimize the difference between the integrated input and the spiking output. We demonstrate dictionary learning across a wide range of dynamical regimes, from graded to intermittent spiking, for inferring sparse representations of both static images drawn from the CIFAR database and video frames captured from a DVS camera. On a classification task that requires identification of the suit from a deck of cards being rapidly flipped through as viewed by a DVS camera, we find essentially no degradation in performance as the LCA model used to infer sparse spatiotemporal representations migrates from graded to spiking. We conclude that accumulator neurons are likely to provide a powerful enabling component of future neuromorphic hardware for implementing online unsupervised learning of spatiotemporal dictionaries optimized for sparse reconstruction of streaming video from event-based DVS cameras.

Multi-Agent Algorithmic Recourse

Oct 01, 2021

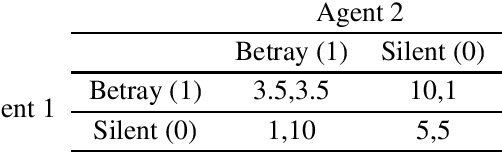

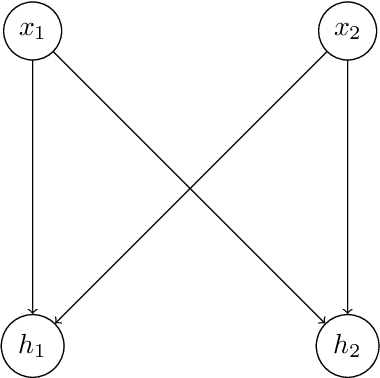

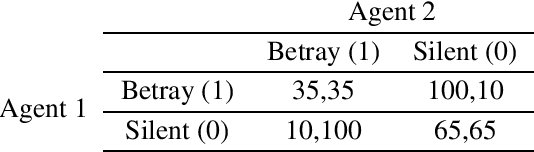

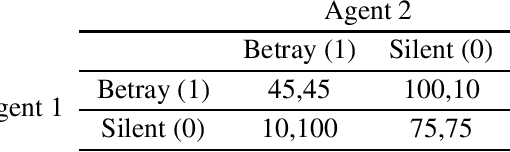

Abstract: The recent adoption of machine learning as a tool in real-world decision making has spurred interest in understanding how these decisions are being made. Counterfactual explanations are a popular interpretable machine learning technique that aims to understand how a machine learning model would behave if given alternative inputs. Many explanations attempt to go further and recommend actions an individual could take to obtain a more desirable output from the model. These recommendations are known as algorithmic recourse. Past work has largely focused on the effect algorithmic recourse has on a single agent. In this work, we show that when the assumption of a single-agent environment is relaxed, current approaches to algorithmic recourse fail to guarantee certain ethically desirable properties. Instead, we propose a new game-theory-inspired framework for providing algorithmic recourse in a multi-agent environment that does guarantee these properties.

The Interpretable Dictionary in Sparse Coding

Nov 24, 2020

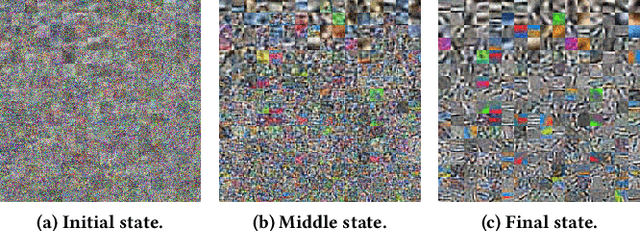

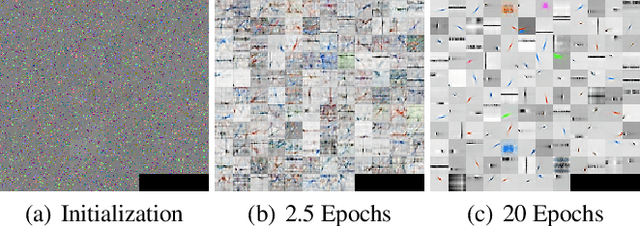

Abstract: Artificial neural networks (ANNs), specifically deep learning networks, have often been labeled as black boxes because the internal representation of the data is not easily interpretable. In our work, we illustrate that an ANN trained using sparse coding under specific sparsity constraints yields a more interpretable model than the standard deep learning model. The dictionary learned by sparse coding can be more easily understood, and the activations of its elements create a selective feature output. We compare and contrast our sparse coding model with an equivalent feed-forward convolutional autoencoder trained on the same data. Our results show both qualitative and quantitative benefits in the interpretation of the learned sparse coding dictionary as well as the internal activation representations.
