Andrea Bonetti

GENIE-ASI: Generative Instruction and Executable Code for Analog Subcircuit Identification

Aug 26, 2025

Abstract:Analog subcircuit identification is a core task in analog design, essential for simulation, sizing, and layout. Traditional methods often require extensive human expertise, rule-based encoding, or large labeled datasets. To address these challenges, we propose GENIE-ASI, the first training-free, large language model (LLM)-based methodology for analog subcircuit identification. GENIE-ASI operates in two phases: it first uses in-context learning to derive natural language instructions from a few demonstration examples, then translates these into executable Python code to identify subcircuits in unseen SPICE netlists. In addition, to evaluate LLM-based approaches systematically, we introduce a new benchmark composed of operational amplifier (op-amp) netlists that cover a wide range of subcircuit variants. Experimental results on the proposed benchmark show that GENIE-ASI matches rule-based performance on simple structures (F1-score = 1.0), remains competitive on moderate abstractions (F1-score = 0.81), and shows potential even on complex subcircuits (F1-score = 0.31). These findings demonstrate that LLMs can serve as adaptable, general-purpose tools in analog design automation, opening new research directions for foundation model applications in this field.
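To give a sense of what executable code for subcircuit identification over a SPICE netlist can look like, here is a minimal hand-written sketch. It is not the code GENIE-ASI generates. The function names, the flat-netlist assumption, and the simplified current-mirror rule (shared gate, source, and model, with exactly one diode-connected device) are all illustrative assumptions.

```python
from itertools import combinations


def parse_mosfets(netlist: str) -> list[dict]:
    """Extract MOSFET instances from a flat SPICE netlist (assumed format)."""
    devices = []
    for line in netlist.splitlines():
        tokens = line.split()
        # SPICE MOSFET card: Mname drain gate source bulk model [params...]
        if len(tokens) >= 6 and tokens[0][0].upper() == "M":
            name, drain, gate, source, _bulk, model = tokens[:6]
            devices.append(
                {"name": name, "drain": drain, "gate": gate,
                 "source": source, "model": model}
            )
    return devices


def find_diode_connected(devices: list[dict]) -> list[str]:
    """A transistor is diode-connected when its drain and gate share a net."""
    return [d["name"] for d in devices if d["drain"] == d["gate"]]


def find_current_mirrors(devices: list[dict]) -> list[tuple[str, str]]:
    """Simplified rule: two same-type devices sharing gate and source nets,
    exactly one of which is diode-connected."""
    mirrors = []
    for a, b in combinations(devices, 2):
        shared = (a["gate"] == b["gate"] and a["source"] == b["source"]
                  and a["model"] == b["model"])
        a_diode = a["drain"] == a["gate"]
        b_diode = b["drain"] == b["gate"]
        if shared and (a_diode != b_diode):
            mirrors.append((a["name"], b["name"]))
    return mirrors


# Hypothetical example netlist, not taken from the benchmark.
EXAMPLE = """\
* simple NMOS current mirror with a PMOS load
M1 n1 n1 0 0 nmos W=1u L=1u
M2 out n1 0 0 nmos W=1u L=1u
M3 out vb vdd vdd pmos W=2u L=1u
"""

devices = parse_mosfets(EXAMPLE)
diodes = find_diode_connected(devices)
mirrors = find_current_mirrors(devices)
```

On the example netlist, `M1` is the only diode-connected device and `(M1, M2)` is the only pair that satisfies the mirror rule. Real netlists with hierarchy, cascodes, or multi-output mirrors would need richer rules than this sketch covers.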

Locality-aware Surrogates for Gradient-based Black-box Optimization

Jan 31, 2025

Abstract:In physics and engineering, many processes are modeled using non-differentiable black-box simulators, making the optimization of such functions particularly challenging. To address such cases, inspired by the Gradient Theorem, we propose locality-aware surrogate models for active model-based black-box optimization. We first establish a theoretical connection between gradient alignment and the minimization of a Gradient Path Integral Equation (GradPIE) loss, which enforces consistency of the surrogate's gradients in local regions of the design space. Leveraging this theoretical insight, we develop a scalable training algorithm that minimizes the GradPIE loss, enabling both offline and online learning while maintaining computational efficiency. We evaluate our approach on three real-world tasks - spanning automated in silico experiments such as coupled nonlinear oscillators, analog circuits, and optical systems - and demonstrate consistent improvements in optimization efficiency under limited query budgets. Our results offer dependable solutions for both offline and online optimization tasks where reliable gradient estimation is needed.
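For reference, the Gradient Theorem mentioned in the abstract can be stated as follows. The symbols (a differentiable scalar function $f$, a smooth path $\gamma$ from point $a$ to point $b$) are our notation. The exact form of the GradPIE loss is defined in the paper and is not reproduced here.

$$
\int_{\gamma} \nabla f(\mathbf{r}) \cdot d\mathbf{r} = f(b) - f(a)
$$

Read this way, the line integral of a surrogate's gradient between two nearby design points should match the difference of the black-box outputs at those points, which is the kind of local consistency the abstract describes.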

GraCo -- A Graph Composer for Integrated Circuits

Nov 21, 2024

Abstract:Designing integrated circuits involves substantial complexity, which makes it challenging to cover their full range of applications, from custom digital cells to analog circuits. Despite extensive research over the past decades in building versatile and automated frameworks, there remains room to explore more computationally efficient AI-based solutions. This paper introduces the graph composer GraCo, a novel method for synthesizing integrated circuits using reinforcement learning (RL). GraCo learns to construct a graph step-by-step, which is then converted into a netlist and simulated with SPICE. We demonstrate that GraCo is highly configurable, enabling the incorporation of prior design knowledge into the framework. We formalize how this prior knowledge can be utilized and, in particular, show that applying consistency checks enhances the efficiency of the sampling process. To evaluate its performance, we compare GraCo to a random baseline, which is known to perform well for smaller design space problems. We demonstrate that GraCo can discover circuits for tasks such as generating standard cells, including the inverter and the two-input NAND (NAND2) gate. Compared to a random baseline, GraCo requires 5x fewer sampling steps to design an inverter and successfully synthesizes a NAND2 gate that is 2.5x faster.

Schemato -- An LLM for Netlist-to-Schematic Conversion

Nov 21, 2024

Abstract:Machine learning models are advancing circuit design, particularly in analog circuits. They typically generate netlists that lack human interpretability. This is a problem as human designers heavily rely on the interpretability of circuit diagrams or schematics to intuitively understand, troubleshoot, and develop designs. Hence, to integrate domain knowledge effectively, it is crucial to translate ML-generated netlists into interpretable schematics quickly and accurately. We propose Schemato, a large language model (LLM) for netlist-to-schematic conversion. In particular, we consider our approach in two settings: converting netlists to .asc files for LTspice and to LaTeX files for CircuiTikZ schematics. Experiments on our circuit dataset show that Schemato achieves up to a 93% compilation success rate for the netlist-to-LaTeX conversion task, surpassing the 26% rate scored by state-of-the-art LLMs. Furthermore, our experiments show that Schemato generates schematics with a mean structural similarity index measure that is 3x higher than that of the best-performing LLMs, and therefore closer to the reference human design.

A Construction Kit for Efficient Low Power Neural Network Accelerator Designs

Jun 24, 2021

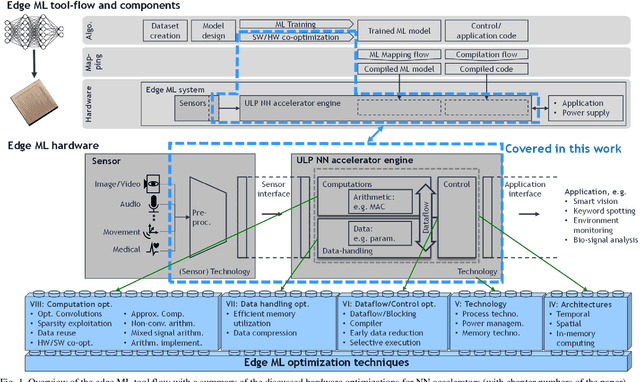

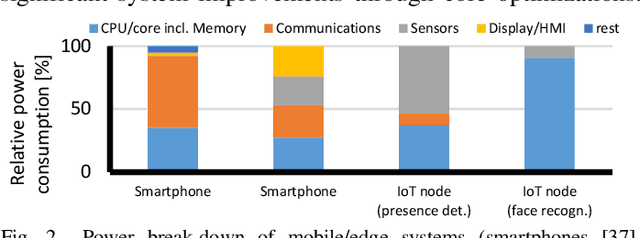

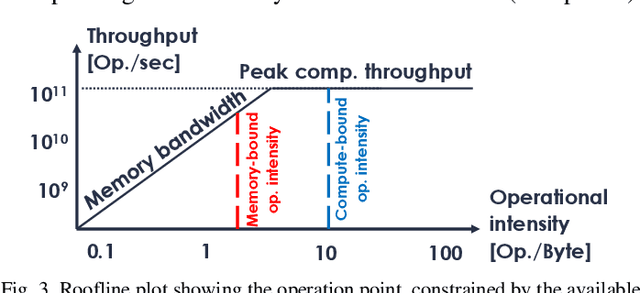

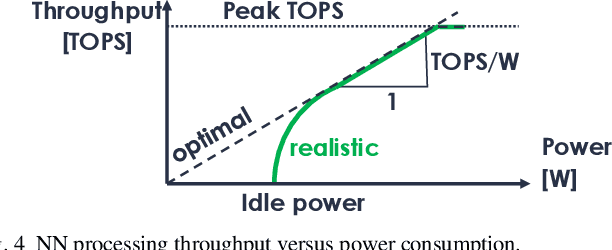

Abstract:Implementing embedded neural network processing at the edge requires efficient hardware acceleration that couples high computational performance with low power consumption. Driven by the rapid evolution of network architectures and their algorithmic features, accelerator designs are constantly updated and improved. To evaluate and compare hardware design choices, designers can refer to a myriad of accelerator implementations in the literature. Surveys provide an overview of these works but are often limited to system-level and benchmark-specific performance metrics, making it difficult to quantitatively compare the individual effect of each utilized optimization technique. This complicates the evaluation of optimizations for new accelerator designs, slowing down research progress. This work provides a survey of neural network accelerator optimization approaches that have been used in recent works and reports their individual effects on edge processing performance. It presents the list of optimizations and their quantitative effects as a construction kit, allowing designers to assess the design choices for each building block separately. Reported optimizations range from up to 10,000x memory savings to 33x energy reductions, providing chip designers an overview of design choices for implementing efficient low power neural network accelerators.
