Anders Arpteg

Feature Space Saturation during Training

Jun 18, 2020

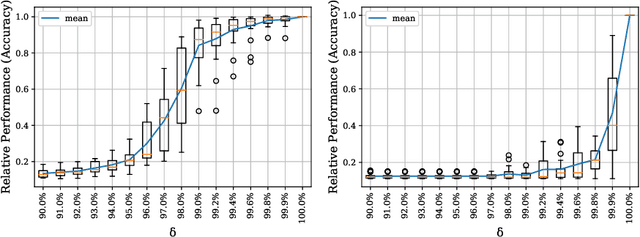

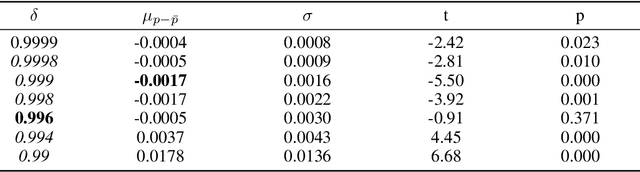

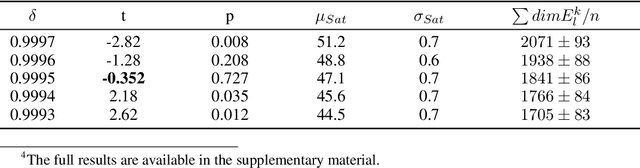

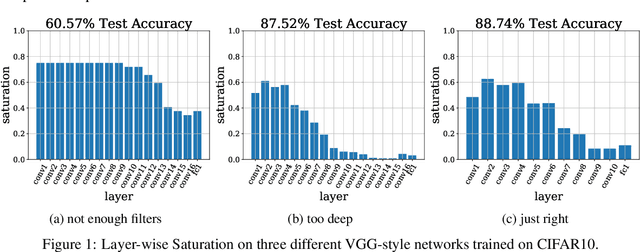

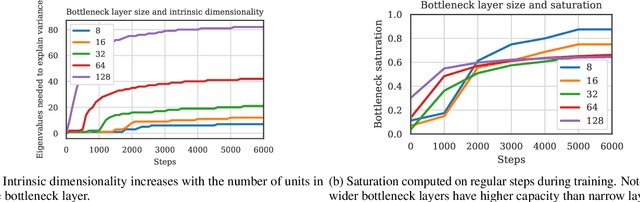

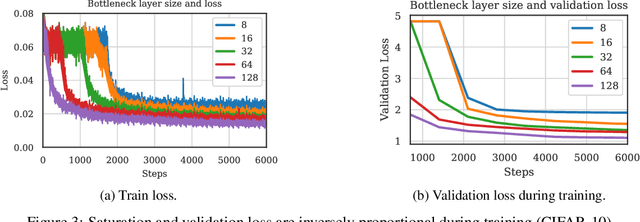

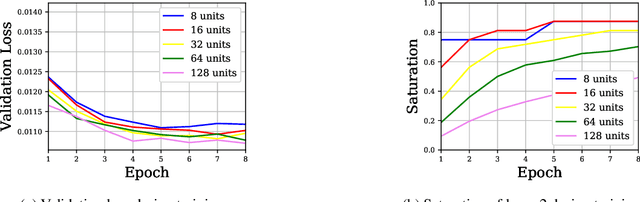

Abstract: We propose layer saturation, a simple, online-computable method for analyzing the information processing in neural networks. First, we show that a layer's output can be restricted to the eigenspace of its variance matrix without performance loss. We propose a computationally lightweight method for approximating the variance matrix during training. From the dimension of its lossless eigenspace we derive layer saturation: the ratio between the eigenspace dimension and layer width. We show that saturation seems to indicate which layers contribute to network performance. We demonstrate how to alter layer saturation in a neural network by changing network depth, filter sizes and input resolution. Furthermore, we show that well-chosen input resolution increases network performance by distributing the inference process more evenly across the network.
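As a rough illustration of what "online-computable" could mean here, the sketch below accumulates running sums over mini-batches of layer outputs and derives a covariance estimate from them at any point during training. The abstract does not spell out the paper's approximation, so the class name `RunningCovariance`, its methods, and the running-sum formula are assumptions made for illustration only.

```python
import numpy as np


class RunningCovariance:
    """Hypothetical helper: covariance of layer outputs from running sums."""

    def __init__(self, width):
        self.n = 0                              # samples seen so far
        self.sum = np.zeros(width)              # running sum of outputs
        self.outer = np.zeros((width, width))   # running sum of outer products

    def update(self, batch):
        # batch has shape (batch_size, width); only the sums are stored,
        # so memory does not grow with the number of batches.
        batch = np.asarray(batch, dtype=np.float64)
        self.n += batch.shape[0]
        self.sum += batch.sum(axis=0)
        self.outer += batch.T @ batch

    def covariance(self):
        # Population covariance: E[x x^T] - E[x] E[x]^T
        mean = self.sum / self.n
        return self.outer / self.n - np.outer(mean, mean)
```

Calling `update` once per forward pass and `covariance` at the end of an epoch would give one matrix per layer, whose eigenvalues could then be inspected. In float64 the result matches a full-batch computation for well-scaled data; a more numerically careful variant (for example a Welford-style update) may be preferable for large activations.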

Perceiving Music Quality with GANs

Jun 11, 2020

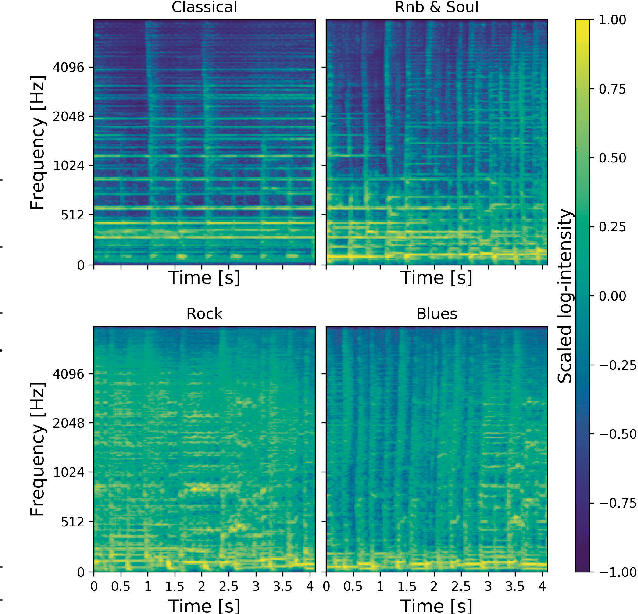

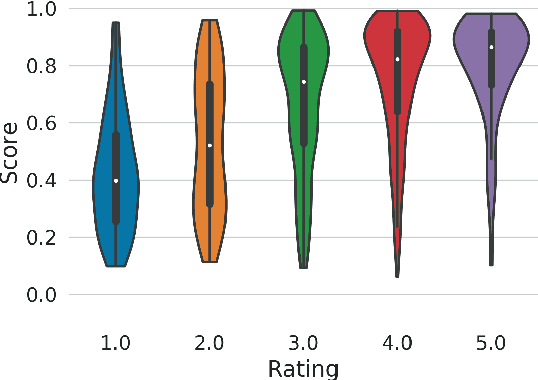

Abstract: Assessing perceptual quality of musical audio signals usually requires a clean reference signal of unaltered content, hindering applications where a reference is unavailable, such as music generation. We propose training a generative adversarial network on a music library, and using its discriminator as a measure of the perceived quality of music. This method is unsupervised, needs no access to degraded material and can be tuned for various domains of music. Finally, the method is shown to have a statistically significant correlation with human ratings of music.

Spectral Analysis of Latent Representations

Jul 19, 2019

Abstract: We propose a metric, Layer Saturation, for analyzing the learned representations of neural network layers. It is defined as the proportion of eigenvalues needed to explain 99% of the variance of the latent representations. Saturation is based on spectral analysis and can be computed efficiently, making live analysis of the representations practical during training. We provide an outlook for future applications of this metric by outlining the behaviour of layer saturation in different neural architectures and problems. We further show that saturation is related to the generalization and predictive performance of neural networks.
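The definition above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function name `layer_saturation`, the use of the full activation matrix (rather than an estimate computed during training), and the choice to normalize by the layer width are assumptions.

```python
import numpy as np


def layer_saturation(activations, threshold=0.99):
    """Fraction of eigen-directions needed to explain `threshold` of the variance.

    `activations` is assumed to have shape (num_samples, layer_width).
    """
    acts = np.asarray(activations, dtype=np.float64)
    width = acts.shape[1]
    cov = np.atleast_2d(np.cov(acts, rowvar=False))
    # eigvalsh returns ascending eigenvalues of a symmetric matrix; reverse them
    # and clip tiny negative values caused by round-off.
    eigvals = np.clip(np.linalg.eigvalsh(cov)[::-1], 0.0, None)
    total = eigvals.sum()
    if total == 0.0:
        return 0.0  # constant activations carry no variance
    explained = np.cumsum(eigvals) / total
    # Smallest k such that the top-k eigenvalues explain >= threshold.
    k = min(int(np.searchsorted(explained, threshold)) + 1, width)
    return k / width
```

Under these assumptions, a layer whose outputs vary in only a few directions yields a value near 0, while a layer that uses all of its dimensions roughly equally yields a value near 1.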

Software Engineering Challenges of Deep Learning

Oct 29, 2018

Abstract: Surprisingly promising results have been achieved by deep learning (DL) systems in recent years. Many of these achievements have been reached in academic settings, or by large technology companies with highly skilled research groups and advanced supporting infrastructure. For companies without large research groups or advanced infrastructure, building high-quality production-ready systems with DL components has proven challenging. There is a clear lack of well-functioning tools and best practices for building DL systems. The goal of this research is to identify the main challenges by applying an interpretive research approach in close collaboration with companies of varying size and type. A set of seven projects has been selected to describe the potential of this new technology and to identify the associated main challenges. A set of 12 main challenges has been identified and categorized into the three areas of development, production, and organizational challenges. Furthermore, a mapping between the challenges and the projects is defined, together with selected motivating descriptions of how and why the challenges apply to specific projects. Compared to other areas such as software engineering or database technologies, it is clear that DL is still rather immature and in need of further work to facilitate development of high-quality systems. The challenges identified in this paper can be used to guide future research by the software engineering and DL communities. Together, we could enable a large number of companies to start taking advantage of the high potential of DL technology.
