Anastasios Tefas

Software Engineering for Self-Adaptive Robotics: A Research Agenda

May 26, 2025

Abstract:Self-adaptive robotic systems are designed to operate autonomously in dynamic and uncertain environments, requiring robust mechanisms to monitor, analyse, and adapt their behaviour in real-time. Unlike traditional robotic software, which follows predefined logic, self-adaptive robots leverage artificial intelligence, machine learning, and model-driven engineering to continuously adjust to changing operational conditions while ensuring reliability, safety, and performance. This paper presents a research agenda for software engineering in self-adaptive robotics, addressing critical challenges across two key dimensions: (1) the development phase, including requirements engineering, software design, co-simulation, and testing methodologies tailored to adaptive robotic systems, and (2) key enabling technologies, such as digital twins, model-driven engineering, and AI-driven adaptation, which facilitate runtime monitoring, fault detection, and automated decision-making. We discuss open research challenges, including verifying adaptive behaviours under uncertainty, balancing trade-offs between adaptability, performance, and safety, and integrating self-adaptation frameworks like MAPE-K. By providing a structured roadmap, this work aims to advance the software engineering foundations for self-adaptive robotic systems, ensuring they remain trustworthy, efficient, and capable of handling real-world complexities.
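The MAPE-K framework named in the abstract above organises adaptation as a Monitor, Analyse, Plan, Execute loop over shared Knowledge. The following is only a minimal illustrative sketch of that loop, not the paper's design: the battery signal, the threshold, and the action names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Knowledge:
    """Shared knowledge base (hypothetical contents)."""
    battery_threshold: float = 0.2
    history: list = field(default_factory=list)


def monitor(sensors):
    # Monitor: take a snapshot of the raw sensor readings.
    return dict(sensors)


def analyse(symptoms, knowledge):
    # Analyse: record the snapshot and decide whether adaptation is needed.
    knowledge.history.append(symptoms)
    return symptoms["battery"] < knowledge.battery_threshold


def plan(needs_adaptation):
    # Plan: choose adaptation actions (hypothetical action names).
    return ["reduce_speed", "return_to_dock"] if needs_adaptation else []


def execute(actions, actuator_log):
    # Execute: here the actions are only logged instead of driving hardware.
    actuator_log.extend(actions)


def mape_k_step(sensors, knowledge, actuator_log):
    """Run one Monitor-Analyse-Plan-Execute iteration."""
    symptoms = monitor(sensors)
    needs_adaptation = analyse(symptoms, knowledge)
    actions = plan(needs_adaptation)
    execute(actions, actuator_log)
    return actions
```

Calling `mape_k_step({"battery": 0.1}, Knowledge(), [])` in this sketch would select both hypothetical actions, while a reading above the threshold selects none.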

A 262 TOPS Hyperdimensional Photonic AI Accelerator powered by a Si3N4 microcomb laser

Mar 05, 2025

Abstract:The ever-increasing volume of data has necessitated a new computing paradigm, embodied through Artificial Intelligence (AI) and Large Language Models (LLMs). Digital electronic AI computing systems, however, are gradually reaching their physical plateaus, stimulating extensive research towards next-generation AI accelerators. Photonic Neural Networks (PNNs), with their unique ability to capitalize on the interplay of multiple physical dimensions including time, wavelength, and space, have been brought forward with a credible promise for boosting computational power and energy efficiency in AI processors. In this article, we experimentally demonstrate a novel multidimensional arrayed waveguide grating router (AWGR)-based photonic AI accelerator that can execute tensor multiplications at a record-high total computational power of 262 TOPS, offering a ~24x improvement over the existing waveguide-based optical accelerators. It consists of a 16x16 AWGR that exploits time-, wavelength-, and space-division multiplexing (T-WSDM) for weight and input encoding together with an integrated Si3N4-based frequency comb for multi-wavelength generation. The photonic AI accelerator has been experimentally validated in both Fully-Connected (FC) and Convolutional Neural Network (CNN) models, with the FC and CNN being trained for DDoS attack identification and MNIST classification, respectively. The experimental inference at 32 Gbaud achieved a Cohen's kappa score of 0.867 for DDoS detection and an accuracy of 92.14% for MNIST classification, closely matching the software performance.
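For readers unfamiliar with the Cohen's kappa score reported above: it corrects raw agreement p_o for the agreement p_e expected by chance, as (p_o - p_e) / (1 - p_e). The sketch below shows the standard definition on plain label lists; it is not the authors' evaluation code, and the function name and the NaN convention for the degenerate case are assumptions.

```python
from collections import Counter


def cohens_kappa(y_true, y_pred):
    """Cohen's kappa between two equally long label sequences."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    # Observed agreement: fraction of positions where the labels match.
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n
    # Chance agreement: product of the marginal class frequencies, summed over classes.
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    p_e = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    if p_e == 1.0:
        # Both sequences use a single identical class; kappa is undefined here.
        return float("nan")
    return (p_o - p_e) / (1.0 - p_e)
```

Perfect agreement gives a kappa of 1, while agreement no better than chance gives 0, which is why kappa is often preferred over accuracy on imbalanced detection tasks.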

Large Models in Dialogue for Active Perception and Anomaly Detection

Jan 27, 2025

Abstract:Autonomous aerial monitoring is an important task aimed at gathering information from areas that may not be easily accessible by humans. At the same time, this task often requires recognizing anomalies from a significant distance, or anomalies that have not been encountered before. In this paper, we propose a novel framework that leverages the advanced capabilities provided by Large Language Models (LLMs) to actively collect information and perform anomaly detection in novel scenes. To this end, we propose an LLM-based model dialogue approach, in which two deep learning models engage in a dialogue to actively control a drone to increase perception and anomaly detection accuracy. We conduct our experiments in a high-fidelity simulation environment where an LLM is provided with a predetermined set of natural language movement commands mapped into executable code functions. Additionally, we deploy a multimodal Visual Question Answering (VQA) model charged with the task of visual question answering and captioning. By engaging the two models in conversation, the LLM asks exploratory questions while simultaneously flying a drone into different parts of the scene, providing a novel way to implement active perception. By leveraging the LLM's reasoning ability, we output an improved detailed description of the scene going beyond existing static perception approaches. In addition to information gathering, our approach is utilized for anomaly detection and our results demonstrate the proposed method's effectiveness in informing and alerting about potential hazards.

Using Part-based Representations for Explainable Deep Reinforcement Learning

Aug 22, 2024

Abstract:Utilizing deep learning models to learn part-based representations holds significant potential for interpretable-by-design approaches, as these models incorporate latent causes obtained from feature representations through simple addition. However, training a part-based learning model presents challenges, particularly in enforcing non-negative constraints on the model's parameters, which can result in training difficulties such as instability and convergence issues. Moreover, applying such approaches in Deep Reinforcement Learning (RL) is even more demanding due to the inherent instabilities that impact many optimization methods. In this paper, we propose a non-negative training approach for actor models in RL, enabling the extraction of part-based representations that enhance interpretability while adhering to non-negative constraints. To this end, we employ a non-negative initialization technique, as well as a modified sign-preserving training method, which can ensure better gradient flow compared to existing approaches. We demonstrate the effectiveness of the proposed approach using the well-known Cartpole benchmark.

Deep Active Perception for Object Detection using Navigation Proposals

Dec 15, 2023

Abstract:Deep Learning (DL) has brought significant advances to robotics vision tasks. However, most existing DL methods have a major shortcoming: they rely on a static inference paradigm inherent in traditional computer vision pipelines. On the other hand, recent studies have found that active perception improves the perception abilities of various models by going beyond these static paradigms. Despite the significant potential of active perception, it poses several challenges, primarily involving significant changes in training pipelines for deep learning models. To overcome these limitations, in this work, we propose a generic supervised active perception pipeline for object detection that can be trained using existing off-the-shelf object detectors, while also leveraging advances in simulation environments. To this end, the proposed method employs an additional neural network architecture that estimates better viewpoints in cases where the object detector confidence is insufficient. The proposed method was evaluated on synthetic datasets, constructed within the Webots robotics simulator, showcasing its effectiveness in two object detection cases.

Non-negative isomorphic neural networks for photonic neuromorphic accelerators

Oct 02, 2023

Abstract:Neuromorphic photonic accelerators are becoming increasingly popular, since they can significantly improve computation speed and energy efficiency, leading to femtojoule per MAC efficiency. However, deploying existing DL models on such platforms is not trivial, since a great range of photonic neural network architectures relies on incoherent setups and power addition operational schemes that cannot natively represent negative quantities. This results in additional hardware complexity that increases cost and reduces energy efficiency. To overcome this, we can train non-negative neural networks and potentially exploit the full range of incoherent neuromorphic photonic capabilities. However, existing approaches cannot achieve the same level of accuracy as their regular counterparts, due to training difficulties, as recent evidence also suggests. To this end, we introduce a methodology to obtain the non-negative isomorphic equivalents of regular neural networks that meet the requirements of neuromorphic hardware, overcoming the aforementioned limitations. Furthermore, we also introduce a sign-preserving optimization approach that enables training of such isomorphic networks in a non-negative manner.

Multiplicative update rules for accelerating deep learning training and increasing robustness

Jul 14, 2023

Abstract:Even now, when Deep Learning (DL) has achieved state-of-the-art performance in a wide range of research domains, accelerating training and building robust DL models remains a challenging task. To this end, generations of researchers have sought to develop robust methods for training DL architectures that are less sensitive to weight distributions, model architectures and loss landscapes. However, such methods are limited to adaptive learning rate optimizers, initialization schemes, and gradient clipping, without investigating the fundamental parameter update rule. Although multiplicative updates have contributed significantly to the early development of machine learning and hold strong theoretical claims, to the best of our knowledge, this is the first work that investigates them in the context of DL training acceleration and robustness. In this work, we propose an optimization framework that fits a wide range of optimization algorithms and enables one to apply alternative update rules. To this end, we propose a novel multiplicative update rule and extend its capabilities by combining it with a traditional additive update term, under a novel hybrid update method. We claim that the proposed framework accelerates training, while leading to more robust models in contrast to the traditionally used additive update rule, and we experimentally demonstrate its effectiveness on a wide range of tasks and optimization methods. These tasks range from convex and non-convex optimization to difficult image classification benchmarks, using a wide range of traditionally used optimization methods and Deep Neural Network (DNN) architectures.

Deep Learning for Energy Time-Series Analysis and Forecasting

Jun 29, 2023

Abstract:Energy time-series analysis describes the process of analyzing past energy observations and possibly external factors so as to predict the future. Different tasks are involved in the general field of energy time-series analysis and forecasting, with electric load demand forecasting, personalized energy consumption forecasting, as well as renewable energy generation forecasting being among the most common ones. Following the exceptional performance of Deep Learning (DL) in a broad area of vision tasks, DL models have successfully been utilized in time-series forecasting tasks. This paper aims to provide insight into various DL methods geared towards improving the performance in energy time-series forecasting tasks, with special emphasis on the Greek energy market, and equip the reader with the necessary knowledge to apply these methods in practice.

Variational Voxel Pseudo Image Tracking

Feb 12, 2023

Abstract:Uncertainty estimation is an important task for critical problems, such as robotics and autonomous driving, because it allows creating statistically better perception models and signaling the model's certainty in its predictions to the decision method or a human supervisor. In this paper, we propose a Variational Neural Network-based version of a Voxel Pseudo Image Tracking (VPIT) method for 3D Single Object Tracking. The Variational Feature Generation Network of the proposed Variational VPIT computes features for target and search regions and the corresponding uncertainties, which are later combined using an uncertainty-aware cross-correlation module in one of two ways: by computing the similarity between the corresponding uncertainties and adding it to the regular cross-correlation values, or by penalizing the uncertain feature channels to increase the influence of the certain features. In experiments, we show that both methods improve tracking performance, while penalization of uncertain features provides the best uncertainty quality.

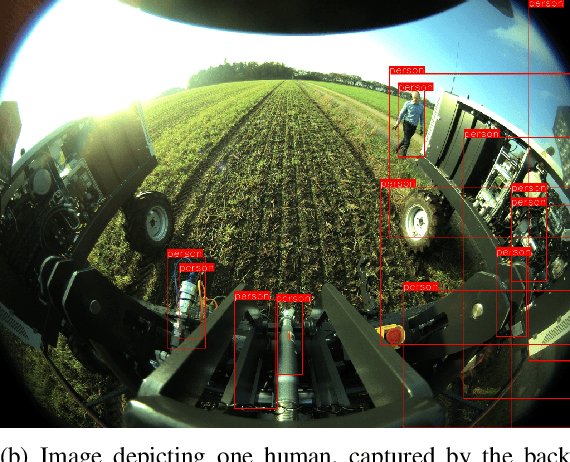

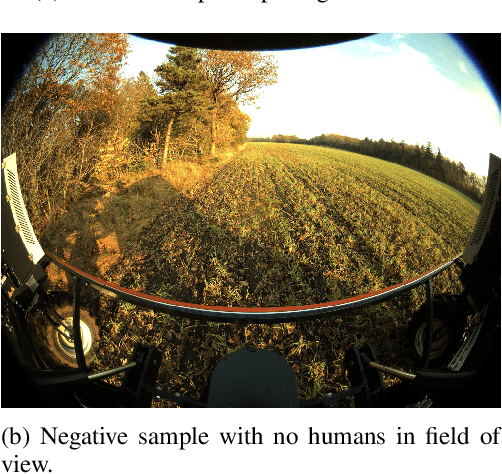

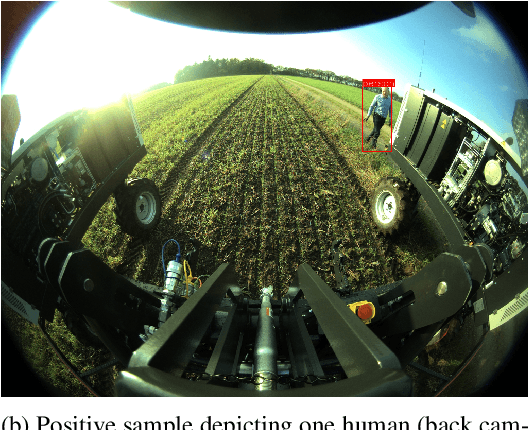

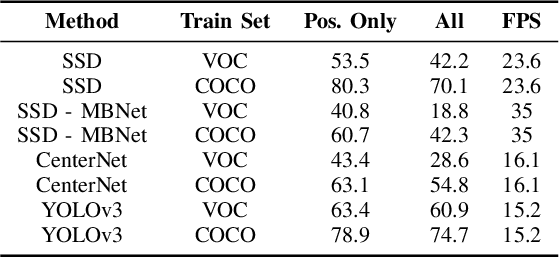

A Novel Dataset for Evaluating and Alleviating Domain Shift for Human Detection in Agricultural Fields

Sep 27, 2022

Abstract:In this paper we evaluate the impact of domain shift on human detection models trained on well-known object detection datasets when deployed on data outside the distribution of the training set, and propose methods to alleviate such phenomena based on the available annotations from the target domain. Specifically, we introduce the OpenDR Humans in Field dataset, collected in the context of agricultural robotics applications, using the Robotti platform, allowing for quantitatively measuring the impact of domain shift in such applications. Furthermore, we examine the importance of manual annotation by evaluating three distinct scenarios concerning the training data: a) only negative samples, i.e., no depicted humans, b) only positive samples, i.e., only images which contain humans, and c) both negative and positive samples. Our results indicate that good performance can be achieved even when using only negative samples, if additional consideration is given to the training process. We also find that positive samples increase performance, especially in terms of better localization. The dataset is publicly available for download at https://github.com/opendr-eu/datasets.
