Anand Gokhale

Proximal Gradient Dynamics: Monotonicity, Exponential Convergence, and Applications

Sep 16, 2024

Abstract: In this letter, we study the proximal gradient dynamics. This recently proposed continuous-time dynamics solves optimization problems whose cost functions are separable into a nonsmooth convex component and a smooth component. First, we show that the cost function decreases monotonically along the trajectories of the proximal gradient dynamics. We then introduce a new condition that guarantees exponential convergence of the cost function to its optimal value, and show that this condition implies the proximal Polyak-Łojasiewicz condition. We also show that the proximal Polyak-Łojasiewicz condition guarantees exponential convergence of the cost function. Moreover, we extend these results to time-varying optimization problems, providing bounds for equilibrium tracking. Finally, we discuss applications of these findings, including the LASSO problem, quadratic optimization with polytopic constraints, and certain matrix-based problems.
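To make the monotonicity claim concrete, here is a minimal sketch that integrates a proximal gradient flow with forward Euler on a tiny LASSO problem. The abstract does not write out the dynamics, so the form used here, x' = -x + prox(x - gamma * grad f(x)), is an assumption, as are the matrix `A`, the vector `b`, the weight `lam`, and the step sizes `gamma` and `h`. All function names are illustrative.

```python
# Forward-Euler sketch of a proximal gradient flow on a small LASSO problem:
#   minimize 0.5 * ||A x - b||^2 + lam * ||x||_1
# Assumed dynamics (not spelled out in the abstract):
#   x' = -x + prox_{gamma * g}(x - gamma * grad f(x))

def matvec(A, x):
    """Dense matrix-vector product using plain lists."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def lasso_cost(A, b, lam, x):
    """Smooth least-squares term plus the nonsmooth l1 penalty."""
    r = [ri - bi for ri, bi in zip(matvec(A, x), b)]
    return 0.5 * sum(ri * ri for ri in r) + lam * sum(abs(xi) for xi in x)

def grad_smooth(A, b, x):
    """Gradient of the smooth term: A^T (A x - b)."""
    r = [ri - bi for ri, bi in zip(matvec(A, x), b)]
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(x))]

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1, applied entrywise."""
    return [max(abs(vi) - tau, 0.0) * (1.0 if vi > 0 else -1.0) for vi in v]

def simulate(A, b, lam, gamma, h, steps):
    """Euler steps x <- x + h * (prox(...) - x); records the cost at each step."""
    x = [0.0] * len(A[0])
    costs = [lasso_cost(A, b, lam, x)]
    for _ in range(steps):
        g = grad_smooth(A, b, x)
        p = soft_threshold([xi - gamma * gi for xi, gi in zip(x, g)], gamma * lam)
        x = [xi + h * (pi - xi) for xi, pi in zip(x, p)]
        costs.append(lasso_cost(A, b, lam, x))
    return x, costs

# Hypothetical problem data chosen only for illustration.
A = [[1.0, 0.5, 0.0], [0.0, 1.0, 0.5], [0.5, 0.0, 1.0], [1.0, 1.0, 1.0]]
b = [1.0, -0.5, 0.2, 0.3]
x_final, costs = simulate(A, b, lam=0.1, gamma=0.1, h=0.1, steps=2000)
```

With `h = 1` the Euler step reduces to the classical proximal gradient iteration. For smaller `h` each update is a convex combination of the current point and the proximal step, so for this convex cost and a sufficiently small `gamma` the recorded costs should not increase.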

Contracting Dynamics for Time-Varying Convex Optimization

May 24, 2023

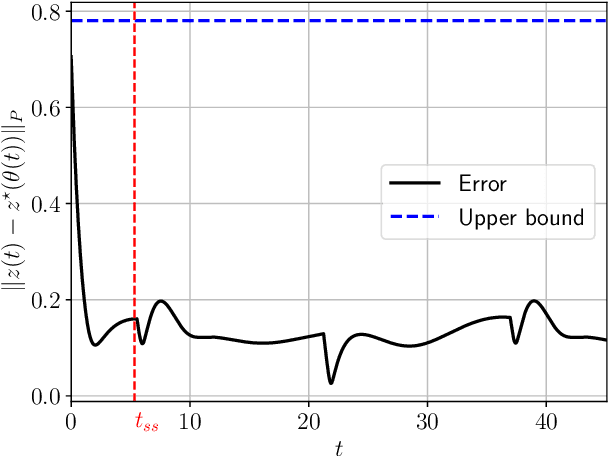

Abstract: In this article, we provide a novel and broadly applicable contraction-theoretic approach to continuous-time time-varying convex optimization. For any parameter-dependent contracting dynamics, we show that the tracking error between any solution trajectory and the equilibrium trajectory is uniformly upper bounded in terms of the contraction rate, the Lipschitz constant of the dynamics with respect to the parameter, and the rate of change of the parameter. To apply this result to time-varying convex optimization problems, we establish the strong infinitesimal contraction of dynamics solving three canonical problems, namely monotone inclusions, linear equality-constrained problems, and composite minimization problems. For each of these problems, we prove the sharpest-known rates of contraction and provide explicit tracking error bounds between solution trajectories and minimizing trajectories. We validate our theoretical results on two numerical examples.
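The flavor of such a tracking bound can be seen on a scalar toy system, which is an assumption of this sketch and not an example from the article: x' = -c (x - theta(t)) with theta(t) = sin(omega t). Its equilibrium trajectory is x*(t) = theta(t), its contraction rate is c, and an elementary comparison argument gives an asymptotic tracking error of at most sup|theta'| / c = omega / c. The names `track`, `c`, and `omega` are illustrative.

```python
import math

def track(c, omega, dt=1e-3, t_end=20.0, x0=3.0):
    """Forward-Euler simulation of x' = -c * (x - theta(t)), theta(t) = sin(omega * t).

    Returns the tracking error |x(t) - theta(t)| at every time step.
    """
    steps = int(t_end / dt)
    x, errors = x0, []
    for k in range(steps):
        theta = math.sin(omega * k * dt)
        errors.append(abs(x - theta))
        x += dt * (-c * (x - theta))
    return errors

c, omega = 2.0, 1.0
errors = track(c, omega)

# Discard the first half of the run so the initial transient has decayed.
tail_error = max(errors[len(errors) // 2:])

# Elementary asymptotic bound for this toy system: sup|theta'| / c.
bound = omega / c
```

Comparing `tail_error` with `bound` shows the qualitative message of the abstract on this toy case: a faster contraction rate or a slower parameter drift tightens the steady-state tracking error.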

Distributed Online Optimization with Byzantine Adversarial Agents

Sep 25, 2021

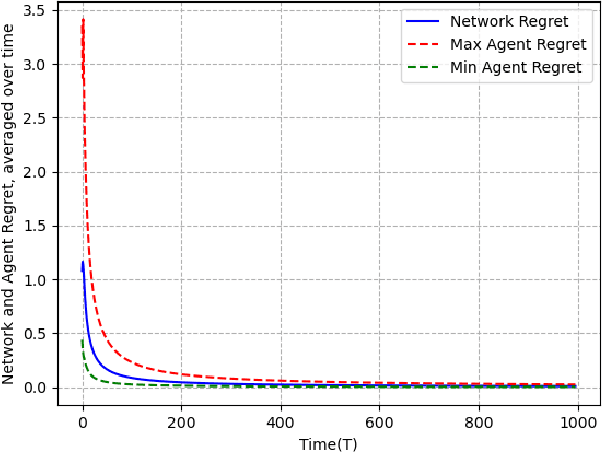

Abstract: We study the problem of unconstrained, discrete-time, online distributed optimization in a multi-agent system where some of the agents do not follow the prescribed update rule, either due to failures or malicious intentions. None of the agents has prior information about the identities of the faulty agents, and each agent can communicate only with its immediate neighbours. At each time step, a Lipschitz, strongly convex cost function is revealed locally to all the agents, and the non-faulty agents update their states using their local information and the information obtained from their neighbours. We measure the performance of the online algorithm by comparing it to its offline version, in which the cost functions are known a priori. The difference between the two is termed the regret. Under sufficient conditions on the graph topology and on the number and location of the adversaries, the regret grows sublinearly. We further conduct numerical experiments to validate our theoretical results.
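The notion of regret used above can be illustrated with a deliberately simplified sketch: a single agent, no adversaries, and scalar quadratic costs f_t(x) = 0.5 (x - a_t)^2. This is not the distributed algorithm of the paper; the cost sequence, the step size 1/t, and the function name `online_gradient_regret` are all assumptions made for illustration.

```python
import math

def online_gradient_regret(T):
    """Regret of online gradient descent on f_t(x) = 0.5 * (x - a_t)^2.

    The targets a_t = sin(t) are a hypothetical, deterministic cost sequence.
    Regret = cost incurred online minus the cost of the best fixed point
    chosen in hindsight (the offline solution).
    """
    targets = [math.sin(t) for t in range(1, T + 1)]
    x, incurred = 0.0, 0.0
    for t, a in enumerate(targets, start=1):
        incurred += 0.5 * (x - a) ** 2   # cost is revealed after committing to x
        x -= (1.0 / t) * (x - a)         # gradient step with step size 1/t
    best = sum(targets) / T              # offline minimizer of the summed costs
    offline = sum(0.5 * (best - a) ** 2 for a in targets)
    return incurred - offline

regrets = {T: online_gradient_regret(T) for T in (100, 1000, 10000)}
average_regrets = {T: r / T for T, r in regrets.items()}
```

Sublinear regret means that `average_regrets[T]` shrinks as `T` grows, so the online decisions become, on average, as good as the best fixed decision in hindsight.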
