Rachel Kalpana Kalaimani

Distributed Federated Learning by Alternating Periods of Training

Jan 05, 2026

Abstract: Federated learning is a privacy-focused approach towards machine learning where models are trained on client devices with locally available data and aggregated at a central server. However, the dependence on a single central server is challenging in the case of a large number of clients and even poses the risk of a single point of failure. To address these critical limitations of scalability and fault-tolerance, we present a distributed approach to federated learning comprising multiple servers with inter-server communication capabilities. While providing a fully decentralized approach, the designed framework retains the core federated learning structure where each server is associated with a disjoint set of clients with server-client communication capabilities. We propose a novel DFL (Distributed Federated Learning) algorithm which uses alternating periods of local training on the client data followed by global training among servers. We show that the DFL algorithm, under a suitable choice of parameters, ensures that all the servers converge to a common model value within a small tolerance of the ideal model, thus exhibiting effective integration of local and global training models. Finally, we illustrate our theoretical claims through numerical simulations.
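The alternating structure described in the abstract can be illustrated with a toy sketch. This is not the paper's DFL algorithm: the scalar quadratic client losses, the plain averaging at each server, the fixed doubly stochastic mixing matrix, and every function and parameter name below are assumptions made purely for illustration.

```python
# Toy sketch (assumed setup, not the paper's algorithm): each client i holds
# a scalar loss 0.5 * (x - a_i)^2, so the ideal global model is the mean of
# all a_i when every server has the same number of clients.

def local_training(model, targets, steps, lr):
    """One local period: each client of a server runs gradient descent
    from the server's model, then the server averages the client models."""
    client_models = []
    for a in targets:
        x = model
        for _ in range(steps):
            x -= lr * (x - a)  # gradient of 0.5 * (x - a)^2 is (x - a)
        client_models.append(x)
    return sum(client_models) / len(client_models)


def global_training(models, weights, steps):
    """One global period: servers repeatedly mix their models with
    neighbours using a doubly stochastic weight matrix (assumed)."""
    for _ in range(steps):
        models = [sum(w * m for w, m in zip(row, models)) for row in weights]
    return models


def dfl_sketch(client_targets, weights, rounds, local_steps, global_steps, lr):
    """Alternate local and global periods, starting from zero models."""
    models = [0.0] * len(client_targets)
    for _ in range(rounds):
        models = [
            local_training(m, targets, local_steps, lr)
            for m, targets in zip(models, client_targets)
        ]
        models = global_training(models, weights, global_steps)
    return models


if __name__ == "__main__":
    # Three servers with two clients each; the mean of all targets is 5.0.
    client_targets = [[1.0, 2.0], [3.0, 4.0], [8.0, 12.0]]
    weights = [
        [0.50, 0.25, 0.25],
        [0.25, 0.50, 0.25],
        [0.25, 0.25, 0.50],
    ]
    print(dfl_sketch(client_targets, weights, rounds=50,
                     local_steps=5, global_steps=5, lr=0.1))
```

In this toy setting the server models end up close to one another and close to 5.0, with a small residual disagreement that shrinks as `global_steps` grows. That mirrors, in a very simplified way, the abstract's claim of convergence to a common value within a small tolerance of the ideal model.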

Distributed Online Optimization with Byzantine Adversarial Agents

Sep 25, 2021

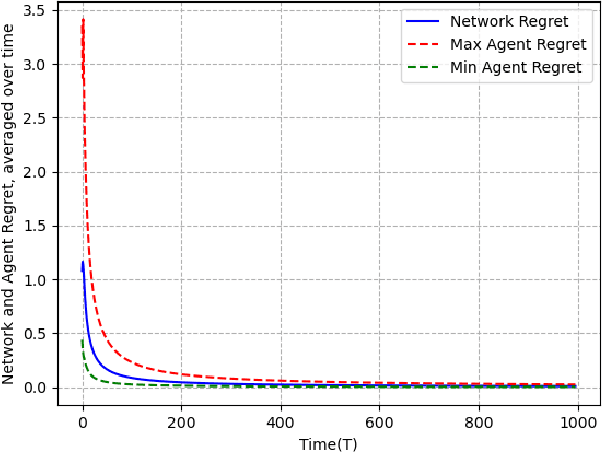

Abstract: We study the problem of unconstrained, discrete-time, online distributed optimization in a multi-agent system where some of the agents do not follow the prescribed update rule, due either to failures or to malicious intent. None of the agents has prior information about the identities of the faulty agents, and each agent can communicate only with its immediate neighbours. At each time step, a Lipschitz, strongly convex cost function is revealed locally to all the agents, and the non-faulty agents update their states using their local information and the information obtained from their neighbours. We measure the performance of the online algorithm by comparing it to its offline version, in which the cost functions are known a priori. The difference between the two is termed the regret. Under sufficient conditions on the graph topology and on the number and location of the adversaries, the defined regret grows sublinearly. We further conduct numerical experiments to validate our theoretical results.
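For orientation, a generic form of the regret used in online optimization is sketched below. The abstract does not give the paper's exact definition, which may sum over agents or restrict to non-faulty ones, so the symbols $f_t$, $x_t$, and $T$ are assumptions: $f_t$ is the cost revealed at step $t$, $x_t$ is the state chosen before it is revealed, and $T$ is the horizon.

```latex
R(T) \;=\; \sum_{t=1}^{T} f_t(x_t) \;-\; \min_{x} \sum_{t=1}^{T} f_t(x),
\qquad
\text{sublinear regret:}\quad \lim_{T \to \infty} \frac{R(T)}{T} = 0 .
```

Sublinear growth means the average per-step loss of the online algorithm approaches that of the best fixed decision chosen in hindsight.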
