An Ran Ran

Unpaired Optical Coherence Tomography Angiography Image Super-Resolution via Frequency-Aware Inverse-Consistency GAN

Sep 29, 2023

Abstract: For optical coherence tomography angiography (OCTA) images, a limited scanning rate leads to a trade-off between field-of-view (FOV) and imaging resolution. Although larger FOV images may reveal more parafoveal vascular lesions, their application is greatly hampered due to lower resolution. To increase the resolution, previous works only achieved satisfactory performance by using paired data for training, but real-world applications are limited by the challenge of collecting large-scale paired images. Thus, an unpaired approach is highly demanded. Generative Adversarial Network (GAN) has been commonly used in the unpaired setting, but it may struggle to accurately preserve fine-grained capillary details, which are critical biomarkers for OCTA. In this paper, our approach aspires to preserve these details by leveraging the frequency information, which represents details as high-frequencies ($\textbf{hf}$) and coarse-grained backgrounds as low-frequencies ($\textbf{lf}$). In general, we propose a GAN-based unpaired super-resolution method for OCTA images and exceptionally emphasize $\textbf{hf}$ fine capillaries through a dual-path generator. To facilitate a precise spectrum of the reconstructed image, we also propose a frequency-aware adversarial loss for the discriminator and introduce a frequency-aware focal consistency loss for end-to-end optimization. Experiments show that our method outperforms other state-of-the-art unpaired methods both quantitatively and visually.
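The split into high-frequency detail and low-frequency background mentioned in this abstract can be illustrated with a plain Fourier-domain mask. The sketch below is only an illustration of that general idea, not the paper's dual-path generator or its losses; the function name `split_frequencies` and the circular cutoff `radius` are assumptions introduced here.

```python
import numpy as np

def split_frequencies(image, radius):
    """Split a 2D image into low- and high-frequency parts.

    Illustrative only: uses a hard circular mask in the centered FFT
    spectrum. `radius` (in frequency bins) is a hypothetical cutoff.
    """
    h, w = image.shape
    # Centered 2D spectrum: the zero frequency sits at (h // 2, w // 2).
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    yy, xx = np.ogrid[:h, :w]
    dist = np.sqrt((yy - h // 2) ** 2 + (xx - w // 2) ** 2)
    low_mask = dist <= radius
    # Inverse-transform each masked spectrum back to image space.
    low = np.fft.ifft2(np.fft.ifftshift(spectrum * low_mask)).real
    high = np.fft.ifft2(np.fft.ifftshift(spectrum * ~low_mask)).real
    return low, high
```

Because the two masks are complementary, `low + high` reproduces the input image up to floating-point error; fine structures such as capillaries would be expected to fall mostly in `high`.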

Reference-based OCT Angiogram Super-resolution with Learnable Texture Generation

May 10, 2023

Abstract: Optical coherence tomography angiography (OCTA) is a new imaging modality to visualize retinal microvasculature and has been readily adopted in clinics. High-resolution OCT angiograms are important to qualitatively and quantitatively identify potential biomarkers for different retinal diseases accurately. However, one significant problem of OCTA is the inevitable decrease in resolution when increasing the field-of-view given a fixed acquisition time. To address this issue, we propose a novel reference-based super-resolution (RefSR) framework to preserve the resolution of the OCT angiograms while increasing the scanning area. Specifically, textures from the normal RefSR pipeline are used to train a learnable texture generator (LTG), which is designed to generate textures according to the input. The key difference between the proposed method and traditional RefSR models is that the textures used during inference are generated by the LTG instead of being searched from a single reference image. Since the LTG is optimized throughout the whole training process, the available texture space is significantly enlarged and no longer limited to a single reference image, but extends to all textures contained in the training samples. Moreover, our proposed LTGNet does not require a reference image at the inference phase, thereby becoming invulnerable to the selection of the reference image. Both experimental and visual results show that LTGNet has superior performance and robustness over state-of-the-art methods, indicating good reliability and promise in real-life deployment. The source code will be made available upon acceptance.

Detecting Glaucoma Using 3D Convolutional Neural Network of Raw SD-OCT Optic Nerve Scans

Oct 14, 2019

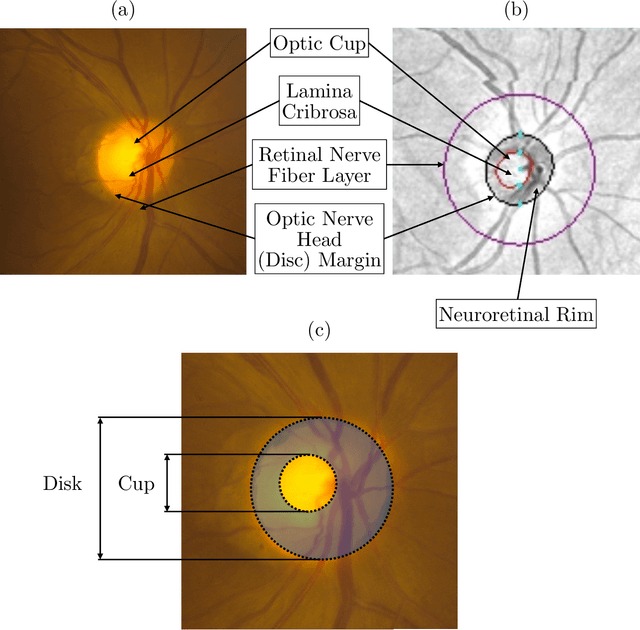

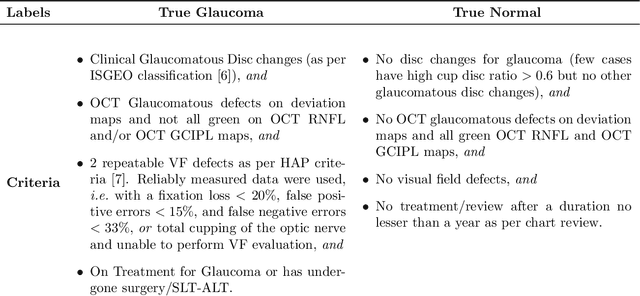

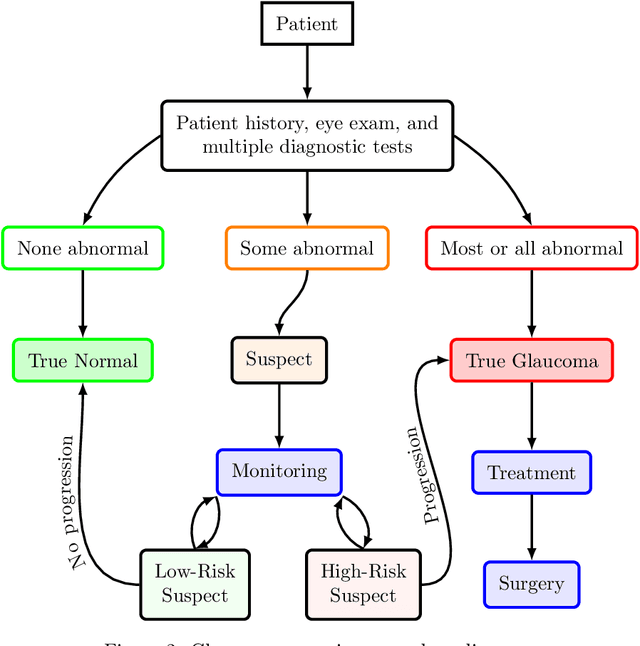

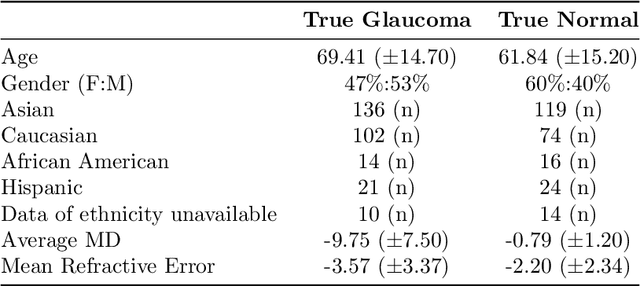

Abstract: We propose developing and validating a three-dimensional (3D) deep learning system using the entire unprocessed OCT optic nerve volumes to distinguish true glaucoma from normals in order to discover any additional imaging biomarkers within the cube through saliency mapping. The algorithm has been validated against 4 additional distinct datasets from different countries using multimodal test results to define glaucoma rather than just the OCT alone. 2076 OCT (Cirrus SD-OCT, Carl Zeiss Meditec, Dublin, CA) cube scans centered over the optic nerve, of 879 eyes (390 healthy and 489 glaucoma) from 487 patients, age 18-84 years, were exported from the Glaucoma Clinic Imaging Database at the Byers Eye Institute, Stanford University, from March 2010 to December 2017. A 3D deep neural network was trained and tested on this unique OCT optic nerve head dataset from Stanford. A total of 3620 scans (all obtained using the Cirrus SD-OCT device) from 1458 eyes obtained from 4 different institutions, from the United States (943 scans), Hong Kong (1625 scans), India (672 scans), and Nepal (380 scans) were used for external evaluation. The 3D deep learning system achieved an area under the receiver operating characteristic curve (AUROC) of 0.8883 in the primary Stanford test set identifying true normal from true glaucoma. The system obtained AUROCs of 0.8571, 0.7695, 0.8706, and 0.7965 on OCT cubes from the United States, Hong Kong, India, and Nepal, respectively. We also analyzed the performance of the model separately for each myopia severity level as defined by spherical equivalent and the model was able to achieve F1 scores of 0.9673, 0.9491, and 0.8528 on severe, moderate, and mild myopia cases, respectively. Saliency map visualizations highlighted a significant association between the optic nerve lamina cribrosa region in the glaucoma group.
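For readers unfamiliar with the two metrics reported in this abstract, the sketch below shows how AUROC and F1 are commonly computed for a binary classifier. It is a generic illustration, not the authors' evaluation code; the function names and the toy labels and scores in the usage note are assumptions made here.

```python
import numpy as np

def auroc(labels, scores):
    """AUROC as the probability that a random positive case scores
    higher than a random negative case (ties count as one half)."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos = scores[labels]
    neg = scores[~labels]
    # Compare every positive score with every negative score.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)

def f1_score(labels, predictions):
    """F1 = 2*TP / (2*TP + FP + FN) for binary labels and predictions."""
    labels = np.asarray(labels, dtype=bool)
    predictions = np.asarray(predictions, dtype=bool)
    tp = np.sum(labels & predictions)
    fp = np.sum(~labels & predictions)
    fn = np.sum(labels & ~predictions)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0
```

With toy inputs, `auroc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])` evaluates to 0.75, since three of the four positive-negative pairs are ranked correctly. AUROC is threshold-free, whereas F1 depends on the decision threshold used to turn scores into predictions.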
