Amy Tabb

Self-supervised Learning for Panoptic Segmentation of Multiple Fruit Flower Species

Sep 10, 2022

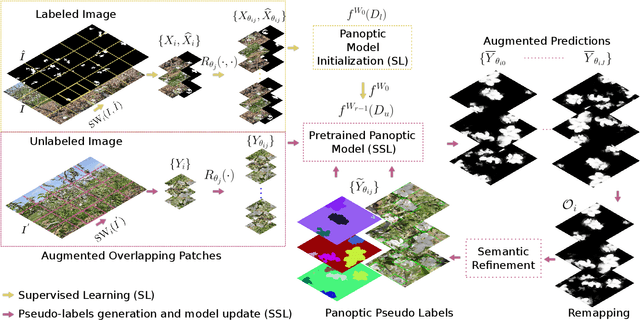

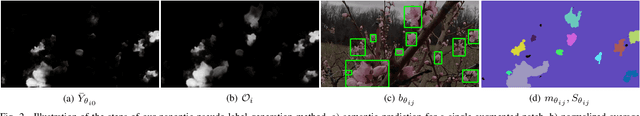

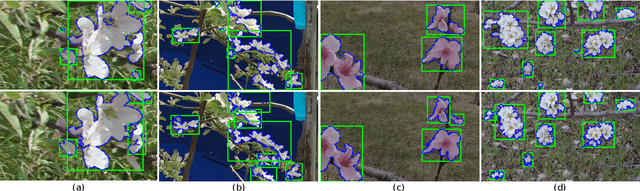

Abstract: Convolutional neural networks trained using manually generated labels are commonly used for semantic or instance segmentation. In precision agriculture, automated flower detection methods use supervised models and post-processing techniques that may not perform consistently as the appearance of the flowers and the data acquisition conditions vary. We propose a self-supervised learning strategy to enhance the sensitivity of segmentation models to different flower species using automatically generated pseudo-labels. We employ a data augmentation and refinement approach to improve the accuracy of the model predictions. The augmented semantic predictions are then converted to panoptic pseudo-labels to iteratively train a multi-task model. The self-supervised model predictions can be refined with existing post-processing approaches to further improve their accuracy. An evaluation on a multi-species fruit tree flower dataset demonstrates that our method outperforms state-of-the-art models without computationally expensive post-processing steps, providing a new baseline for flower detection applications.
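The abstract does not say how semantic predictions are converted to panoptic pseudo-labels. As a minimal sketch of one common approach, and only an assumption here, each 4-connected foreground region of a binary flower mask can be given its own instance id. The function name `semantic_to_instances` and the toy mask are hypothetical; touching flowers would be merged into one instance under this simple scheme.

```python
from collections import deque


def semantic_to_instances(mask):
    """Assign ids 1, 2, ... to 4-connected foreground regions of a binary mask.

    Hypothetical helper for illustration; not the method from the paper.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            # Start a new instance at every unlabeled foreground pixel.
            if mask[r][c] and labels[r][c] == 0:
                next_id += 1
                labels[r][c] = next_id
                queue = deque([(r, c)])
                # Breadth-first flood fill over the 4-neighbourhood.
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        inside = 0 <= ny < rows and 0 <= nx < cols
                        if inside and mask[ny][nx] and labels[ny][nx] == 0:
                            labels[ny][nx] = next_id
                            queue.append((ny, nx))
    return labels, next_id


# Toy semantic mask: 1 = flower, 0 = background (made-up data).
mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 1, 1],
]
labels, count = semantic_to_instances(mask)
```

In a panoptic label, the background would keep a single "stuff" id while each labeled region becomes a separate "thing" instance.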

ArXiving Before Submission Helps Everyone

Oct 11, 2020

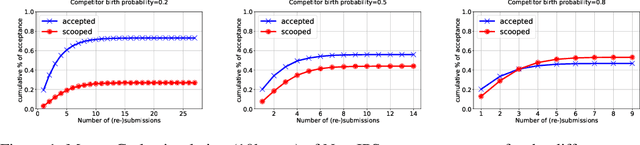

Abstract: We claim, and present evidence, that allowing arXiv publication before a conference or journal submission benefits researchers, especially early-career ones, as well as the whole scientific community. Specifically, arXiving helps professional identity building, protects against independent re-discovery, idea theft and gate-keeping; it facilitates open research result distribution and reduces inequality. The advantages dwarf the drawbacks -- mainly the relative increase in acceptance rate of papers of well-known authors -- which studies show to be marginal. Analyzing the pros and cons of arXiving papers, we conclude that requiring preprints to be anonymous is nearly as detrimental as not allowing them. We see no reason why anyone but the authors should decide whether to arXiv or not.

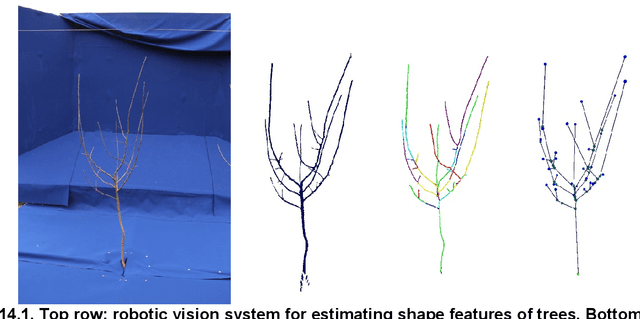

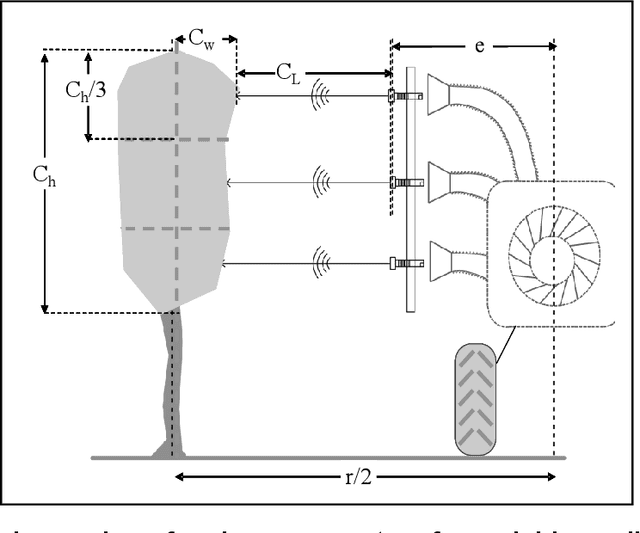

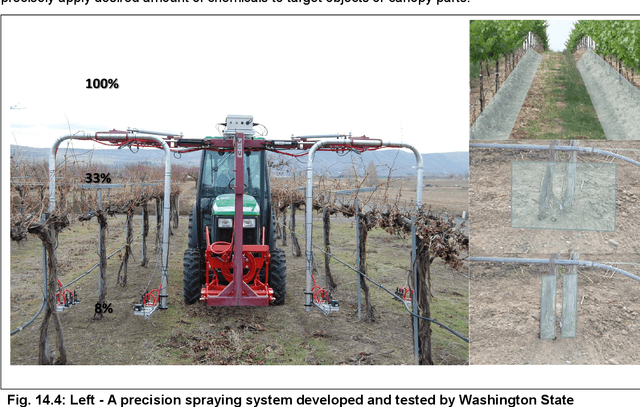

The Use of Agricultural Robots in Orchard Management

Jul 30, 2019

Abstract: Book chapter that summarizes recent research on agricultural robotics in orchard management, including robotic pruning, thinning, spraying, harvesting, and fruit transportation, as well as future trends.

* 22 pages

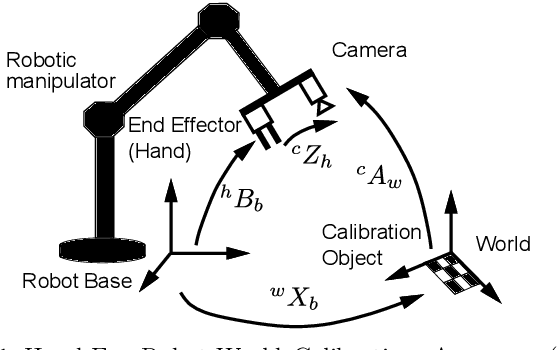

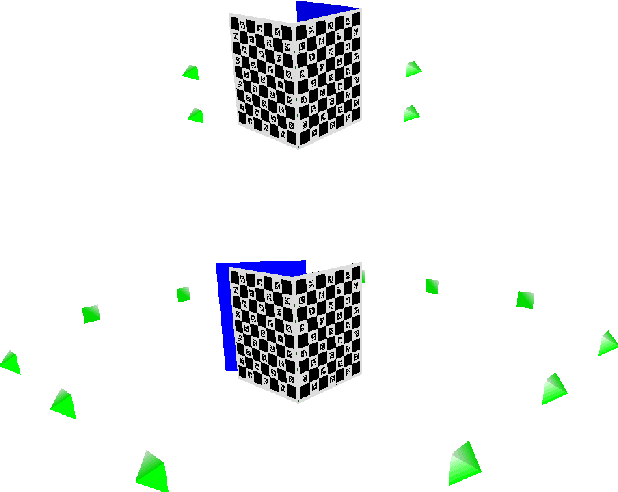

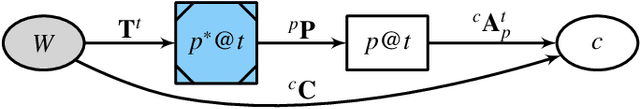

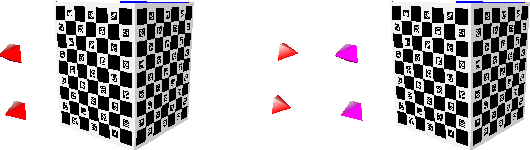

Solving the Robot-World Hand-Eye Calibration Problem with Iterative Methods

Jul 29, 2019

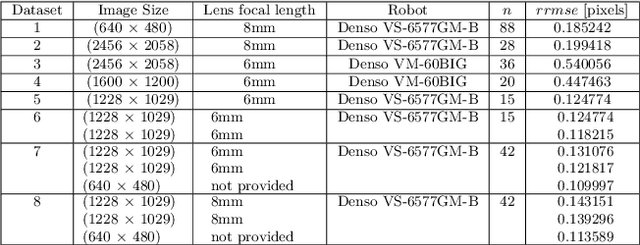

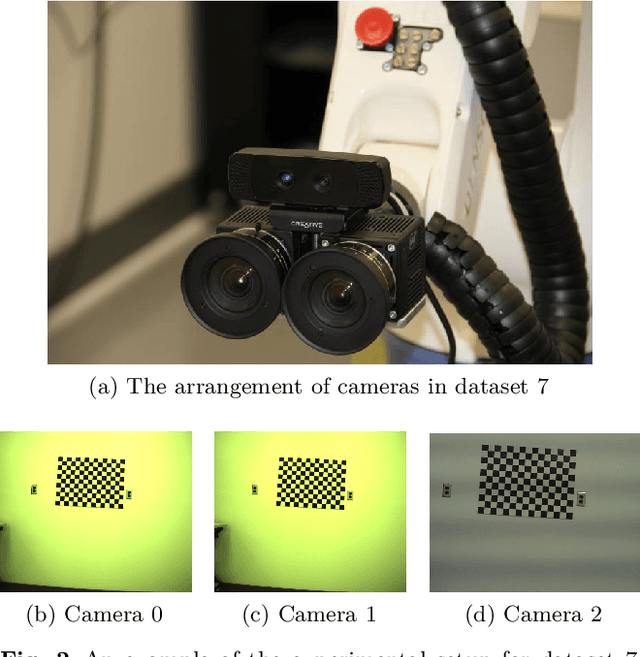

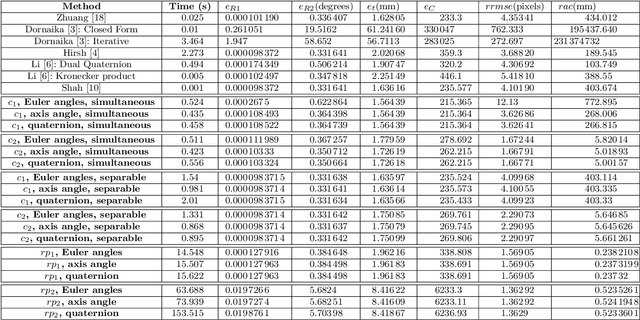

Abstract: Robot-world, hand-eye calibration is the problem of determining the transformation between the robot end-effector and a camera, as well as the transformation between the robot base and the world coordinate system. This relationship has been modeled as $\mathbf{AX}=\mathbf{ZB}$, where $\mathbf{X}$ and $\mathbf{Z}$ are unknown homogeneous transformation matrices. The successful execution of many robot manipulation tasks depends on determining these matrices accurately, and we are particularly interested in calibration for vision tasks. In this work, we describe a collection of methods consisting of two cost function classes, three different parameterizations of rotation components, and separable versus simultaneous formulations. We explore the behavior of this collection of methods on real and simulated datasets, and compare it to seven other state-of-the-art methods. Our collection of methods returns greater accuracy on many metrics than the state of the art. The collection of methods is extended to the problem of robot-world hand-multiple-eye calibration, and results are shown with two and three cameras mounted on the same robot.

* 25 pages, including Erratum
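To make the $\mathbf{AX}=\mathbf{ZB}$ model concrete, the sketch below builds synthetic poses that satisfy it exactly and evaluates a simple algebraic residual, the sum of squared Frobenius norms of $\mathbf{A}_i\mathbf{X}-\mathbf{Z}\mathbf{B}_i$. This residual is an illustrative assumption and is not claimed to be either of the paper's cost function classes. All function names and the synthetic data are hypothetical, and NumPy is assumed to be available.

```python
import numpy as np


def random_rotation(rng):
    """Random 3x3 rotation matrix via QR decomposition (determinant +1)."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))   # fix column signs; q stays orthogonal
    if np.linalg.det(q) < 0:      # flip one column to get a proper rotation
        q[:, 0] = -q[:, 0]
    return q


def random_transform(rng):
    """Random 4x4 homogeneous rigid transformation."""
    T = np.eye(4)
    T[:3, :3] = random_rotation(rng)
    T[:3, 3] = rng.normal(size=3)
    return T


def axzb_residual(A_list, B_list, X, Z):
    """Sum over poses of ||A_i X - Z B_i||_F^2 (illustrative cost only)."""
    return sum(
        np.linalg.norm(A @ X - Z @ B, "fro") ** 2
        for A, B in zip(A_list, B_list)
    )


rng = np.random.default_rng(0)
X_true = random_transform(rng)
Z_true = random_transform(rng)
B_list = [random_transform(rng) for _ in range(5)]
# Construct each A_i so that A_i X = Z B_i holds exactly: A_i = Z B_i X^{-1}.
A_list = [Z_true @ B @ np.linalg.inv(X_true) for B in B_list]

residual_true = axzb_residual(A_list, B_list, X_true, Z_true)
residual_wrong = axzb_residual(A_list, B_list, np.eye(4), np.eye(4))
```

The residual is near zero at the true `X_true` and `Z_true` and clearly larger for an incorrect guess such as the identity, which is the property an iterative solver would exploit.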

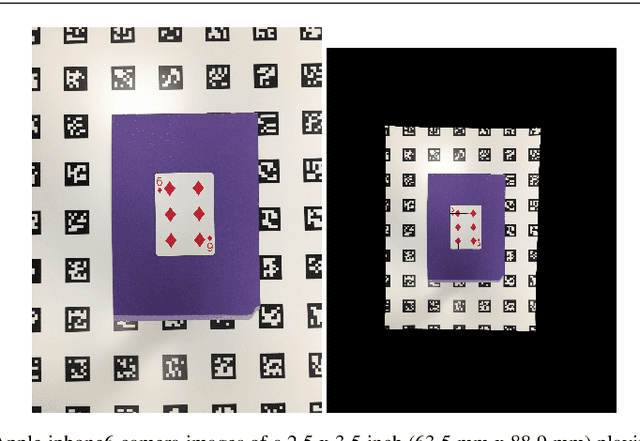

Using cameras for precise measurement of two-dimensional plant features

Apr 30, 2019

Abstract: Images are used frequently in plant phenotyping to capture measurements. This chapter offers a repeatable method for capturing two-dimensional measurements of plant parts in field or laboratory settings using a variety of camera styles (cellular phone, DSLR), with the addition of a printed calibration pattern. The method is based on calibrating the camera using information available in the image's EXIF tags, as well as visual information from the pattern. Code is provided to implement the method, as well as a dataset for testing. We include steps to verify protocol correctness by imaging an artifact. The use of this protocol for two-dimensional plant phenotyping will allow data capture from different cameras and environments, with comparison on the same physical scale.
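As a rough illustration of how EXIF data and a printed pattern can put measurements on a physical scale, the sketch below converts a focal length in millimetres to pixels and derives a millimetres-per-pixel factor from a pattern square of known size. This is plain arithmetic under stated assumptions, not the chapter's protocol: the function names and every numeric value are hypothetical, the sensor width is assumed to be known (it is not always present in EXIF), and the scale factor is only meaningful when the measured object lies in the pattern plane and the image is fronto-parallel or has been rectified.

```python
def focal_length_pixels(focal_length_mm, sensor_width_mm, image_width_px):
    """Initial focal length estimate in pixels from EXIF-style values."""
    return focal_length_mm * image_width_px / sensor_width_mm


def mm_per_pixel(square_size_mm, square_size_px):
    """Physical scale in the pattern plane from one calibration square."""
    return square_size_mm / square_size_px


# Hypothetical values, as if read from EXIF tags and the detected pattern.
f_px = focal_length_pixels(focal_length_mm=4.0,
                           sensor_width_mm=6.0,
                           image_width_px=3000)
scale = mm_per_pixel(square_size_mm=25.0, square_size_px=100.0)

# A plant part spanning 320 pixels in the pattern plane, in millimetres.
leaf_width_mm = 320 * scale
```

An estimate like `f_px` would typically serve only as a starting point that the pattern-based calibration then refines.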

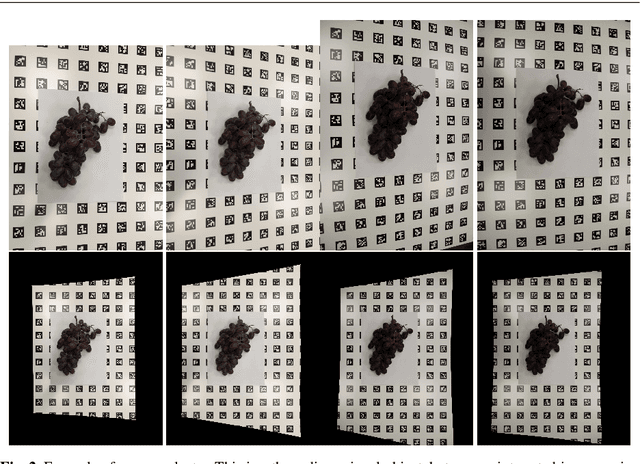

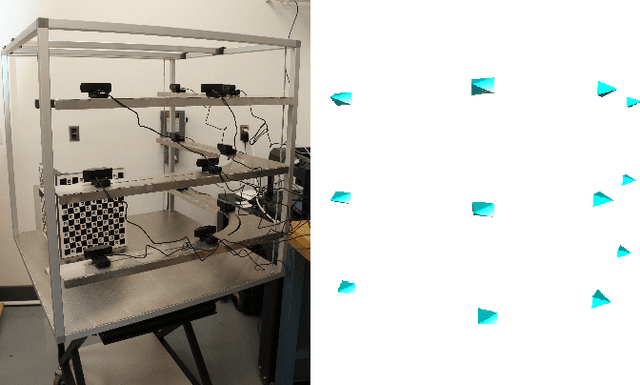

Calibration of Asynchronous Camera Networks for Object Reconstruction Tasks

Mar 15, 2019

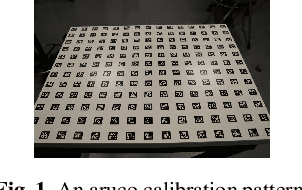

Abstract: Camera network and multi-camera calibration for external parameters is a necessary step for a variety of contexts in computer vision and robotics, ranging from three-dimensional reconstruction to human activity tracking. This paper describes a method for camera network and/or multi-camera calibration suitable for specific contexts: the cameras may not all have a common field of view, or if they do, there may be some views that are 180 degrees from one another, and the network may be asynchronous. The calibration object required consists of one or more planar calibration patterns that are rigidly attached to one another and distinguishable from one another, such as ArUco or ChArUco patterns. We formulate the camera network and/or multi-camera calibration problem in this context using rigidity constraints, represented as a system of equations, and an approximate solution is found through a two-step process. Synthetic and real experiments, including scenarios of an asynchronous camera network and a rotating imaging system, demonstrate the method in a variety of settings. Reconstruction accuracy error was less than 0.5 mm for all datasets. This method is suitable for new users to calibrate a camera network, and the modularity of the calibration object also allows for disassembly, shipping, and the use of this method in a variety of large and small spaces.

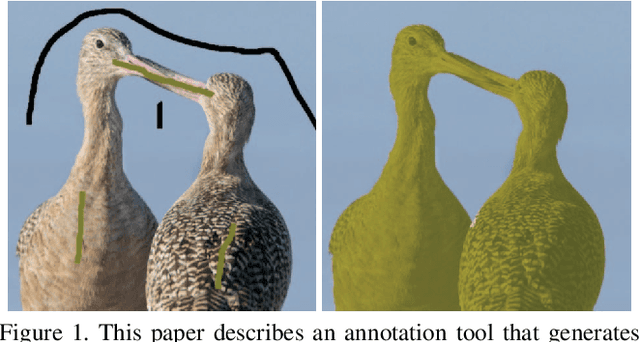

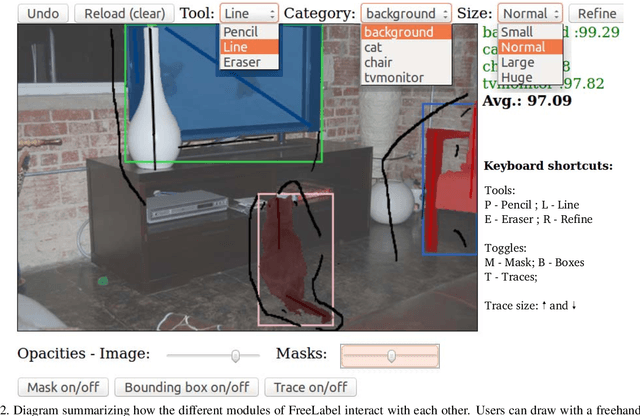

FreeLabel: A Publicly Available Annotation Tool based on Freehand Traces

Mar 11, 2019

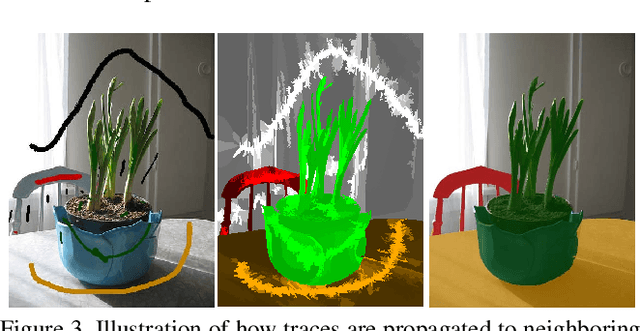

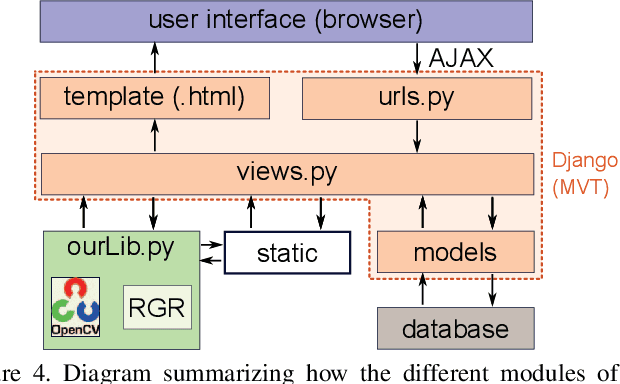

Abstract: Large-scale annotation of image segmentation datasets is often prohibitively expensive, as it usually requires a huge number of worker hours to obtain high-quality results. Abundant and reliable data has been, however, crucial for the advances in image understanding tasks achieved by deep learning models. In this paper, we introduce FreeLabel, an intuitive open-source web interface that allows users to obtain high-quality segmentation masks with just a few freehand scribbles, in a matter of seconds. The efficacy of FreeLabel is quantitatively demonstrated by experimental results on the PASCAL dataset as well as on a dataset from the agricultural domain. Designed to benefit the computer vision community, FreeLabel can be used for either crowdsourced or private annotation and has a modular structure that can be easily adapted for any image dataset.

* Accepted and presented at 2019 IEEE Winter Conference on Applications of Computer Vision (WACV). 10 pages

Detecting Invasive Insects with Unmanned Aerial Vehicles

Mar 03, 2019

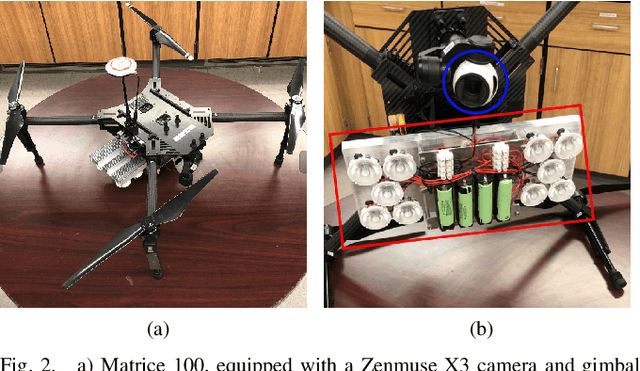

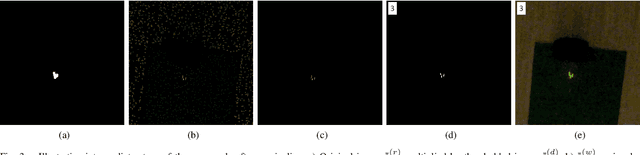

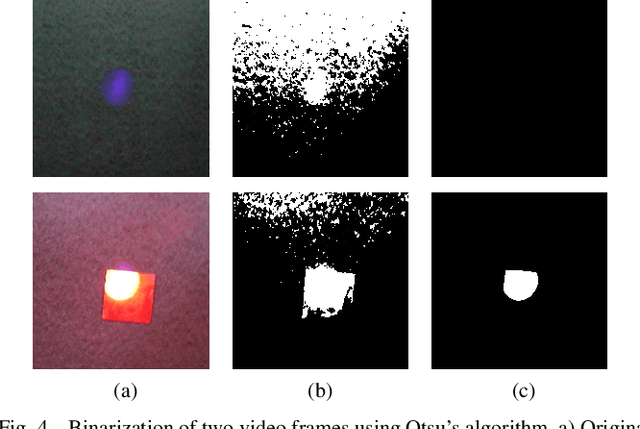

Abstract: A key aspect of controlling and reducing the effects invasive insect species have on agriculture is to obtain knowledge about the migration patterns of these species. Current state-of-the-art methods of studying these migration patterns involve a mark-release-recapture technique, in which insects are released after being marked and researchers attempt to recapture them later. However, this approach involves a human researcher manually searching for these insects in large fields and results in very low recapture rates. In this paper, we propose an automated system for detecting released insects using an unmanned aerial vehicle. This system utilizes ultraviolet lighting technology, digital cameras, and lightweight computer vision algorithms to detect insects more quickly and accurately than the current state of the art. The efficiency and accuracy that this system provides will allow for a more comprehensive understanding of invasive insect species migration patterns. Our experimental results demonstrate that our system can detect real target insects in field conditions with high precision and recall rates.

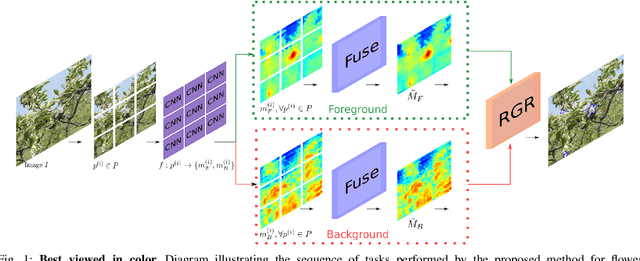

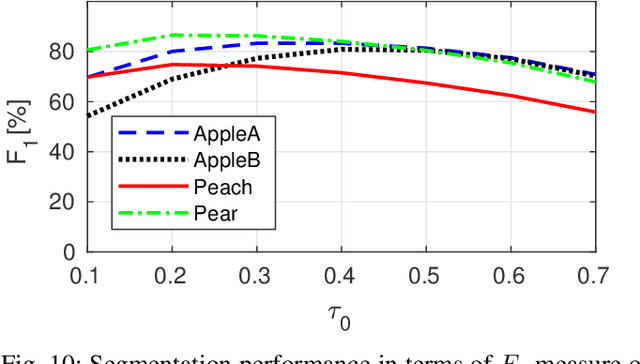

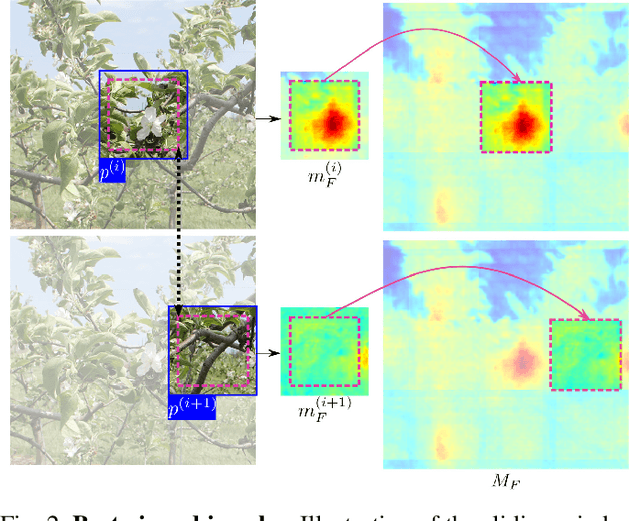

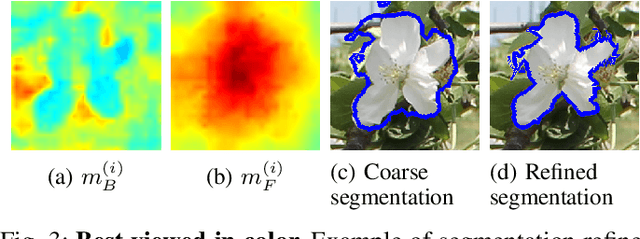

Multispecies fruit flower detection using a refined semantic segmentation network

Sep 20, 2018

Abstract: In fruit production, critical crop management decisions are guided by bloom intensity, i.e., the number of flowers present in an orchard. Despite its importance, bloom intensity is still typically estimated by means of human visual inspection. Existing automated computer vision systems for flower identification are based on hand-engineered techniques that work only under specific conditions and with limited performance. This work proposes an automated technique for flower identification that is robust to uncontrolled environments and applicable to different flower species. Our method relies on an end-to-end residual convolutional neural network (CNN) that represents the state of the art in semantic segmentation. To enhance its sensitivity to flowers, we fine-tune this network using a single dataset of apple flower images. Since CNNs tend to produce coarse segmentations, we employ a refinement method to better distinguish between individual flower instances. Without any pre-processing or dataset-specific training, experimental results on images of apple, peach, and pear flowers acquired under different conditions demonstrate the robustness and broad applicability of our method.

* 8 pages

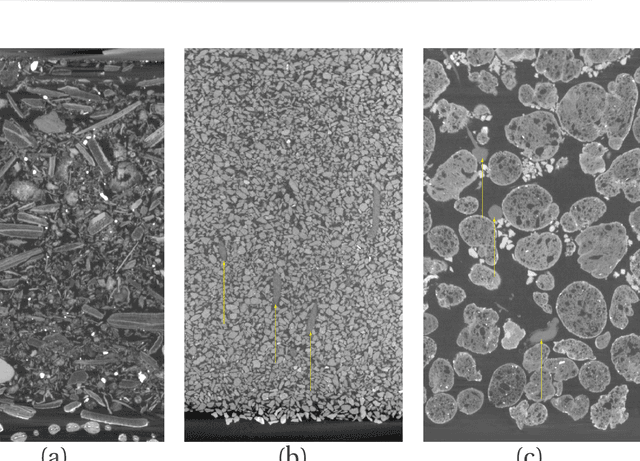

Segmenting root systems in X-ray computed tomography images using level sets

Sep 17, 2018

Abstract: The segmentation of plant roots from soil and other growing media in X-ray computed tomography images is needed to effectively study the root system architecture without excavation. However, segmentation is a challenging problem in this context because the root and non-root regions share similar features. In this paper, we describe a method based on level sets and specifically adapted for this segmentation problem. In particular, we deal with the issues of using a level sets approach on large image volumes for root segmentation, and track active regions of the front using an occupancy grid. This method allows for straightforward modifications to a narrow-band algorithm such that excessive forward and backward movements of the front can be avoided, distance map computations in a narrow-band context can be done in linear time through modification of Meijster et al.'s distance transform algorithm, and regions of the image volume are iteratively used to estimate distributions for root versus non-root classes. Results are shown for three plant species of different maturity levels, grown in three different media. Our method compares favorably to a state-of-the-art method for root segmentation in X-ray CT image volumes.

* 11 pages
