Alireza Bagheri

Neighborhood-Order Learning Graph Attention Network for Fake News Detection

Feb 10, 2025

Abstract:Fake news detection is a significant challenge in the digital age, which has become increasingly important with the proliferation of social media and online communication networks. Graph Neural Networks (GNN)-based methods have shown high potential in analyzing graph-structured data for this problem. However, a major limitation in conventional GNN architectures is their inability to effectively utilize information from neighbors beyond the network's layer depth, which can reduce the model's accuracy and effectiveness. In this paper, we propose a novel model called Neighborhood-Order Learning Graph Attention Network (NOL-GAT) for fake news detection. This model allows each node in each layer to independently learn its optimal neighborhood order. By doing so, the model can purposefully and efficiently extract critical information from distant neighbors. The NOL-GAT architecture consists of two main components: a Hop Network that determines the optimal neighborhood order and an Embedding Network that updates node embeddings using these optimal neighborhoods. To evaluate the model's performance, experiments are conducted on various fake news datasets. Results demonstrate that NOL-GAT significantly outperforms baseline models in metrics such as accuracy and F1-score, particularly in scenarios with limited labeled data. Features such as mitigating the over-squashing problem, improving information flow, and reducing computational complexity further highlight the advantages of the proposed model.
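To make the notion of a "neighborhood order" concrete, the following is a minimal sketch, not the NOL-GAT implementation. It assumes that order k means the set of nodes at shortest-path distance exactly k, and it only enumerates that set with a breadth-first search. The function name `k_hop_neighbors` and the toy graph are hypothetical; how the Hop Network actually picks k is not described in the abstract and is not modeled here.

```python
from collections import deque

def k_hop_neighbors(adj, source, k):
    """Return the nodes whose shortest-path distance from `source` is exactly k.

    `adj` is an adjacency-list dict; this helper is illustrative only.
    """
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if dist[node] == k:
            continue  # do not expand past k hops
        for nxt in adj.get(node, ()):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return {n for n, d in dist.items() if d == k}

# Toy path graph 0 - 1 - 2 - 3 (hypothetical data).
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
hop1 = k_hop_neighbors(adj, 0, 1)  # what a single conventional layer aggregates
hop3 = k_hop_neighbors(adj, 0, 3)  # a distant neighborhood reachable in one step
```

On this toy graph, a single layer restricted to order 1 lets node 0 see only node 1, whereas choosing order 3 exposes node 3 directly, which is the kind of long-range access the abstract describes.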

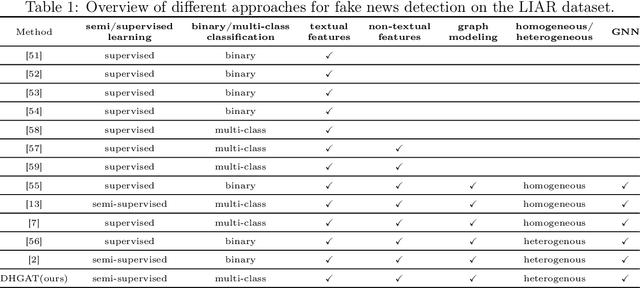

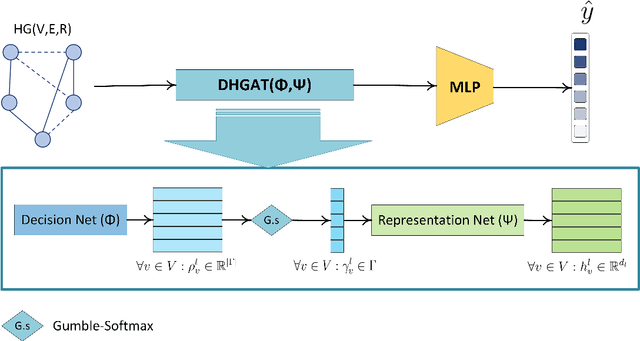

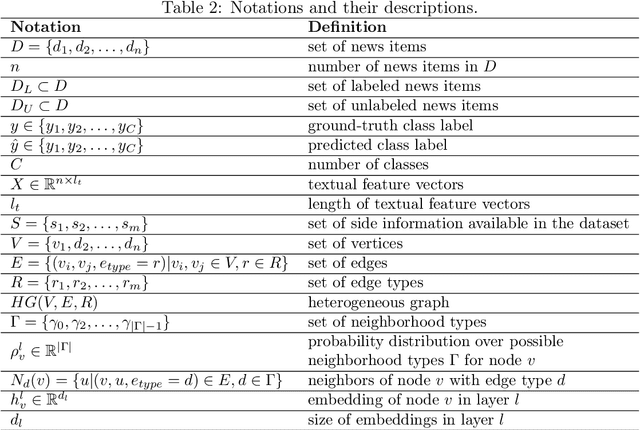

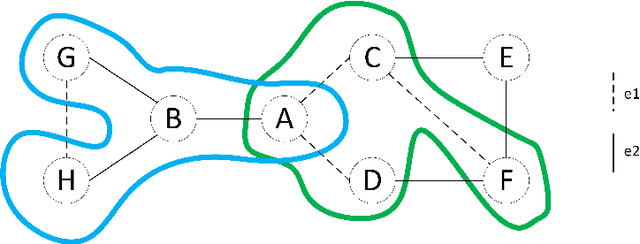

A Decision-Based Heterogenous Graph Attention Network for Multi-Class Fake News Detection

Jan 06, 2025

Abstract:A promising tool for addressing fake news detection is Graph Neural Networks (GNNs). However, most existing GNN-based methods rely on binary classification, categorizing news as either real or fake. Additionally, traditional GNN models use a static neighborhood for each node, making them susceptible to issues like over-squashing. In this paper, we introduce a novel model named Decision-based Heterogeneous Graph Attention Network (DHGAT) for fake news detection in a semi-supervised setting. DHGAT effectively addresses the limitations of traditional GNNs by dynamically optimizing and selecting the neighborhood type for each node in every layer. It represents news data as a heterogeneous graph where nodes (news items) are connected by various types of edges. The architecture of DHGAT consists of a decision network that determines the optimal neighborhood type and a representation network that updates node embeddings based on this selection. As a result, each node learns an optimal and task-specific computational graph, enhancing both the accuracy and efficiency of the fake news detection process. We evaluate DHGAT on the LIAR dataset, a large and challenging dataset for multi-class fake news detection, which includes news items categorized into six classes. Our results demonstrate that DHGAT outperforms existing methods, improving accuracy by approximately 4% and showing robustness with limited labeled data.

LOSS-GAT: Label Propagation and One-Class Semi-Supervised Graph Attention Network for Fake News Detection

Feb 13, 2024

Abstract:In the era of widespread social networks, the rapid dissemination of fake news has emerged as a significant threat, inflicting detrimental consequences across various dimensions of people's lives. Machine learning and deep learning approaches have been extensively employed for identifying fake news. However, a significant challenge in identifying fake news is the limited availability of labeled news datasets. Therefore, the One-Class Learning (OCL) approach, utilizing only a small set of labeled data from the interest class, can be a suitable approach to address this challenge. On the other hand, representing data as a graph enables access to diverse content and structural information, and label propagation methods on graphs can be effective in predicting node labels. In this paper, we adopt a graph-based model for data representation and introduce a semi-supervised and one-class approach for fake news detection, called LOSS-GAT. Initially, we employ a two-step label propagation algorithm, utilizing Graph Neural Networks (GNNs) as an initial classifier to categorize news into two groups: interest (fake) and non-interest (real). Subsequently, we enhance the graph structure using structural augmentation techniques. Ultimately, we predict the final labels for all unlabeled data using a GNN that induces randomness within the local neighborhood of nodes through the aggregation function. We evaluate our proposed method on five common datasets and compare the results against a set of baseline models, including both OCL and binary labeled models. The results demonstrate that LOSS-GAT achieves a notable improvement, surpassing 10%, with the advantage of utilizing only a limited set of labeled fake news. Noteworthy, LOSS-GAT even outperforms binary labeled models.
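As background for the label propagation step mentioned above, here is a minimal sketch of classical label propagation on a graph. It is not the two-step, GNN-based procedure of LOSS-GAT, and unlike the one-class setting it assumes seed labels for both classes to keep the example small. The function name `propagate_labels`, the score convention (1.0 = fake, 0.0 = real), and the toy graph are all assumptions.

```python
def propagate_labels(adj, seeds, iterations=20):
    """Classical label propagation with clamped seeds.

    Each unlabeled node's score is repeatedly replaced by the mean score of
    its neighbors; seed nodes keep their given score on every iteration.
    """
    scores = {node: seeds.get(node, 0.5) for node in adj}
    for _ in range(iterations):
        updated = {}
        for node, nbrs in adj.items():
            if node in seeds:
                updated[node] = seeds[node]      # clamp labeled nodes
            elif nbrs:
                updated[node] = sum(scores[m] for m in nbrs) / len(nbrs)
            else:
                updated[node] = scores[node]     # isolated node: unchanged
        scores = updated
    return scores

# Toy path graph a - b - c - d (hypothetical data).
adj = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
seeds = {"a": 1.0, "d": 0.0}  # assumed convention: 1.0 = fake, 0.0 = real
scores = propagate_labels(adj, seeds)
```

The unlabeled node next to the fake seed ends up with a higher score than the one next to the real seed, which is the intuition behind using graph structure to extend a small labeled set.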

Securing Pathways with Orthogonal Robots

Aug 19, 2023

Abstract:The protection of pathways holds immense significance across various domains, including urban planning, transportation, surveillance, and security. This article introduces a groundbreaking approach to safeguarding pathways by employing orthogonal robots. The study specifically addresses the challenge of efficiently guarding orthogonal areas with the minimum number of orthogonal robots. The primary focus is on orthogonal pathways, characterized by a path-like dual graph of vertical decomposition. It is demonstrated that determining the minimum number of orthogonal robots for pathways can be achieved in linear time. However, it is essential to note that the general problem of finding the minimum number of robots for simple polygons with general visibility, even in the orthogonal case, is known to be NP-hard. Emphasis is placed on the flexibility of placing robots anywhere within the polygon, whether on the boundary or in the interior.

* 8 pages, 5 figures

Minimizing Turns in Watchman Robot Navigation: Strategies and Solutions

Aug 19, 2023

Abstract:The Orthogonal Watchman Route Problem (OWRP) entails the search for the shortest path, known as the watchman route, that a robot must follow within a polygonal environment. The primary objective is to ensure that every point in the environment remains visible from at least one point on the route, allowing the robot to survey the entire area in a single, continuous sweep. This research places particular emphasis on reducing the number of turns in the route, as it is crucial for optimizing navigation in watchman routes within the field of robotics. The cost associated with changing direction is of significant importance, especially for specific types of robots. This paper introduces an efficient linear-time algorithm for solving the OWRP under the assumption that the environment is monotone. The findings of this study contribute to the progress of robotic systems by enabling the design of more streamlined patrol robots. These robots are capable of efficiently navigating complex environments while minimizing the number of turns. This advancement enhances their coverage and surveillance capabilities, making them highly effective in various real-world applications.

* 6 pages, 3 figures
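The turn-count objective described in this abstract can be illustrated with a small helper. This is only a sketch of how the cost of a given route might be measured, not the paper's linear-time algorithm. It assumes the route is a list of (x, y) waypoints joined by axis-aligned segments; `count_turns` and the sample route are hypothetical.

```python
def count_turns(route):
    """Count changes between horizontal and vertical travel along a route.

    `route` is a list of (x, y) waypoints joined by axis-aligned segments.
    Reversals along the same axis are not counted as turns in this sketch.
    """
    directions = []
    for (x0, y0), (x1, y1) in zip(route, route[1:]):
        if (x0, y0) == (x1, y1):
            continue  # skip zero-length segments
        if x0 != x1 and y0 != y1:
            raise ValueError("segment is not axis-aligned")
        directions.append("H" if y0 == y1 else "V")
    return sum(1 for a, b in zip(directions, directions[1:]) if a != b)

# Hypothetical route: right, then up, then right again.
route = [(0, 0), (3, 0), (3, 2), (5, 2)]
turns = count_turns(route)
```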

Adversarial Training for Probabilistic Spiking Neural Networks

Feb 26, 2018

Abstract:Classifiers trained using conventional empirical risk minimization or maximum likelihood methods are known to suffer dramatic performance degradations when tested over examples adversarially selected based on knowledge of the classifier's decision rule. Due to the prominence of Artificial Neural Networks (ANNs) as classifiers, their sensitivity to adversarial examples, as well as robust training schemes, have been recently the subject of intense investigation. In this paper, for the first time, the sensitivity of spiking neural networks (SNNs), or third-generation neural networks, to adversarial examples is studied. The study considers rate and time encoding, as well as rate and first-to-spike decoding. Furthermore, a robust training mechanism is proposed that is demonstrated to enhance the performance of SNNs under white-box attacks.

Training Probabilistic Spiking Neural Networks with First-to-spike Decoding

Feb 22, 2018

Abstract:Third-generation neural networks, or Spiking Neural Networks (SNNs), aim at harnessing the energy efficiency of spike-domain processing by building on computing elements that operate on, and exchange, spikes. In this paper, the problem of training a two-layer SNN is studied for the purpose of classification, under a Generalized Linear Model (GLM) probabilistic neural model that was previously considered within the computational neuroscience literature. Conventional classification rules for SNNs operate offline based on the number of output spikes at each output neuron. In contrast, a novel training method is proposed here for a first-to-spike decoding rule, whereby the SNN can perform an early classification decision once spike firing is detected at an output neuron. Numerical results bring insights into the optimal parameter selection for the GLM neuron and on the accuracy-complexity trade-off performance of conventional and first-to-spike decoding.
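The difference between the two decoding rules named in this abstract can be sketched on binary spike trains. This is an illustration under simple assumptions, not the paper's GLM model or training method: each output neuron is a list of 0/1 values over discrete time steps, and the function names and sample data are hypothetical.

```python
def rate_decode(spikes):
    """Conventional rule: pick the output neuron with the most spikes.

    Requires the whole observation window; ties go to the lowest index.
    """
    counts = [sum(train) for train in spikes]
    return counts.index(max(counts))

def first_to_spike_decode(spikes):
    """Return (neuron, time) of the earliest output spike.

    Scans time steps in order and stops at the first spike, so a decision
    can be made before the window ends. Ties go to the lowest index.
    Returns (None, None) if no neuron fires.
    """
    horizon = len(spikes[0])
    for t in range(horizon):
        for neuron, train in enumerate(spikes):
            if train[t]:
                return neuron, t
    return None, None

# Hypothetical output spike trains for two classes over six time steps.
spikes = [
    [0, 0, 1, 1, 1, 0],  # neuron 0: three spikes, first at t=2
    [0, 1, 0, 0, 0, 0],  # neuron 1: one spike, first at t=1
]
rate_choice = rate_decode(spikes)
early_choice, decision_time = first_to_spike_decode(spikes)
```

On this sample the two rules disagree: rate decoding selects neuron 0 after seeing all six steps, while first-to-spike decoding selects neuron 1 at step 1, which illustrates why a network may need to be trained specifically for the decoding rule it will use.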
