Alina Ene

Online and Streaming Algorithms for Constrained $k$-Submodular Maximization

May 25, 2023

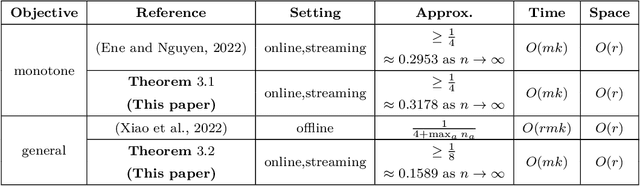

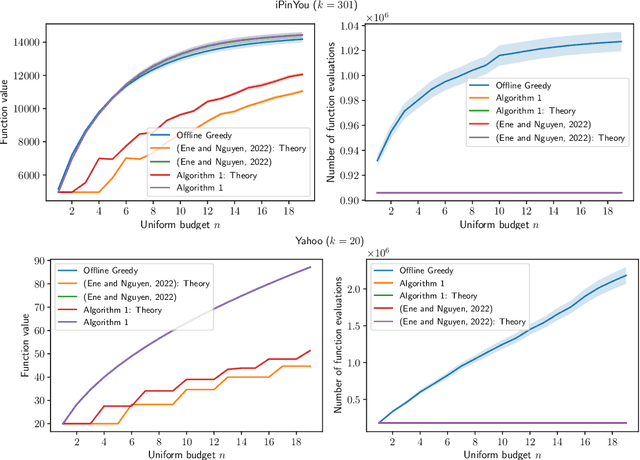

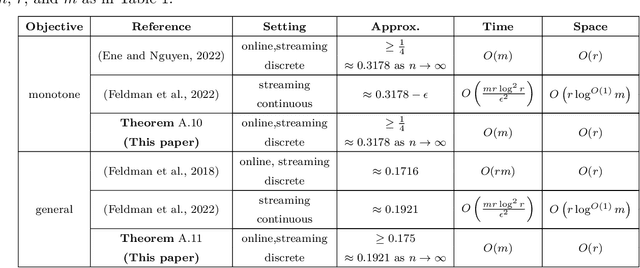

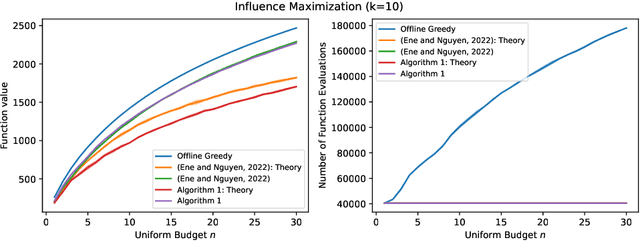

Abstract: Constrained $k$-submodular maximization is a general framework that captures many discrete optimization problems, such as ad allocation, influence maximization, and personalized recommendation. In many of these applications, datasets are large or decisions need to be made in an online manner, which motivates the development of efficient streaming and online algorithms. In this work, we develop single-pass streaming and online algorithms for constrained $k$-submodular maximization with both monotone and general (possibly non-monotone) objectives subject to cardinality and knapsack constraints. Our algorithms achieve provable constant-factor approximation guarantees which improve upon the state of the art in almost all settings. Moreover, they are combinatorial and very efficient, and have optimal space and running time. We experimentally evaluate our algorithms on instances for ad allocation and other applications, where we observe that our algorithms are efficient and scalable, and construct solutions that are comparable in value to offline greedy algorithms.

High Probability Convergence of Stochastic Gradient Methods

Feb 28, 2023

Abstract: In this work, we describe a generic approach to show convergence with high probability for both stochastic convex and non-convex optimization with sub-Gaussian noise. In previous works for convex optimization, either the convergence is only in expectation or the bound depends on the diameter of the domain. Instead, we show high probability convergence with bounds depending on the initial distance to the optimal solution. The algorithms use step sizes analogous to the standard settings and are universal to Lipschitz functions, smooth functions, and their linear combinations. This method can be applied to the non-convex case. We demonstrate an $O((1+\sigma^{2}\log(1/\delta))/T+\sigma/\sqrt{T})$ convergence rate when the number of iterations $T$ is known and an $O((1+\sigma^{2}\log(T/\delta))/\sqrt{T})$ convergence rate when $T$ is unknown for SGD, where $1-\delta$ is the desired success probability. These bounds improve over existing bounds in the literature. Additionally, we demonstrate that our techniques can be used to obtain a high probability bound for AdaGrad-Norm (Ward et al., 2019) that removes the bounded gradients assumption from previous works. Furthermore, our technique for AdaGrad-Norm extends to the standard per-coordinate AdaGrad algorithm (Duchi et al., 2011), providing the first noise-adapted high probability convergence for AdaGrad.
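For readers unfamiliar with AdaGrad-Norm, the following is a minimal sketch of the scalar-step-size update usually attributed to Ward et al. (2019): the step size is a constant divided by the root of the accumulated squared gradient norms. It is an illustration only, not the analysis or code from the paper; the function names, the default values of `eta` and `b0`, and the quadratic test objective are assumptions made here.

```python
import math

def adagrad_norm(grad, x0, eta=1.0, b0=1e-2, steps=100):
    """Sketch of AdaGrad-Norm: one scalar step size shared by all coordinates.

    grad: callable returning a (possibly stochastic) gradient as a list.
    eta, b0: hypothetical defaults chosen for this illustration.
    """
    x = list(x0)
    b_sq = b0 ** 2  # running sum of squared gradient norms
    for _ in range(steps):
        g = grad(x)
        # The latest gradient is included in the accumulator before stepping.
        b_sq += sum(gi * gi for gi in g)
        step = eta / math.sqrt(b_sq)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x

def quadratic_grad(x):
    # Gradient of f(x) = 0.5 * ||x||^2, used only as a toy objective.
    return list(x)

x_final = adagrad_norm(quadratic_grad, [1.0, 1.0, 1.0])
```

No smoothness constant or gradient bound is passed to the routine; the accumulator alone sets the step size.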

Online Ad Allocation with Predictions

Feb 03, 2023

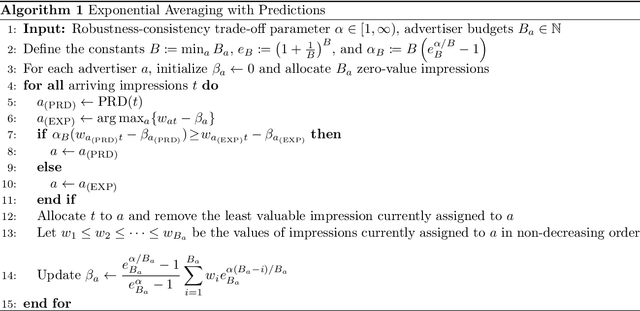

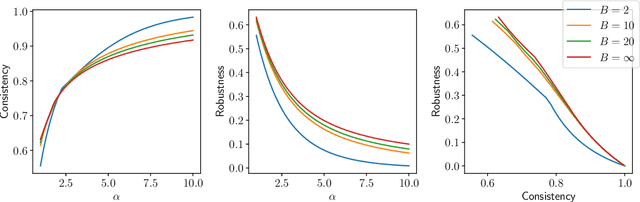

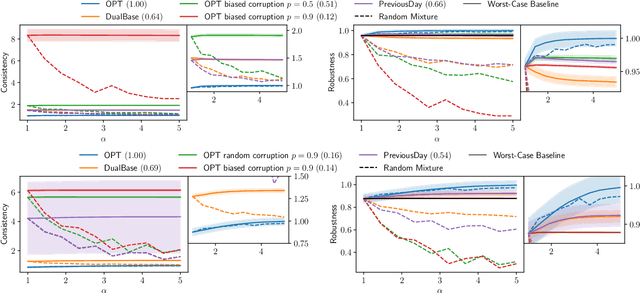

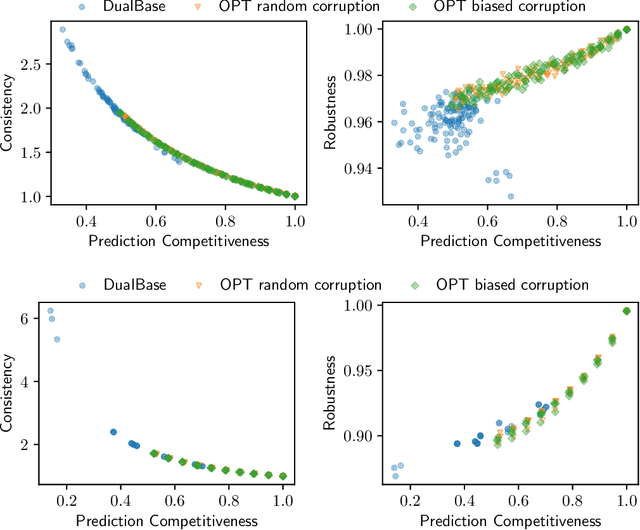

Abstract: Display Ads and the generalized assignment problem are two well-studied online packing problems with important applications in ad allocation and other areas. In both problems, ad impressions arrive online and have to be allocated immediately to budget-constrained advertisers. Worst-case algorithms that achieve the ideal competitive ratio are known, but may be overly conservative given the predictable and usually tame nature of real-world input. Given this discrepancy, we develop an algorithm for both problems that incorporates machine-learned predictions and can thus improve the performance beyond the worst case. Our algorithm is based on the work of Feldman et al. (2009) and is similar in nature to that of Mahdian et al. (2007), who were the first to develop a learning-augmented algorithm for the related, but more structured, Ad Words problem. We use a novel analysis to show that our algorithm is able to capitalize on a good prediction while being robust against poor predictions. We experimentally evaluate our algorithm on synthetic and real-world data on a wide range of predictions. Our algorithm consistently outperforms the worst-case algorithm without predictions.

High Probability Convergence for Accelerated Stochastic Mirror Descent

Oct 03, 2022

Abstract: In this work, we describe a generic approach to show convergence with high probability for stochastic convex optimization. In previous works, either the convergence is only in expectation or the bound depends on the diameter of the domain. Instead, we show high probability convergence with bounds depending on the initial distance to the optimal solution as opposed to the domain diameter. The algorithms use step sizes analogous to the standard settings and are universal to Lipschitz functions, smooth functions, and their linear combinations.

META-STORM: Generalized Fully-Adaptive Variance Reduced SGD for Unbounded Functions

Sep 29, 2022

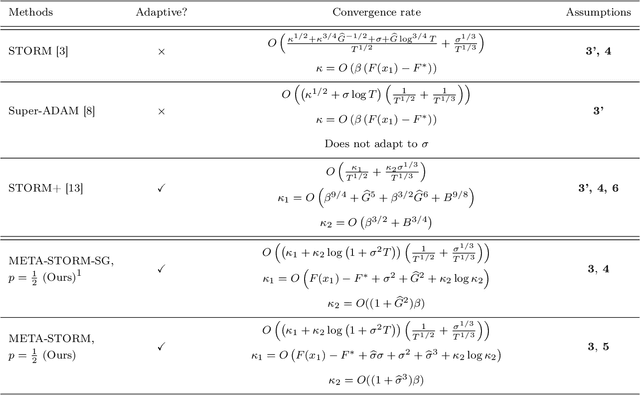

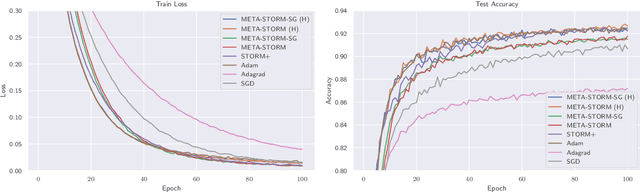

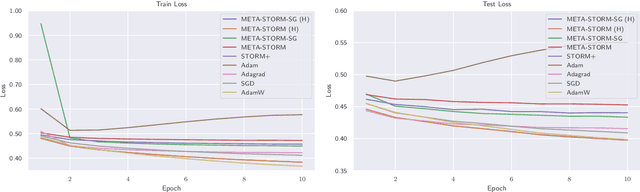

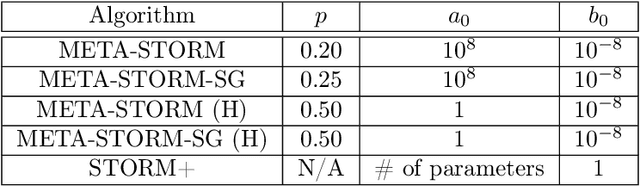

Abstract: We study the application of variance reduction (VR) techniques to general non-convex stochastic optimization problems. In this setting, the recent work STORM [Cutkosky-Orabona '19] overcomes the drawback of having to compute gradients of "mega-batches" that earlier VR methods rely on. STORM uses recursive momentum to achieve the VR effect and was later made fully adaptive in STORM+ [Levy et al., '21], where full adaptivity removes the need to know certain problem-specific parameters, such as the smoothness of the objective and bounds on the variance and norm of the stochastic gradients, in order to set the step size. However, STORM+ crucially relies on the assumption that the function values are bounded, excluding a large class of useful functions. In this work, we propose META-STORM, a generalized framework of STORM+ that removes this bounded function values assumption while still attaining the optimal convergence rate for non-convex optimization. META-STORM not only maintains full adaptivity, removing the need to obtain problem-specific parameters, but also improves the convergence rate's dependency on the problem parameters. Furthermore, META-STORM can utilize a large range of parameter settings that subsumes previous methods, allowing for more flexibility in a wider range of settings. Finally, we demonstrate the effectiveness of META-STORM through experiments across common deep learning tasks. Our algorithm improves upon the previous work STORM+ and is competitive with widely used algorithms after the addition of per-coordinate update and exponential moving average heuristics.
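As background for the "recursive momentum" mentioned above, the STORM estimator of Cutkosky and Orabona is commonly written as follows. The notation here ($d_t$ for the gradient estimate, $a_t \in [0,1]$ for the momentum parameter, $\eta_t$ for the step size, $\xi_t$ for the sample drawn at step $t$) is assumed for illustration and is not taken from the abstract; the adaptive choices of $a_t$ and $\eta_t$ in STORM+ and META-STORM are not reproduced.

$$
d_t = \nabla f(x_t;\xi_t) + (1-a_t)\bigl(d_{t-1} - \nabla f(x_{t-1};\xi_t)\bigr), \qquad x_{t+1} = x_t - \eta_t\, d_t .
$$

The same sample $\xi_t$ is evaluated at both $x_t$ and $x_{t-1}$, so the correction term cancels much of the noise without requiring a large batch. Setting $a_t = 1$ recovers plain SGD.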

On the Convergence of AdaGrad on $\mathbb{R}^{d}$: Beyond Convexity, Non-Asymptotic Rate and Acceleration

Sep 29, 2022

Abstract: Existing analysis of AdaGrad and other adaptive methods for smooth convex optimization is typically for functions with bounded domain diameter. In unconstrained problems, previous works guarantee an asymptotic convergence rate without an explicit constant factor that holds true for the entire function class. Furthermore, in the stochastic setting, only a modified version of AdaGrad, different from the one commonly used in practice, in which the latest gradient is not used to update the stepsize, has been analyzed. Our paper aims at bridging these gaps and developing a deeper understanding of AdaGrad and its variants in the standard setting of smooth convex functions as well as the more general setting of quasar convex functions. First, we demonstrate new techniques to explicitly bound the convergence rate of the vanilla AdaGrad for unconstrained problems in both deterministic and stochastic settings. Second, we propose a variant of AdaGrad for which we can show the convergence of the last iterate, instead of the average iterate. Finally, we give new accelerated adaptive algorithms and their convergence guarantee in the deterministic setting with explicit dependency on the problem parameters, improving upon the asymptotic rate shown in previous works.

Adaptive Accelerated (Extra-)Gradient Methods with Variance Reduction

Jan 28, 2022

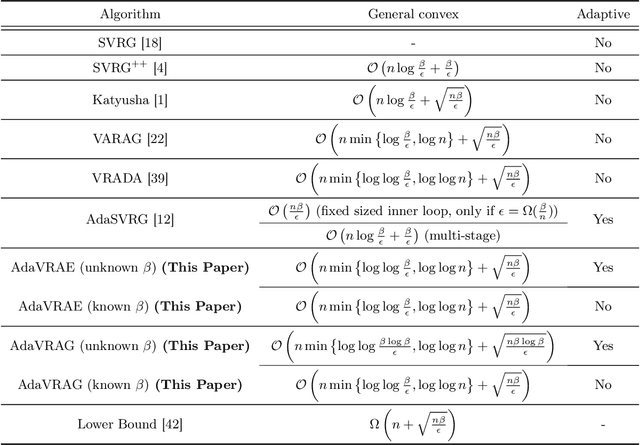

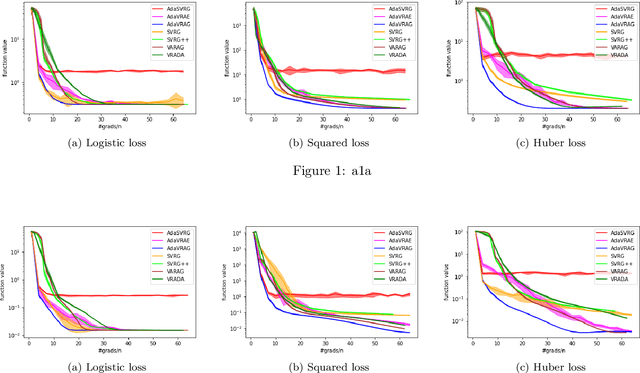

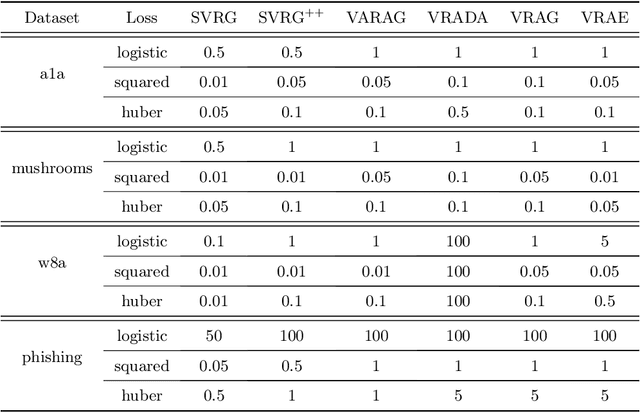

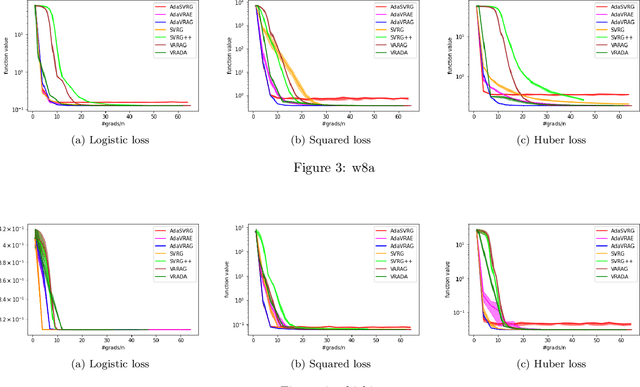

Abstract: In this paper, we study the finite-sum convex optimization problem, focusing on the general convex case. Recently, the study of variance reduced (VR) methods and their accelerated variants has made exciting progress. However, the step size used in the existing VR algorithms typically depends on the smoothness parameter, which is often unknown and requires tuning in practice. To address this problem, we propose two novel adaptive VR algorithms: Adaptive Variance Reduced Accelerated Extra-Gradient (AdaVRAE) and Adaptive Variance Reduced Accelerated Gradient (AdaVRAG). Our algorithms do not require knowledge of the smoothness parameter. AdaVRAE uses $\mathcal{O}\left(n\log\log n+\sqrt{\frac{n\beta}{\epsilon}}\right)$ gradient evaluations and AdaVRAG uses $\mathcal{O}\left(n\log\log n+\sqrt{\frac{n\beta\log\beta}{\epsilon}}\right)$ gradient evaluations to attain an $\mathcal{O}(\epsilon)$-suboptimal solution, where $n$ is the number of functions in the finite sum and $\beta$ is the smoothness parameter. This result matches the best-known convergence rate of non-adaptive VR methods and improves upon the convergence of the state-of-the-art adaptive VR method, AdaSVRG. We demonstrate the superior performance of our algorithms compared with previous methods in experiments on real-world datasets.

Projection-Free Bandit Optimization with Privacy Guarantees

Dec 22, 2020

Abstract: We design differentially private algorithms for the bandit convex optimization problem in the projection-free setting. This setting is important whenever the decision set has a complex geometry and can be accessed efficiently only through a linear optimization oracle, so that Euclidean projections are unavailable (e.g. matroid polytope, submodular base polytope). This is the first differentially private algorithm for projection-free bandit optimization, and in fact our bound of $\widetilde{O}(T^{3/4})$ matches the best known non-private projection-free algorithm (Garber-Kretzu, AISTATS '20) and the best known private algorithm, even for the weaker setting when projections are available (Smith-Thakurta, NeurIPS '13).

Adaptive and Universal Single-gradient Algorithms for Variational Inequalities

Oct 15, 2020

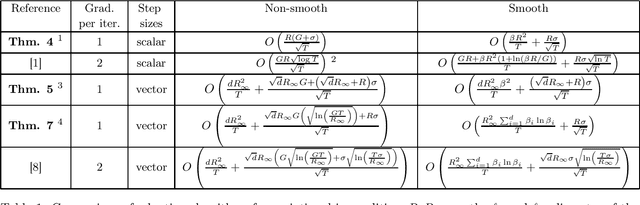

Abstract: Variational inequalities with monotone operators capture many problems of interest, notably convex optimization and convex-concave saddle point problems. Classical methods based on the MirrorProx algorithm require two operator evaluations per iteration, which is the dominant factor in the running time in many settings. Additionally, the algorithms typically require careful settings of the step sizes based on the parameters of the problems such as smoothness. In this work, we develop new algorithms addressing both of these shortcomings simultaneously. Our algorithms use a single operator evaluation per iteration and automatically adapt to problem parameters such as smoothness. We show that our algorithms are universal and simultaneously achieve the optimal convergence rates in the non-smooth, smooth, and stochastic settings.
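To make the "two operator evaluations per iteration" concrete, here is a minimal sketch of the classical extragradient step, which is the Euclidean special case of MirrorProx. It illustrates the baseline that the abstract contrasts against, not the paper's single-evaluation algorithm. The function names, the fixed step size `eta=0.5`, and the bilinear test problem $\min_x \max_y xy$ are assumptions made for this illustration.

```python
import math

def extragradient(operator, z0, eta=0.5, steps=200):
    """Classical extragradient: two operator calls per iteration.

    Returns the final iterate and the total number of operator evaluations.
    eta is a hand-picked constant here; it normally depends on smoothness.
    """
    z = list(z0)
    evals = 0
    for _ in range(steps):
        g = operator(z)            # first evaluation, at the current point
        evals += 1
        z_half = [zi - eta * gi for zi, gi in zip(z, g)]
        g_half = operator(z_half)  # second evaluation, at the look-ahead point
        evals += 1
        z = [zi - eta * gi for zi, gi in zip(z, g_half)]
    return z, evals

def bilinear_operator(z):
    # Monotone operator F(x, y) = (y, -x) of the saddle problem min_x max_y x*y.
    x, y = z
    return [y, -x]

z_final, n_evals = extragradient(bilinear_operator, [1.0, 1.0])
```

On this toy problem the iterates contract toward the saddle point at the origin, and `n_evals` is twice the number of iterations, which is the per-iteration cost the abstract refers to.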

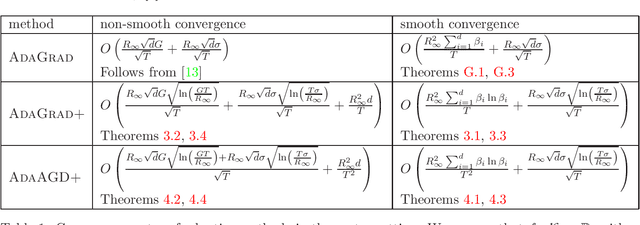

Adaptive Gradient Methods for Constrained Convex Optimization

Aug 16, 2020

Abstract: We provide new adaptive first-order methods for constrained convex optimization. Our main algorithms AdaACSA and AdaAGD+ are accelerated methods, which are universal in the sense that they achieve nearly-optimal convergence rates for both smooth and non-smooth functions, even when they only have access to stochastic gradients. In addition, they do not require any prior knowledge of how the objective function is parametrized, since they automatically adjust their per-coordinate learning rate. These can be seen as truly accelerated AdaGrad methods for constrained optimization. We complement them with a simpler algorithm AdaGrad+ which enjoys the same features, and achieves the standard non-accelerated convergence rate. We also present a set of new results involving adaptive methods for unconstrained optimization and monotone operators.
