Alexandros Paraschos

The Shortcomings of Force-from-Motion in Robot Learning

Jul 03, 2024

Abstract: Robotic manipulation requires accurate motion and physical interaction control. However, current robot learning approaches focus on motion-centric action spaces that do not explicitly give the policy control over the interaction. In this paper, we discuss the repercussions of this choice and argue for more interaction-explicit action spaces in robot learning.

Constrained Probabilistic Movement Primitives for Robot Trajectory Adaptation

Jan 29, 2021

Abstract: Versatile movement representations allow robots to learn new tasks and rapidly adapt them to environmental changes, e.g. the introduction of obstacles, the placement of additional robots in the workspace, modification of the joint range due to faults, or range-of-motion constraints due to tool manipulation. Probabilistic movement primitives (ProMPs) model robot movements as a distribution over trajectories, and they are an important tool due to their analytical tractability and their ability to learn and generalise from a small number of demonstrations. Current approaches solve specific adaptation problems, e.g. obstacle avoidance; however, a generic probabilistic approach to adaptation has not yet been developed. In this paper we propose a generic probabilistic framework for adapting ProMPs. We formulate adaptation as a constrained optimisation problem in which we minimise the Kullback-Leibler divergence between the adapted distribution and the distribution of the original primitive, and we constrain the probability mass associated with undesired trajectories to be low. We derive several types of constraints that can be added depending on the task, such as joint limiting, various types of obstacle avoidance, via-points, and mutual avoidance, under a common framework. We demonstrate our approach on several adaptation problems on simulated planar robot arms and 7-DOF Franka-Emika robots in single and dual robot arm settings.
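
The constrained optimisation described in this abstract can be written compactly. The following is only a sketch under assumed notation: the symbols $p$ (original primitive), $q$ (adapted distribution), $\mathcal{U}_i$ (the $i$-th set of undesired trajectories), $\epsilon_i$ (its allowed probability mass), and $K$ (number of constraints) are illustrative and not taken from the paper, and the direction of the KL divergence is an assumption.

$$
\begin{aligned}
q^{*} = \arg\min_{q} \;& \mathrm{KL}\big(q(\boldsymbol{\tau}) \,\|\, p(\boldsymbol{\tau})\big) \\
\text{s.t.} \;& \Pr_{\boldsymbol{\tau} \sim q}\big[\boldsymbol{\tau} \in \mathcal{U}_i\big] \le \epsilon_i, \quad i = 1, \dots, K
\end{aligned}
$$

Under this reading, each task-specific requirement (a joint limit, an obstacle, a via-point) contributes one set $\mathcal{U}_i$, and the objective keeps the adapted primitive as close as possible to the demonstrated one.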

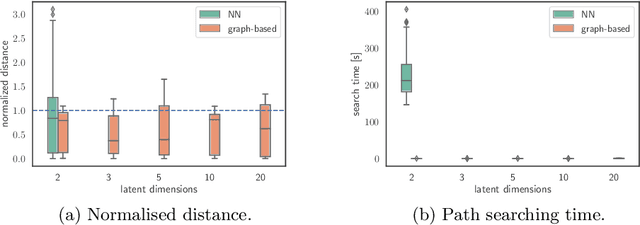

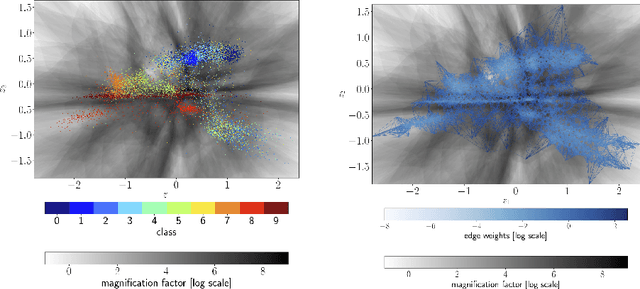

Fast Approximate Geodesics for Deep Generative Models

Dec 19, 2018

Abstract: The length of the geodesic between two data points along the Riemannian manifold induced by a deep generative model yields a principled measure of similarity. Applications have so far been limited to low-dimensional latent spaces, as the method is computationally demanding: it amounts to solving a non-convex optimisation problem. Our approach is to tackle a relaxation: finding shortest paths in a finite graph of samples from the aggregate approximate posterior can be solved exactly, at greatly reduced runtime, and without notable loss in quality. The method is hence applicable to high-dimensional problems in the visual domain. We validate the approach empirically in a series of experiments using variational autoencoders applied to image data, tackling the Chair, Faces and FashionMNIST data sets.
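
The graph relaxation mentioned in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the k-nearest-neighbour graph construction, and the choice of edge weight (Euclidean distance between decoded endpoints) are all assumptions made for illustration, and the `decode` function stands in for a trained generative model.

```python
import heapq
import numpy as np

def knn_graph(z, decode, k=5):
    """Build an undirected k-NN graph over latent samples z (hypothetical helper).

    Neighbours are chosen by Euclidean distance in latent space; each edge is
    weighted by the distance between the decoded endpoints (an assumption).
    """
    x = decode(z)
    d_latent = np.linalg.norm(z[:, None, :] - z[None, :, :], axis=-1)
    np.fill_diagonal(d_latent, np.inf)  # exclude self-matches
    graph = {i: [] for i in range(len(z))}
    for i in range(len(z)):
        for j in np.argsort(d_latent[i])[:k]:
            w = float(np.linalg.norm(x[i] - x[j]))
            graph[i].append((int(j), w))
            graph[int(j)].append((i, w))
    return graph

def shortest_path_length(graph, source, target):
    """Dijkstra's algorithm; returns the length of the shortest path."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == target:
            return d
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry
        for v, w in graph[u]:
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return float("inf")

# Toy usage: a 1-D latent angle decoded onto a half circle in 2-D.
t = np.linspace(0.0, np.pi, 200)[:, None]
toy_decode = lambda z: np.hstack([np.cos(z), np.sin(z)])
graph = knn_graph(t, toy_decode, k=4)
approx_geodesic = shortest_path_length(graph, 0, len(t) - 1)
```

In this toy setting the graph distance between the two ends of the half circle follows the arc rather than the straight chord, which is the behaviour a geodesic-based similarity is meant to capture.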

Active Learning based on Data Uncertainty and Model Sensitivity

Aug 06, 2018

Abstract: Robots can rapidly acquire new skills from demonstrations. However, during generalisation of skills or transitioning across fundamentally different skills, it is unclear whether the robot has the necessary knowledge to perform the task. Failing to detect missing information often leads to abrupt movements or to collisions with the environment. Active learning can quantify the uncertainty of performing the task and, in general, locate regions of missing information. We introduce a novel algorithm for active learning and demonstrate its utility for generating smooth trajectories. Our approach is based on deep generative models and metric learning in latent spaces. It relies on the Jacobian of the likelihood to detect non-smooth transitions in the latent space, i.e., transitions that lead to abrupt changes in the movement of the robot. When non-smooth transitions are detected, our algorithm asks for an additional demonstration from that specific region. The newly acquired knowledge modifies the data manifold and allows for learning a latent representation for generating smooth movements. We demonstrate the efficacy of our approach on generalising elementary skills, transitioning across different skills, and implicitly avoiding collisions with the environment. For our experiments, we use a simulated pendulum where we observe its motion from images and a 7-DoF anthropomorphic arm.
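
The idea of using a Jacobian to find non-smooth latent transitions can be sketched as follows. This is an illustrative approximation only: it uses a finite-difference Jacobian of a hypothetical decoder mean as a stand-in for the Jacobian of the likelihood, and the function names, the threshold, and the toy decoder are assumptions rather than the paper's method.

```python
import numpy as np

def numerical_jacobian(decode, z, eps=1e-5):
    """Central-difference Jacobian of decode at z, shape (output_dim, latent_dim)."""
    z = np.asarray(z, dtype=float)
    cols = []
    for i in range(z.size):
        dz = np.zeros_like(z)
        dz[i] = eps
        cols.append((decode(z + dz) - decode(z - dz)) / (2.0 * eps))
    return np.stack(cols, axis=-1)

def flag_non_smooth(decode, path, threshold):
    """Return indices of latent points whose Jacobian Frobenius norm exceeds threshold.

    Flagged points mark regions where a small latent step causes a large change
    in the output, i.e. candidates for requesting an additional demonstration.
    """
    norms = np.array([np.linalg.norm(numerical_jacobian(decode, z)) for z in path])
    return np.flatnonzero(norms > threshold), norms

# Toy decoder (hypothetical): smooth everywhere except a sharp jump near z = 0.
toy_decode = lambda z: np.array([z[0], np.tanh(20.0 * z[0])])
latent_path = np.linspace(-1.0, 1.0, 21)[:, None]
flagged, norms = flag_non_smooth(toy_decode, latent_path, threshold=5.0)
```

Here `flagged` holds the positions along the latent path where the toy decoder changes abruptly; in an active-learning loop those would be the regions to query for new demonstrations.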
