Alexandros Papadopoulos

Food Image Classification and Segmentation with Attention-based Multiple Instance Learning

Aug 22, 2023

Abstract:The demand for accurate food quantification has increased in recent years, driven by the needs of applications in dietary monitoring. At the same time, computer vision approaches have exhibited great potential in automating tasks within the food domain. Traditionally, the development of machine learning models for these problems relies on training data sets with pixel-level class annotations. However, this approach introduces challenges arising from data collection and ground truth generation that quickly become costly and error-prone since they must be performed in multiple settings and for thousands of classes. To overcome these challenges, the paper presents a weakly supervised methodology for training food image classification and semantic segmentation models without relying on pixel-level annotations. The proposed methodology is based on a multiple instance learning approach in combination with an attention-based mechanism. At test time, the models are used for classification and, concurrently, the attention mechanism generates semantic heat maps which are used for food class segmentation. In the paper, we conduct experiments on two meta-classes within the FoodSeg103 data set to verify the feasibility of the proposed approach and we explore the functioning properties of the attention mechanism.
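As a rough illustration of how per-patch attention weights could be turned into a heat map and a coarse segmentation mask, here is a minimal sketch. It is not the paper's implementation: the row-major patch grid, the min-max normalization, the 0.5 threshold, and the function names `attention_to_heatmap` and `heatmap_to_mask` are all assumptions made for this example.

```python
def attention_to_heatmap(attn, rows, cols):
    """Reshape a flat list of per-patch attention weights into a rows x cols grid.

    Assumes (hypothetically) that patches were extracted in row-major order.
    Values are min-max normalized to [0, 1] so that maps are comparable.
    """
    if len(attn) != rows * cols:
        raise ValueError("expected rows * cols attention weights")
    lo, hi = min(attn), max(attn)
    span = hi - lo
    # A constant attention vector carries no localization signal: map it to zeros.
    norm = [(a - lo) / span if span > 0 else 0.0 for a in attn]
    return [norm[r * cols:(r + 1) * cols] for r in range(rows)]


def heatmap_to_mask(heatmap, threshold=0.5):
    """Binarize the heat map; the threshold is an illustrative choice."""
    return [[1 if v >= threshold else 0 for v in row] for row in heatmap]


# Toy example: six patches arranged as a 2 x 3 grid.
attn = [0.05, 0.05, 0.10, 0.40, 0.30, 0.10]
heatmap = attention_to_heatmap(attn, rows=2, cols=3)
mask = heatmap_to_mask(heatmap)
print(mask)  # [[0, 0, 0], [1, 1, 0]]
```

In practice the patch-level map would typically be upsampled to the image resolution before being compared with a pixel-level ground truth; that step is omitted here.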

Leveraging Unlabelled Data in Multiple-Instance Learning Problems for Improved Detection of Parkinsonian Tremor in Free-Living Conditions

Apr 29, 2023

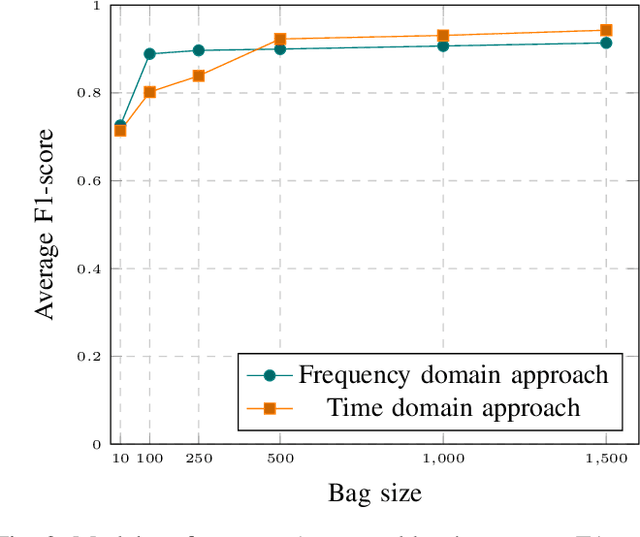

Abstract:Data-driven approaches for remote detection of Parkinson's Disease and its motor symptoms have proliferated in recent years, owing to the potential clinical benefits of early diagnosis. The holy grail of such approaches is the free-living scenario, in which data are collected continuously and unobtrusively during everyday life. However, obtaining fine-grained ground-truth while remaining unobtrusive is a contradiction, and therefore the problem is usually addressed via multiple-instance learning. Yet for large scale studies, obtaining even the necessary coarse ground-truth is not trivial, as a complete neurological evaluation is required. In contrast, large scale collection of data without any ground-truth is much easier. Nevertheless, utilizing unlabelled data in a multiple-instance setting is not straightforward, as the topic has received very little research attention. Here we try to fill this gap by introducing a new method for combining semi-supervised learning with multiple-instance learning. Our approach builds on the Virtual Adversarial Training principle, a state-of-the-art approach for regular semi-supervised learning, which we adapt and modify appropriately for the multiple-instance setting. We first establish the validity of the proposed approach through proof-of-concept experiments on synthetic problems generated from two well-known benchmark datasets. We then move on to the actual task of detecting PD tremor from hand acceleration signals collected in-the-wild, but in the presence of additional completely unlabelled data. We show that by leveraging the unlabelled data of 454 subjects we can achieve large performance gains (up to 9% increase in F1-score) in per-subject tremor detection for a cohort of 45 subjects with known tremor ground-truth.

An Open Platform for Simulating the Physical Layer of 6G Communication Systems with Multiple Intelligent Surfaces

Nov 03, 2022

Abstract:Reconfigurable Intelligent Surfaces (RIS) constitute a promising technology that could fulfill the extreme performance and capacity needs of the upcoming 6G wireless networks, by offering software-defined control over wireless propagation phenomena. Despite the existence of many theoretical models describing various aspects of RIS from the signal processing perspective (e.g., channel fading models), there is no open platform to simulate and study their actual physical-layer behavior, especially in the multi-RIS case. In this paper, we develop an open simulation platform, aimed at modeling the physical-layer electromagnetic coupling and propagation between RIS pairs. We present the platform by initially designing a basic unit cell, and then proceeding to progressively model and simulate multiple and larger RISs. The platform can be used for producing verifiable stochastic models for wireless communication in multi-RIS deployments, such as vehicle-to-everything (V2X) communications in autonomous vehicles and cybersecurity schemes, while its code is freely available to the public.

An Interpretable Multiple-Instance Approach for the Detection of referable Diabetic Retinopathy from Fundus Images

Mar 02, 2021

Abstract:Diabetic Retinopathy (DR) is a leading cause of vision loss globally. Yet despite its prevalence, the majority of affected people lack access to the specialized ophthalmologists and equipment required for assessing their condition. This can lead to delays in the start of treatment, thereby lowering their chances for a successful outcome. Machine learning systems that automatically detect the disease in eye fundus images have been proposed as a means of facilitating access to DR severity estimates for patients in remote regions or even for complementing the human expert's diagnosis. In this paper, we propose a machine learning system for the detection of referable DR in fundus images that is based on the paradigm of multiple-instance learning. By extracting local information from image patches and combining it efficiently through an attention mechanism, our system is able to achieve high classification accuracy. Moreover, it can highlight potential image regions where DR manifests through its characteristic lesions. We evaluate our approach on publicly available retinal image datasets, in which it exhibits near state-of-the-art performance, while also producing interpretable visualizations of its predictions.

Detecting Parkinsonian Tremor from IMU Data Collected In-The-Wild using Deep Multiple-Instance Learning

May 06, 2020

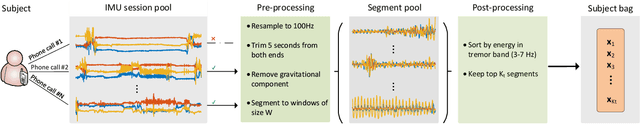

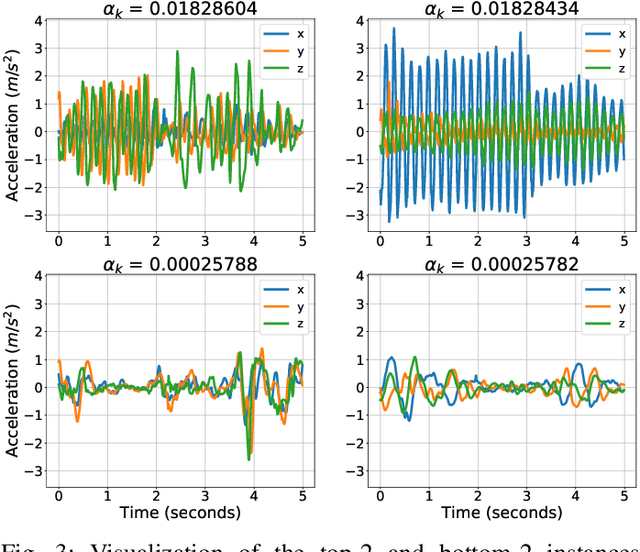

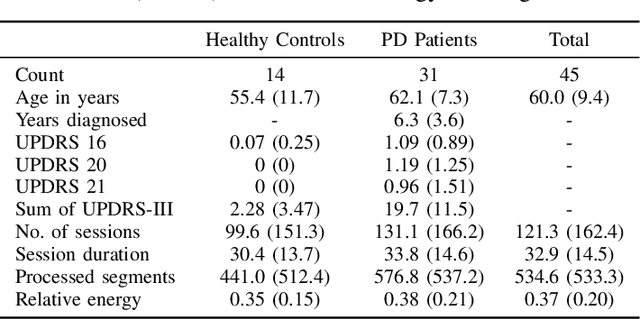

Abstract:Parkinson's Disease (PD) is a slowly evolving neurological disease that affects about 1% of the population above 60 years old, causing symptoms that are subtle at first, but whose intensity increases as the disease progresses. Automated detection of these symptoms could offer clues as to the early onset of the disease, thus improving the expected clinical outcomes of the patients via appropriately targeted interventions. This potential has led many researchers to develop methods that use widely available sensors to measure and quantify the presence of PD symptoms such as tremor, rigidity and bradykinesia. However, most of these approaches operate under controlled settings, such as in the lab or at home, thus limiting their applicability under free-living conditions. In this work, we present a method for automatically identifying tremorous episodes related to PD, based on IMU signals captured via a smartphone device. We propose a Multiple-Instance Learning approach, wherein a subject is represented as an unordered bag of accelerometer signal segments and a single, expert-provided, tremor annotation. Our method combines deep feature learning with a learnable pooling stage that is able to identify key instances within the subject bag, while still being trainable end-to-end. We validate our algorithm on a newly introduced dataset of 45 subjects, containing accelerometer signals collected entirely in-the-wild. The good classification performance obtained in the conducted experiments suggests that the proposed method can efficiently navigate the noisy environment of in-the-wild recordings.
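To make the idea of a learnable pooling stage over an unordered bag more concrete, the sketch below shows one common form of attention-based multiple-instance pooling in plain Python. It is an illustration under stated assumptions, not the authors' code: the tanh scoring function, the parameter names `V` and `w`, the dimensions, and the random values standing in for learned parameters and segment features are all hypothetical.

```python
import math
import random


def attention_mil_pool(instances, V, w):
    """Pool a bag of instance feature vectors into one bag embedding.

    instances: list of K feature vectors, each of length D
    V: L x D projection matrix, w: length-L scoring vector
       (both would be learned in practice; here they are plain lists)
    Returns (bag_embedding, attention_weights).
    """
    scores = []
    for h in instances:
        # Hidden projection tanh(V h), then scalar score w . tanh(V h).
        hidden = [math.tanh(sum(v * x for v, x in zip(row, h))) for row in V]
        scores.append(sum(wi * ui for wi, ui in zip(w, hidden)))
    # Numerically stable softmax over the instances in the bag.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    attn = [e / total for e in exps]
    # The bag embedding is the attention-weighted sum of the instances.
    dim = len(instances[0])
    bag = [sum(a * h[d] for a, h in zip(attn, instances)) for d in range(dim)]
    return bag, attn


# Toy bag: K = 5 segments with D = 4 features each (random stand-ins).
rng = random.Random(0)
D, L, K = 4, 3, 5
segments = [[rng.uniform(-1, 1) for _ in range(D)] for _ in range(K)]
V = [[rng.uniform(-1, 1) for _ in range(D)] for _ in range(L)]
w = [rng.uniform(-1, 1) for _ in range(L)]
embedding, attn = attention_mil_pool(segments, V, w)
print(attn)
```

Because the weights come from a softmax and the embedding is a weighted sum, the result does not depend on the order of the segments, which matches the unordered-bag formulation. Instances with large weights can be read as candidate key instances.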
