Konstantinos Kyritsis

Estimation of Food Intake Quantity Using Inertial Signals from Smartwatches

Feb 10, 2025

Abstract:Accurate monitoring of eating behavior is crucial for managing obesity and eating disorders such as bulimia nervosa. At the same time, existing methods rely on multiple and/or specialized sensors, greatly harming adherence and, ultimately, the quality and continuity of data. This paper introduces a novel approach for estimating the weight of a bite from a commercial smartwatch. Our publicly available dataset contains smartwatch inertial data from ten participants, with manually annotated start and end times of each bite along with their corresponding weights from a smart scale, under semi-controlled conditions. The proposed method combines extracted behavioral features, such as the time required to load the utensil with food, with statistical features of inertial signals; together these serve as input to a Support Vector Regression model that estimates bite weights. Under a leave-one-subject-out cross-validation scheme, our approach achieves a mean absolute error (MAE) of 3.99 grams per bite. To contextualize this performance, we introduce an improvement metric that measures the relative MAE difference compared to a baseline model. Our method demonstrates a 17.41% improvement, while the adapted state-of-the-art method scores -28.89% against that same baseline. The results presented in this work establish the feasibility of extracting meaningful bite weight estimates from commercial smartwatch inertial sensors alone, laying the groundwork for future accessible, non-invasive dietary monitoring systems.
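The improvement metric mentioned in this abstract can be illustrated with a minimal sketch. The abstract only describes it as the relative MAE difference against a baseline, so the exact formula below (baseline MAE minus model MAE, divided by baseline MAE, as a percentage) is an assumption, as are the function names and the toy numbers.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between true and predicted bite weights."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def improvement(mae_model, mae_baseline):
    """Assumed form of the metric: relative MAE reduction vs. a baseline, in percent.

    Positive values mean the model beats the baseline; negative values mean
    it performs worse than the baseline.
    """
    return 100.0 * (mae_baseline - mae_model) / mae_baseline


# Toy bite weights in grams (illustrative only, not data from the paper).
true_weights = [4.0, 6.0, 8.0, 10.0]
baseline_pred = [7.0, 7.0, 7.0, 7.0]   # hypothetical baseline: always predict the mean
model_pred = [5.0, 6.0, 9.0, 9.0]      # hypothetical model output

mae_baseline = mean_absolute_error(true_weights, baseline_pred)
mae_model = mean_absolute_error(true_weights, model_pred)
score = improvement(mae_model, mae_baseline)
```

Under this reading, a negative score such as the -28.89% reported for the adapted method means its MAE is larger than the baseline's.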

Intake Monitoring in Free-Living Conditions: Overview and Lessons we Have Learned

Jun 04, 2022

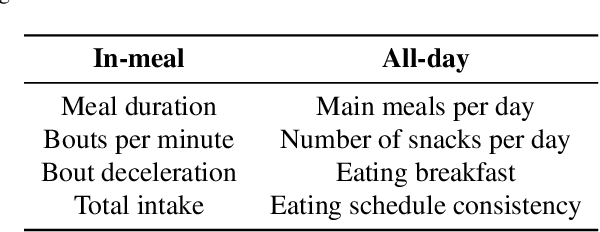

Abstract:The progress in artificial intelligence and machine learning algorithms over the past decade has enabled the development of new methods for the objective measurement of eating, including both the measurement of eating episodes as well as the measurement of in-meal eating behavior. These allow the study of eating behavior outside the laboratory in free-living conditions, without the need for video recordings and laborious manual annotations. In this paper, we present a high-level overview of our recent work on intake monitoring using a smartwatch, as well as methods using an in-ear microphone. We also present evaluation results of these methods in challenging, real-world datasets. Furthermore, we discuss use-cases of such intake monitoring tools for advancing research in eating behavior, for improving dietary monitoring, as well as for developing evidence-based health policies. Our goal is to inform researchers and users of intake monitoring methods regarding (i) the development of new methods based on commercially available devices, (ii) what to expect in terms of effectiveness, and (iii) how these methods can be used in research as well as in practical applications.

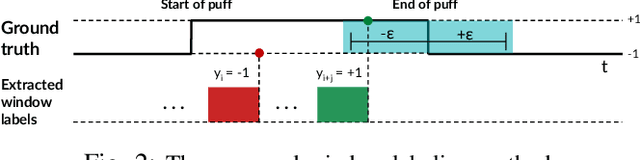

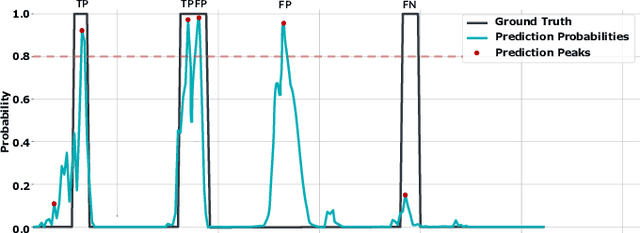

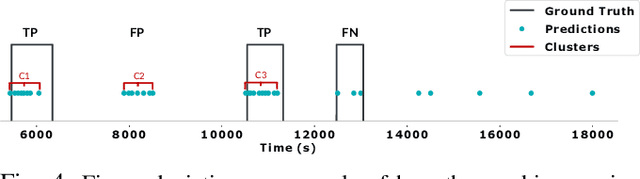

A Bottom-up method Towards the Automatic and Objective Monitoring of Smoking Behavior In-the-wild using Wrist-mounted Inertial Sensors

Sep 08, 2021

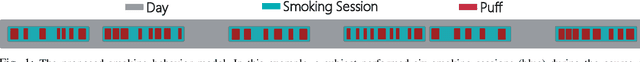

Abstract:The consumption of tobacco has reached global epidemic proportions and is characterized as the leading cause of death and illness. Among the different ways of consuming tobacco (e.g., smokeless, cigars), smoking cigarettes is the most widespread. In this paper, we present a two-step, bottom-up algorithm towards the automatic and objective monitoring of cigarette-based smoking behavior during the day, using the 3D acceleration and orientation velocity measurements from a commercial smartwatch. In the first step, our algorithm detects individual smoking gestures (i.e., puffs) using an artificial neural network with both convolutional and recurrent layers. In the second step, we use the density of the detected puffs to temporally localize the smoking sessions that occur throughout the day. In the experimental section, we provide an extended evaluation of each step of the proposed algorithm, using our publicly available, realistic Smoking Event Detection (SED) and Free-living Smoking Event Detection (SED-FL) datasets, recorded under semi-controlled and free-living conditions, respectively. In particular, leave-one-subject-out (LOSO) experiments reveal an F1-score of 0.863 for the detection of puffs and an F1-score/Jaccard index equal to 0.878/0.604 for the temporal localization of smoking sessions during the day. Finally, to gain further insight, we also compare the puff detection part of our algorithm with a similar approach found in the recent literature.
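The second step, going from detected puffs to smoking sessions, can be sketched as follows. The abstract does not describe the actual density-based procedure, so this example swaps in a simple gap-based grouping as a stand-in: puffs that follow each other closely form a session, and sparse isolated detections are discarded. The function name and the `max_gap` and `min_puffs` parameters are hypothetical.

```python
def localize_sessions(puff_times, max_gap=60.0, min_puffs=3):
    """Group puff timestamps (seconds) into sessions.

    Simplified stand-in for density-based localization: consecutive puffs
    at most `max_gap` seconds apart belong to the same group, and only
    groups with at least `min_puffs` puffs are kept as sessions.
    Returns a list of (start, end) tuples.
    """
    sessions = []
    group = []
    for t in sorted(puff_times):
        # A large gap closes the current group before starting a new one.
        if group and t - group[-1] > max_gap:
            if len(group) >= min_puffs:
                sessions.append((group[0], group[-1]))
            group = []
        group.append(t)
    # Flush the last open group.
    if len(group) >= min_puffs:
        sessions.append((group[0], group[-1]))
    return sessions


# Illustrative timestamps: two dense bursts and one isolated false detection at 500 s.
puffs = [10, 40, 70, 95, 500, 900, 930, 955, 990]
sessions = localize_sessions(puffs)
```

Requiring a minimum number of puffs per group is what makes the second step tolerant to occasional false positives from the puff detector.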

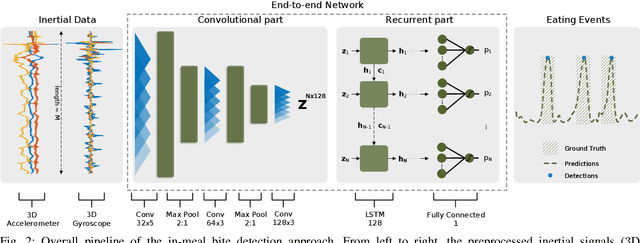

A Data Driven End-to-end Approach for In-the-wild Monitoring of Eating Behavior Using Smartwatches

Oct 12, 2020

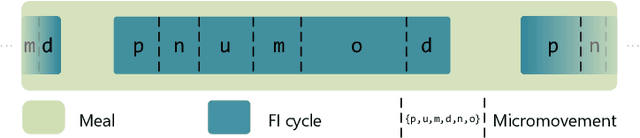

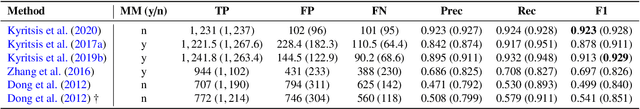

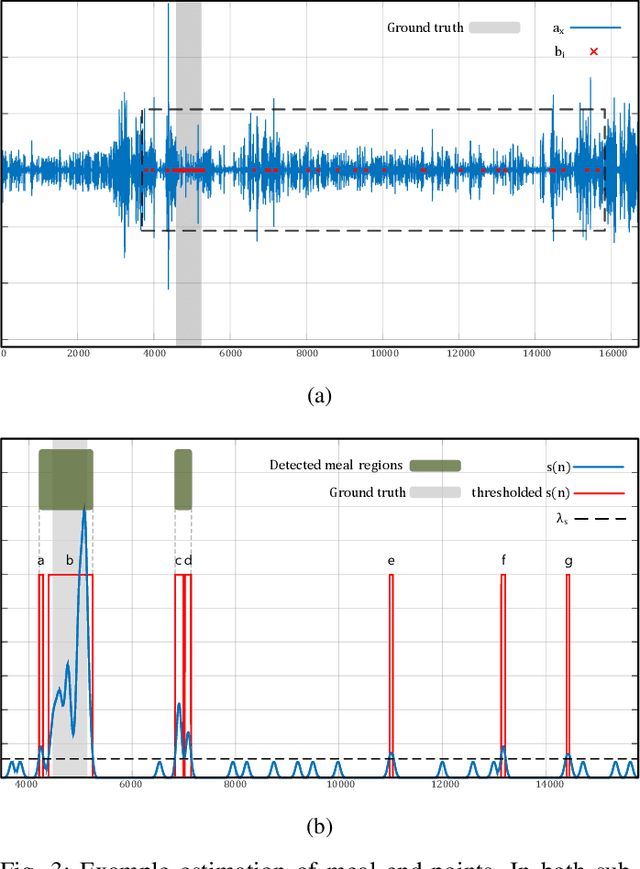

Abstract:The increased worldwide prevalence of obesity has sparked the interest of the scientific community in tools that objectively and automatically monitor eating behavior. Although the study of obesity is in the spotlight, such tools can also be used to study eating disorders (e.g. anorexia nervosa) or to provide a personalized monitoring platform for patients or athletes. This paper presents a complete framework towards the automated i) modeling of in-meal eating behavior and ii) temporal localization of meals, from raw inertial data collected in-the-wild using commercially available smartwatches. Initially, we present an end-to-end Neural Network which detects food intake events (i.e. bites). The proposed network uses both convolutional and recurrent layers that are trained simultaneously. Subsequently, we show how the distribution of the detected bites throughout the day can be used to estimate the start and end points of meals, using signal processing algorithms. We perform extensive evaluation on each part of the framework individually. Leave-one-subject-out (LOSO) evaluation shows that our bite detection approach outperforms four state-of-the-art algorithms in detecting bites during the course of a meal (0.923 F1 score). Furthermore, LOSO and held-out set experiments on the estimation of meal start/end points reveal that the proposed approach outperforms a relevant approach found in the literature (Jaccard Index of 0.820 and 0.821 for the LOSO and held-out experiments, respectively). Experiments are performed using our publicly available FIC and the newly introduced FreeFIC datasets.
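The Jaccard Index reported for meal start/end estimation measures temporal overlap between a predicted and a ground-truth interval. A minimal sketch for a single pair of intervals is shown below; how the paper aggregates the index over a full day of recordings is not stated in the abstract, so the function name and the single-interval setting are assumptions.

```python
def interval_jaccard(pred, truth):
    """Jaccard index of two time intervals given as (start, end) in seconds.

    Computed as overlap duration divided by the duration of the union.
    Returns 0.0 for disjoint intervals.
    """
    (p_start, p_end), (t_start, t_end) = pred, truth
    intersection = max(0.0, min(p_end, t_end) - max(p_start, t_start))
    union = (p_end - p_start) + (t_end - t_start) - intersection
    return intersection / union if union > 0 else 0.0


# Illustrative meal: predicted 100-200 s, annotated 120-220 s.
# Overlap is 80 s and the union spans 120 s.
jaccard = interval_jaccard((100.0, 200.0), (120.0, 220.0))
```

Because the index penalizes both late starts and early ends, a value near 0.82 indicates that predicted meal boundaries track the annotated ones closely but not exactly.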

Detecting Parkinsonian Tremor from IMU Data Collected In-The-Wild using Deep Multiple-Instance Learning

May 06, 2020

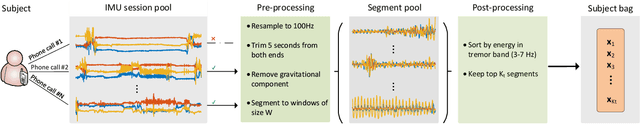

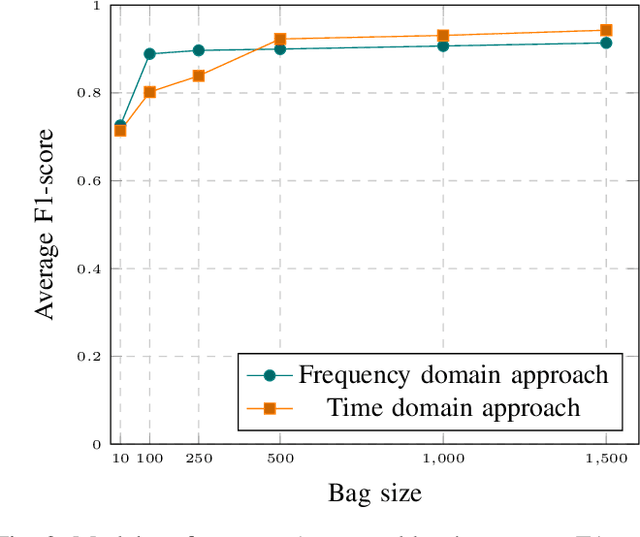

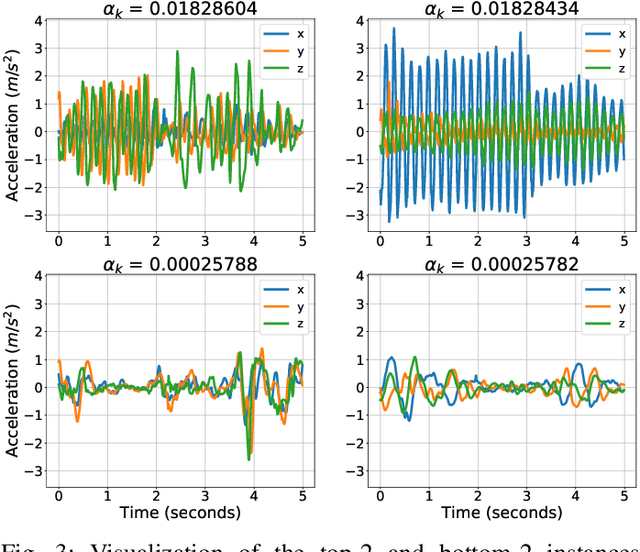

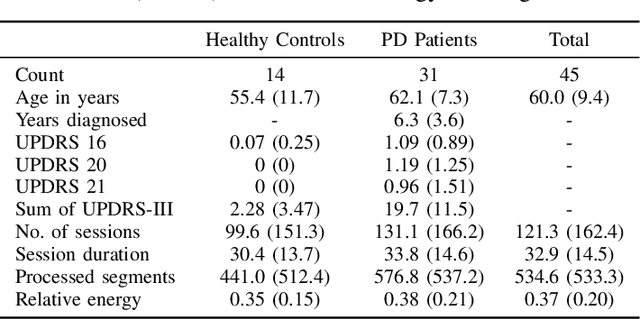

Abstract:Parkinson's Disease (PD) is a slowly evolving neurological disease that affects about 1% of the population above 60 years old, causing symptoms that are subtle at first, but whose intensity increases as the disease progresses. Automated detection of these symptoms could offer clues as to the early onset of the disease, thus improving the expected clinical outcomes of the patients via appropriately targeted interventions. This potential has led many researchers to develop methods that use widely available sensors to measure and quantify the presence of PD symptoms such as tremor, rigidity and bradykinesia. However, most of these approaches operate under controlled settings, such as in the lab or at home, thus limiting their applicability under free-living conditions. In this work, we present a method for automatically identifying tremorous episodes related to PD, based on IMU signals captured via a smartphone device. We propose a Multiple-Instance Learning approach, wherein a subject is represented as an unordered bag of accelerometer signal segments and a single, expert-provided, tremor annotation. Our method combines deep feature learning with a learnable pooling stage that is able to identify key instances within the subject bag, while still being trainable end-to-end. We validate our algorithm on a newly introduced dataset of 45 subjects, containing accelerometer signals collected entirely in-the-wild. The good classification performance obtained in the conducted experiments suggests that the proposed method can efficiently navigate the noisy environment of in-the-wild recordings.
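The idea of a learnable pooling stage that highlights key instances in a bag can be sketched with attention-style weighted pooling, a common choice in Multiple-Instance Learning. The abstract does not specify the pooling used, so this form is an assumption, and `attention_pool` and `attn_w` are hypothetical names. In a trained model, `attn_w` would be learned jointly with the feature extractor.

```python
import math


def attention_pool(instances, attn_w):
    """Pool a bag of instance embeddings into one bag embedding.

    Each instance gets a score (dot product with `attn_w`), scores are
    turned into weights with a softmax, and the bag embedding is the
    weighted sum of instances. Returns (bag_embedding, weights).
    """
    scores = [sum(w * x for w, x in zip(attn_w, h)) for h in instances]
    # Subtract the max score for numerical stability of the softmax.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(instances[0])
    bag = [sum(a * h[d] for a, h in zip(weights, instances)) for d in range(dim)]
    return bag, weights


# Two toy instance embeddings standing in for accelerometer segments.
bag_uniform, w_uniform = attention_pool([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
bag_focused, w_focused = attention_pool([[1.0, 0.0], [0.0, 1.0]], [10.0, 0.0])
```

The weights double as an interpretability signal: instances with large weights are the segments the model treats as most indicative of the bag-level label.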
