Alessandro Savino

EHWGesture -- A dataset for multimodal understanding of clinical gestures

Sep 09, 2025

Abstract:Hand gesture understanding is essential for several applications in human-computer interaction, including automatic clinical assessment of hand dexterity. While deep learning has advanced static gesture recognition, dynamic gesture understanding remains challenging due to complex spatiotemporal variations. Moreover, existing datasets often lack multimodal and multi-view diversity, precise ground-truth tracking, and an action quality component embedded within gestures. This paper introduces EHWGesture, a multimodal video dataset for gesture understanding featuring five clinically relevant gestures. It includes over 1,100 recordings (6 hours), captured from 25 healthy subjects using two high-resolution RGB-Depth cameras and an event camera. A motion capture system provides precise ground-truth hand landmark tracking, and all devices are spatially calibrated and synchronized to ensure cross-modal alignment. Moreover, to embed an action quality task within gesture understanding, collected recordings are organized in classes of execution speed that mirror clinical evaluations of hand dexterity. Baseline experiments highlight the dataset's potential for gesture classification, gesture trigger detection, and action quality assessment. Thus, EHWGesture can serve as a comprehensive benchmark for advancing multimodal clinical gesture understanding.

Energy-Efficient Digital Design: A Comparative Study of Event-Driven and Clock-Driven Spiking Neurons

Jun 16, 2025

Abstract:This paper presents a comprehensive evaluation of Spiking Neural Network (SNN) neuron models for hardware acceleration by comparing event-driven and clock-driven implementations. We begin our investigation in software, rapidly prototyping and testing various SNN models based on different variants of the Leaky Integrate-and-Fire (LIF) neuron across multiple datasets. This phase enables controlled performance assessment and informs design refinement. Our subsequent hardware phase, implemented on FPGA, validates the simulation findings and offers practical insights into design trade-offs. In particular, we examine how variations in input stimuli influence key performance metrics such as latency, power consumption, energy efficiency, and resource utilization. These results yield valuable guidelines for constructing energy-efficient, real-time neuromorphic systems. Overall, our work bridges software simulation and hardware realization, advancing the development of next-generation SNN accelerators.
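
To make the event-driven versus clock-driven distinction concrete, the following is a minimal software sketch and not the paper's implementation. It assumes a simple discrete-time LIF neuron with decay factor `beta`, a hard reset to zero, and a positive threshold; the function names and parameter values are illustrative. The clock-driven version updates the membrane potential at every time step, while the event-driven version updates it only when an input arrives and applies the accumulated decay in one step.

```python
def lif_clock_driven(inputs, beta=0.9, threshold=1.0):
    """Update the membrane at every time step.

    inputs: list with one input value per time step (0.0 means no input).
    Returns (spike_times, number_of_membrane_updates).
    """
    v = 0.0
    spikes = []
    updates = 0
    for t, x in enumerate(inputs):
        v = beta * v + x          # leak, then integrate
        updates += 1
        if v >= threshold:        # fire and reset
            spikes.append(t)
            v = 0.0
    return spikes, updates


def lif_event_driven(events, beta=0.9, threshold=1.0):
    """Update the membrane only when an input event arrives.

    events: list of (time_step, value) pairs sorted by time_step.
    Returns (spike_times, number_of_membrane_updates).
    """
    v = 0.0
    last_t = 0
    spikes = []
    updates = 0
    for t, x in events:
        # Apply the leak for all skipped steps at once, then integrate.
        v = v * beta ** (t - last_t) + x
        last_t = t
        updates += 1
        if v >= threshold:
            spikes.append(t)
            v = 0.0
    return spikes, updates


# Illustrative input: mostly silent, with a few input events.
inputs = [0.6, 0.0, 0.0, 0.6, 0.6, 0.0, 0.0, 0.0, 0.3, 0.9]
events = [(t, x) for t, x in enumerate(inputs) if x != 0.0]

print(lif_clock_driven(inputs))
print(lif_event_driven(events))
```

Under these assumptions both versions fire at the same time steps, because the potential can only decay between inputs, but the event-driven version performs one update per input event instead of one per time step. This is the kind of dependence on input activity that the abstract's comparison is concerned with.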

CARACAS: vehiCular ArchitectuRe for detAiled Can Attacks Simulation

Jun 11, 2024

Abstract:Modern vehicles are increasingly vulnerable to attacks that exploit network infrastructures, particularly the Controller Area Network (CAN). Countering such threats with contemporary tools such as Intrusion Detection Systems (IDSs) based on data analysis and classification requires large datasets of CAN messages. This paper investigates the feasibility of generating synthetic datasets by combining the modeling capabilities of simulation frameworks such as Simulink with a robust representation of attack models. It presents CARACAS, a vehicular model that includes component control via CAN messages and attack injection capabilities. CARACAS showcases the efficacy of this methodology, including a Battery Electric Vehicle (BEV) model, and focuses on attacks targeting torque control in two distinct scenarios.

R-CONV: An Analytical Approach for Efficient Data Reconstruction via Convolutional Gradients

Jun 06, 2024

Abstract:In the effort to learn from extensive collections of distributed data, federated learning has emerged as a promising approach for preserving privacy by using a gradient-sharing mechanism instead of exchanging raw data. However, recent studies show that private training data can be leaked through many gradient attacks. While previous analytical-based attacks have successfully reconstructed input data from fully connected layers, their effectiveness diminishes when applied to convolutional layers. This paper introduces an advanced data leakage method to efficiently exploit convolutional layers' gradients. We present a surprising finding: even with non-fully invertible activation functions, such as ReLU, we can analytically reconstruct training samples from the gradients. To the best of our knowledge, this is the first analytical approach that successfully reconstructs convolutional layer inputs directly from the gradients, bypassing the need to reconstruct layers' outputs. Prior research has mainly concentrated on the weight constraints of convolution layers, overlooking the significance of gradient constraints. Our findings demonstrate that existing analytical methods used to estimate the risk of gradient attacks lack accuracy. In some layers, attacks can be launched with less than 5% of the reported constraints.
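
As background for the fully connected case mentioned above, here is a minimal sketch of the well-known analytical reconstruction for a single fully connected layer with a bias and one training sample. It is not the R-CONV method for convolutional layers. For `y = W x + b`, the gradients satisfy `dL/dW[i][j] = dL/dy[i] * x[j]` and `dL/db[i] = dL/dy[i]`, so any row of the weight gradient divided by the matching bias gradient yields `x`. The squared-error loss, the function names, and the numeric values are illustrative assumptions.

```python
def fc_gradients(W, b, x, target):
    """Gradients of 0.5 * ||W x + b - target||^2 with respect to W and b."""
    y = [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    dy = [yi - ti for yi, ti in zip(y, target)]   # dL/dy
    dW = [[dyi * xj for xj in x] for dyi in dy]   # outer product dL/dy * x^T
    db = list(dy)                                 # dL/db equals dL/dy
    return dW, db


def reconstruct_input(dW, db, eps=1e-12):
    """Recover x from shared gradients using any row with a non-zero bias gradient."""
    for row, g in zip(dW, db):
        if abs(g) > eps:
            return [v / g for v in row]
    raise ValueError("all bias gradients are zero; input is not recoverable")


# Illustrative values only.
W = [[0.2, -0.1, 0.4], [0.7, 0.3, -0.5]]
b = [0.1, -0.2]
x_private = [0.5, -1.0, 2.0]
target = [1.0, 0.0]

dW, db = fc_gradients(W, b, x_private, target)
x_recovered = reconstruct_input(dW, db)
print(x_recovered)
```

The recovery needs only the shared gradients, not the weights or the label, which is why gradient sharing alone does not guarantee privacy for such layers.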

SpikeExplorer: hardware-oriented Design Space Exploration for Spiking Neural Networks on FPGA

Apr 04, 2024

Abstract:One of today's main concerns is to bring Artificial Intelligence power to embedded systems for edge applications. The hardware resources and power consumption required by state-of-the-art models are incompatible with the constrained environments observed in edge systems, such as IoT nodes and wearable devices. Spiking Neural Networks (SNNs) can represent a solution in this sense: inspired by neuroscience, they reach unparalleled power and resource efficiency when run on dedicated hardware accelerators. However, when designing such accelerators, the number of design choices that can be made is huge. This paper presents SpikeExplorer, a modular and flexible Python tool for hardware-oriented Automatic Design Space Exploration to automate the configuration of FPGA accelerators for SNNs. Using Bayesian optimization, SpikeExplorer enables hardware-centric multi-objective optimization, supporting factors such as accuracy, area, latency, power, and various combinations during the exploration process. The tool searches for the optimal network architecture, neuron model, and internal and training parameters, trying to reach the desired constraints imposed by the user. It allows for a straightforward network configuration, providing the full set of explored points for the user to pick the trade-off that best fits the needs. The potential of SpikeExplorer is showcased using three benchmark datasets. It reaches 95.8% accuracy on the MNIST dataset, with a power consumption of 180 mW/image and a latency of 0.12 ms/image, making it a powerful tool for automatically optimizing SNNs.
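
One common way to pick a trade-off from a set of explored points is to keep only the non-dominated (Pareto-optimal) ones. The sketch below illustrates that idea in plain Python; it does not use the tool's API, and the field names and the design points are made up for illustration. Accuracy is maximized, while power and latency are minimized.

```python
def dominates(a, b):
    """True if design a is no worse than b on every objective and better on at least one."""
    no_worse = (
        a["accuracy"] >= b["accuracy"]
        and a["power_mw"] <= b["power_mw"]
        and a["latency_ms"] <= b["latency_ms"]
    )
    better = (
        a["accuracy"] > b["accuracy"]
        or a["power_mw"] < b["power_mw"]
        or a["latency_ms"] < b["latency_ms"]
    )
    return no_worse and better


def pareto_front(designs):
    """Keep the designs that no other design dominates."""
    return [d for d in designs if not any(dominates(o, d) for o in designs)]


# Hypothetical explored points, not results from the paper.
explored = [
    {"name": "A", "accuracy": 0.95, "power_mw": 200, "latency_ms": 0.10},
    {"name": "B", "accuracy": 0.93, "power_mw": 150, "latency_ms": 0.12},
    {"name": "C", "accuracy": 0.92, "power_mw": 210, "latency_ms": 0.15},
    {"name": "D", "accuracy": 0.97, "power_mw": 400, "latency_ms": 0.30},
]

front = pareto_front(explored)
print([d["name"] for d in front])
```

In this made-up set, design C is dropped because design A is better on all three objectives; the remaining designs each represent a different trade-off.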

SpikingJET: Enhancing Fault Injection for Fully and Convolutional Spiking Neural Networks

Mar 30, 2024

Abstract:As artificial neural networks become increasingly integrated into safety-critical systems such as autonomous vehicles, devices for medical diagnosis, and industrial automation, ensuring their reliability in the face of random hardware faults becomes paramount. This paper introduces SpikingJET, a novel fault injector designed specifically for fully connected and convolutional Spiking Neural Networks (SNNs). Our work underscores the critical need to evaluate the resilience of SNNs to hardware faults, considering their growing prominence in real-world applications. SpikingJET provides a comprehensive platform for assessing the resilience of SNNs by inducing errors and injecting faults into critical components such as synaptic weights, neuron model parameters, internal states, and activation functions. This paper demonstrates the effectiveness of SpikingJET through extensive software-level experiments on various SNN architectures, revealing insights into their vulnerability and resilience to hardware faults. Moreover, highlighting the importance of fault resilience in SNNs contributes to the ongoing effort to enhance the reliability and safety of Neural Network (NN)-powered systems in diverse domains.
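
A common software-level fault model for stored parameters is a single bit flip in a weight's binary representation. The sketch below illustrates that model on 32-bit floating-point weights using only the Python standard library. It is an assumption made for illustration: the abstract does not state which fault models SpikingJET implements, and the function names here are hypothetical.

```python
import struct


def flip_bit_float32(value, bit):
    """Return value with one bit of its IEEE 754 single-precision encoding flipped.

    bit 31 is the sign, bits 30..23 the exponent, bits 22..0 the mantissa.
    """
    if not 0 <= bit < 32:
        raise ValueError("bit must be in the range 0..31")
    (as_int,) = struct.unpack("<I", struct.pack("<f", value))
    as_int ^= 1 << bit
    (faulty,) = struct.unpack("<f", struct.pack("<I", as_int))
    return faulty


def inject_weight_fault(weights, index, bit):
    """Return a copy of weights with a bit flip injected into one entry."""
    faulty = list(weights)
    faulty[index] = flip_bit_float32(faulty[index], bit)
    return faulty


# Illustrative weights, chosen to be exactly representable in float32.
weights = [1.0, 0.75, -0.5]
print(inject_weight_fault(weights, 0, 31))  # sign bit of the first weight
print(inject_weight_fault(weights, 0, 23))  # lowest exponent bit of the first weight
```

The impact depends strongly on the bit position: a flip in the sign or exponent field can change a weight drastically, while a flip in a low mantissa bit is usually negligible. This is one reason fault injection campaigns typically sweep over both fault location and bit position.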

Spiker+: a framework for the generation of efficient Spiking Neural Networks FPGA accelerators for inference at the edge

Jan 02, 2024

Abstract:Including Artificial Neural Networks in embedded systems at the edge allows applications to exploit Artificial Intelligence capabilities directly within devices operating at the network periphery. This paper introduces Spiker+, a comprehensive framework for generating efficient, low-power, and low-area customized Spiking Neural Network (SNN) accelerators on FPGA for inference at the edge. Spiker+ presents a configurable multi-layer hardware SNN, a library of highly efficient neuron architectures, and a design framework, enabling the development of complex neural network accelerators with a few lines of Python code. Spiker+ is tested on two benchmark datasets, MNIST and the Spiking Heidelberg Digits (SHD). On MNIST, it demonstrates competitive performance compared to state-of-the-art SNN accelerators. It outperforms them in terms of resource allocation, with a requirement of 7,612 logic cells and 18 Block RAMs (BRAMs), which makes it fit in very small FPGAs, and in terms of power consumption, draining only 180 mW for a complete inference on an input image. The latency is comparable to those observed in the state of the art, at 780 µs/img. To the authors' knowledge, Spiker+ is the first SNN accelerator tested on the SHD. In this case, the accelerator requires 18,268 logic cells and 51 BRAMs, with an overall power consumption of 430 mW and a latency of 54 µs for a complete inference on input data. This underscores the significance of Spiker+ in the hardware-accelerated SNN landscape, making it an excellent solution to deploy configurable and tunable SNN architectures in resource- and power-constrained edge applications.

Design Space Exploration of Approximate Computing Techniques with a Reinforcement Learning Approach

Dec 29, 2023

Abstract:Approximate Computing (AxC) techniques have become increasingly popular in trading off accuracy for performance gains in various applications. Selecting the best AxC techniques for a given application is challenging. Among proposed approaches for exploring the design space, Machine Learning approaches such as Reinforcement Learning (RL) show promising results. In this paper, we propose an RL-based multi-objective Design Space Exploration strategy to find the approximate versions of the application that balance accuracy degradation against reductions in power and computation time. Our experimental results show a good trade-off between accuracy degradation and decreased power and computation time for some benchmarks.

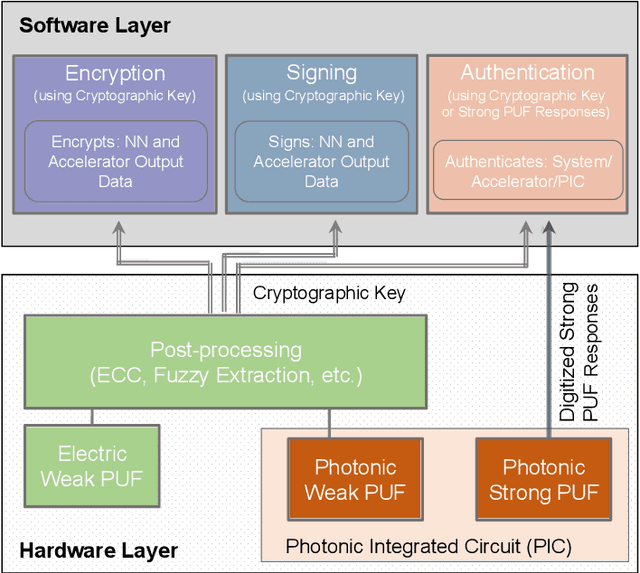

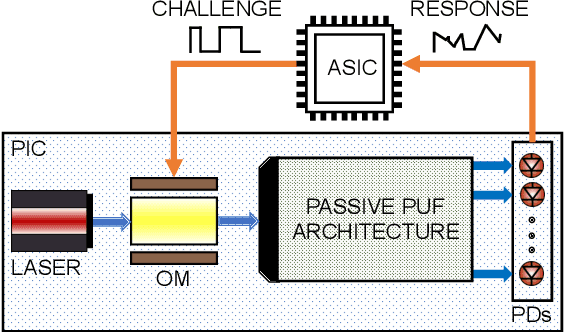

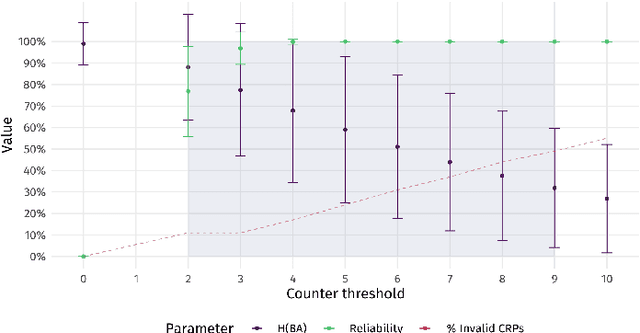

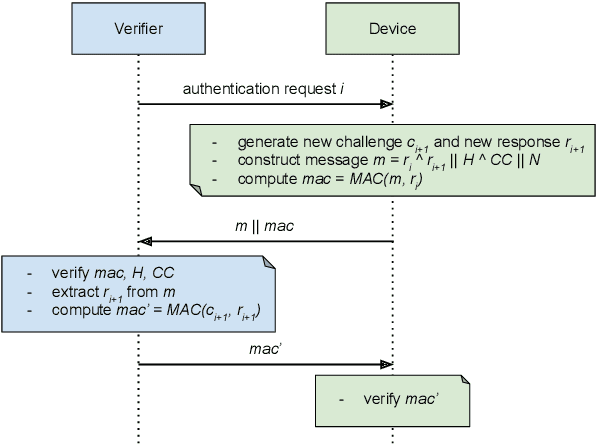

Security layers and related services within the Horizon Europe NEUROPULS project

Dec 14, 2023

Abstract:In the contemporary security landscape, the incorporation of photonics has emerged as a transformative force, unlocking a spectrum of possibilities to enhance the resilience and effectiveness of security primitives. This integration represents more than a mere technological augmentation; it signifies a paradigm shift towards innovative approaches capable of delivering security primitives with key properties for low-power systems. This not only augments the robustness of security frameworks, but also paves the way for novel strategies that adapt to the evolving challenges of the digital age. This paper discusses the security layers and related services that will be developed, modeled, and evaluated within the Horizon Europe NEUROPULS project. These layers will exploit novel implementations for security primitives based on physical unclonable functions (PUFs) using integrated photonics technology. Their objective is to provide a series of services to support the secure operation of a neuromorphic photonic accelerator for edge computing applications.

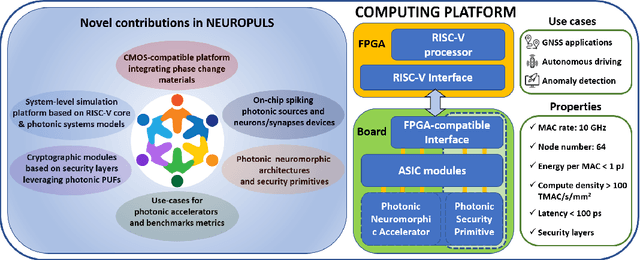

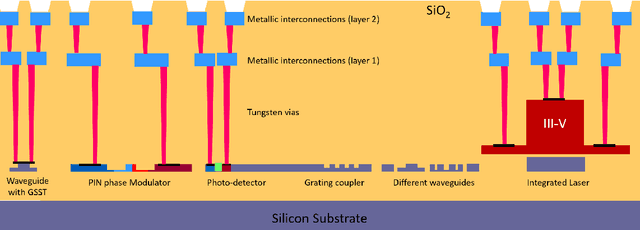

NEUROPULS: NEUROmorphic energy-efficient secure accelerators based on Phase change materials aUgmented siLicon photonicS

May 04, 2023

Abstract:This special session paper introduces the Horizon Europe NEUROPULS project, which targets the development of secure and energy-efficient RISC-V interfaced neuromorphic accelerators using augmented silicon photonics technology. Our approach aims to develop an augmented silicon photonics platform, an FPGA-powered RISC-V-connected computing platform, and a complete simulation platform to demonstrate the neuromorphic accelerator capabilities. In particular, their main advantages and limitations will be addressed concerning the underpinning technology for each platform. Then, we will discuss three targeted use cases for edge-computing applications: Global Navigation Satellite System (GNSS) anti-jamming, autonomous driving, and anomaly detection in edge devices. Finally, we will address the reliability and security aspects of the stand-alone accelerator implementation and the project use cases.