Alessio Carpegna

Energy-Efficient Digital Design: A Comparative Study of Event-Driven and Clock-Driven Spiking Neurons

Jun 16, 2025

Abstract:This paper presents a comprehensive evaluation of Spiking Neural Network (SNN) neuron models for hardware acceleration by comparing event-driven and clock-driven implementations. We begin our investigation in software, rapidly prototyping and testing various SNN models based on different variants of the Leaky Integrate and Fire (LIF) neuron across multiple datasets. This phase enables controlled performance assessment and informs design refinement. Our subsequent hardware phase, implemented on FPGA, validates the simulation findings and offers practical insights into design trade-offs. In particular, we examine how variations in input stimuli influence key performance metrics such as latency, power consumption, energy efficiency, and resource utilization. These results yield valuable guidelines for constructing energy-efficient, real-time neuromorphic systems. Overall, our work bridges software simulation and hardware realization, advancing the development of next-generation SNN accelerators.
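The difference between the two update styles compared in this abstract can be illustrated with a minimal software sketch. It is not the paper's implementation: the function names, the decay factor `beta`, the reset-to-zero rule, and the restriction to non-negative inputs (so the neuron can only fire when an input arrives) are all assumptions made for illustration. The clock-driven neuron updates at every time step, while the event-driven one updates only when an input arrives and applies the accumulated decay in a single step.

```python
def clock_driven(inputs, beta, threshold):
    """Update the neuron at every time step.

    inputs[t] is the weighted input at step t (0.0 when no spike arrives).
    Returns the output spike times and the number of updates performed.
    """
    v, out, updates = 0.0, [], 0
    for t, x in enumerate(inputs):
        v = beta * v + x          # leak, then integrate
        updates += 1
        if v >= threshold:        # fire and reset (assumed reset to zero)
            out.append(t)
            v = 0.0
    return out, updates


def event_driven(events, beta, threshold):
    """Update the neuron only when an input event arrives.

    events is a time-sorted list of (t, weighted_input) pairs.
    The decay for all skipped steps is applied at once as beta ** dt.
    """
    v, last_t, out, updates = 0.0, 0, [], 0
    for t, x in events:
        v = v * beta ** (t - last_t) + x
        last_t = t
        updates += 1
        if v >= threshold:
            out.append(t)
            v = 0.0
    return out, updates


# Hypothetical stimulus: four input spikes over ten time steps.
inputs = [0.0, 1.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 1.0]
events = [(t, x) for t, x in enumerate(inputs) if x != 0.0]

print(clock_driven(inputs, beta=0.5, threshold=1.5))
print(event_driven(events, beta=0.5, threshold=1.5))
```

Under these assumptions both versions produce the same output spikes, but the event-driven one performs one update per input event rather than one per time step, which is why input sparsity affects the two styles differently.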

SpikeExplorer: hardware-oriented Design Space Exploration for Spiking Neural Networks on FPGA

Apr 04, 2024

Abstract:One of today's main concerns is to bring Artificial Intelligence power to embedded systems for edge applications. The hardware resources and power consumption required by state-of-the-art models are incompatible with the constrained environments observed in edge systems, such as IoT nodes and wearable devices. Spiking Neural Networks (SNNs) can represent a solution in this sense: inspired by neuroscience, they reach unparalleled power and resource efficiency when run on dedicated hardware accelerators. However, when designing such accelerators, the number of possible design choices is huge. This paper presents SpikeExplorer, a modular and flexible Python tool for hardware-oriented Automatic Design Space Exploration to automate the configuration of FPGA accelerators for SNNs. Using Bayesian optimization, SpikeExplorer enables hardware-centric multi-objective optimization, supporting factors such as accuracy, area, latency, power, and various combinations during the exploration process. The tool searches for the optimal network architecture, neuron model, and internal and training parameters, trying to meet the constraints imposed by the user. It allows for a straightforward network configuration, providing the full set of explored points so the user can pick the trade-off that best fits their needs. The potential of SpikeExplorer is showcased using three benchmark datasets. It reaches 95.8% accuracy on the MNIST dataset, with a power consumption of 180 mW/image and a latency of 0.12 ms/image, making it a powerful tool for automatically optimizing SNNs.
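Picking a trade-off from a set of explored points usually starts by discarding dominated configurations. The sketch below shows a plain Pareto filter, assuming every objective is to be minimized; it is not the tool's API, the function names are hypothetical, and the sample points are invented purely for illustration (they are not results from the paper).

```python
def dominates(a, b):
    """True if point a is no worse than b on every objective and better on one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Invented example points: (error rate, power in mW, latency in ms).
explored = [
    (0.05, 200, 0.20),
    (0.04, 180, 0.12),
    (0.10, 90, 0.50),
    (0.10, 100, 0.60),
]

for point in pareto_front(explored):
    print(point)
```

The remaining points are the candidates among which a user would choose, for example trading accuracy for lower power.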

SpikingJET: Enhancing Fault Injection for Fully and Convolutional Spiking Neural Networks

Mar 30, 2024

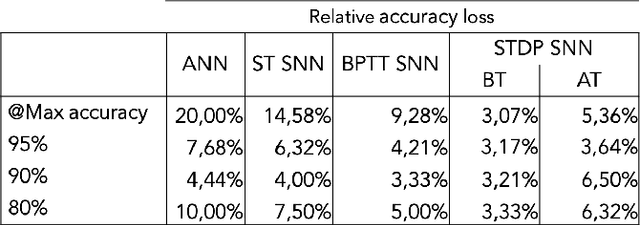

Abstract:As artificial neural networks become increasingly integrated into safety-critical systems such as autonomous vehicles, devices for medical diagnosis, and industrial automation, ensuring their reliability in the face of random hardware faults becomes paramount. This paper introduces SpikingJET, a novel fault injector designed specifically for fully connected and convolutional Spiking Neural Networks (SNNs). Our work underscores the critical need to evaluate the resilience of SNNs to hardware faults, considering their growing prominence in real-world applications. SpikingJET provides a comprehensive platform for assessing the resilience of SNNs by inducing errors and injecting faults into critical components such as synaptic weights, neuron model parameters, internal states, and activation functions. This paper demonstrates the effectiveness of SpikingJET through extensive software-level experiments on various SNN architectures, revealing insights into their vulnerability and resilience to hardware faults. Moreover, by highlighting the importance of fault resilience in SNNs, it contributes to the ongoing effort to enhance the reliability and safety of Neural Network (NN)-powered systems in diverse domains.

A Micro Architectural Events Aware Real-Time Embedded System Fault Injector

Jan 16, 2024

Abstract:The increasing complexity of modern systems poses significant challenges to the reliability, trustworthiness, and security of Safety-Critical Real-Time Embedded Systems (SACRES). Key issues include the susceptibility to phenomena such as instantaneous voltage spikes, electromagnetic interference, neutron strikes, and out-of-range temperatures. These factors can induce switch state changes in transistors, resulting in bit-flipping, soft errors, and transient corruption of stored data in memory. The occurrence of soft errors, in turn, may lead to system faults that can propel the system into a hazardous state. Particularly in critical sectors like automotive, avionics, or aerospace, such malfunctions can have real-world implications, potentially causing harm to individuals. This paper introduces a novel fault injector designed to facilitate the monitoring, aggregation, and examination of micro-architectural events. This is achieved by harnessing the microprocessor's Performance Monitoring Unit (PMU) and the debugging interface, with a specific focus on ensuring the repeatability of fault injections. The fault injection methodology targets bit-flipping within the memory system, affecting CPU registers and RAM. The outcomes of these fault injections enable a thorough analysis of the impact of soft errors and establish a robust correlation between the identified faults and the essential timing predictability demanded by SACRES.
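A repeatable single-bit-flip injection can be modelled in a few lines of software. The sketch below is only a conceptual model, not the injector described in the abstract, which works through the PMU and the debugging interface: here memory is a plain list of integers, the 32-bit word width is an assumption, and repeatability is obtained by seeding a pseudo-random generator. All function names are hypothetical.

```python
import random


def flip_bit(word, bit, width=32):
    """Return word with the given bit inverted (a single-bit soft error)."""
    if not 0 <= bit < width:
        raise ValueError("bit index out of range")
    return (word ^ (1 << bit)) & ((1 << width) - 1)


def inject_fault(memory, seed, width=32):
    """Flip one pseudo-randomly chosen bit in a copy of memory.

    The same seed always selects the same address and bit, so an
    injection campaign can be replayed exactly.
    """
    rng = random.Random(seed)
    addr = rng.randrange(len(memory))
    bit = rng.randrange(width)
    faulty = list(memory)
    faulty[addr] = flip_bit(memory[addr], bit, width)
    return faulty, addr, bit


# Hypothetical memory image of four 32-bit words.
golden = [0x00000000, 0xDEADBEEF, 0x12345678, 0xFFFFFFFF]
faulty, addr, bit = inject_fault(golden, seed=42)
print(f"flipped bit {bit} of word {addr}: "
      f"{golden[addr]:#010x} -> {faulty[addr]:#010x}")
```

Comparing the faulty run against the unmodified (golden) run is what allows the effect of each injected fault to be classified.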

Spiker+: a framework for the generation of efficient Spiking Neural Networks FPGA accelerators for inference at the edge

Jan 02, 2024

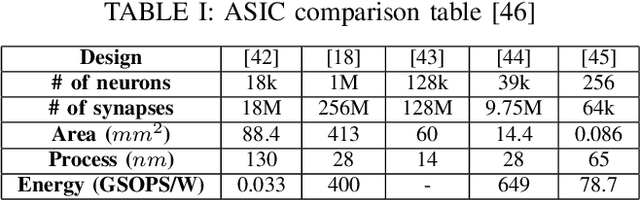

Abstract:Including Artificial Neural Networks in embedded systems at the edge allows applications to exploit Artificial Intelligence capabilities directly within devices operating at the network periphery. This paper introduces Spiker+, a comprehensive framework for generating efficient, low-power, and low-area customized Spiking Neural Network (SNN) accelerators on FPGA for inference at the edge. Spiker+ presents a configurable multi-layer hardware SNN, a library of highly efficient neuron architectures, and a design framework, enabling the development of complex neural network accelerators with a few lines of Python code. Spiker+ is tested on two benchmark datasets, MNIST and the Spiking Heidelberg Digits (SHD). On MNIST, it demonstrates competitive performance compared to state-of-the-art SNN accelerators. It outperforms them in terms of resource allocation, with a requirement of 7,612 logic cells and 18 Block RAMs (BRAMs), which makes it fit in very small FPGAs, and power consumption, draining only 180 mW for a complete inference on an input image. The latency is comparable to that of state-of-the-art accelerators, at 780 µs/image. To the authors' knowledge, Spiker+ is the first SNN accelerator tested on the SHD. In this case, the accelerator requires 18,268 logic cells and 51 BRAMs, with an overall power consumption of 430 mW and a latency of 54 µs for a complete inference on input data. This underscores the significance of Spiker+ in the hardware-accelerated SNN landscape, making it an excellent solution to deploy configurable and tunable SNN architectures in resource- and power-constrained edge applications.

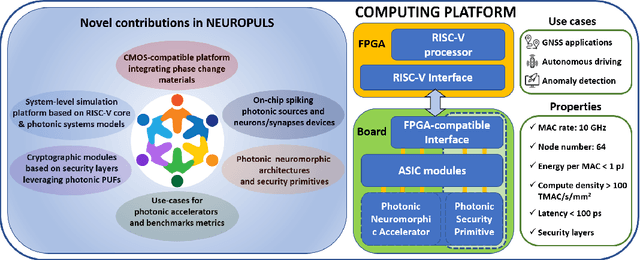

NEUROPULS: NEUROmorphic energy-efficient secure accelerators based on Phase change materials aUgmented siLicon photonicS

May 04, 2023

Abstract:This special session paper introduces the Horizon Europe NEUROPULS project, which targets the development of secure and energy-efficient RISC-V interfaced neuromorphic accelerators using augmented silicon photonics technology. Our approach aims to develop an augmented silicon photonics platform, an FPGA-powered RISC-V-connected computing platform, and a complete simulation platform to demonstrate the neuromorphic accelerator capabilities. In particular, the main advantages and limitations of each platform will be addressed with respect to its underpinning technology. Then, we will discuss three targeted use cases for edge-computing applications: Global Navigation Satellite System (GNSS) anti-jamming, autonomous driving, and anomaly detection in edge devices. Finally, we will address the reliability and security aspects of the stand-alone accelerator implementation and the project use cases.

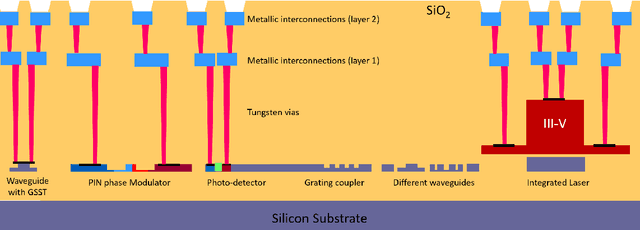

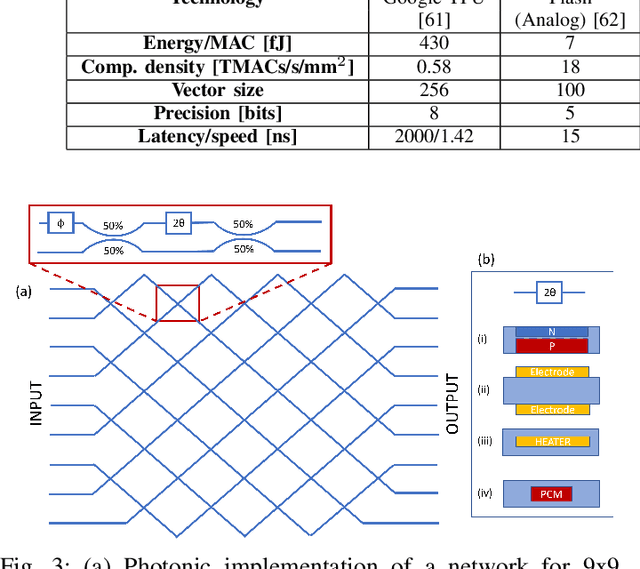

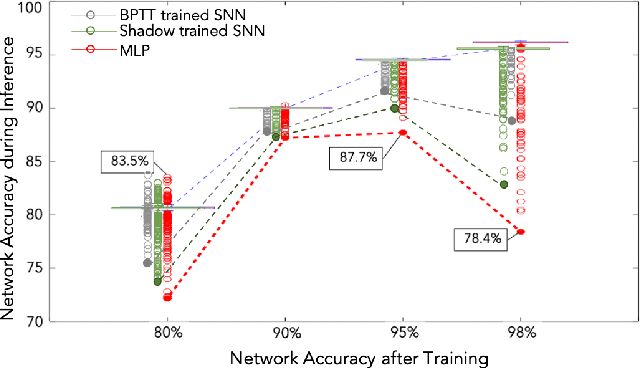

Special Session: Neuromorphic hardware design and reliability from traditional CMOS to emerging technologies

May 02, 2023

Abstract:The field of neuromorphic computing has been rapidly evolving in recent years, with an increasing focus on hardware design and reliability. This special session paper provides an overview of the recent developments in neuromorphic computing, focusing on hardware design and reliability. We first review the traditional CMOS-based approaches to neuromorphic hardware design and identify the challenges related to scalability, latency, and power consumption. We then investigate alternative approaches based on emerging technologies, specifically integrated photonics approaches within the NEUROPULS project. Finally, we examine the impact of device variability and aging on the reliability of neuromorphic hardware and present techniques for mitigating these effects. This review is intended to serve as a valuable resource for researchers and practitioners in neuromorphic computing.

FPGA-optimized Hardware acceleration for Spiking Neural Networks

Jan 18, 2022

Abstract:Artificial intelligence (AI) is gaining success and importance in many different tasks. The growing pervasiveness and complexity of AI systems push researchers towards developing dedicated hardware accelerators. Spiking Neural Networks (SNNs) represent a promising solution in this sense since they implement models that are more suitable for a reliable hardware design. Moreover, from a neuroscience perspective, they better emulate a human brain. This work presents the development of a hardware accelerator for an SNN, with off-line training, applied to an image recognition task, using MNIST as the target dataset. Many techniques are used to minimize the area and to maximize the performance, such as replacing the multiplication operation with simple bit shifts and minimizing the time spent on inactive spikes, which are useless for updating the neurons' internal state. The design targets a Xilinx Artix-7 FPGA, using around 40% of the available hardware resources in total and reducing the classification time by three orders of magnitude, with a small 4.5% impact on accuracy compared to its full-precision software counterpart.
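The two optimizations named in this abstract, replacing multiplication with bit shifts and skipping inactive spikes, can be illustrated with a small integer neuron update. This is a sketch under stated assumptions rather than the accelerator's actual datapath: the leak is assumed to be of the form v - v / 2^k so that it reduces to a shift and a subtraction, the reset-to-zero rule is assumed, and `lif_step` and its parameters are hypothetical names.

```python
def lif_step(v, spikes, weights, threshold, leak_shift):
    """One update of an integer leaky integrate-and-fire neuron.

    v          : current membrane potential (integer)
    spikes     : iterable of 0/1 input spikes
    weights    : integer synaptic weights, one per input
    leak_shift : the leak removes v / 2**leak_shift at each step
    Returns the new potential and whether the neuron fired.
    """
    # Exponential leak without a multiplier: v * (1 - 2**-k) == v - (v >> k)
    # up to integer rounding.
    v = v - (v >> leak_shift)

    # Only active inputs contribute; inactive spikes are skipped entirely.
    for s, w in zip(spikes, weights):
        if s:
            v += w

    fired = v >= threshold
    if fired:
        v = 0  # assumed reset-to-zero behaviour
    return v, fired


# Hypothetical values: potential 64, leak of 1/4, two active inputs out of three.
print(lif_step(64, [1, 0, 1], [10, 100, 5], threshold=60, leak_shift=2))
print(lif_step(64, [1, 0, 1], [10, 100, 5], threshold=100, leak_shift=2))
```

Restricting the leak factor to powers of two is what makes the shift possible; it trades some freedom in choosing the decay rate for a datapath that needs no multiplier.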
