Alejandro Morales-Hernández

Multi-objective hyperparameter optimization with performance uncertainty

Sep 09, 2022

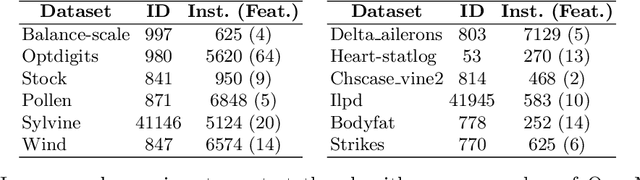

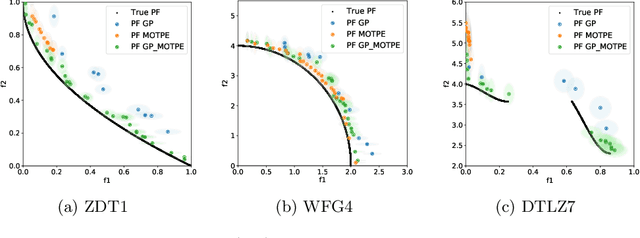

Abstract: The performance of any Machine Learning (ML) algorithm is impacted by the choice of its hyperparameters. As training and evaluating an ML algorithm is usually expensive, the hyperparameter optimization (HPO) method needs to be computationally efficient to be useful in practice. Most existing approaches to multi-objective HPO use evolutionary strategies and metamodel-based optimization. However, few methods have been developed to account for uncertainty in the performance measurements. This paper presents results on multi-objective hyperparameter optimization with uncertainty in the evaluation of ML algorithms. We combine the sampling strategy of Tree-structured Parzen Estimators (TPE) with the metamodel obtained after training a Gaussian Process Regression (GPR) with heterogeneous noise. Experimental results on three analytical test functions and three ML problems show an improvement over multi-objective TPE and GPR with respect to the hypervolume indicator.
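The hypervolume indicator mentioned in the abstract can be illustrated for the two-objective case. The following is a minimal sketch, assuming both objectives are minimized and a user-chosen reference point; the function names `pareto_front` and `hypervolume_2d` are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the non-dominated points (both objectives minimized), sorted by f1."""
    front: List[Point] = []
    best_f2 = float("inf")
    # After sorting by f1 (then f2), a point is non-dominated only if it
    # strictly improves on the best f2 seen so far.
    for f1, f2 in sorted(set(points)):
        if f2 < best_f2:
            front.append((f1, f2))
            best_f2 = f2
    return front


def hypervolume_2d(points: List[Point], reference: Point) -> float:
    """Area dominated by `points` and bounded by `reference` (minimization)."""
    ref1, ref2 = reference
    # Points that do not strictly improve on the reference contribute nothing.
    inside = [(f1, f2) for f1, f2 in points if f1 < ref1 and f2 < ref2]
    volume = 0.0
    prev_f2 = ref2
    # Sweep the front left to right, adding one horizontal strip per point.
    for f1, f2 in pareto_front(inside):
        volume += (ref1 - f1) * (prev_f2 - f2)
        prev_f2 = f2
    return volume


if __name__ == "__main__":
    evaluations = [(1, 3), (2, 2), (3, 1), (3, 3)]  # illustrative values only
    print(hypervolume_2d(evaluations, reference=(4, 4)))
```

A larger hypervolume means the set of evaluated configurations covers more of the objective space relative to the reference point, which is why it is a common single-number summary for comparing multi-objective optimizers.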

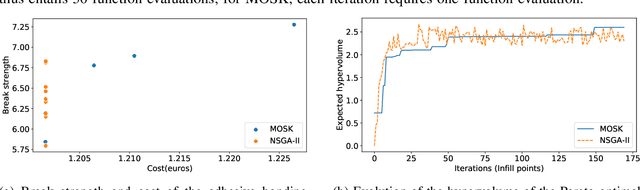

Constrained multi-objective optimization of process design parameters in settings with scarce data: an application to adhesive bonding

Dec 16, 2021

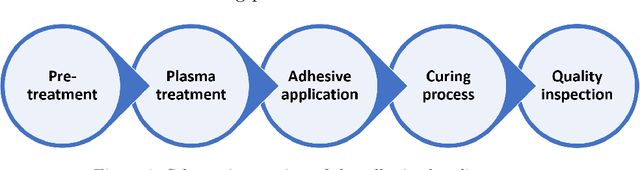

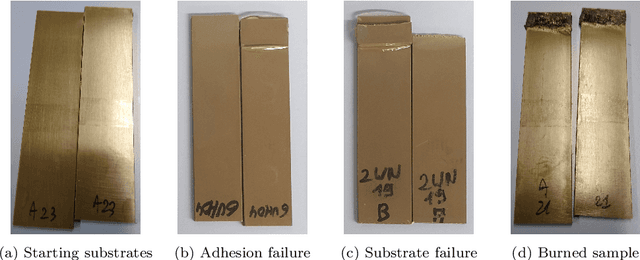

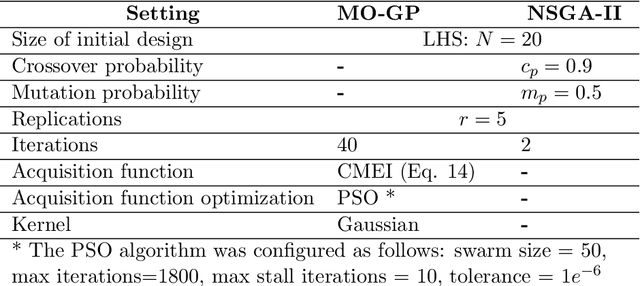

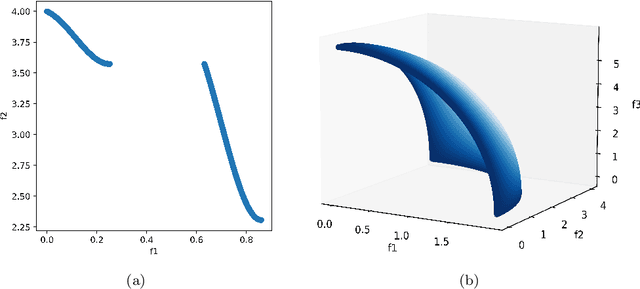

Abstract: Adhesive joints are increasingly used in industry for a wide variety of applications because of their favorable characteristics such as high strength-to-weight ratio, design flexibility, limited stress concentrations, planar force transfer, good damage tolerance and fatigue resistance. Finding the optimal process parameters for an adhesive bonding process is challenging: the optimization is inherently multi-objective (aiming to maximize break strength while minimizing cost) and constrained (the process should not result in any visual damage to the materials, and stress tests should not result in failures that are adhesion-related). Real-life physical experiments in the lab are expensive to perform; traditional evolutionary approaches (such as genetic algorithms) are then ill-suited to solve the problem, due to the prohibitive number of experiments required for evaluation. In this research, we successfully applied specific machine learning techniques (Gaussian Process Regression and Logistic Regression) to emulate the objective and constraint functions based on a limited amount of experimental data. The techniques are embedded in a Bayesian optimization algorithm, which succeeds in detecting Pareto-optimal process settings in a highly efficient way (i.e., requiring a limited number of extra experiments).
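To make "Pareto-optimal process settings" under constraints concrete, here is a minimal sketch that filters a list of experiments down to the feasible, non-dominated ones. It assumes break strength is maximized, cost is minimized, and feasibility is already known as a boolean; the `Trial` class and all numbers are hypothetical illustrations, and the sketch does not reproduce the paper's Bayesian optimization algorithm.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Trial:
    strength: float  # break strength, to be maximized (hypothetical units)
    cost: float      # process cost, to be minimized (hypothetical units)
    feasible: bool   # True if no visual damage and no adhesion-related failure


def dominates(a: Trial, b: Trial) -> bool:
    """True if `a` is at least as good as `b` in both objectives and better in one."""
    no_worse = a.strength >= b.strength and a.cost <= b.cost
    strictly_better = a.strength > b.strength or a.cost < b.cost
    return no_worse and strictly_better


def pareto_feasible(trials: List[Trial]) -> List[Trial]:
    """Keep feasible trials that no other feasible trial dominates."""
    candidates = [t for t in trials if t.feasible]
    return [t for t in candidates
            if not any(dominates(other, t) for other in candidates)]


if __name__ == "__main__":
    experiments = [
        Trial(strength=10, cost=5, feasible=True),
        Trial(strength=12, cost=7, feasible=True),
        Trial(strength=9, cost=6, feasible=True),    # dominated by the first trial
        Trial(strength=15, cost=4, feasible=False),  # best objectives, but infeasible
    ]
    for trial in pareto_feasible(experiments):
        print(trial)
```

Infeasible trials are dropped before the dominance check, so a setting that violates a constraint can never push a feasible one off the front.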

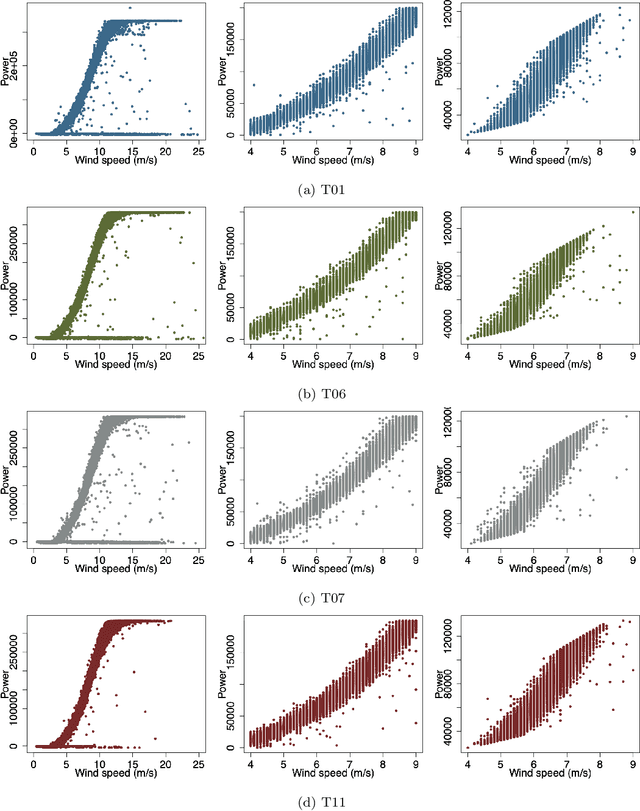

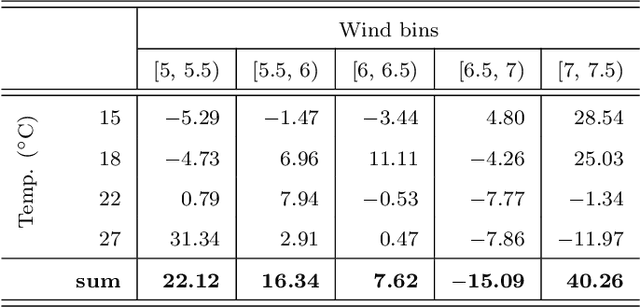

Measuring Wind Turbine Health Using Drifting Concepts

Dec 09, 2021

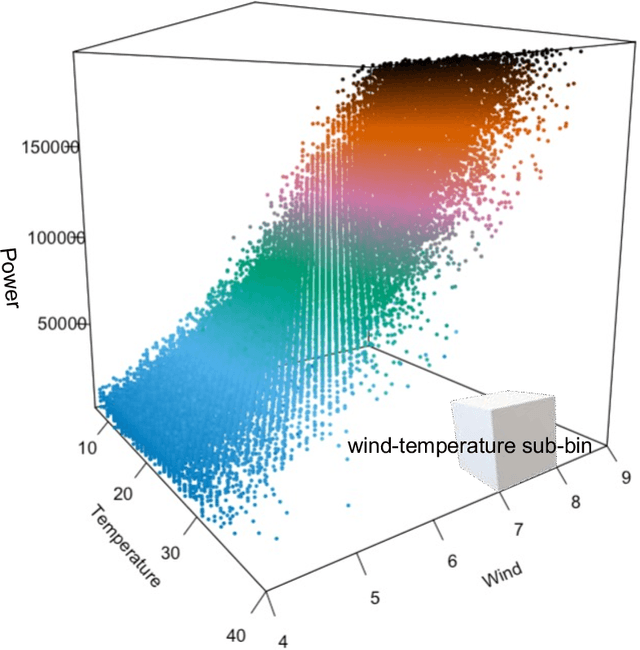

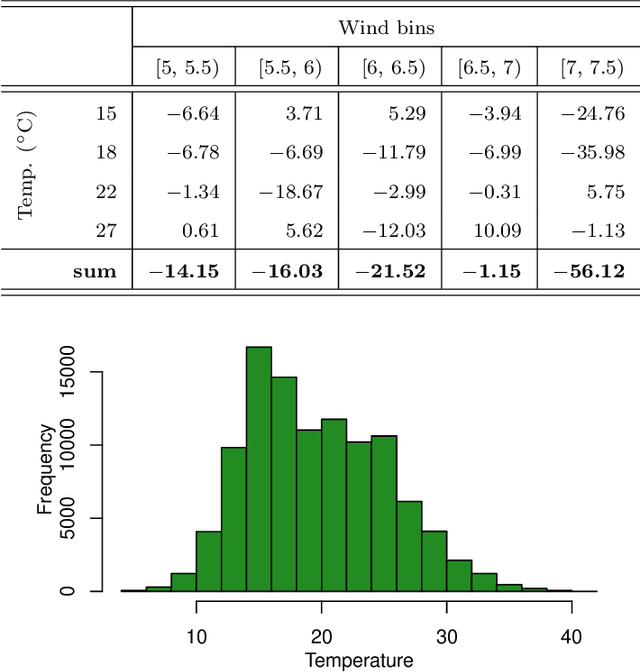

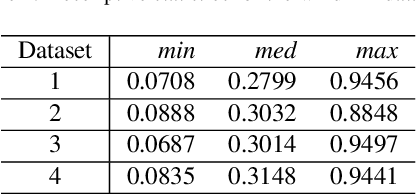

Abstract: Time series processing is an essential aspect of wind turbine health monitoring. Despite the progress in this field, there is still room for new methods to improve modeling quality. In this paper, we propose two new approaches for the analysis of wind turbine health. Both approaches are based on abstract concepts, implemented using fuzzy sets, which summarize and aggregate the underlying raw data. By observing the change in concepts, we infer changes in the turbine's health. Analyses are carried out separately for different external conditions (wind speed and temperature). We extract concepts that represent relatively low, moderate, and high power production. The first method aims at evaluating the decrease or increase in relatively high and low power production. This task is performed using a regression-like model. The second method evaluates the overall drift of the extracted concepts. Large drift indicates that the power production process undergoes fluctuations in time. Concepts are labeled using linguistic labels, thus equipping our model with improved interpretability features. We applied the proposed approach to process publicly available data describing four wind turbines. The simulation results have shown that the aging process is not homogeneous in all wind turbines.
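The idea of summarizing raw power readings with fuzzy concepts and then tracking how those concepts change can be sketched as follows. This is only an illustration under stated assumptions: power is normalized to [0, 1], the membership functions are simple piecewise-linear shapes chosen here for convenience, and "drift" is taken as the change in mean membership between two time windows. None of these choices is confirmed by the abstract, and all function names are hypothetical.

```python
from typing import Dict, Sequence


def clamp01(value: float) -> float:
    """Clip a value to the interval [0, 1]."""
    return max(0.0, min(1.0, value))


def memberships(power: float) -> Dict[str, float]:
    """Degree to which a normalized power reading is low, moderate, or high."""
    return {
        "low": clamp01((0.5 - power) / 0.5),        # 1 at power=0, 0 from 0.5 upward
        "moderate": clamp01(1.0 - abs(power - 0.5) / 0.5),  # peaks at power=0.5
        "high": clamp01((power - 0.5) / 0.5),       # 0 up to 0.5, 1 at power=1
    }


def mean_memberships(window: Sequence[float]) -> Dict[str, float]:
    """Aggregate a window of readings into one activation value per concept."""
    totals = {"low": 0.0, "moderate": 0.0, "high": 0.0}
    for power in window:
        for label, degree in memberships(power).items():
            totals[label] += degree
    return {label: total / len(window) for label, total in totals.items()}


def concept_drift(early: Sequence[float], late: Sequence[float]) -> Dict[str, float]:
    """Change in each concept's mean activation from the early to the late window."""
    before, after = mean_memberships(early), mean_memberships(late)
    return {label: after[label] - before[label] for label in before}


if __name__ == "__main__":
    # Illustrative readings only: production drops from high to moderate.
    print(concept_drift(early=[1.0, 1.0], late=[0.5, 0.5]))
```

Because each concept carries a linguistic label, a negative change for "high" reads directly as "the turbine produces relatively high power less often than before".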

Multi-objective simulation optimization of the adhesive bonding process of materials

Dec 09, 2021

Abstract: Automotive companies are increasingly looking for ways to make their products lighter, using novel materials and novel bonding processes to join these materials together. Finding the optimal process parameters for such an adhesive bonding process is challenging. In this research, we successfully applied Bayesian optimization using Gaussian Process Regression and Logistic Regression to efficiently (i.e., requiring few experiments) guide the design of experiments toward the Pareto-optimal process parameter settings.

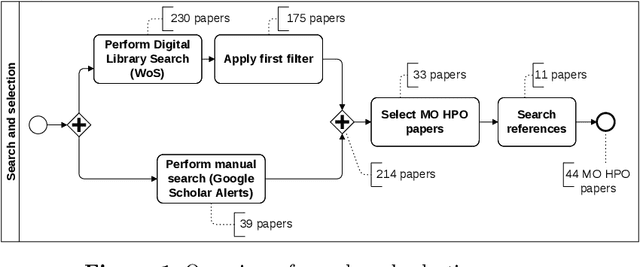

A survey on multi-objective hyperparameter optimization algorithms for Machine Learning

Nov 23, 2021

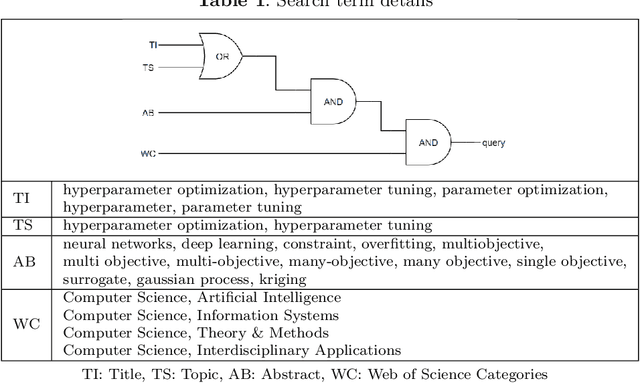

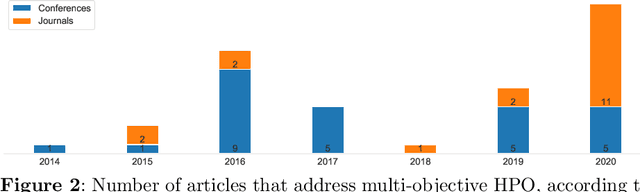

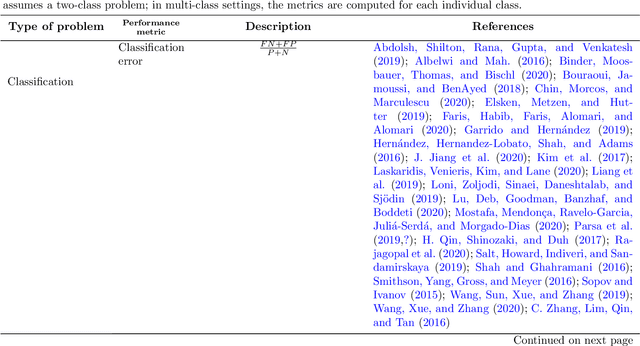

Abstract: Hyperparameter optimization (HPO) is a necessary step to ensure the best possible performance of Machine Learning (ML) algorithms. Several methods have been developed to perform HPO; most of these are focused on optimizing one performance measure (usually an error-based measure), and the literature on such single-objective HPO problems is vast. Recently, though, algorithms have appeared which focus on optimizing multiple conflicting objectives simultaneously. This article presents a systematic survey of the literature published between 2014 and 2020 on multi-objective HPO algorithms, distinguishing between metaheuristic-based algorithms, metamodel-based algorithms, and approaches using a mixture of both. We also discuss the quality metrics used to compare multi-objective HPO procedures and present future research directions.

Online learning of windmill time series using Long Short-term Cognitive Networks

Jul 01, 2021

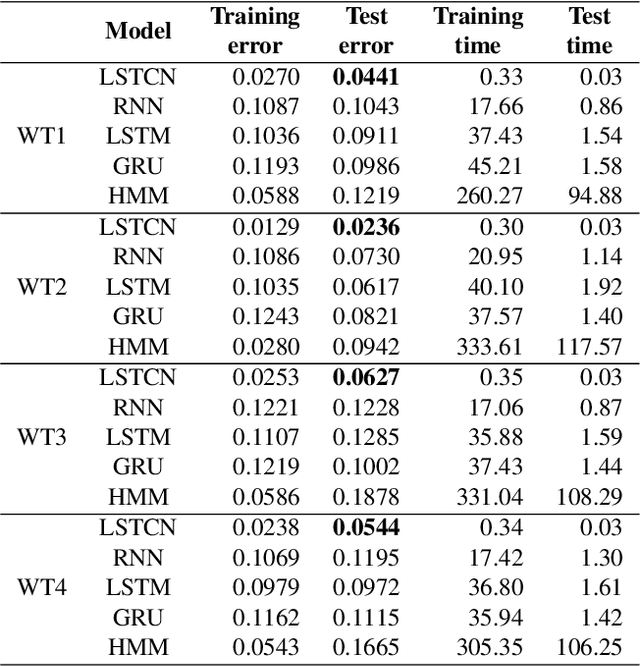

Abstract: Forecasting windmill time series is often the basis of other processes such as anomaly detection, health monitoring, or maintenance scheduling. The amount of data generated on windmill farms makes online learning the most viable strategy to follow. Such settings require retraining the model each time a new batch of data is available. However, updating the model with the new information is often very expensive using traditional Recurrent Neural Networks (RNNs). In this paper, we use Long Short-term Cognitive Networks (LSTCNs) to forecast windmill time series in online settings. These recently introduced neural systems consist of chained Short-term Cognitive Network blocks, each processing a temporal data chunk. The learning algorithm of these blocks is based on a very fast, deterministic learning rule that makes LSTCNs suitable for online learning tasks. The numerical simulations using a case study with four windmills showed that our approach reported the lowest forecasting errors compared with a simple RNN, a Long Short-term Memory, a Gated Recurrent Unit, and a Hidden Markov Model. What is perhaps more important is that the LSTCN approach is significantly faster than these state-of-the-art models.
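The general pattern of processing a series chunk by chunk with a fast, deterministic update can be sketched as below. This is not the LSTCN learning rule, which the abstract does not spell out. As a stand-in, the sketch fits a single autoregressive weight per chunk with a closed-form regularized least-squares update that pulls the new weight toward the one learned on the previous chunk. The function names and the regularization strength `lam` are hypothetical.

```python
from typing import List, Sequence


def update_weight(chunk: Sequence[float], w_prev: float, lam: float = 1.0) -> float:
    """Closed-form minimizer of sum((y - w*x)^2) + lam * (w - w_prev)^2.

    Here x is each value in the chunk and y is the value that follows it,
    so w is a one-step-ahead autoregressive coefficient. Requires lam > 0.
    """
    xs = chunk[:-1]
    ys = chunk[1:]
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return (sxy + lam * w_prev) / (sxx + lam)


def online_fit(chunks: Sequence[Sequence[float]], w0: float = 0.0,
               lam: float = 1.0) -> List[float]:
    """Process chunks in arrival order, carrying the weight from one to the next."""
    weights: List[float] = []
    w = w0
    for chunk in chunks:
        w = update_weight(chunk, w, lam)  # no iterative training, one pass per chunk
        weights.append(w)
    return weights


if __name__ == "__main__":
    # Illustrative data only: each value is twice the previous one.
    stream = [[1.0, 2.0, 4.0], [4.0, 8.0, 16.0]]
    print(online_fit(stream))
```

Each update touches only the newest chunk and the previous weight, so the cost per batch stays constant as the stream grows. That is the property that makes closed-form rules attractive for online settings.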
