Yamisleydi Salgueiro

Which is the best model for my data?

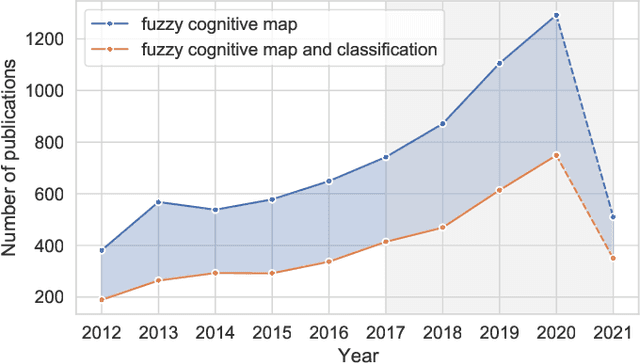

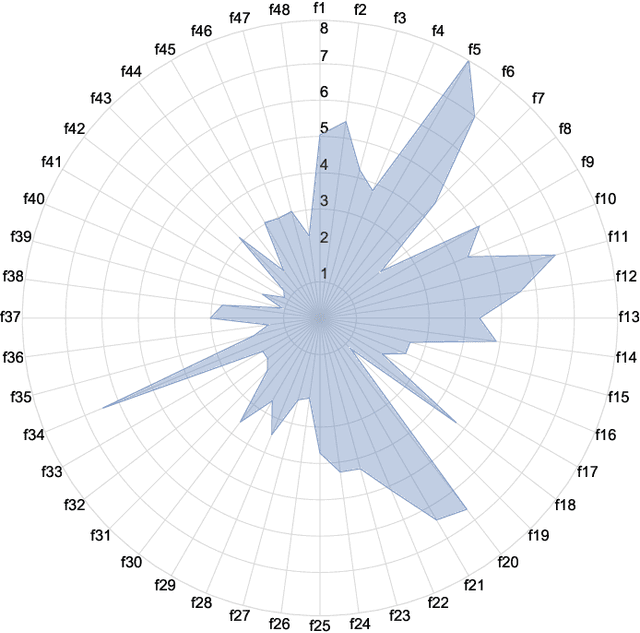

Oct 26, 2022

Abstract:In this paper, we tackle the problem of selecting the optimal model for a given structured pattern classification dataset. In this context, a model can be understood as a classifier and a hyperparameter configuration. The proposed meta-learning approach relies purely on machine learning and involves four major steps. Firstly, we present a concise collection of 62 meta-features that address the problem of information cancellation when aggregating measure values involving positive and negative measurements. Secondly, we describe two different approaches for synthetic data generation intended to enlarge the training data. Thirdly, we fit a set of pre-defined classification models for each classification problem while optimizing their hyperparameters using grid search. The goal is to create a meta-dataset such that each row denotes a multilabel instance describing a specific problem. The features of these meta-instances denote the statistical properties of the generated datasets, while the labels encode the grid search results as binary vectors such that best-performing models are positively labeled. Finally, we tackle the model selection problem with several multilabel classifiers, including a Convolutional Neural Network designed to handle tabular data. The simulation results show that our meta-learning approach can correctly predict an optimal model for 91% of the synthetic datasets and for 87% of the real-world datasets. Furthermore, we noticed that most meta-classifiers produced better results when using our meta-features. Overall, our proposal differs from other meta-learning approaches since it tackles the algorithm selection and hyperparameter tuning problems in a single step. Toward the end, we perform a feature importance analysis to determine which statistical features drive the model selection mechanism.
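The two ideas in this abstract that are easiest to picture in code are information cancellation and the binary label vectors. The sketch below is only an illustration under stated assumptions: the abstract does not say how the 62 meta-features are computed, so `sign_split_mean`, `best_model_labels`, the `tol` parameter, and the toy numbers are all hypothetical and not taken from the paper.

```python
from statistics import fmean


def sign_split_mean(values):
    """Average positive and negative measurements separately.

    Hypothetical helper: one possible way to keep opposite-signed
    measurements from cancelling each other out when aggregated.
    """
    pos = [v for v in values if v > 0]
    neg = [v for v in values if v < 0]
    return (fmean(pos) if pos else 0.0, fmean(neg) if neg else 0.0)


def best_model_labels(scores, tol=0.0):
    """Encode per-model scores as a binary multilabel vector.

    Hypothetical helper: every model whose score is within `tol`
    of the best score is labeled 1, all others 0.
    """
    best = max(scores)
    return [1 if s >= best - tol else 0 for s in scores]


# Toy per-feature measurements (e.g. skewness values) with mixed signs.
measurements = [-2.0, 2.0, -1.0, 1.0]

# A plain mean hides the fact that the features are strongly skewed.
plain_mean = fmean(measurements)

# Aggregating each sign separately preserves that information.
split_means = sign_split_mean(measurements)

# Toy grid-search accuracies for four candidate models (made-up values).
labels = best_model_labels([0.81, 0.93, 0.93, 0.70])
```

Here the plain mean is zero while the sign-split means are not, and two models tie for the best score, which is why the target is a multilabel vector rather than a single class.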

Forward Composition Propagation for Explainable Neural Reasoning

Dec 23, 2021

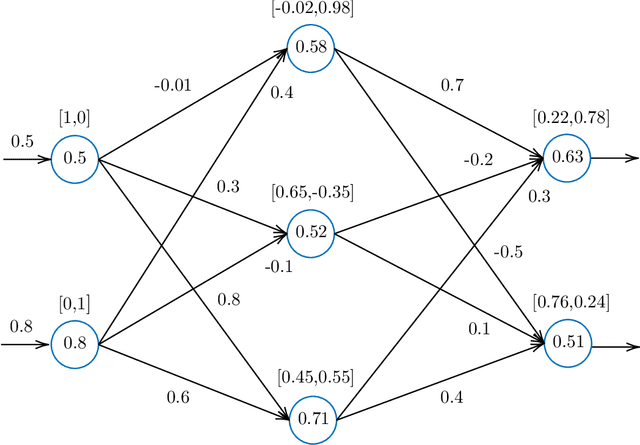

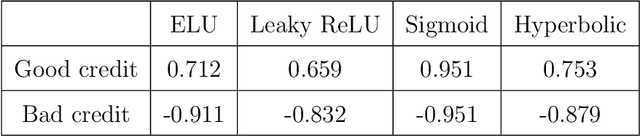

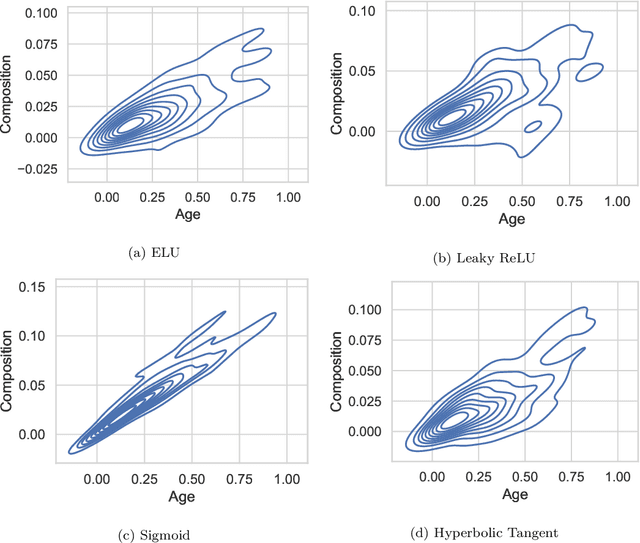

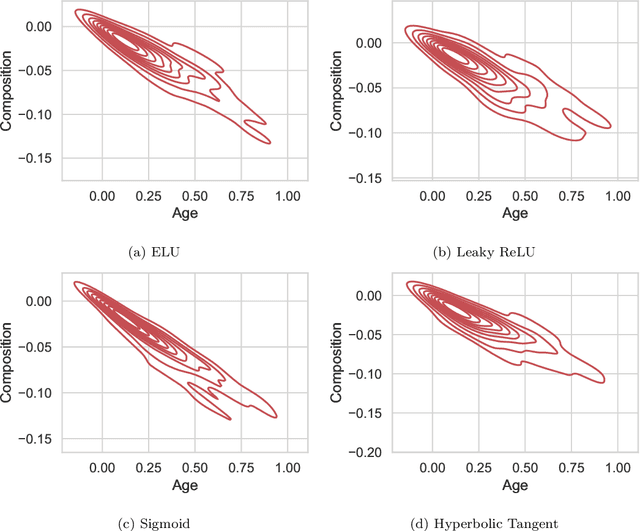

Abstract:This paper proposes an algorithm called Forward Composition Propagation (FCP) to explain the predictions of feed-forward neural networks operating on structured pattern recognition problems. In the proposed FCP algorithm, each neuron is described by a composition vector indicating the role of each problem feature in that neuron. Composition vectors are initialized using a given input instance and subsequently propagated through the whole network until we reach the output layer. It is worth mentioning that the algorithm is executed once the network's training is done. The sign of each composition value indicates whether the corresponding feature excites or inhibits the neuron, while the absolute value quantifies such an impact. Aiming to validate the FCP algorithm's correctness, we develop a case study concerning bias detection in a state-of-the-art problem in which the ground truth is known. The simulation results show that the composition values closely align with the expected behavior of protected features.

Measuring Wind Turbine Health Using Drifting Concepts

Dec 09, 2021

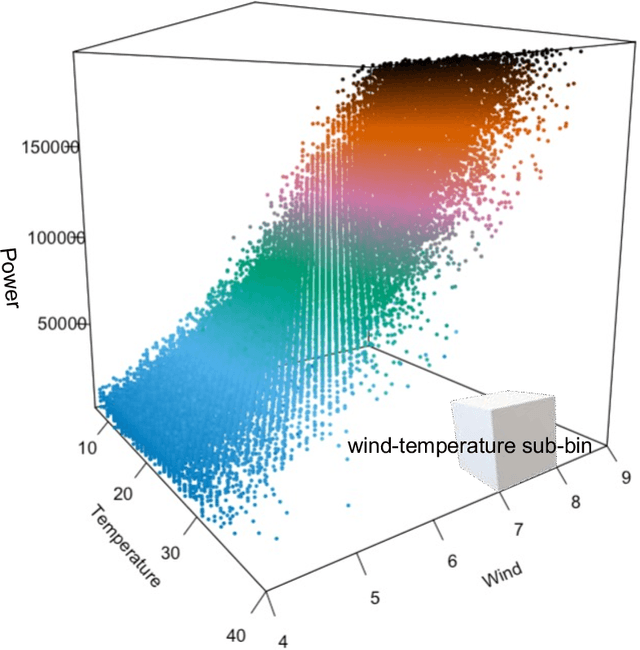

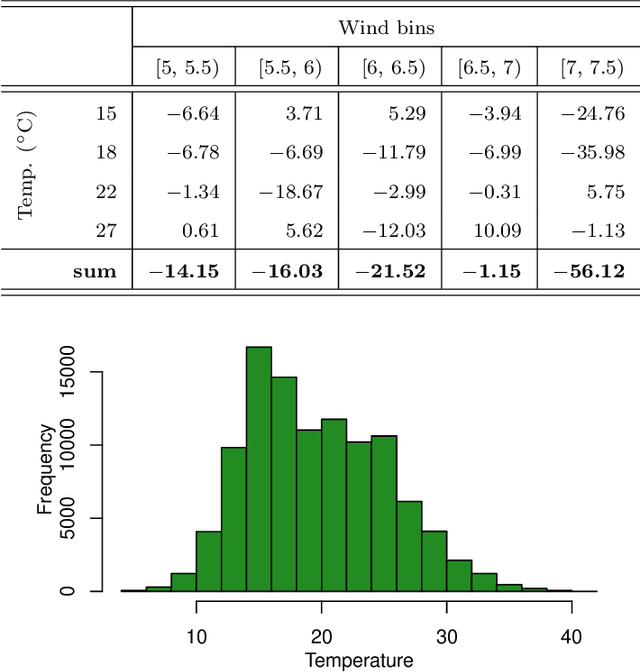

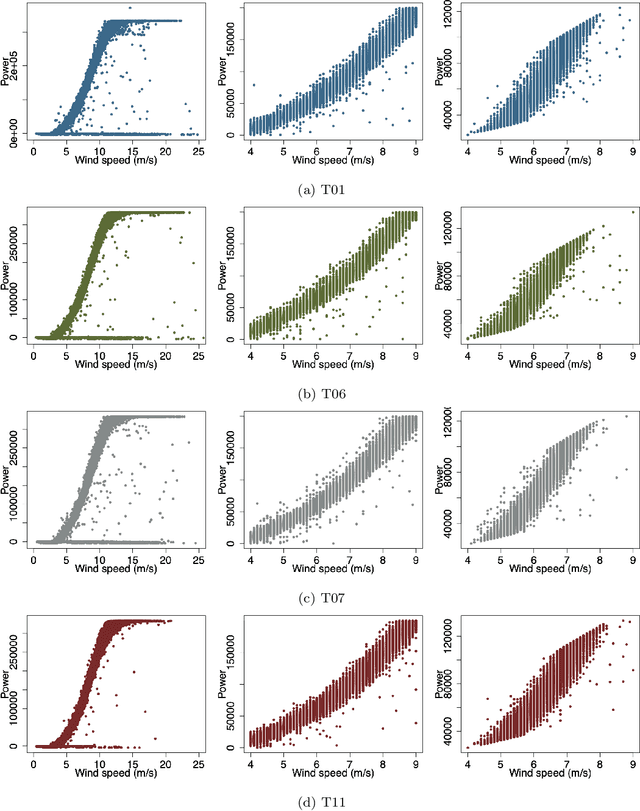

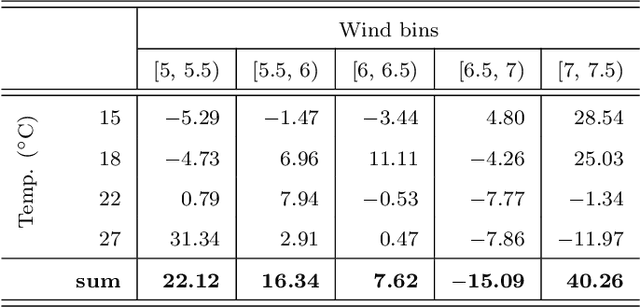

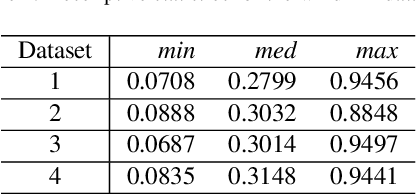

Abstract:Time series processing is an essential aspect of wind turbine health monitoring. Despite the progress in this field, there is still room for new methods to improve modeling quality. In this paper, we propose two new approaches for the analysis of wind turbine health. Both approaches are based on abstract concepts, implemented using fuzzy sets, which summarize and aggregate the underlying raw data. By observing the change in concepts, we infer the change in the turbine's health. Analyses are carried out separately for different external conditions (wind speed and temperature). We extract concepts that represent relatively low, moderate, and high power production. The first method aims at evaluating the decrease or increase in relatively high and low power production. This task is performed using a regression-like model. The second method evaluates the overall drift of the extracted concepts. Large drift indicates that the power production process undergoes fluctuations in time. Concepts are labeled using linguistic labels, thus equipping our model with improved interpretability features. We applied the proposed approach to process publicly available data describing four wind turbines. The simulation results have shown that the aging process is not homogeneous in all wind turbines.

Recurrence-Aware Long-Term Cognitive Network for Explainable Pattern Classification

Jul 07, 2021

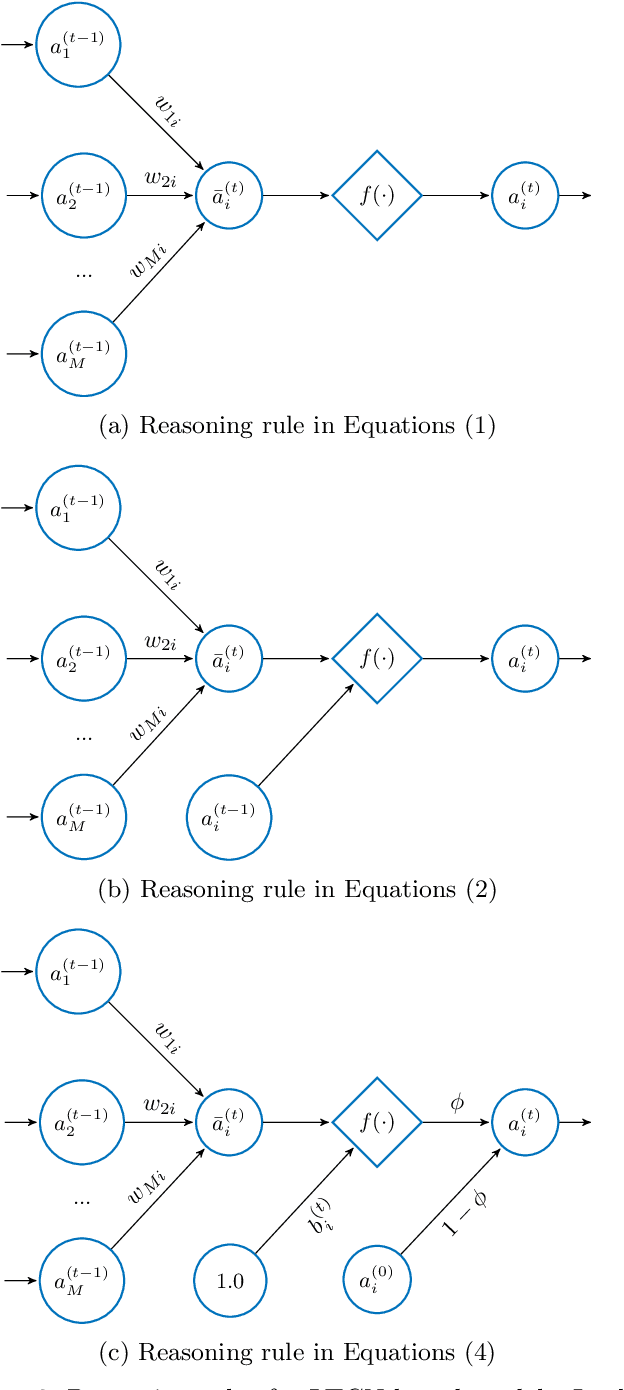

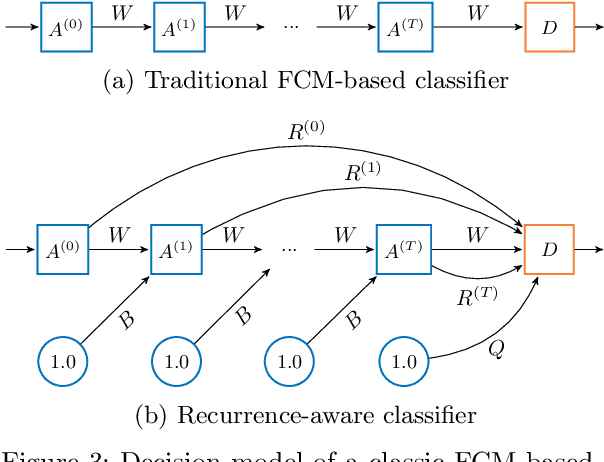

Abstract:Machine learning solutions for pattern classification problems are nowadays widely deployed in society and industry. However, the lack of transparency and accountability of most accurate models often hinders their meaningful and safe use. Thus, there is a clear need for developing explainable artificial intelligence mechanisms. There exist model-agnostic methods that summarize feature contributions, but their interpretability is limited to specific predictions made by black-box models. An open challenge is to develop models that have intrinsic interpretability and produce their own explanations, even for classes of models that are traditionally considered black boxes like (recurrent) neural networks. In this paper, we propose an LTCN-based model for interpretable pattern classification of structured data. Our method brings its own mechanism for providing explanations by quantifying the relevance of each feature in the decision process. For supporting the interpretability without affecting the performance, the model incorporates more flexibility through a quasi-nonlinear reasoning rule that allows controlling nonlinearity. Besides, we propose a recurrence-aware decision model that evades the issues posed by unique fixed points while introducing a deterministic learning method to compute the learnable parameters. The simulations show that our interpretable model obtains competitive performance when compared to the state-of-the-art white and black boxes.

Online learning of windmill time series using Long Short-term Cognitive Networks

Jul 01, 2021

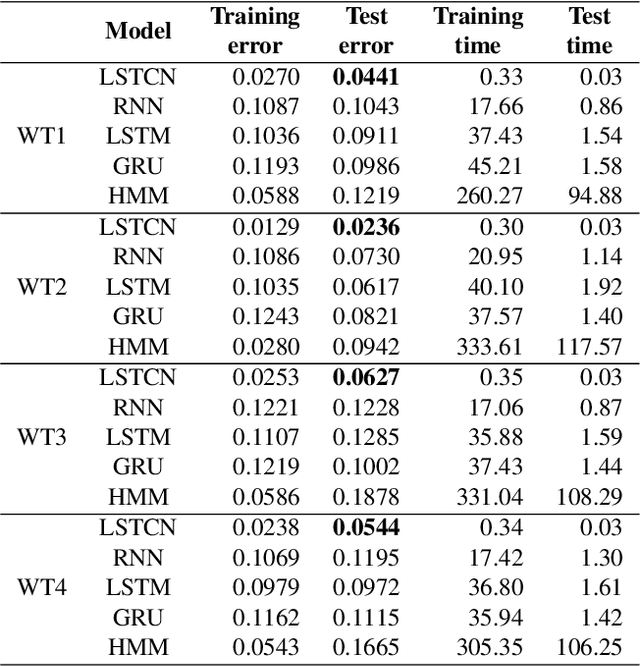

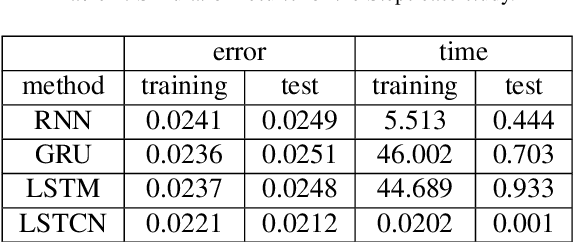

Abstract:Forecasting windmill time series is often the basis of other processes such as anomaly detection, health monitoring, or maintenance scheduling. The amount of data generated on windmill farms makes online learning the most viable strategy to follow. Such settings require retraining the model each time a new batch of data is available. However, updating the model with the new information is often very expensive to perform using traditional Recurrent Neural Networks (RNNs). In this paper, we use Long Short-term Cognitive Networks (LSTCNs) to forecast windmill time series in online settings. These recently introduced neural systems consist of chained Short-term Cognitive Network blocks, each processing a temporal data chunk. The learning algorithm of these blocks is based on a very fast, deterministic learning rule that makes LSTCNs suitable for online learning tasks. The numerical simulations using a case study with four windmills showed that our approach reported the lowest forecasting errors with respect to a simple RNN, a Long Short-term Memory, a Gated Recurrent Unit, and a Hidden Markov Model. What is perhaps more important is that the LSTCN approach is significantly faster than these state-of-the-art models.

Long Short-term Cognitive Networks

Jun 30, 2021

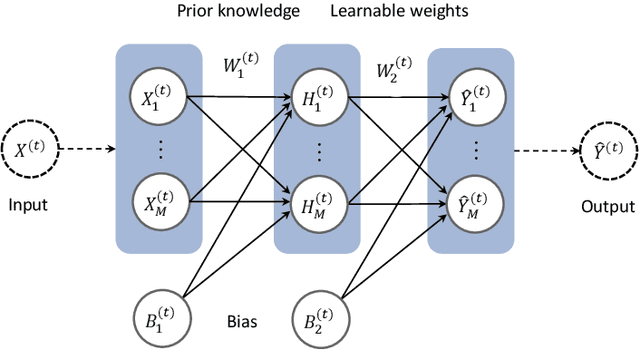

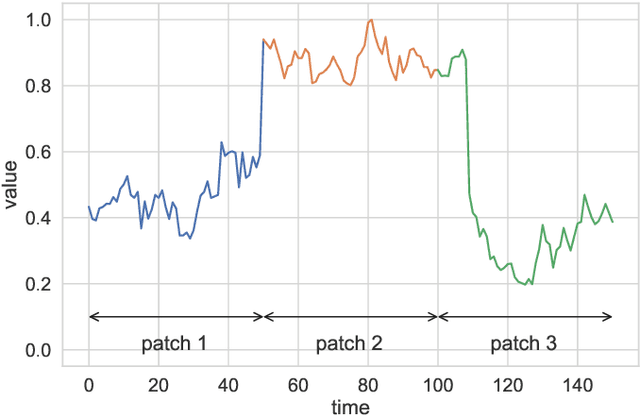

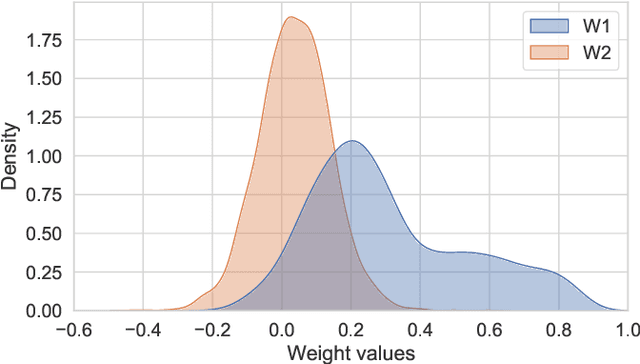

Abstract:In this paper, we present a recurrent neural system named Long Short-term Cognitive Networks (LSTCNs) as a generalisation of the Short-term Cognitive Network (STCN) model. Such a generalisation is motivated by the difficulty of forecasting very long time series in an efficient, greener fashion. The LSTCN model can be defined as a collection of STCN blocks, each processing a specific time patch of the (multivariate) time series being modelled. In this neural ensemble, each block passes information to the subsequent one in the form of a weight matrix referred to as the prior knowledge matrix. As a second contribution, we propose a deterministic learning algorithm to compute the learnable weights while preserving the prior knowledge resulting from previous learning processes. As a third contribution, we introduce a feature influence score as a proxy to explain the forecasting process in multivariate time series. The simulations using three case studies show that our neural system reports small forecasting errors while being up to thousands of times faster than state-of-the-art recurrent models.
