Maikel Leon Espinosa

Prolog-based agnostic explanation module for structured pattern classification

Dec 23, 2021

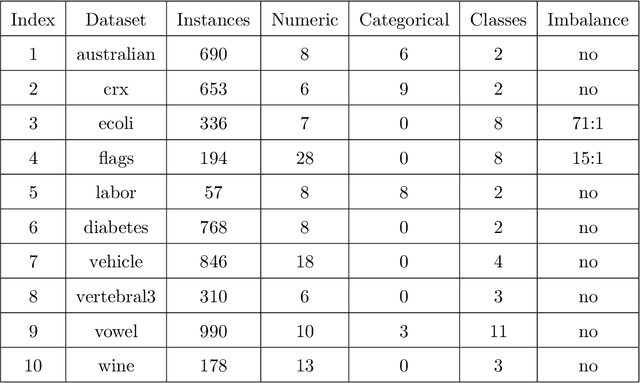

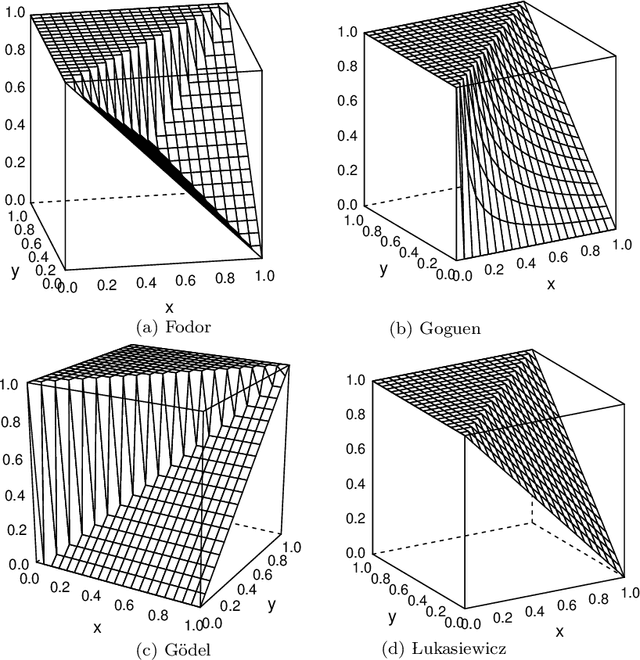

Abstract: This paper presents a Prolog-based reasoning module that generates counterfactual explanations from the predictions computed by a black-box classifier. The proposed symbolic reasoning module can also resolve what-if queries using the ground-truth labels instead of the predicted ones. Overall, our approach comprises four well-defined stages that can be applied to any structured pattern classification problem. Firstly, we pre-process the given dataset by imputing missing values and normalizing the numerical features. Secondly, we transform numerical features into symbolic ones using fuzzy clustering, such that the extracted fuzzy clusters are mapped to an ordered set of predefined symbols. Thirdly, we encode each instance as a Prolog rule using the nominal values, the predefined symbols, the decision classes, and the confidence values. Fourthly, we compute the overall confidence of each Prolog rule using fuzzy-rough set theory to handle the uncertainty caused by transforming numerical quantities into symbols. This step comes with an additional theoretical contribution: a new similarity function for comparing the previously defined Prolog rules involving confidence values. Finally, we implement a chatbot as a proxy between human beings and the Prolog-based reasoning module to resolve natural language queries and generate counterfactual explanations. In numerical simulations on synthetic datasets, we study the performance of our system when using different fuzzy operators and similarity functions. We conclude by illustrating how our reasoning module works through different use cases.
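The first three stages can be illustrated with a minimal Python sketch. It is not the paper's implementation: the symbol names, the fixed cluster centers, the `instance/…` predicate, and the helper names are all hypothetical, and the memberships use the standard fuzzy c-means formula with centers assumed to be already known rather than learned. The fuzzy-rough confidence of the fourth stage is not reproduced; the confidence passed to the rule here is just a placeholder number.

```python
from typing import List, Sequence, Tuple

# Hypothetical ordered symbol set; the abstract does not name the symbols.
SYMBOLS = ["low", "medium", "high"]


def min_max_normalize(values: Sequence[float]) -> List[float]:
    """Stage 1 (partly): rescale a numerical feature to [0, 1]."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]


def memberships(x: float, centers: Sequence[float], m: float = 2.0) -> List[float]:
    """Fuzzy c-means style membership of x in each cluster (centers assumed given)."""
    dists = [abs(x - c) for c in centers]
    if 0.0 in dists:  # x coincides with a center: crisp membership
        return [1.0 if d == 0.0 else 0.0 for d in dists]
    exp = 2.0 / (m - 1.0)
    return [1.0 / sum((d_i / d_k) ** exp for d_k in dists) for d_i in dists]


def symbolize(x: float, centers: Sequence[float]) -> Tuple[str, float]:
    """Stage 2: map a normalized value to the symbol of its strongest cluster."""
    u = memberships(x, centers)
    best = max(range(len(u)), key=u.__getitem__)
    return SYMBOLS[best], u[best]


def to_prolog_fact(instance_id: int, symbols: List[str], label: str,
                   confidence: float) -> str:
    """Stage 3: encode one instance as a Prolog fact (hypothetical predicate name)."""
    args = ", ".join(symbols + [label, f"{confidence:.2f}"])
    return f"instance({instance_id}, {args})."


if __name__ == "__main__":
    centers = [0.0, 0.5, 1.0]  # assumed cluster centers, sorted in symbol order
    ages = min_max_normalize([10.0, 20.0, 30.0])
    symbols = [symbolize(a, centers)[0] for a in ages]
    print(to_prolog_fact(1, symbols, "positive", 0.93))
```

Keeping the centers sorted is what makes the symbol set ordered: the i-th center corresponds to the i-th symbol, so a counterfactual query can talk about moving a feature "up" or "down" by one symbol.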

Recurrence-Aware Long-Term Cognitive Network for Explainable Pattern Classification

Jul 07, 2021

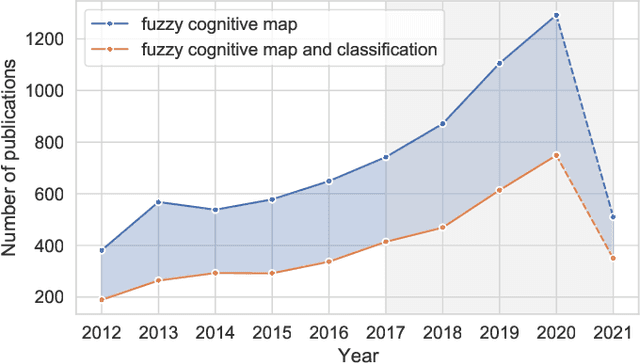

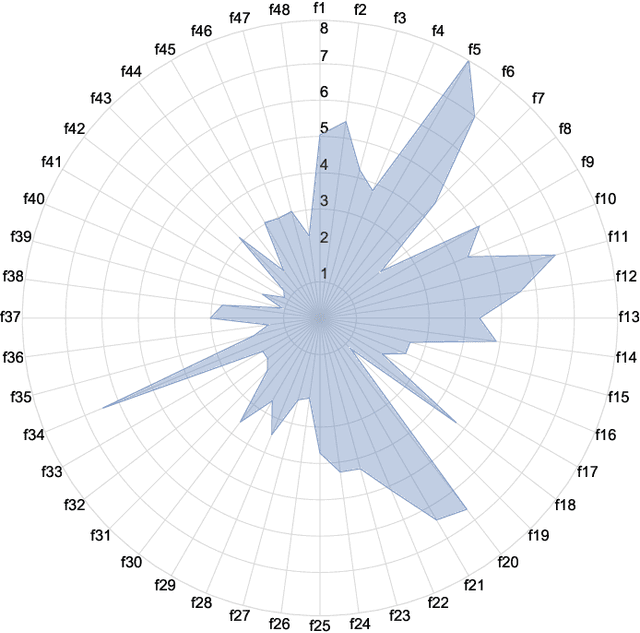

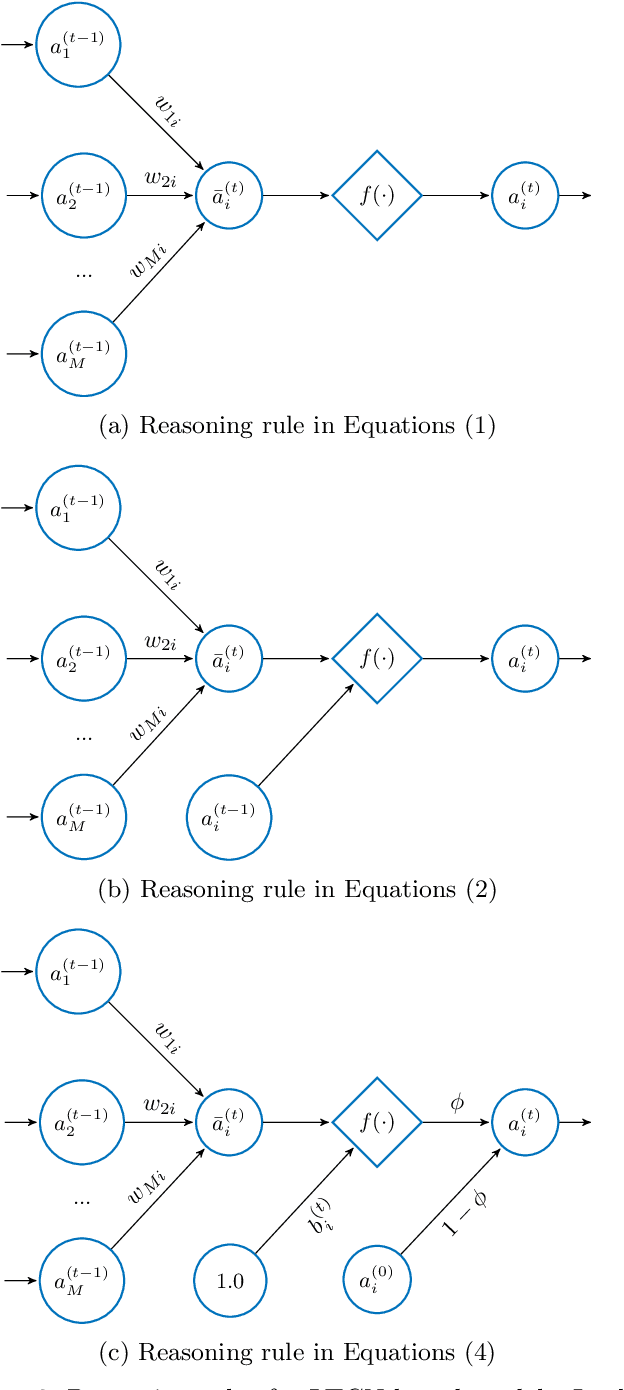

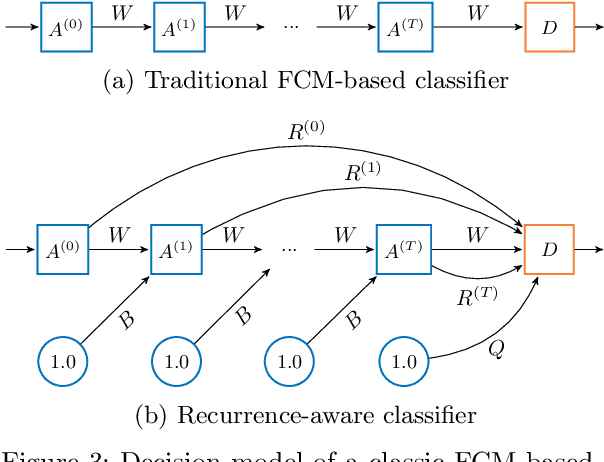

Abstract: Machine learning solutions for pattern classification problems are nowadays widely deployed in society and industry. However, the lack of transparency and accountability of the most accurate models often hinders their meaningful and safe use. Thus, there is a clear need for developing explainable artificial intelligence mechanisms. There exist model-agnostic methods that summarize feature contributions, but their interpretability is limited to specific predictions made by black-box models. An open challenge is to develop models that have intrinsic interpretability and produce their own explanations, even for classes of models that are traditionally considered black boxes, such as (recurrent) neural networks. In this paper, we propose a Long-Term Cognitive Network (LTCN)-based model for interpretable pattern classification of structured data. Our method brings its own mechanism for providing explanations by quantifying the relevance of each feature in the decision process. To support interpretability without affecting performance, the model gains flexibility through a quasi-nonlinear reasoning rule that allows controlling nonlinearity. In addition, we propose a recurrence-aware decision model that evades the issues posed by unique fixed points while introducing a deterministic learning method to compute the learnable parameters. The simulations show that our interpretable model obtains competitive performance when compared to state-of-the-art white-box and black-box models.
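The abstract does not spell out the quasi-nonlinear reasoning rule. As a rough sketch only, one way to "control nonlinearity" in a recurrent update is to blend a nonlinear recurrent term with the initial activations through a coefficient `phi` in [0, 1]. The update form, the sigmoid transfer function, and all names below are assumptions made for illustration, not the formulation confirmed by this page.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def quasi_nonlinear_step(prev: Sequence[float], initial: Sequence[float],
                         weights: Sequence[Sequence[float]],
                         bias: Sequence[float], phi: float) -> List[float]:
    """One assumed update: phi * f(prev @ W + b) + (1 - phi) * initial."""
    n = len(initial)
    out = []
    for j in range(n):
        raw = sum(prev[i] * weights[i][j] for i in range(n)) + bias[j]
        out.append(phi * sigmoid(raw) + (1.0 - phi) * initial[j])
    return out


def iterate(initial: Sequence[float], weights: Sequence[Sequence[float]],
            bias: Sequence[float], phi: float, steps: int) -> List[float]:
    """Run the assumed update for a fixed number of recurrent steps."""
    state = list(initial)
    for _ in range(steps):
        state = quasi_nonlinear_step(state, initial, weights, bias, phi)
    return state


if __name__ == "__main__":
    a0 = [0.2, 0.8]
    w = [[0.0, 0.5], [-0.5, 0.0]]  # hypothetical weights
    b = [0.0, 0.0]
    for phi in (0.0, 0.5, 1.0):
        print(phi, iterate(a0, w, b, phi, steps=10))
```

Under this assumed form, `phi = 0` keeps the state equal to the input, while `phi = 1` gives a fully nonlinear recurrence. Because the input is re-injected at every step for `phi < 1`, the state reached after many steps still depends on the initial activations, which is the kind of behavior a model needs if it is to avoid collapsing to a single input-independent fixed point.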
