Alejandro Ariza-Casabona

Efficient Large-Scale Cross-Domain Sequential Recommendation with Dynamic State Representations

Aug 28, 2025

Abstract:Recently, autoregressive recommendation models (ARMs), such as Meta's HSTU model, have emerged as a major breakthrough over traditional Deep Learning Recommendation Models (DLRMs), exhibiting the highly sought-after scaling-law behaviour. However, when applied to multi-domain scenarios, the transformer architecture's attention maps become a computational bottleneck, as they attend to all items across every domain. To tackle this challenge, systems must efficiently balance inter- and intra-domain knowledge transfer. In this work, we introduce a novel approach for scalable multi-domain recommendation systems by replacing full inter-domain attention with two innovative mechanisms: 1) Transition-Aware Positional Embeddings (TAPE): We propose novel positional embeddings that account for domain-transition-specific information. This allows attention to be focused solely on intra-domain items, reducing the unnecessary computational cost of attending to irrelevant domains. 2) Dynamic Domain State Representation (DDSR): We introduce a dynamic state representation for each domain, which is stored and accessed during subsequent token predictions. This enables the efficient transfer of relevant domain information without relying on full attention maps. Our method offers a scalable solution to the challenges posed by large-scale, multi-domain recommendation systems and demonstrates significant improvements in retrieval tasks by separately modelling and combining inter- and intra-domain representations.
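The abstract does not say how TAPE or DDSR are implemented, so the following is only a minimal sketch of the one idea it does state: restricting causal attention to items from the same domain. The function names and the toy domain sequence are hypothetical, and the positional embeddings and per-domain state are deliberately left out.

```python
from typing import List


def intra_domain_causal_mask(domains: List[int]) -> List[List[bool]]:
    """Build a causal attention mask limited to same-domain items.

    mask[i][j] is True when position i may attend to position j, i.e. when
    j is not in the future (j <= i) and both items share a domain.
    This is an illustrative assumption, not the paper's implementation.
    """
    n = len(domains)
    return [
        [j <= i and domains[j] == domains[i] for j in range(n)]
        for i in range(n)
    ]


def allowed_pairs(mask: List[List[bool]]) -> int:
    """Count the (query, key) pairs that the mask leaves active."""
    return sum(cell for row in mask for cell in row)


# Toy interaction history: each entry is the (hypothetical) domain id of an item.
domains = [0, 0, 1, 0, 1]
mask = intra_domain_causal_mask(domains)

# Number of active pairs under an unrestricted causal mask, for comparison.
full_causal_pairs = len(domains) * (len(domains) + 1) // 2

print(allowed_pairs(mask), "of", full_causal_pairs, "causal pairs remain")
```

In this toy sequence the intra-domain mask keeps fewer pairs than full causal attention, which is the source of the cost saving the abstract describes. Cross-domain information would then have to reach the model through some other channel, which is the role the abstract assigns to TAPE and DDSR.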

ContextGNN goes to Elliot: Towards Benchmarking Relational Deep Learning for Static Link Prediction (aka Personalized Item Recommendation)

Mar 20, 2025

Abstract:Relational deep learning (RDL) ranks among the most exciting advances in machine learning for relational databases, leveraging the representational power of message-passing graph neural networks (GNNs) to derive useful knowledge and run prediction tasks on tables connected through primary-to-foreign key links. The RDL paradigm has recently been applied successfully to recommendation through its most recent representative deep learning architecture, namely ContextGNN. While acknowledging ContextGNN's improved performance on real-world recommendation datasets and tasks, preliminary tests for the more traditional static link prediction task (aka personalized item recommendation) on the popular Amazon Book dataset have shown that ContextGNN still has room for improvement compared to other state-of-the-art GNN-based recommender systems. To this end, in this paper we integrate ContextGNN within Elliot, a popular framework for reproducibility and benchmarking analyses that counts around 50 state-of-the-art recommendation models from the literature to date. On this basis, we run preliminary experiments on three standard recommendation datasets and against six state-of-the-art GNN-based recommender systems, confirming similar trends to those observed by the authors in their original paper. The code is publicly available on GitHub: https://github.com/danielemalitesta/Rel-DeepLearning-RecSys.

MM-GEF: Multi-modal representation meet collaborative filtering

Aug 14, 2023

Abstract:In modern e-commerce, item content features in various modalities offer accurate yet comprehensive information to recommender systems. The majority of previous work either focuses on learning effective item representations while modelling user-item interactions, or explores item-item relationships by analysing multi-modal features. Those methods, however, fail to incorporate the collaborative item-user-item relationships into the multi-modal feature-based item structure. In this work, we propose a graph-based item structure enhancement method, MM-GEF: Multi-Modal recommendation with Graph Early-Fusion, which effectively combines the latent item structure underlying multi-modal contents with the collaborative signals. Instead of processing the content features of different modalities separately, we show that the early fusion of multi-modal features provides significant improvement. MM-GEF learns refined item representations by injecting structural information obtained from both multi-modal and collaborative signals. Through extensive experiments on four publicly available datasets, we demonstrate systematic improvements of our method over state-of-the-art multi-modal recommendation methods.
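As a rough illustration of what "early fusion" can mean here, the sketch below fuses per-modality item features into one vector before deriving any item-item structure, then builds a k-nearest-neighbour item graph from the fused vectors. The abstract does not describe the fusion operator or the graph construction, so concatenation of L2-normalised features, cosine similarity, the kNN rule, and all names and toy values are assumptions; the collaborative signals that MM-GEF also uses are not modelled.

```python
import math
from typing import Dict, List


def l2_normalise(v: List[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def early_fuse(visual: List[float], textual: List[float]) -> List[float]:
    """Fuse modalities first by concatenating the normalised feature vectors."""
    return l2_normalise(visual) + l2_normalise(textual)


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def knn_item_graph(fused: List[List[float]], k: int) -> Dict[int, List[int]]:
    """Link every item to its k most similar items in the fused space."""
    graph: Dict[int, List[int]] = {}
    for i, vi in enumerate(fused):
        others = [j for j in range(len(fused)) if j != i]
        # Sort by descending similarity, breaking ties by item index.
        others.sort(key=lambda j: (-cosine(vi, fused[j]), j))
        graph[i] = others[:k]
    return graph


# Hypothetical toy features for three items: (visual, textual).
items = [
    ([1.0, 0.0], [1.0, 0.0]),
    ([1.0, 0.0], [0.9, 0.1]),
    ([0.0, 1.0], [0.0, 1.0]),
]
fused = [early_fuse(v, t) for v, t in items]
graph = knn_item_graph(fused, k=1)
print(graph)
```

The contrast with late fusion is that a single graph is derived from the joint representation, rather than one graph per modality being built and merged afterwards.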

Exploiting Graph Structured Cross-Domain Representation for Multi-Domain Recommendation

Feb 22, 2023

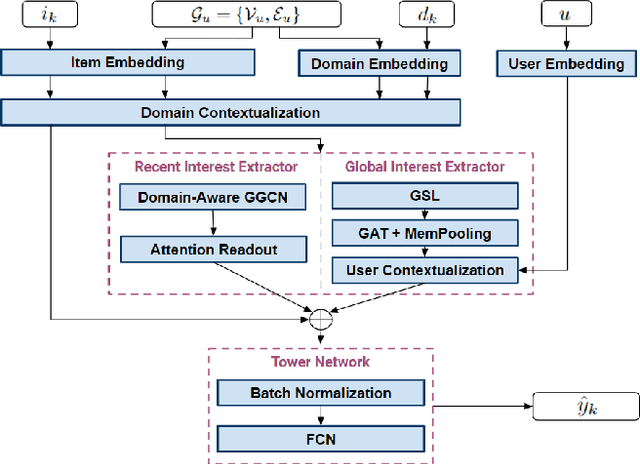

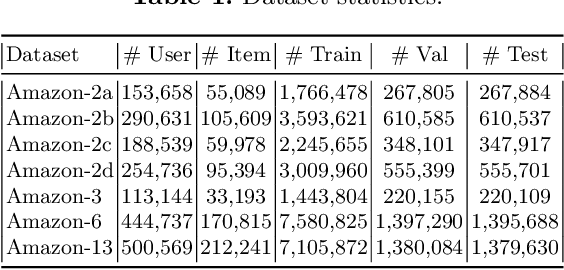

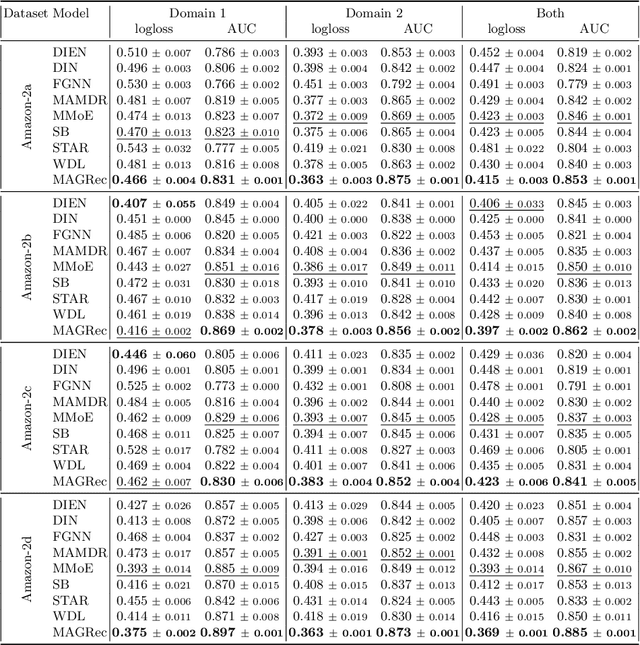

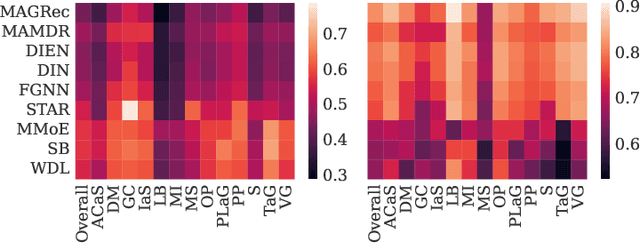

Abstract:Multi-domain recommender systems benefit from cross-domain representation learning and positive knowledge transfer. Both can be achieved by introducing a specific modeling of input data (i.e. disjoint history) or by trying dedicated training regimes. At the same time, treating domains as separate input sources becomes a limitation, as it does not capture the interplay that naturally exists between domains. In this work, we efficiently learn multi-domain representations of users' sequential interactions using graph neural networks. We use temporal intra- and inter-domain interactions as contextual information for our method called MAGRec (short for Multi-domAin Graph-based Recommender). To better capture all relations in a multi-domain setting, we learn two graph-based sequential representations simultaneously: domain-guided for recent user interest, and general for long-term interest. This approach helps to mitigate the negative knowledge transfer problem from multiple domains and improves the overall representation. We perform experiments on publicly available datasets in different scenarios where MAGRec consistently outperforms state-of-the-art methods. Furthermore, we provide an ablation study and discuss further extensions of our method.
